Marc Queudot

Quick Starting Dialog Systems with Paraphrase Generation

Apr 06, 2022

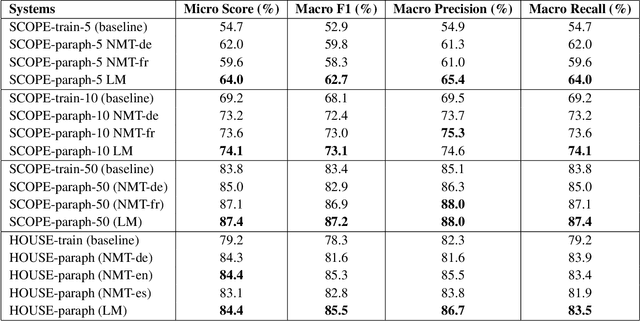

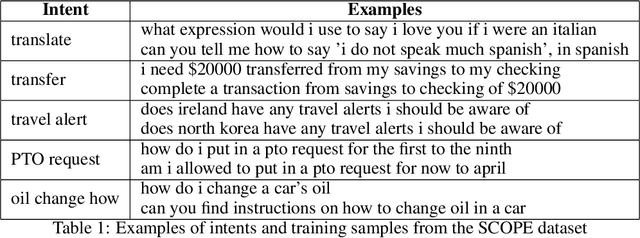

Abstract: Acquiring training data to improve the robustness of dialog systems can be a painstakingly long process. In this work, we propose a method to reduce the cost and effort of creating new conversational agents by artificially generating more data from existing examples, using paraphrase generation. Our proposed approach can kick-start a dialog system with little human effort, and brings its performance to a level satisfactory enough for allowing actual interactions with real end-users. We experimented with two neural paraphrasing approaches, namely Neural Machine Translation and a Transformer-based seq2seq model. We present the results obtained with two datasets in English and in French: a crowd-sourced public intent classification dataset and our own corporate dialog system dataset. We show that our proposed approach increased the generalization capabilities of the intent classification model on both datasets, reducing the effort required to initialize a new dialog system and helping to deploy this technology at scale within an organization.
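As a rough illustration of the idea of growing an intent dataset from existing examples, the sketch below adds deduplicated paraphrases to each intent's utterances. It is not the paper's implementation: `augment_intents` and `toy_paraphraser` are hypothetical names, and the rule-based paraphraser only stands in for a neural model (NMT or a seq2seq Transformer) that would be plugged in through the same callable.

```python
from typing import Callable, Dict, List


def augment_intents(
    examples: Dict[str, List[str]],
    paraphrase: Callable[[str], List[str]],
) -> Dict[str, List[str]]:
    """Return a copy of `examples` with new, deduplicated paraphrases per intent."""
    augmented: Dict[str, List[str]] = {}
    for intent, utterances in examples.items():
        # Track normalized forms so exact (case-insensitive) repeats are skipped.
        seen = {u.strip().lower() for u in utterances}
        merged = list(utterances)
        for utterance in utterances:
            for candidate in paraphrase(utterance):
                key = candidate.strip().lower()
                if key and key not in seen:
                    seen.add(key)
                    merged.append(candidate.strip())
        augmented[intent] = merged
    return augmented


def toy_paraphraser(text: str) -> List[str]:
    """Hypothetical stand-in for a neural paraphrase model."""
    return [text.replace("I want to", "I would like to")]


examples = {"check_balance": ["I want to check my balance", "show my balance"]}
augmented = augment_intents(examples, toy_paraphraser)
```

Keeping the paraphraser behind a plain callable means the same loop could be reused with any generation back end, and the deduplication step avoids inflating the training set with unchanged copies.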

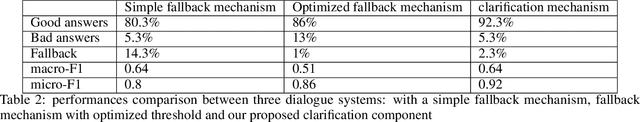

Multi-stage Clarification in Conversational AI: The case of Question-Answering Dialogue Systems

Oct 28, 2021

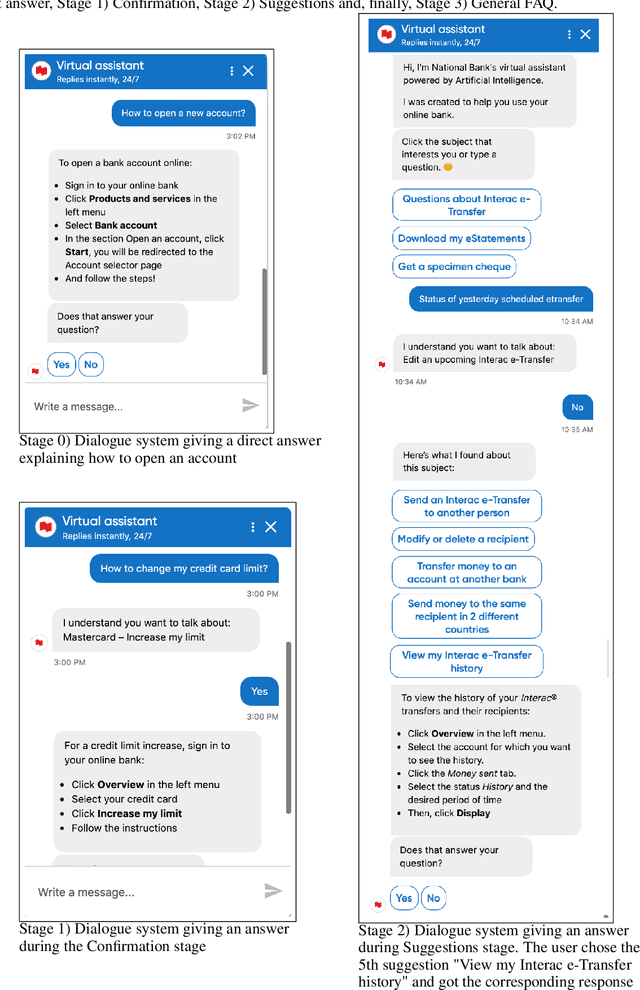

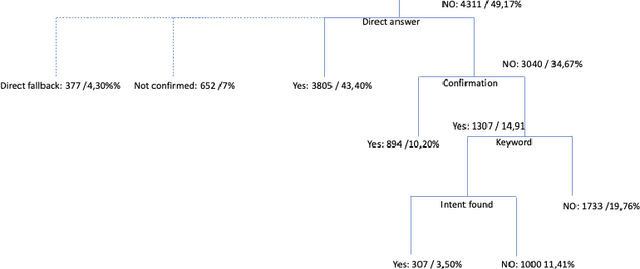

Abstract: Clarification resolution plays an important role in various information retrieval tasks such as interactive question answering and conversational search. In such contexts, users often formulate their information needs as short and ambiguous queries; some popular search interfaces then prompt the user to confirm their intent (e.g. "Did you mean ... ?") or to rephrase if needed. When it comes to dialogue systems, having fluid user-bot exchanges is key to good user experience. In the absence of such a clarification mechanism, one of the following responses is given to the user: 1) a direct answer, which can potentially be non-relevant if the intent was not clear, or 2) a generic fallback message informing the user that the retrieval tool is incapable of handling the query. Both scenarios might raise frustration and degrade the user experience. To this end, we propose a multi-stage clarification mechanism for prompting clarification and query selection in the context of a question answering dialogue system. We show that our proposed mechanism improves the overall user experience and outperforms competitive baselines with two datasets, namely the public in-scope out-of-scope dataset and a commercial dataset based on real user logs.
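The abstract does not spell out how the stages are decided, so the following is only a minimal sketch of one way a staged policy could sit between a direct answer and a generic fallback. The function name `choose_response`, the two thresholds, and the option limit are all assumptions made for illustration, not values from the paper.

```python
from typing import Dict, List, Tuple


def choose_response(
    scores: Dict[str, float],
    answer_threshold: float = 0.8,   # assumed value, not from the paper
    clarify_threshold: float = 0.3,  # assumed value, not from the paper
    max_options: int = 3,
) -> Tuple[str, List[str]]:
    """Pick an action from intent confidence scores.

    Returns ("answer", [intent]) when the top intent is confident enough,
    ("clarify", options) when some candidates are plausible enough to offer
    as "Did you mean ...?" choices, and ("rephrase", []) otherwise.
    """
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    if ranked and ranked[0][1] >= answer_threshold:
        return "answer", [ranked[0][0]]
    options = [intent for intent, s in ranked[:max_options] if s >= clarify_threshold]
    if options:
        return "clarify", options
    return "rephrase", []


confident = choose_response({"reset_password": 0.92, "close_account": 0.03})
ambiguous = choose_response({"reset_password": 0.45, "unlock_account": 0.40, "other": 0.10})
unclear = choose_response({"reset_password": 0.10, "unlock_account": 0.10})
```

In this sketch the clarification stage only offers candidates that clear the lower threshold, so the user is asked to rephrase when nothing is plausible rather than being shown irrelevant options.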

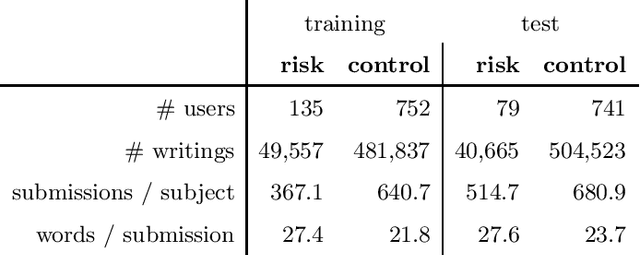

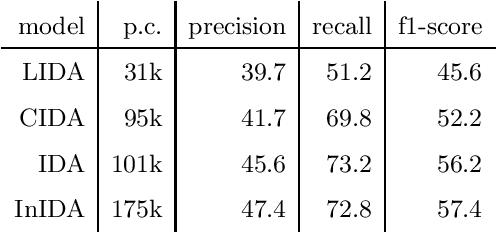

Inter and Intra Document Attention for Depression Risk Assessment

Jun 30, 2019

Abstract: We take interest in the early assessment of risk for depression in social media users. We focus on the eRisk 2018 dataset, which represents each user as a sequence of their written online contributions. We implement four RNN-based systems to classify the users. We explore several aggregation methods to combine predictions on individual posts. Our best model reads through all writings of a user in parallel but uses an attention mechanism to prioritize the most important ones at each timestep.
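To make the idea of attention-based aggregation concrete, here is a simplified sketch that combines per-post predictions into one user-level score using softmax attention weights. It is far simpler than the RNN-based models described above: `attention_pool` is a hypothetical helper, and the attention logits are assumed to be given rather than learned.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a non-empty list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(post_probs: List[float], attention_logits: List[float]) -> float:
    """Weighted average of per-post probabilities, weights = softmax(logits)."""
    if not post_probs or len(post_probs) != len(attention_logits):
        raise ValueError("need one attention logit per post, and at least one post")
    weights = softmax(attention_logits)
    return sum(w * p for w, p in zip(weights, post_probs))


# Equal logits reduce to a plain mean over posts.
uniform_score = attention_pool([0.2, 0.8], [0.0, 0.0])
# A much larger logit on the second post pulls the score toward its prediction.
focused_score = attention_pool([0.2, 0.8], [0.0, 10.0])
```

With uniform logits the pooling is just averaging, which is one of the simplest aggregation baselines; attention lets the most informative posts dominate the user-level decision.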
