Marc Erich Latoschik

Motion-Based User Identification across XR and Metaverse Applications by Deep Classification and Similarity Learning

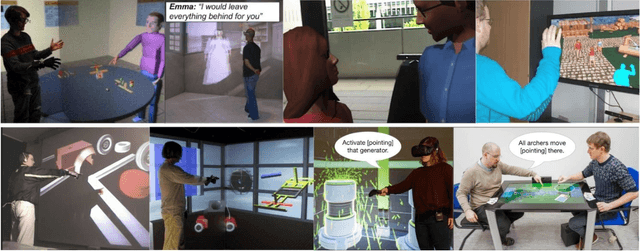

Sep 10, 2025

Abstract: This paper examines the generalization capacity of two state-of-the-art classification and similarity learning models in reliably identifying users based on their motions in various Extended Reality (XR) applications. We developed a novel dataset containing a wide range of motion data from 49 users in five different XR applications: four XR games with distinct tasks and action patterns, and an additional social XR application with no predefined task sets. The dataset is used to evaluate the performance and, in particular, the generalization capacity of the two models across applications. Our results indicate that while the models can accurately identify individuals within the same application, their ability to identify users across different XR applications remains limited. Overall, our results provide insight into current models' generalization capabilities and suitability as biometric methods for user verification and identification. The results also serve as a much-needed risk assessment of hazardous and unwanted user identification in XR and Metaverse applications. Our cross-application XR motion dataset and code are made available to the public to encourage similar research on the generalization of motion-based user identification in typical Metaverse application use cases.

Unobtrusive In-Situ Measurement of Behavior Change by Deep Metric Similarity Learning of Motion Patterns

Sep 04, 2025

Abstract: This paper introduces an unobtrusive in-situ measurement method to detect user behavior changes during arbitrary exposures in XR systems. Here, such behavior changes are typically associated with the Proteus effect or bodily affordances elicited by different avatars that the users embody in XR. We present a biometric user model based on deep metric similarity learning, which uses high-dimensional embeddings as reference vectors to identify behavior changes of individual users. We evaluate our model against two alternative approaches: a (non-learned) motion analysis based on central tendencies of movement patterns and subjective post-exposure embodiment questionnaires frequently used in various XR exposures. In a within-subject study, participants performed a fruit collection task while embodying avatars of different body heights (short, actual-height, and tall). Subjective assessments confirmed the effective manipulation of perceived body schema, while the (non-learned) objective analyses of head and hand movements revealed significant differences across conditions. Our similarity learning model trained on the motion data successfully identified the elicited behavior change for various query and reference data pairings of the avatar conditions. The approach has several advantages in comparison to existing methods: 1) in-situ measurement without additional user input, 2) generalizable and scalable motion analysis for various use cases, 3) user-specific analysis on the individual level, and 4) with a trained model, users can be added and evaluated in real time to study how avatar changes affect behavior.

MAILS -- Meta AI Literacy Scale: Development and Testing of an AI Literacy Questionnaire Based on Well-Founded Competency Models and Psychological Change- and Meta-Competencies

Feb 18, 2023

Abstract: The goal of the present paper is to develop and validate a questionnaire to assess AI literacy. In particular, the questionnaire should be deeply grounded in the existing literature on AI literacy, should be modular (i.e., including different facets that can be used independently of each other) to be flexibly applicable in professional life depending on the goals and use cases, and should meet psychological requirements and thus include further psychological competencies in addition to the typical facets of AIL. We derived 60 items to represent different facets of AI literacy according to Ng and colleagues' conceptualisation of AI literacy and an additional 12 items to represent psychological competencies such as problem solving, learning, and emotion regulation in regard to AI. For this purpose, data were collected online from 300 German-speaking adults. The items were tested for factorial structure in confirmatory factor analyses. The result is a measurement instrument that measures AI literacy with the facets Use & apply AI, Understand AI, Detect AI, and AI Ethics and the ability to Create AI as a separate construct, and AI Self-efficacy in learning and problem solving and AI Self-management. This study contributes to the research on AI literacy by providing a measurement instrument relying on profound competency models. In addition, higher-order psychological competencies are included that are particularly important in the context of pervasive change through AI systems.

Extensible Motion-based Identification of XR Users with Non-Specific Motion

Feb 15, 2023

Abstract: Recently emerged solutions demonstrate that the movements of users interacting with extended reality (XR) applications carry identifying information and can be leveraged for identification. While such solutions can identify XR users within a few seconds, current systems require one of two trade-offs: either they apply simple distance-based approaches that can only be used for specific predetermined motions, or they use classification-based approaches that rely on more powerful machine learning models and thus also work for arbitrary motions, but require full retraining to enroll new users, which can be prohibitively expensive. In this paper, we propose to combine the strengths of both approaches by using an embedding-based approach that leverages deep metric learning. We train the model on a dataset of users playing the VR game "Half-Life: Alyx" and conduct multiple experiments and analyses. The results show that the embedding-based method 1) is able to identify new users from non-specific movements using only a few minutes of reference data, 2) can enroll new users within seconds, while retraining a comparable classification-based approach takes almost a day, 3) is more reliable than a baseline classification-based approach when only little reference data is available, and 4) can be used to identify new users from another dataset recorded with different VR devices. Altogether, our solution is a foundation for easily extensible XR user identification systems, applicable even to non-specific movements. It also paves the way for production-ready models that could be used by XR practitioners without the requirements of expertise, hardware, or data for training deep learning models.
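The enrollment claim in this abstract (adding a user without retraining) can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a trained encoder already maps motion windows to fixed-length embeddings, and it replaces those embeddings with synthetic vectors. The helpers `enroll`, `identify`, and `fake_embeddings`, the cosine-similarity matching, and the mean-embedding reference are all illustrative assumptions.

```python
import math
import random


def normalize(vector):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def enroll(gallery, user_id, reference_embeddings):
    """Enroll a user by storing one normalized mean embedding; no retraining."""
    gallery[user_id] = normalize(mean_vector(reference_embeddings))


def identify(gallery, query_embeddings):
    """Return the enrolled user whose reference is most similar to the query."""
    query = normalize(mean_vector(query_embeddings))
    scores = {
        user_id: sum(r * q for r, q in zip(reference, query))
        for user_id, reference in gallery.items()
    }
    return max(scores, key=scores.get), scores


def fake_embeddings(center, count, rng, noise=0.1):
    """Hypothetical stand-in for encoder output: noisy copies of a user center."""
    return [[c + rng.gauss(0.0, noise) for c in center] for _ in range(count)]


rng = random.Random(0)
alice_center = [1.0, 0.0, 0.0, 0.0]  # synthetic, not real motion data
bob_center = [0.0, 1.0, 0.0, 0.0]    # synthetic, not real motion data

gallery = {}
enroll(gallery, "alice", fake_embeddings(alice_center, 20, rng))
enroll(gallery, "bob", fake_embeddings(bob_center, 20, rng))

best_match, scores = identify(gallery, fake_embeddings(bob_center, 5, rng))
print(best_match, scores)
```

In this sketch, enrolling a user only averages a handful of vectors, which is why enrollment can be fast compared with retraining a classifier whose output layer is tied to a fixed set of users.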

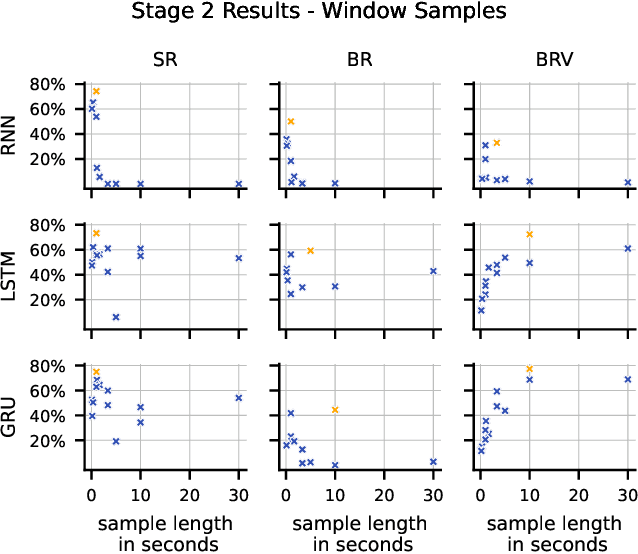

Comparison of Data Representations and Machine Learning Architectures for User Identification on Arbitrary Motion Sequences

Oct 02, 2022

Abstract: Reliable and robust user identification and authentication are important and often necessary requirements for many digital services. They become paramount in social virtual reality (VR) to ensure trust, specifically in digital encounters with lifelike realistic-looking avatars as faithful replications of real persons. Recent research has shown great interest in providing new solutions that verify the identity of users of extended reality (XR) systems. This paper compares different machine learning approaches to identify users based on arbitrary sequences of head and hand movements, a data stream provided by the majority of today's XR systems. We compare three different potential representations of the motion data from heads and hands (scene-relative, body-relative, and body-relative velocities) and the performances of five different machine learning architectures (random forest, multilayer perceptron, fully recurrent neural network, long short-term memory, gated recurrent unit). We use the publicly available dataset "Talking with Hands" and publish all our code to allow reproducibility and to provide baselines for future work. After hyperparameter optimization, the combination of a long short-term memory architecture and body-relative data outperformed competing combinations: the model correctly identifies any of the 34 subjects with an accuracy of 100% within 150 seconds. The code for models, training and evaluation is made publicly available. Altogether, our approach provides an effective foundation for behaviometric-based identification and authentication to guide researchers and practitioners.

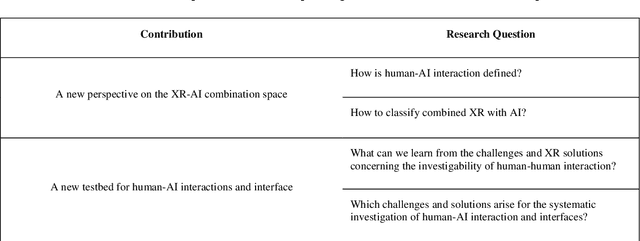

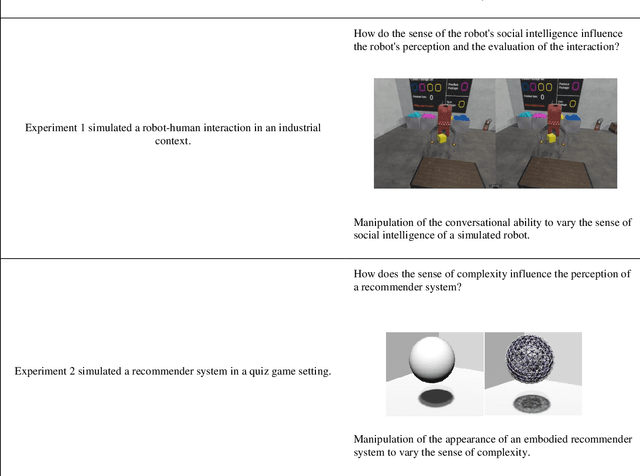

eXtended Artificial Intelligence: New Prospects of Human-AI Interaction Research

Apr 05, 2021

Abstract: Artificial Intelligence (AI) covers a broad spectrum of computational problems and use cases. Many of those implicate profound and sometimes intricate questions of how humans interact or should interact with AIs. Moreover, many users or future users do have abstract ideas of what AI is, significantly depending on the specific embodiment of AI applications. Human-centered-design approaches would suggest evaluating the impact of different embodiments on human perception of and interaction with AI, an approach that is difficult to realize due to the sheer complexity of application fields and embodiments in reality. However, here XR opens new possibilities to research human-AI interactions. The article's contribution is twofold: First, it provides a theoretical treatment and model of human-AI interaction based on an XR-AI continuum as a framework for and a perspective of different approaches of XR-AI combinations. It motivates XR-AI combinations as a method to learn about the effects of prospective human-AI interfaces and shows why the combination of XR and AI fruitfully contributes to a valid and systematic investigation of human-AI interactions and interfaces. Second, the article provides two exemplary experiments investigating the aforementioned approach for two distinct AI systems. The first experiment reveals an interesting gender effect in human-robot interaction, while the second experiment reveals an Eliza effect of a recommender system. Here the article introduces two paradigmatic implementations of the proposed XR testbed for human-AI interactions and interfaces and shows how a valid and systematic investigation can be conducted. In sum, the article opens new perspectives on how XR benefits human-centered AI design and development.
