Manuel Roveri

SQUAD: Scalable Quorum Adaptive Decisions via ensemble of early exit neural networks

Jan 30, 2026

Abstract: Early-exit neural networks have become popular for reducing inference latency by allowing intermediate predictions when sufficient confidence is achieved. However, standard approaches typically rely on single-model confidence thresholds, which are frequently unreliable due to inherent calibration issues. To address this, we introduce SQUAD (Scalable Quorum Adaptive Decisions), the first inference scheme that integrates early-exit mechanisms with distributed ensemble learning, improving uncertainty estimation while reducing inference time. Unlike traditional methods that depend on individual confidence scores, SQUAD employs a quorum-based stopping criterion on early-exit learners: it collects intermediate predictions incrementally, in order of computational complexity, until a consensus is reached, and halts the computation at that exit if the consensus is statistically significant. To maximize the efficacy of this voting mechanism, we also introduce QUEST (Quorum Search Technique), a Neural Architecture Search method to select early-exit learners with optimized hierarchical diversity, ensuring learners are complementary at every intermediate layer. This consensus-driven approach yields statistically robust early exits, improving test accuracy by up to 5.95% compared to state-of-the-art dynamic solutions at a comparable computational cost, and reducing inference latency by up to 70.60% compared to static ensembles while maintaining good accuracy.
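The quorum-based stopping idea described in the SQUAD abstract can be illustrated with a small sketch. This is not the authors' implementation: the function name `quorum_early_exit`, the fixed `quorum` fraction, and the choice to poll every learner at each exit are all assumptions made for illustration. In particular, the abstract refers to a statistical significance test on the consensus, which is replaced here by a plain supermajority threshold.

```python
from collections import Counter


def quorum_early_exit(exit_predictions, quorum=0.75):
    """Return (label, exit_index) for the first exit that reaches a quorum.

    exit_predictions[e][m] is the class predicted by learner m at exit e,
    with exits ordered from cheapest to most expensive. Both the data
    layout and the `quorum` fraction are hypothetical simplifications.
    """
    if not exit_predictions:
        raise ValueError("at least one exit is required")
    for exit_idx, votes in enumerate(exit_predictions):
        # Most frequent class among the learners at this exit.
        label, count = Counter(votes).most_common(1)[0]
        if count / len(votes) >= quorum:
            # Enough learners agree: stop here and skip deeper exits.
            return label, exit_idx
    # No exit reached the quorum: fall back to the plurality vote
    # of the final (most expensive) exit.
    return label, exit_idx
```

With four learners and three exits, `quorum_early_exit([[0, 1, 2, 1], [1, 1, 2, 1], [1, 1, 1, 1]])` stops at the second exit, where three of four learners agree on class 1.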

SEAL: Searching Expandable Architectures for Incremental Learning

May 15, 2025

Abstract: Incremental learning is a machine learning paradigm where a model learns from a sequential stream of tasks. This setting poses a key challenge: balancing plasticity (learning new tasks) and stability (preserving past knowledge). Neural Architecture Search (NAS), a branch of AutoML, automates the design of the architecture of Deep Neural Networks and has shown success in static settings. However, existing NAS-based approaches to incremental learning often rely on expanding the model at every task, making them impractical in resource-constrained environments. In this work, we introduce SEAL, a NAS-based framework tailored for data-incremental learning, a scenario where disjoint data samples arrive sequentially and are not stored for future access. SEAL adapts the model structure dynamically by expanding it only when necessary, based on a capacity estimation metric. Stability is preserved through cross-distillation training after each expansion step. The NAS component jointly searches for both the architecture and the optimal expansion policy. Experiments across multiple benchmarks demonstrate that SEAL effectively reduces forgetting and enhances accuracy while maintaining a lower model size compared to prior methods. These results highlight the promise of combining NAS and selective expansion for efficient, adaptive learning in incremental scenarios.

TActiLE: Tiny Active LEarning for wearable devices

May 02, 2025

Abstract: Tiny Machine Learning (TinyML) algorithms have seen extensive use in recent years, enabling wearable devices to be not only connected but also genuinely intelligent by running machine learning (ML) computations directly on-device. Among such devices, smart glasses have particularly benefited from TinyML advancements. TinyML facilitates the on-device execution of the inference phase of ML algorithms on embedded and wearable devices, and more recently, it has expanded into On-device Learning (ODL), which allows both inference and learning phases to occur directly on the device. The application of ODL techniques to wearable devices is particularly compelling, as it enables the development of more personalized models that adapt based on the data of the user. However, one of the major challenges of ODL algorithms is the scarcity of labeled data collected on-device. In smart wearable contexts, requiring users to manually label large amounts of data is often impractical and could lead to user disengagement with the technology. To address this issue, this paper explores the application of Active Learning (AL) techniques, i.e., techniques that aim at minimizing the labeling effort by actively selecting, from a large quantity of unlabeled data, only a small subset to be labeled and added to the training set of the algorithm. In particular, we propose TActiLE, a novel AL algorithm that selects from the stream of on-device sensor data the samples that would help the ML algorithm improve the most once coupled with labels provided by the user. TActiLE is the first Active Learning technique specifically designed for the TinyML context. We evaluate its effectiveness and efficiency through experiments on multiple image classification datasets. The results demonstrate its suitability for tiny and wearable devices.

DYNAMAX: Dynamic computing for Transformers and Mamba based architectures

Apr 29, 2025

Abstract: Early exits (EEs) offer a promising approach to reducing computational costs and latency by dynamically terminating inference once a satisfactory prediction confidence on a data sample is achieved. Although many works integrate EEs into encoder-only Transformers, their application to decoder-only architectures and, more importantly, Mamba models, a novel family of state-space architectures in the LLM realm, remains insufficiently explored. This work introduces DYNAMAX, the first framework to exploit the unique properties of Mamba architectures for early exit mechanisms. We not only integrate EEs into Mamba but also repurpose Mamba as an efficient EE classifier for both Mamba-based and transformer-based LLMs, showcasing its versatility. Our experiments employ the Mistral 7B transformer compared to the Codestral 7B Mamba model, using data sets such as TruthfulQA, CoQA, and TriviaQA to evaluate computational savings, accuracy, and consistency. The results highlight the adaptability of Mamba as a powerful EE classifier and its efficiency in balancing computational cost and performance quality across NLP tasks. By leveraging Mamba's inherent design for dynamic processing, we open pathways for scalable and efficient inference in embedded applications and resource-constrained environments. This study underscores the transformative potential of Mamba in redefining dynamic computing paradigms for LLMs.

Architecture-Aware Minimization (A$^2$M): How to Find Flat Minima in Neural Architecture Search

Mar 13, 2025

Abstract: Neural Architecture Search (NAS) has become an essential tool for designing effective and efficient neural networks. In this paper, we investigate the geometric properties of neural architecture spaces commonly used in differentiable NAS methods, specifically NAS-Bench-201 and DARTS. By defining flatness metrics such as neighborhoods and loss barriers along paths in architecture space, we reveal locality and flatness characteristics analogous to the well-known properties of neural network loss landscapes in weight space. In particular, we find that highly accurate architectures cluster together in flat regions, while suboptimal architectures remain isolated, unveiling the detailed geometrical structure of the architecture search landscape. Building on these insights, we propose Architecture-Aware Minimization (A$^2$M), a novel analytically derived algorithmic framework that explicitly biases, for the first time, the gradient of differentiable NAS methods towards flat minima in architecture space. A$^2$M consistently improves generalization over state-of-the-art DARTS-based algorithms on benchmark datasets including CIFAR-10, CIFAR-100, and ImageNet16-120, across both NAS-Bench-201 and DARTS search spaces. Notably, A$^2$M is able to increase the test accuracy, on average across different differentiable NAS methods, by +3.60% on CIFAR-10, +4.60% on CIFAR-100, and +3.64% on ImageNet16-120, demonstrating its superior effectiveness in practice. A$^2$M can be easily integrated into existing differentiable NAS frameworks, offering a versatile tool for future research and applications in automated machine learning. We open-source our code at https://github.com/AI-Tech-Research-Lab/AsquaredM.

Dendron: Enhancing Human Activity Recognition with On-Device TinyML Learning

Mar 03, 2025

Abstract: Human activity recognition (HAR) is a research field that employs Machine Learning (ML) techniques to identify user activities. Recent studies have prioritized the development of HAR solutions executed directly on wearable devices, enabling on-device activity recognition. This approach is supported by the Tiny Machine Learning (TinyML) paradigm, which integrates ML within embedded devices with limited resources. However, existing approaches in the field lack the capability for on-device learning of new HAR tasks, particularly when supervised data are scarce. To address this limitation, our paper introduces Dendron, a novel TinyML methodology designed to facilitate the on-device learning of new tasks for HAR, even in conditions of limited supervised data. Experimental results on two publicly available datasets and an off-the-shelf device (STM32-NUCLEO-F401RE) show the effectiveness and efficiency of the proposed solution.

Quantifying Cryptocurrency Unpredictability: A Comprehensive Study of Complexity and Forecasting

Feb 13, 2025

Abstract: This paper offers a thorough examination of the univariate predictability of cryptocurrency time-series. By exploiting a combination of complexity measures and model predictions, we explore the cryptocurrency time-series forecasting task, focusing on the exchange rate in USD of Litecoin, Binance Coin, Bitcoin, Ethereum, and XRP. On one hand, to assess the complexity and the randomness of these time-series, a comparative analysis has been performed using Brownian and colored noises as a benchmark. The results obtained from the Complexity-Entropy causality plane and power density spectrum analysis reveal that cryptocurrency time-series exhibit characteristics closely resembling those of Brownian noise when analyzed in a univariate context. On the other hand, the application of a wide range of statistical, machine and deep learning models for time-series forecasting demonstrates the low predictability of cryptocurrencies. Notably, our analysis reveals that simpler models such as Naive models consistently outperform the more complex machine and deep learning ones in terms of forecasting accuracy across different forecast horizons and time windows. The combined study of complexity and forecasting accuracies highlights the difficulty of predicting the cryptocurrency market. These findings provide valuable insights into the inherent characteristics of cryptocurrency data and highlight the need to reassess the challenges associated with predicting cryptocurrency price movements.

* This is the author's accepted manuscript, modified per ACM self-archiving policy. The definitive Version of Record is available at https://doi.org/10.1145/3703412.3703420
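For readers unfamiliar with the Naive baseline mentioned in the abstract above, the following sketch shows its usual textbook form: every future value is forecast as the last observed value. The function names and the choice of mean absolute error as the metric are assumptions for illustration; the paper's exact models, metrics, and data are not reproduced here.

```python
def naive_forecast(series, horizon):
    """Forecast `horizon` steps ahead by repeating the last observed value."""
    if not series:
        raise ValueError("series must contain at least one observation")
    return [series[-1]] * horizon


def mean_absolute_error(actual, predicted):
    """Average absolute difference between two equally long sequences."""
    if len(actual) != len(predicted):
        raise ValueError("sequences must have the same length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)
```

For a series that behaves like Brownian noise, the last observation is the best available point forecast of the next one, which is consistent with the abstract's report that this baseline is hard to beat.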

Training Multi-Layer Binary Neural Networks With Local Binary Error Signals

Nov 28, 2024

Abstract: Binary Neural Networks (BNNs) hold the potential for significantly reducing computational complexity and memory demand in machine and deep learning. However, most successful training algorithms for BNNs rely on quantization-aware floating-point Stochastic Gradient Descent (SGD), with full-precision hidden weights used during training. The binarized weights are only used at inference time, hindering the full exploitation of binary operations during the training process. In contrast to the existing literature, we introduce, for the first time, a multi-layer training algorithm for BNNs that does not require the computation of back-propagated full-precision gradients. Specifically, the proposed algorithm is based on local binary error signals and binary weight updates, employing integer-valued hidden weights that serve as a synaptic metaplasticity mechanism, thereby establishing it as a neurobiologically plausible algorithm. The binary-native and gradient-free algorithm proposed in this paper is capable of training binary multi-layer perceptrons (BMLPs) with binary inputs, weights, and activations, by using exclusively XNOR, Popcount, and increment/decrement operations, hence effectively paving the way for a new class of operation-optimized training algorithms. Experimental results on BMLPs fully trained in a binary-native and gradient-free manner on multi-class image classification benchmarks demonstrate an accuracy improvement of up to +13.36% compared to the fully binary state-of-the-art solution, showing minimal accuracy degradation compared to the same architecture trained with full-precision SGD and floating-point weights, activations, and inputs. The proposed algorithm is made available to the scientific community as a public repository.
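The XNOR and Popcount operations named in the abstract above are the standard way to compute a dot product between two vectors with entries in {-1, +1}. The sketch below shows only that arithmetic primitive; it does not implement the paper's training algorithm, and the helper names `pack_bits` and `binary_dot` as well as the bit encoding (+1 as bit 1, -1 as bit 0) are assumptions for illustration.

```python
def pack_bits(values):
    """Pack a sequence of +1/-1 values into an int (+1 -> bit 1, -1 -> bit 0)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two n-element +1/-1 vectors stored as packed bits.

    XNOR marks the positions where the two vectors agree; each agreement
    contributes +1 and each disagreement -1, so the result is
    2 * popcount(xnor) - n.
    """
    mask = (1 << n) - 1                    # keep only the n valid bits
    xnor = ~(a_bits ^ b_bits) & mask
    return 2 * bin(xnor).count("1") - n    # popcount via the binary string
```

For example, `binary_dot(pack_bits([1, -1, 1, 1]), pack_bits([1, 1, -1, 1]), 4)` evaluates to 0, matching the ordinary dot product 1 - 1 - 1 + 1.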

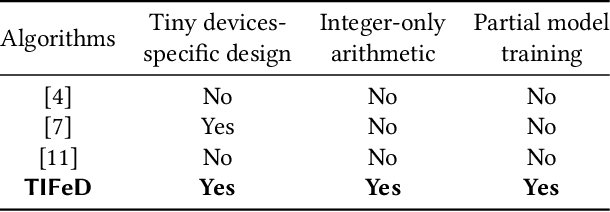

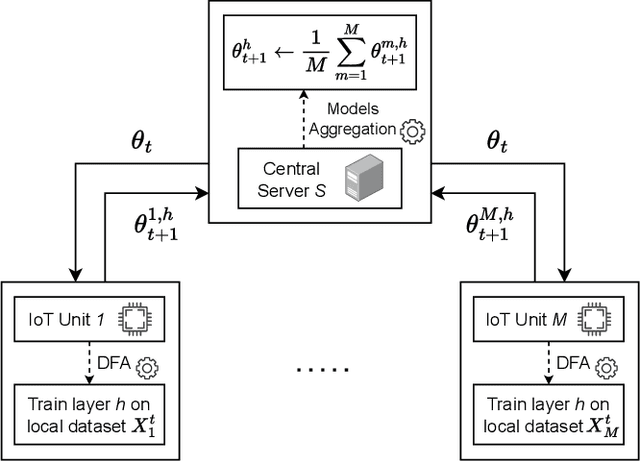

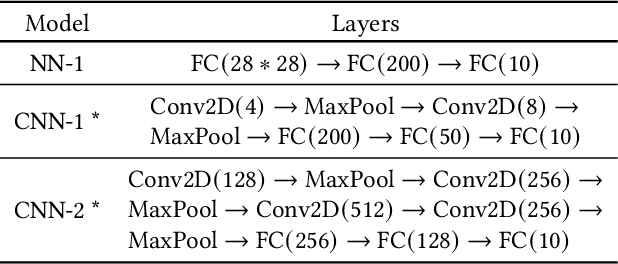

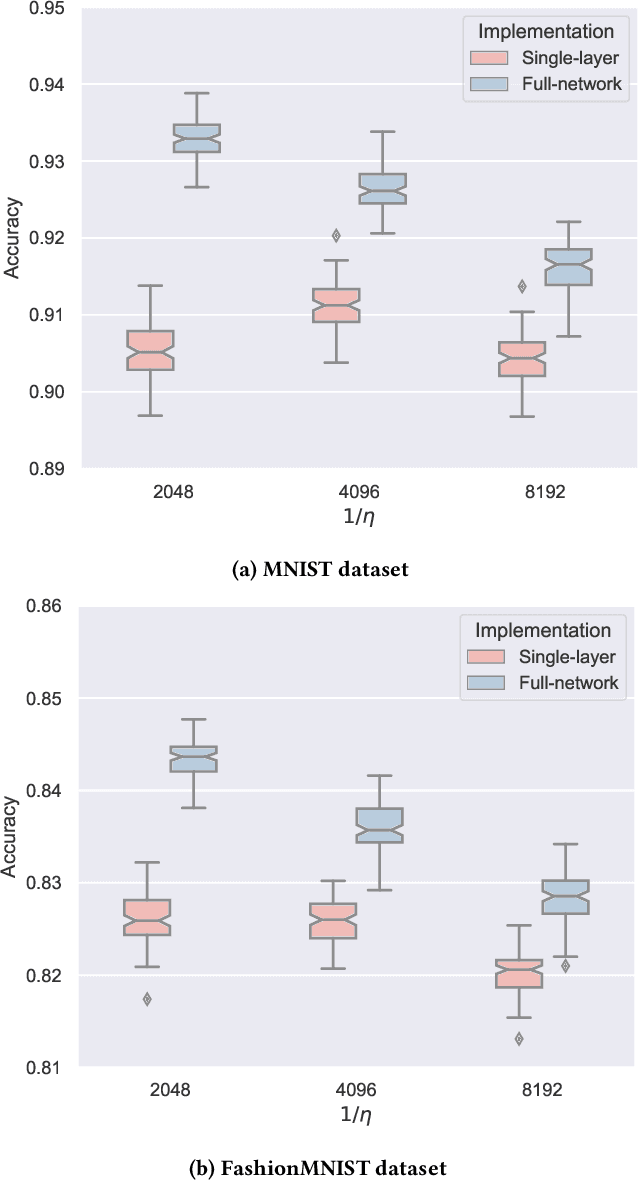

TIFeD: a Tiny Integer-based Federated learning algorithm with Direct feedback alignment

Nov 25, 2024

Abstract: Training machine and deep learning models directly on extremely resource-constrained devices is the next challenge in the field of tiny machine learning. The related literature in this field is very limited, since most of the solutions focus only on on-device inference or model adaptation through online learning, leaving the training to be carried out on external Cloud services. An interesting technological perspective is to exploit Federated Learning (FL), which allows multiple devices to collaboratively train a shared model in a distributed way. However, the main drawback of state-of-the-art FL algorithms is that they are not suitable for running on tiny devices. For the first time in the literature, in this paper we introduce TIFeD, a Tiny Integer-based Federated learning algorithm with Direct Feedback Alignment (DFA), implemented entirely with integer-only arithmetic and specifically designed to operate on devices with limited resources in terms of memory, computation and energy. Besides the traditional full-network operating modality, in which each device of the FL setting trains the entire neural network on its own local data, we propose an innovative single-layer TIFeD implementation, which enables each device to train only a portion of the neural network model and opens the door to a new way of distributing the learning procedure across multiple devices. The experimental results show the feasibility and effectiveness of the proposed solution. The proposed TIFeD algorithm, with its full-network and single-layer implementations, is made available to the scientific community as a public repository.

StreamTinyNet: video streaming analysis with spatial-temporal TinyML

Jul 22, 2024

Abstract: Tiny Machine Learning (TinyML) is a branch of Machine Learning (ML) that constitutes a bridge between the ML world and the embedded system ecosystem (i.e., Internet of Things devices, embedded devices, and edge computing units), enabling the execution of ML algorithms on devices constrained in terms of memory, computational capabilities, and power consumption. Video Streaming Analysis (VSA), one of the most interesting tasks of TinyML, consists in scanning a sequence of frames in a streaming manner, with the goal of identifying interesting patterns. Given the strict constraints of these tiny devices, all the current solutions rely on performing a frame-by-frame analysis, hence not exploiting the temporal component in the stream of data. In this paper, we present StreamTinyNet, the first TinyML architecture to perform multiple-frame VSA, enabling a variety of use cases that require spatial-temporal analysis and were previously impossible to carry out at the TinyML level. Experimental results on publicly available datasets show the effectiveness and efficiency of the proposed solution. Finally, StreamTinyNet has been ported and tested on the Arduino Nicla Vision, showing the feasibility of the proposed solution.
