Mahmoud Nabil Mahmoud

Visual Heading Prediction for Autonomous Aerial Vehicles

Dec 10, 2025

Abstract:The integration of Unmanned Aerial Vehicles (UAVs) and Unmanned Ground Vehicles (UGVs) is increasingly central to the development of intelligent autonomous systems for applications such as search and rescue, environmental monitoring, and logistics. However, precise coordination between these platforms in real-time scenarios presents major challenges, particularly when external localization infrastructure such as GPS or GNSS is unavailable or degraded [1]. This paper proposes a vision-based, data-driven framework for real-time UAV-UGV integration, with a focus on robust UGV detection and heading angle prediction for navigation and coordination. The system employs a fine-tuned YOLOv5 model to detect UGVs and extract bounding box features, which are then used by a lightweight artificial neural network (ANN) to estimate the UAV's required heading angle. A VICON motion capture system was used to generate ground-truth data during training, resulting in a dataset of over 13,000 annotated images collected in a controlled lab environment. The trained ANN achieves a mean absolute error of 0.1506° and a root mean squared error of 0.1957°, offering accurate heading angle predictions using only monocular camera inputs. Experimental evaluations achieve 95% accuracy in UGV detection. This work contributes a vision-based, infrastructure-independent solution that demonstrates strong potential for deployment in GPS/GNSS-denied environments, supporting reliable multi-agent coordination under realistic dynamic conditions. A demonstration video showcasing the system's real-time performance, including UGV detection, heading angle prediction, and UAV alignment under dynamic conditions, is available at: https://github.com/Kooroshraf/UAV-UGV-Integration
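The abstract reports heading accuracy as a mean absolute error and a root mean squared error in degrees. As a rough illustration of how such figures can be computed, here is a minimal sketch; the function names are hypothetical, and wrapping the difference into [-180, 180) is an assumption, since the abstract does not say how angle differences were handled.

```python
import math

def angular_error_deg(pred, truth):
    """Signed difference pred - truth in degrees, wrapped into [-180, 180).

    Wrapping is an assumption made for this sketch, not a detail from the paper.
    """
    return (pred - truth + 180.0) % 360.0 - 180.0

def mae_rmse_deg(preds, truths):
    """Return (MAE, RMSE) in degrees over paired predictions and ground truth."""
    errors = [angular_error_deg(p, t) for p, t in zip(preds, truths)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse

# Illustrative values only, not data from the paper.
mae, rmse = mae_rmse_deg([10.0, 20.0], [11.0, 18.0])
```

RMSE is never smaller than MAE on the same errors, which is consistent with the reported pair (0.1506° and 0.1957°).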

AgriRegion: Region-Aware Retrieval for High-Fidelity Agricultural Advice

Dec 10, 2025

Abstract:Large Language Models (LLMs) have demonstrated significant potential in democratizing access to information. However, in the domain of agriculture, general-purpose models frequently suffer from contextual hallucination: they give advice that is non-factual, or that is scientifically sound in one region but disastrous in another due to variations in soil, climate, and local regulations. We introduce AgriRegion, a Retrieval-Augmented Generation (RAG) framework designed specifically for high-fidelity, region-aware agricultural advisory. Unlike standard RAG approaches that rely solely on semantic similarity, AgriRegion incorporates a geospatial metadata injection layer and a region-prioritized re-ranking mechanism. By restricting the knowledge base to verified local agricultural extension services and enforcing geospatial constraints during retrieval, AgriRegion ensures that advice regarding planting schedules, pest control, and fertilization is locally accurate. We create a novel benchmark dataset, AgriRegion-Eval, which comprises 160 domain-specific questions across 12 agricultural subfields. Experiments demonstrate that AgriRegion reduces hallucinations by 10-20% compared to state-of-the-art LLM systems and significantly improves trust scores according to a comprehensive evaluation.
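The abstract does not give the details of the region-prioritized re-ranking, so the following is only a sketch of the general idea, under stated assumptions: each retrieved passage carries a region tag and a semantic similarity score, passages from the query's region receive an additive boost, and an optional strict mode drops out-of-region passages entirely. The `Passage` class, the `rerank` function, and the `region_boost` value are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    text: str
    region: str        # geospatial metadata attached to the passage (assumed)
    similarity: float  # semantic similarity to the query (assumed precomputed)

def rerank(passages, query_region, region_boost=0.3, strict=False):
    """Order passages by similarity, favouring those from the query's region.

    strict=True enforces a hard geospatial constraint by filtering first.
    The additive boost is an illustrative choice, not AgriRegion's formula.
    """
    if strict:
        passages = [p for p in passages if p.region == query_region]

    def score(p):
        return p.similarity + (region_boost if p.region == query_region else 0.0)

    return sorted(passages, key=score, reverse=True)

candidates = [
    Passage("Plant maize in late April.", "Iowa", 0.8),
    Passage("Sow wheat in early November.", "Punjab", 0.6),
]
ranked = rerank(candidates, query_region="Punjab")
```

With these example values the Punjab passage moves ahead of the Iowa passage despite its lower raw similarity, which is the behavior a purely similarity-based retriever would not give.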

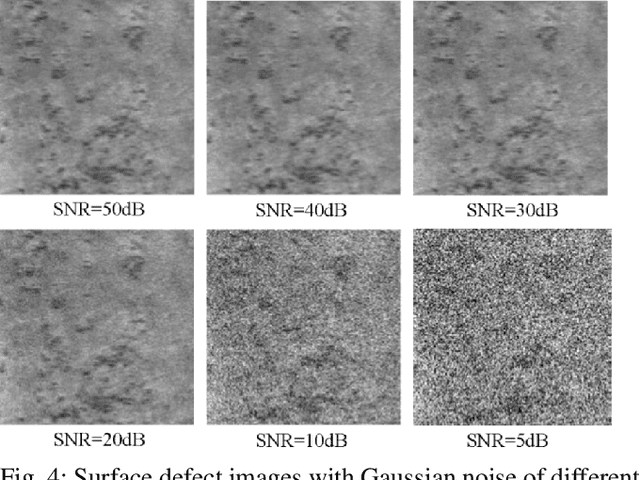

A Robust Completed Local Binary Pattern for Surface Defect Detection

Dec 07, 2021

Abstract:In this paper, we present a Robust Completed Local Binary Pattern (RCLBP) framework for surface defect detection. Our approach combines a Non-Local (NL) means filter with wavelet thresholding and the Completed Local Binary Pattern (CLBP) to extract robust features, which are fed into classifiers for surface defect detection. The framework has three components. First, a denoising technique based on the NL means filter with wavelet thresholding denoises the noisy image while preserving textures and edges. Second, discriminative features are extracted using the CLBP technique. Finally, the discriminative features are fed into the classifiers to build the detection model and evaluate the performance of the proposed framework. The performance of the defect detection models is evaluated using a real-world steel surface defect database from Northeastern University (NEU). Experimental results demonstrate that the proposed RCLBP approach is robust to noise and can be applied to surface defect detection under varying intra-class and inter-class conditions and under illumination changes.
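For readers unfamiliar with CLBP, the sketch below computes its three components (sign, magnitude, and center) for a single 3x3 patch. It follows the standard CLBP formulation rather than anything specific to this paper, omits the denoising and classification stages, and takes the two thresholds as parameters; in standard CLBP they are the mean difference magnitude and the mean gray level of the whole image. The function name and the neighbour ordering are choices made for this illustration.

```python
def clbp_codes(patch, mag_threshold, center_threshold):
    """Return (sign code, magnitude code, center bit) for a 3x3 patch.

    patch is a 3x3 nested list of gray levels. Neighbours are visited
    clockwise from the top-left pixel; that ordering is arbitrary here.
    """
    center = patch[1][1]
    coords = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    s_code = 0
    m_code = 0
    for bit, (r, c) in enumerate(coords):
        diff = patch[r][c] - center
        if diff >= 0:                   # sign component, identical to plain LBP
            s_code |= 1 << bit
        if abs(diff) >= mag_threshold:  # magnitude component
            m_code |= 1 << bit
    c_bit = 1 if center >= center_threshold else 0  # center component
    return s_code, m_code, c_bit

# A single bright pixel in the top-left corner of an otherwise dark patch.
codes = clbp_codes([[10, 0, 0], [0, 5, 0], [0, 0, 0]], mag_threshold=3, center_threshold=6)
```

In a full pipeline these codes would be computed at every pixel and accumulated into histograms that serve as the feature vector for the classifier.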
