Maha Shadaydeh

Computer Vision Group, Friedrich Schiller University of Jena

Mitigating Spurious Correlations in Patch-wise Tumor Classification on High-Resolution Multimodal Images

Nov 17, 2025

Abstract:Patch-wise multi-label classification provides an efficient alternative to full pixel-wise segmentation on high-resolution images, particularly when the objective is to determine the presence or absence of target objects within a patch rather than their precise spatial extent. This formulation substantially reduces annotation cost, simplifies training, and allows flexible patch sizing aligned with the desired level of decision granularity. In this work, we focus on a special case, patch-wise binary classification, applied to the detection of a single class of interest (tumor) on high-resolution multimodal nonlinear microscopy images. We show that, although this simplified formulation enables efficient model development, it can introduce spurious correlations between patch composition and labels: tumor patches tend to contain larger tissue regions, whereas non-tumor patches often consist mostly of background with small tissue areas. We further quantify the bias in model predictions caused by this spurious correlation, and propose to use a debiasing strategy to mitigate its effect. Specifically, we apply GERNE, a debiasing method that can be adapted to maximize worst-group accuracy (WGA). Our results show an improvement in WGA by approximately 7% compared to ERM for two different thresholds used to binarize the spurious feature. This enhancement boosts model performance on critical minority cases, such as tumor patches with small tissues and non-tumor patches with large tissues, and underscores the importance of spurious correlation-aware learning in patch-wise classification problems.
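As an illustration of the worst-group accuracy (WGA) metric mentioned in the abstract, the following minimal sketch groups patches by (label, binarized spurious feature) and reports the lowest per-group accuracy. The function names, the tissue-fraction values, and the 0.5 threshold are hypothetical and chosen only for the example; they are not taken from the paper.

```python
from collections import defaultdict


def binarize(values, threshold):
    """Map a continuous spurious feature (e.g. tissue fraction) to 0/1."""
    return [int(v >= threshold) for v in values]


def worst_group_accuracy(labels, spurious, preds):
    """Return (worst accuracy, per-group accuracies) over (label, spurious) groups."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, a, p in zip(labels, spurious, preds):
        total[(y, a)] += 1
        correct[(y, a)] += int(p == y)
    per_group = {g: correct[g] / total[g] for g in total}
    return min(per_group.values()), per_group


# Toy data (made up): 1 = tumor, 0 = non-tumor.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
tissue_fraction = [0.9, 0.8, 0.7, 0.1, 0.2, 0.1, 0.2, 0.8]
# A model that effectively predicts "tumor" whenever the patch has much tissue.
preds = [1, 1, 1, 0, 0, 0, 0, 1]

spurious = binarize(tissue_fraction, threshold=0.5)
wga, per_group = worst_group_accuracy(labels, spurious, preds)
overall = sum(int(p == y) for p, y in zip(preds, labels)) / len(labels)
print(overall, wga)
```

In this toy case the overall accuracy looks acceptable while the two minority groups (tumor with little tissue, non-tumor with much tissue) are always misclassified, which is the kind of failure that an average metric hides and WGA exposes.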

Gradient Extrapolation for Debiased Representation Learning

Mar 17, 2025

Abstract:Machine learning classification models trained with empirical risk minimization (ERM) often inadvertently rely on spurious correlations. When absent in the test data, these unintended associations between non-target attributes and target labels lead to poor generalization. This paper addresses this problem from a model optimization perspective and proposes a novel method, Gradient Extrapolation for Debiased Representation Learning (GERNE), designed to learn debiased representations in both known and unknown attribute training cases. GERNE uses two distinct batches with different amounts of spurious correlations to define the target gradient as the linear extrapolation of two gradients computed from each batch's loss. It is demonstrated that the extrapolated gradient, if directed toward the gradient of the batch with the smaller amount of spurious correlation, can guide the training process toward learning a debiased model. GERNE can serve as a general framework for debiasing, with methods such as ERM, reweighting, and resampling being shown as special cases. The theoretical upper and lower bounds of the extrapolation factor are derived to ensure convergence. By adjusting this factor, GERNE can be adapted to maximize the Group-Balanced Accuracy (GBA) or the Worst-Group Accuracy. The proposed approach is validated on five vision benchmarks and one NLP benchmark, demonstrating competitive and often superior performance compared to state-of-the-art baseline methods.
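The linear extrapolation of two gradients described in the abstract can be sketched as follows. This is only an illustration under an assumed parameterization, g_less_biased + beta * (g_less_biased - g_biased); the function names, the factor name `beta`, and the toy gradient values are hypothetical, and the bounds on the extrapolation factor derived in the paper are not reproduced here.

```python
def extrapolate_gradient(g_biased, g_less_biased, beta):
    """Linearly extrapolate from the biased-batch gradient past the
    less-biased-batch gradient (assumed parameterization).

    beta = -1 returns g_biased, beta = 0 returns g_less_biased,
    and beta > 0 moves further in the less-biased direction.
    """
    return [gl + beta * (gl - gb) for gb, gl in zip(g_biased, g_less_biased)]


def sgd_step(params, grad, lr):
    """Plain gradient-descent update using the extrapolated gradient."""
    return [p - lr * g for p, g in zip(params, grad)]


# Toy gradients (made up) from two batches with different amounts of
# spurious correlation.
g_b = [1.0, 2.0]   # batch with more spurious correlation
g_lb = [2.0, 1.0]  # batch with less spurious correlation

g_ext = extrapolate_gradient(g_b, g_lb, beta=0.5)
new_params = sgd_step([0.0, 0.0], g_ext, lr=1.0)
print(g_ext, new_params)
```

Under this parameterization, a single factor interpolates between training on the biased batch and training on the less biased batch, and extrapolates beyond the latter for positive values.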

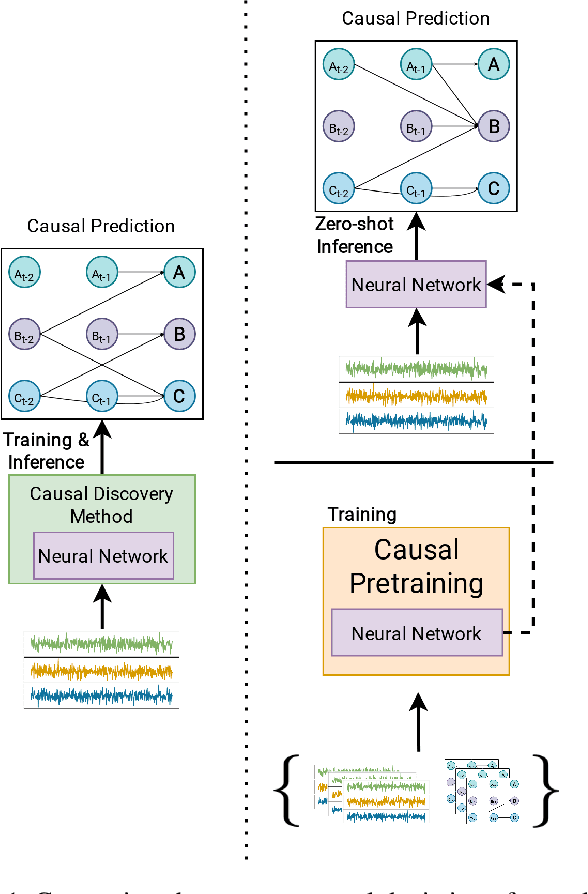

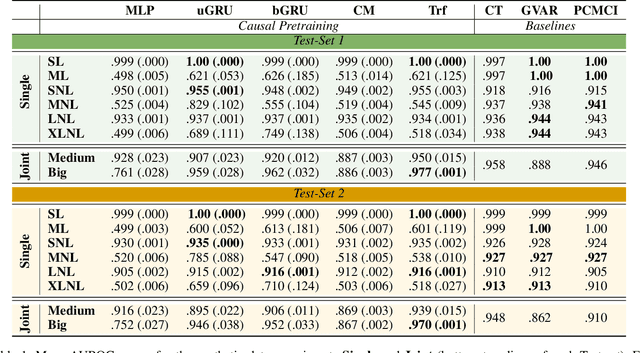

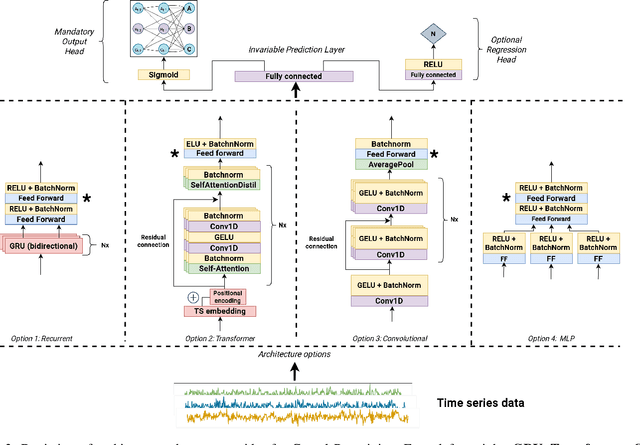

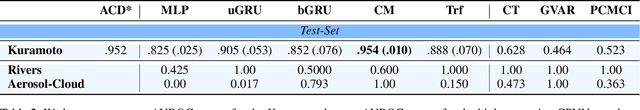

Embracing the black box: Heading towards foundation models for causal discovery from time series data

Feb 14, 2024

Abstract:Causal discovery from time series data encompasses many existing solutions, including those based on deep learning techniques. However, these methods typically do not endorse one of the most prevalent paradigms in deep learning: end-to-end learning. To address this gap, we explore what we call Causal Pretraining, a methodology that aims to learn a direct mapping from multivariate time series to the underlying causal graphs in a supervised manner. Our empirical findings suggest that causal discovery in a supervised manner is possible, assuming that the training and test time series samples share most of their dynamics. More importantly, we found evidence that the performance of Causal Pretraining can increase with data and model size, even if the additional data do not share the same dynamics. Further, we provide examples where causal discovery for real-world data with causally pretrained neural networks is possible within limits. We argue that this hints at the possibility of a foundation model for causal discovery.

Deep Learning-based Group Causal Inference in Multivariate Time-series

Jan 16, 2024

Abstract:Causal inference in a nonlinear system of multivariate time series is instrumental in disentangling the intricate web of relationships among variables, enabling us to make more accurate predictions and gain deeper insights into real-world complex systems. Causality methods typically identify the causal structure of a multivariate system by considering the cause-effect relationship of each pair of variables while ignoring the collective effect of a group of variables or interactions involving more than two time series variables. In this work, we test model invariance by group-level interventions on the trained deep networks to infer causal direction in groups of variables, such as climate and ecosystem, brain networks, etc. Extensive testing with synthetic and real-world time series data shows a significant improvement of our method over other applied group causality methods and provides us insights into real-world time series. The code for our method can be found at: https://github.com/wasimahmadpk/gCause.

Simplified Concrete Dropout -- Improving the Generation of Attribution Masks for Fine-grained Classification

Jul 27, 2023

Abstract:Fine-grained classification is a particular case of a classification problem, aiming to classify objects that share the visual appearance and can only be distinguished by subtle differences. Fine-grained classification models are often deployed to determine animal species or individuals in automated animal monitoring systems. Precise visual explanations of the model's decision are crucial to analyze systematic errors. Attention- or gradient-based methods are commonly used to identify regions in the image that contribute the most to the classification decision. These methods deliver either too coarse or too noisy explanations, unsuitable for identifying subtle visual differences reliably. However, perturbation-based methods can precisely identify pixels causally responsible for the classification result. Fill-in of the dropout (FIDO) algorithm is one of those methods. It utilizes the concrete dropout (CD) to sample a set of attribution masks and updates the sampling parameters based on the output of the classification model. A known problem of the algorithm is a high variance in the gradient estimates, which the authors have mitigated until now by mini-batch updates of the sampling parameters. This paper presents a solution to circumvent these computational instabilities by simplifying the CD sampling and reducing reliance on large mini-batch sizes. First, it allows estimating the parameters with smaller mini-batch sizes without losing the quality of the estimates but with a reduced computational effort. Furthermore, our solution produces finer and more coherent attribution masks. Finally, we use the resulting attribution masks to improve the classification performance of a trained model without additional fine-tuning of the model.

Sequential Causal Effect Variational Autoencoder: Time Series Causal Link Estimation under Hidden Confounding

Sep 23, 2022

Abstract:Estimating causal effects from observational data in the presence of latent variables sometimes leads to spurious relationships which can be misconceived as causal. This is an important issue in many fields such as finance and climate science. We propose Sequential Causal Effect Variational Autoencoder (SCEVAE), a novel method for time series causality analysis under hidden confounding. It is based on the CEVAE framework and recurrent neural networks. The causal link's intensity of the confounded variables is calculated by using direct causal criteria based on Pearl's do-calculus. We show the efficacy of SCEVAE by applying it to synthetic datasets with both linear and nonlinear causal links. Furthermore, we apply our method to real aerosol-cloud-climate observation data. We compare our approach to a time series deconfounding method with and without substitute confounders on the synthetic data. We demonstrate that our method performs better by comparing both methods to the ground truth. In the case of real data, we use the expert knowledge of causal links and show how the use of correct proxy variables aids data reconstruction.

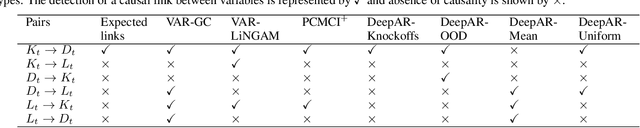

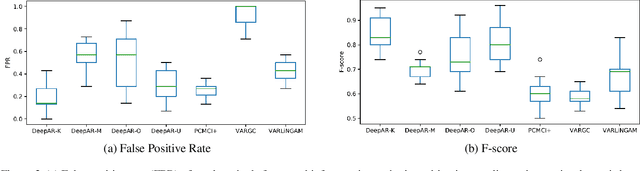

Causal Discovery using Model Invariance through Knockoff Interventions

Jul 08, 2022

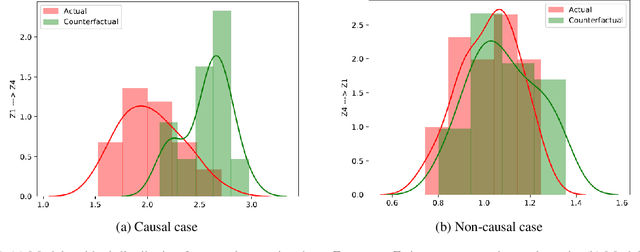

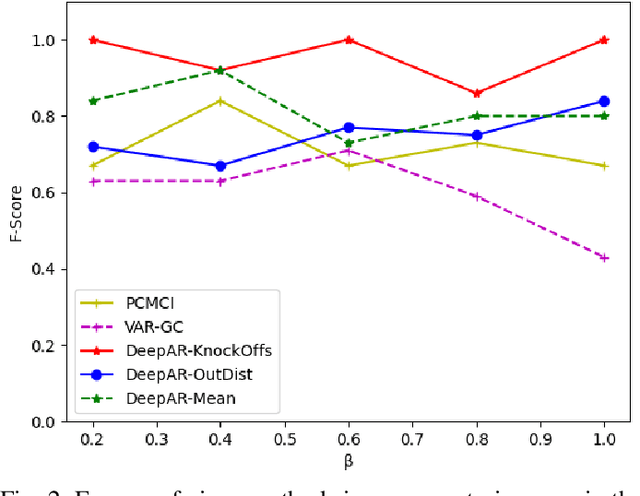

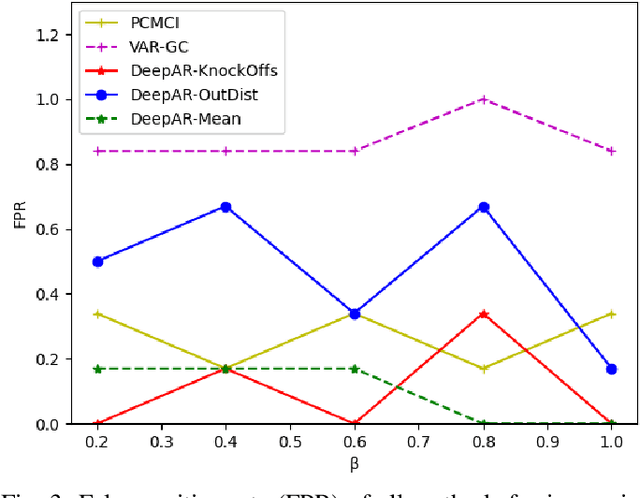

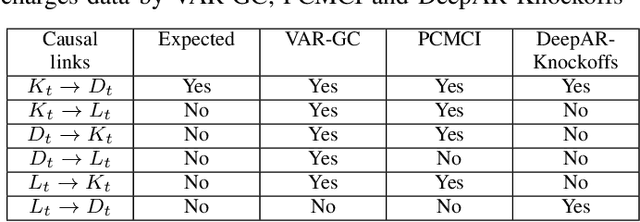

Abstract:Cause-effect analysis is crucial to understand the underlying mechanism of a system. We propose to exploit model invariance through interventions on the predictors to infer causality in nonlinear multivariate systems of time series. We model nonlinear interactions in time series using DeepAR and then expose the model to different environments using Knockoffs-based interventions to test model invariance. Knockoff samples are pairwise exchangeable, in-distribution and statistically null variables generated without knowing the response. We test model invariance where we show that the distribution of the response residual does not change significantly upon interventions on non-causal predictors. We evaluate our method on real and synthetically generated time series. Overall, our method outperforms other widely used causality methods, i.e., VAR Granger causality, VARLiNGAM and PCMCI+.

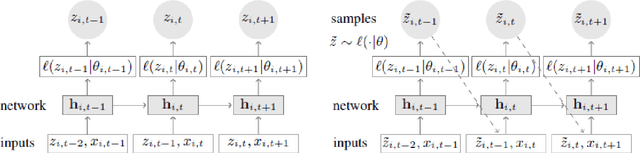

Causal Inference in Non-linear Time-series using Deep Networks and Knockoff Counterfactuals

Oct 18, 2021

Abstract:Estimating causal relations is vital in understanding the complex interactions in multivariate time series. Non-linear coupling of variables is one of the major challenges in accurate estimation of cause-effect relations. In this paper, we propose to use deep autoregressive networks (DeepAR) in tandem with counterfactual analysis to infer nonlinear causal relations in multivariate time series. We extend the concept of Granger causality using probabilistic forecasting with DeepAR. Since deep networks can neither handle missing input nor out-of-distribution intervention, we propose to use the Knockoffs framework (Barber and Candès, 2015) for generating intervention variables and consequently counterfactual probabilistic forecasting. Knockoff samples are independent of their output given the observed variables and exchangeable with their counterpart variables without changing the underlying distribution of the data. We test our method on synthetic as well as real-world time series datasets. Overall, our method outperforms the widely used vector autoregressive Granger causality and PCMCI in detecting nonlinear causal dependency in multivariate time series.

Anomaly Attribution of Multivariate Time Series using Counterfactual Reasoning

Sep 14, 2021

Abstract:There are numerous methods for detecting anomalies in time series, but that is only the first step to understanding them. We strive to exceed this by explaining those anomalies. Thus we develop a novel attribution scheme for multivariate time series relying on counterfactual reasoning. We aim to answer the counterfactual question of whether the anomalous event would have occurred if the subset of the involved variables had been more similarly distributed to the data outside of the anomalous interval. Specifically, we detect anomalous intervals using the Maximally Divergent Interval (MDI) algorithm, replace a subset of variables with their in-distribution values within the detected interval, and observe whether the interval has become less anomalous by re-scoring it with MDI. We evaluate our method on multivariate temporal and spatio-temporal data and confirm the accuracy of our anomaly attribution of multiple well-understood extreme climate events such as heatwaves and hurricanes.
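The replace-and-re-score idea in the abstract can be sketched roughly as below. Note the assumptions: the interval score here is a simple stand-in (standardized shift of the interval mean relative to the rest of the series), not the MDI algorithm, the in-distribution replacement is just the mean outside the interval, and all names and data are hypothetical.

```python
from statistics import fmean, pstdev


def interval_score(series, start, end):
    """Stand-in anomaly score (NOT MDI): summed standardized mean shift
    of each variable inside [start, end) relative to the data outside it."""
    score = 0.0
    for values in series.values():
        inside = values[start:end]
        outside = values[:start] + values[end:]
        spread = pstdev(outside) or 1.0  # guard against zero spread
        score += abs(fmean(inside) - fmean(outside)) / spread
    return score


def attribute(series, start, end):
    """Score drop obtained by replacing one variable at a time with an
    in-distribution value (here: its mean outside the interval)."""
    base = interval_score(series, start, end)
    drops = {}
    for name, values in series.items():
        fill = fmean(values[:start] + values[end:])
        patched = dict(series)
        patched[name] = values[:start] + [fill] * (end - start) + values[end:]
        drops[name] = base - interval_score(patched, start, end)
    return drops


# Toy data (made up): only "temp" behaves unusually in time steps 4 and 5.
series = {
    "temp": [0, 1, 0, 1, 10, 11, 0, 1],
    "wind": [1, 2, 1, 2, 1, 2, 1, 2],
}
drops = attribute(series, start=4, end=6)
print(drops)
```

A large drop for a variable suggests that the interval would have looked far less anomalous had that variable behaved as it does elsewhere, which is the counterfactual reading the abstract describes.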

Counterfactual Generation with Knockoffs

Feb 01, 2021

Abstract:Human interpretability of deep neural networks' decisions is crucial, especially in domains where these directly affect human lives. Counterfactual explanations of already trained neural networks can be generated by perturbing input features and attributing importance according to the change in the classifier's outcome after perturbation. Perturbation can be done by replacing features using heuristic or generative in-filling methods. The choice of in-filling function significantly impacts the number of artifacts, i.e., false-positive attributions. Heuristic methods result in false-positive artifacts because the image after the perturbation is far from the original data distribution. Generative in-filling methods reduce artifacts by producing in-filling values that respect the original data distribution. However, current generative in-filling methods may also increase false-negatives due to the high correlation of in-filling values with the original data. In this paper, we propose to alleviate this by generating in-fillings with the statistically-grounded Knockoffs framework, which was developed by Barber and Candès in 2015 as a tool for variable selection with controllable false discovery rate. Knockoffs are statistically null-variables as decorrelated as possible from the original data, which can be swapped with the originals without changing the underlying data distribution. A comparison of different in-filling methods indicates that in-filling with knockoffs can reveal explanations in a more causal sense while still maintaining the compactness of the explanations.
