M. Eren Akbiyik

G-MEMP: Gaze-Enhanced Multimodal Ego-Motion Prediction in Driving

Dec 13, 2023

Abstract: Understanding the decision-making process of drivers is one of the keys to ensuring road safety. While the driver intent and the resulting ego-motion trajectory are valuable in developing driver-assistance systems, existing methods mostly focus on the motions of other vehicles. In contrast, we focus on inferring the ego trajectory of a driver's vehicle using their gaze data. For this purpose, we first collect a new dataset, GEM, which contains high-fidelity ego-motion videos paired with drivers' eye-tracking data and GPS coordinates. Next, we develop G-MEMP, a novel multimodal ego-trajectory prediction network that combines GPS and video input with gaze data. We also propose a new metric called Path Complexity Index (PCI) to measure the trajectory complexity. We perform extensive evaluations of the proposed method on both GEM and DR(eye)VE, an existing benchmark dataset. The results show that G-MEMP significantly outperforms state-of-the-art methods in both benchmarks. Furthermore, ablation studies demonstrate over 20% improvement in average displacement using gaze data, particularly in challenging driving scenarios with a high PCI. The data, code, and models can be found at https://eth-ait.github.io/g-memp/.
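The abstract reports its gain in terms of average displacement but does not spell out the formula. As a rough illustration, the sketch below assumes the common average displacement error (ADE) definition: the mean Euclidean distance between predicted and ground-truth trajectory points. The function name and the toy trajectories are hypothetical and are not taken from the G-MEMP code.

```python
import math


def average_displacement_error(predicted, ground_truth):
    """Mean Euclidean distance between matching 2D trajectory points.

    Assumed definition (common ADE); the paper's exact metric may differ.
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Toy trajectories (made up): the prediction goes straight,
# while the ground truth drifts away along the y axis.
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
ground_truth = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
ade = average_displacement_error(predicted, ground_truth)
```

Under this definition, a lower value means the predicted path stays closer to the driven path on average.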

Data Augmentation in Training CNNs: Injecting Noise to Images

Jul 12, 2023

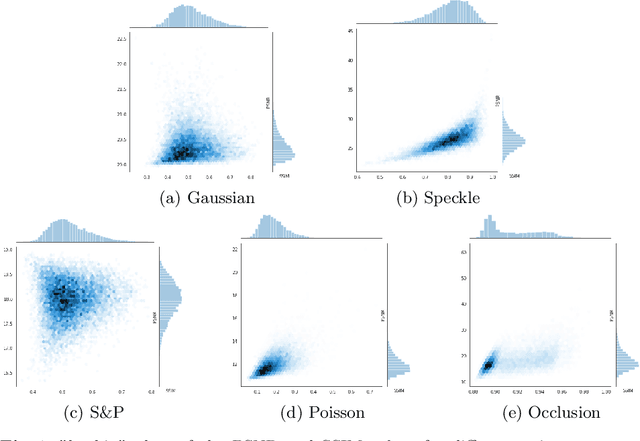

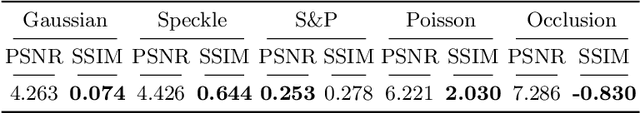

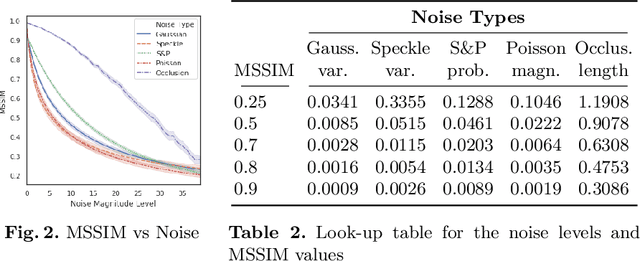

Abstract: Noise injection is a fundamental tool for data augmentation, and yet there is no widely accepted procedure for incorporating it into learning frameworks. This study analyzes the effects of adding or applying different noise models of varying magnitudes to Convolutional Neural Network (CNN) architectures. Noise models with different density functions are assigned common magnitude levels via the Structural Similarity (SSIM) metric in order to create an appropriate basis for comparison. The basic results conform to most of the common notions in machine learning, and also introduce some novel heuristics and recommendations on noise injection. The new approaches will provide a better understanding of optimal learning procedures for image classification.
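The idea of giving different noise models a common magnitude via SSIM can be sketched as follows. This is a minimal illustration, not the study's procedure: it assumes a simplified single-window (global) SSIM on a flat list of pixel intensities in [0, 1], additive Gaussian noise only, and a bisection search for the noise level that reaches a target SSIM. All function names are hypothetical.

```python
import random
import statistics

# Standard SSIM stabilizing constants for a dynamic range of 1.0.
C1, C2 = 0.01 ** 2, 0.03 ** 2


def global_ssim(x, y):
    """Single-window SSIM over whole images (a simplification of windowed SSIM)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    vx = statistics.pvariance(x, mx)
    vy = statistics.pvariance(y, my)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    return ((2 * mx * my + C1) * (2 * cov + C2)) / (
        (mx * mx + my * my + C1) * (vx + vy + C2)
    )


def add_gaussian_noise(pixels, sigma, seed=0):
    """Add zero-mean Gaussian noise and clip to [0, 1]; fixed seed for repeatability."""
    rng = random.Random(seed)
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


def sigma_for_target_ssim(pixels, target, lo=0.0, hi=1.0, steps=30):
    """Bisect on sigma until the noisy image reaches roughly the target SSIM."""
    for _ in range(steps):
        mid = (lo + hi) / 2
        if global_ssim(pixels, add_gaussian_noise(pixels, mid)) > target:
            lo = mid  # still too similar: increase the noise level
        else:
            hi = mid  # too degraded: decrease the noise level
    return (lo + hi) / 2


# Toy "image": a 256-pixel intensity ramp.
image = [i / 255 for i in range(256)]
sigma = sigma_for_target_ssim(image, target=0.8)
achieved = global_ssim(image, add_gaussian_noise(image, sigma))
```

Repeating the same search for another noise model (for example salt-and-pepper noise with a flip probability in place of `sigma`) would yield noise levels that are comparable in the SSIM sense, which is the kind of common ground the abstract describes.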

Ask "Who", Not "What": Bitcoin Volatility Forecasting with Twitter Data

Oct 27, 2021

Abstract: Understanding the variations in trading price (volatility) and their response to external information is a well-studied topic in finance. In this study, we focus on volatility predictions for a relatively new asset class of cryptocurrencies (in particular, Bitcoin) using deep learning representations of public social media data from Twitter. For the field work, we extracted semantic information and user interaction statistics from over 30 million Bitcoin-related tweets, in conjunction with 15-minute intraday price data over a 144-day horizon. Using this data, we built several deep learning architectures that utilized a combination of the gathered information. For all architectures, we conducted ablation studies to assess the influence of each component and feature set in our model. We found statistical evidence for the hypotheses that: (i) temporal convolutional networks perform significantly better than both autoregressive and other deep learning-based models in the literature, and (ii) the tweet author meta-information, even detached from the tweet itself, is a better predictor than the semantic content and tweet volume statistics.
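The abstract does not state how volatility is computed from the 15-minute price data. One common choice, assumed here purely for illustration, is realized volatility: the square root of the sum of squared log returns over a window. The function name and the price series below are hypothetical.

```python
import math


def realized_volatility(prices):
    """Square root of the sum of squared log returns over the window.

    Assumed definition; the paper may use a different volatility estimator.
    """
    if len(prices) < 2:
        raise ValueError("need at least two prices to form a return")
    log_returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    return math.sqrt(sum(r * r for r in log_returns))


# Made-up 15-minute closing prices covering one hour.
prices = [60000.0, 60150.0, 59900.0, 60050.0, 60300.0]
vol = realized_volatility(prices)
```

A forecasting model would then be trained to predict this quantity for the next window from past prices and tweet-derived features.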
