Lydia Abady

A Siamese-based Verification System for Open-set Architecture Attribution of Synthetic Images

Jul 19, 2023

Abstract:Despite the wide variety of methods developed for synthetic image attribution, most of them can only attribute images generated by models or architectures included in the training set and do not work with unknown architectures, hindering their applicability in real-world scenarios. In this paper, we propose a verification framework that relies on a Siamese Network to address the problem of open-set attribution of synthetic images to the architecture that generated them. We consider two different settings. In the first setting, the system determines whether two images have been produced by the same generative architecture or not. In the second setting, the system verifies a claim about the architecture used to generate a synthetic image, utilizing one or multiple reference images generated by the claimed architecture. The main strength of the proposed system is its ability to operate in both closed and open-set scenarios, so that the input images, both the query and the reference images, may or may not belong to the architectures considered during training. Experimental evaluations encompassing various generative architectures such as GANs, diffusion models, and transformers, focusing on synthetic face image generation, confirm the excellent performance of our method in both closed and open-set settings, as well as its strong generalization capabilities.
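The two verification settings described in this abstract can be illustrated with a minimal sketch. It assumes that each image has already been mapped to an embedding vector by one branch of a trained Siamese network; the embedding step, the cosine similarity measure, the averaging over reference images, and the threshold value are all assumptions made for illustration, since the abstract does not specify them.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between two non-zero embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def same_architecture(emb_a, emb_b, threshold=0.5):
    """Setting 1: decide whether two images share a generative architecture.

    emb_a and emb_b are embeddings from a (hypothetical) trained Siamese branch.
    The threshold is an illustrative value, not one taken from the paper.
    """
    return cosine_similarity(emb_a, emb_b) >= threshold

def verify_claim(query_emb, reference_embs, threshold=0.5):
    """Setting 2: verify a claimed architecture using one or more references.

    The similarity scores are averaged over the reference images; this fusion
    rule is an assumption of the sketch.
    """
    scores = [cosine_similarity(query_emb, ref) for ref in reference_embs]
    return float(np.mean(scores)) >= threshold
```

Because the decision depends only on the similarity between embeddings, the same functions apply when the reference images come from an architecture that was not seen during training, which is what makes a verification formulation suitable for the open-set case.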

A One-Class Classifier for the Detection of GAN Manipulated Multi-Spectral Satellite Images

May 19, 2023

Abstract:The highly realistic image quality achieved by current image generative models has many academic and industrial applications. To limit the use of such models to benign applications, though, it is necessary to develop tools that can conclusively detect whether an image has been generated synthetically. For this reason, several detectors have been developed that provide excellent performance in computer vision applications; however, they cannot be applied as they are to multispectral satellite images, and hence new models must be trained. In general, two-class classifiers can achieve very good detection accuracies; however, they are not able to generalise to image domains and generative model architectures different from those used during training. For this reason, in this paper, we propose a one-class classifier based on Vector Quantized Variational Autoencoder 2 (VQ-VAE 2) features to overcome the limitations of two-class classifiers. First, we emphasize the generalization problem that binary classifiers suffer from by training and testing an EfficientNet-B4 architecture on multiple multispectral datasets. Then we show that, since the VQ-VAE 2 based classifier is trained only on pristine images, it is able to detect images belonging to different domains and generated by architectures that have not been used during training. Last, we compare the two classifiers head-to-head on the same generated datasets, highlighting the superior generalization capabilities of the VQ-VAE 2-based detector.
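The idea of training on pristine images only can be sketched as follows. This is an illustrative stand-in, not the method of the paper: it assumes feature vectors have already been extracted (for instance by a VQ-VAE 2 encoder, which is not implemented here), and it uses a Mahalanobis-distance score with a percentile threshold as a generic one-class decision rule. The class name, the scoring rule, and the percentile are all assumptions.

```python
import numpy as np

class PristineOnlyDetector:
    """Hypothetical one-class detector fitted on pristine feature vectors only."""

    def fit(self, pristine_feats, percentile=99.0):
        X = np.asarray(pristine_feats, dtype=float)
        self.mean_ = X.mean(axis=0)
        # Small ridge term keeps the covariance matrix invertible.
        cov = np.cov(X, rowvar=False) + 1e-6 * np.eye(X.shape[1])
        self.prec_ = np.linalg.inv(cov)
        # Threshold chosen so that about 1% of pristine samples are flagged.
        self.threshold_ = np.percentile(self.score(X), percentile)
        return self

    def score(self, feats):
        """Mahalanobis distance of each row from the pristine distribution."""
        D = np.atleast_2d(np.asarray(feats, dtype=float)) - self.mean_
        return np.sqrt(np.einsum("ij,jk,ik->i", D, self.prec_, D))

    def is_synthetic(self, feats):
        """Flag samples whose score exceeds the pristine-derived threshold."""
        return self.score(feats) > self.threshold_
```

No synthetic examples are needed at training time, so the decision rule does not depend on any particular generator. This is the property the abstract credits for the detector's ability to handle unseen architectures and domains.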

An Overview on the Generation and Detection of Synthetic and Manipulated Satellite Images

Sep 19, 2022

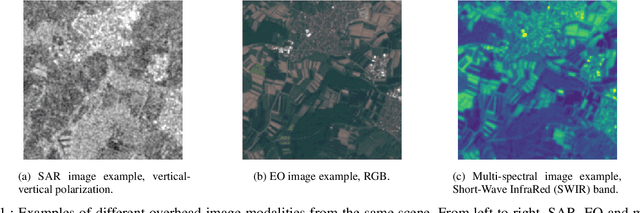

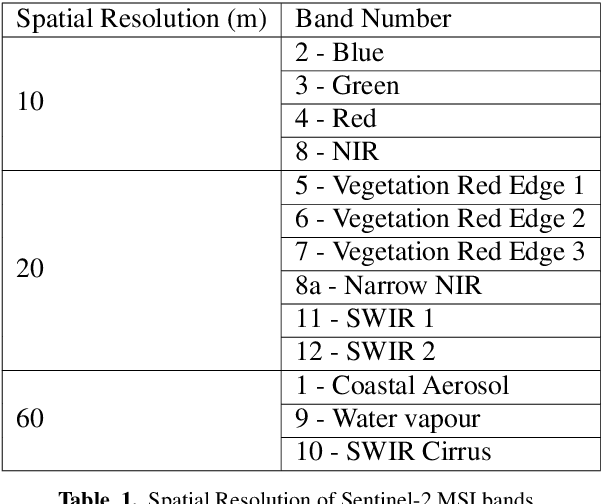

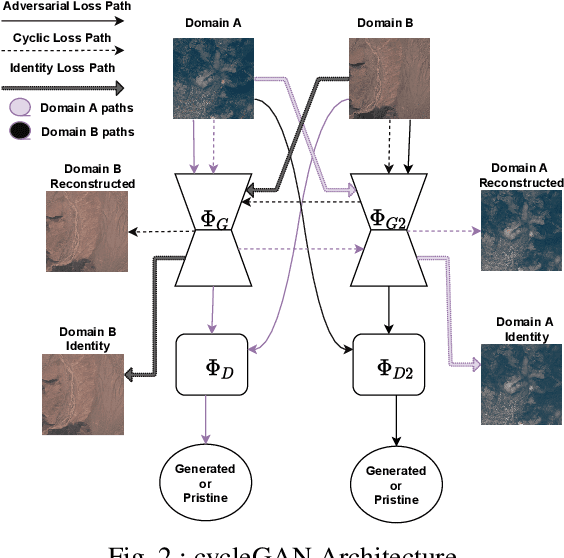

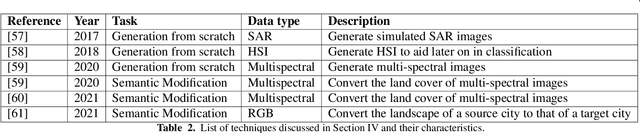

Abstract:Due to the reduction of technological costs and the increase in satellite launches, satellite images are becoming more popular and easier to obtain. Besides serving benevolent purposes, satellite data can also be used for malicious reasons such as misinformation. As a matter of fact, satellite images can be easily manipulated relying on general image editing tools. Moreover, with the surge of Deep Neural Networks (DNNs) that can generate realistic synthetic imagery belonging to various domains, additional threats related to the diffusion of synthetically generated satellite images are emerging. In this paper, we review the State of the Art (SOTA) on the generation and manipulation of satellite images. In particular, we focus on both the generation of synthetic satellite imagery from scratch, and the semantic manipulation of satellite images by means of image-transfer technologies, including the transformation of images obtained from one type of sensor to another one. We also describe forensic detection techniques that have been researched so far to classify and detect synthetic image forgeries. While we focus mostly on forensic techniques explicitly tailored to the detection of AI-generated synthetic contents, we also review some methods designed for general splicing detection, which can in principle also be used to spot AI-manipulated images.
