Luong Trung Nguyen

Semantic-Preserving Augmentation for Robust Image-Text Retrieval

Mar 10, 2023Abstract:Image text retrieval is a task to search for the proper textual descriptions of the visual world and vice versa. One challenge of this task is the vulnerability to input image and text corruptions. Such corruptions are often unobserved during the training, and degrade the retrieval model decision quality substantially. In this paper, we propose a novel image text retrieval technique, referred to as robust visual semantic embedding (RVSE), which consists of novel image-based and text-based augmentation techniques called semantic preserving augmentation for image (SPAugI) and text (SPAugT). Since SPAugI and SPAugT change the original data in a way that its semantic information is preserved, we enforce the feature extractors to generate semantic aware embedding vectors regardless of the corruption, improving the model robustness significantly. From extensive experiments using benchmark datasets, we show that RVSE outperforms conventional retrieval schemes in terms of image-text retrieval performance.

Gradual Federated Learning with Simulated Annealing

Oct 11, 2021

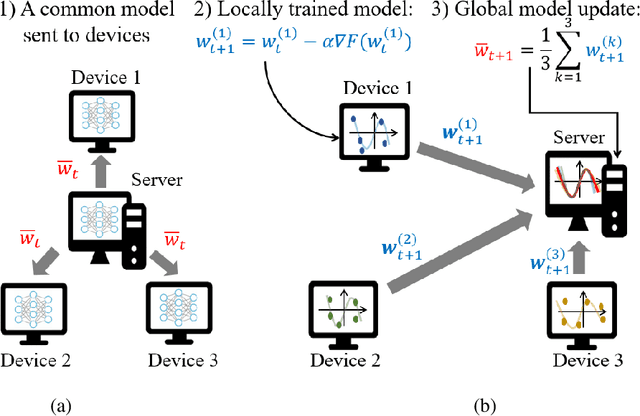

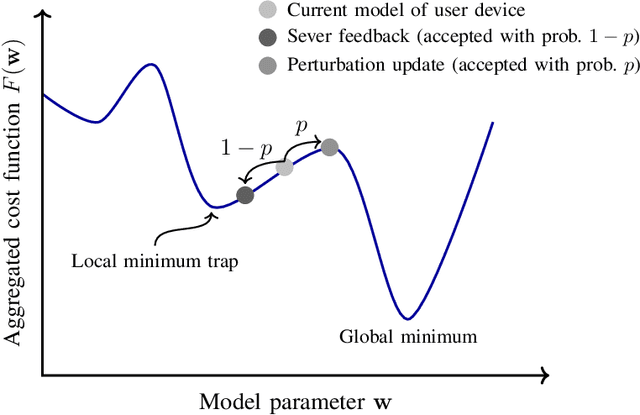

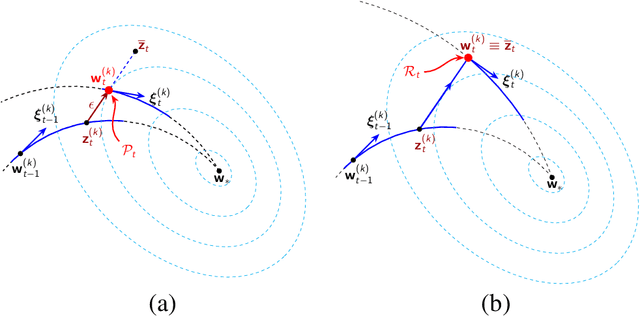

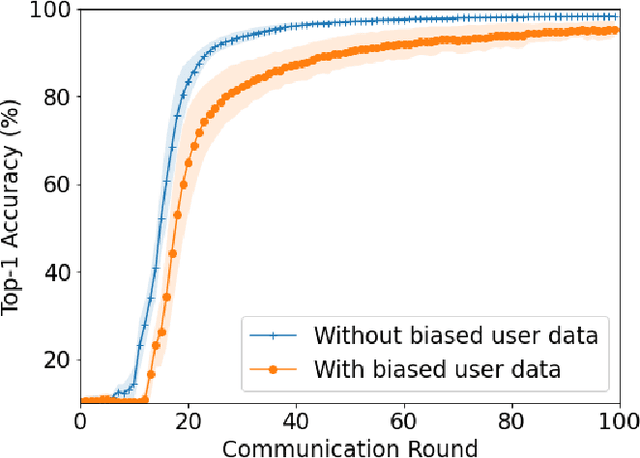

Abstract:Federated averaging (FedAvg) is a popular federated learning (FL) technique that updates the global model by averaging local models and then transmits the updated global model to devices for their local model update. One main limitation of FedAvg is that the average-based global model is not necessarily better than local models in the early stage of the training process so that FedAvg might diverge in realistic scenarios, especially when the data is non-identically distributed across devices and the number of data samples varies significantly from device to device. In this paper, we propose a new FL technique based on simulated annealing. The key idea of the proposed technique, henceforth referred to as \textit{simulated annealing-based FL} (SAFL), is to allow a device to choose its local model when the global model is immature. Specifically, by exploiting the simulated annealing strategy, we make each device choose its local model with high probability in early iterations when the global model is immature. From extensive numerical experiments using various benchmark datasets, we demonstrate that SAFL outperforms the conventional FedAvg technique in terms of the convergence speed and the classification accuracy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge