Lukasz Tulczyjew

LLMcap: Large Language Model for Unsupervised PCAP Failure Detection

Jul 03, 2024

Abstract: The integration of advanced technologies into telecommunication networks complicates troubleshooting, posing challenges for manual error identification in Packet Capture (PCAP) data. This manual approach, requiring substantial resources, becomes impractical at larger scales. Machine learning (ML) methods offer alternatives, but the scarcity of labeled data limits accuracy. In this study, we propose a self-supervised, large language model-based (LLMcap) method for PCAP failure detection. LLMcap leverages language-learning abilities and employs masked language modeling to learn grammar, context, and structure. Tested rigorously on various PCAPs, it demonstrates high accuracy despite the absence of labeled data during training, presenting a promising solution for efficient network analysis.

Index Terms: Network troubleshooting, Packet Capture Analysis, Self-Supervised Learning, Large Language Model, Network Quality of Service, Network Performance.
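
The masked-modeling idea can be illustrated with a toy stand-in. The sketch below is not LLMcap: instead of a language model it uses simple neighbour counts, and the token names, function names, and smoothing constant are all hypothetical. It hides each token of a sequence in turn, estimates how likely that token is given its left and right neighbours in failure-free training sequences, and reports the least likely position.

```python
from collections import Counter, defaultdict
import math


def train(sequences):
    """Count which token appears between each (left, right) neighbour pair."""
    context_counts = defaultdict(Counter)
    vocab = set()
    for seq in sequences:
        padded = ["<s>"] + list(seq) + ["</s>"]
        vocab.update(seq)
        for i in range(1, len(padded) - 1):
            context_counts[(padded[i - 1], padded[i + 1])][padded[i]] += 1
    return context_counts, vocab


def masked_scores(seq, context_counts, vocab, alpha=0.1):
    """Return -log P(token | neighbours) per position (add-alpha smoothing)."""
    padded = ["<s>"] + list(seq) + ["</s>"]
    size = len(vocab) + 1  # one extra slot for tokens never seen in training
    scores = []
    for i in range(1, len(padded) - 1):
        counts = context_counts.get((padded[i - 1], padded[i + 1]), Counter())
        total = sum(counts.values())
        prob = (counts[padded[i]] + alpha) / (total + alpha * size)
        scores.append(-math.log(prob))
    return scores


def most_suspicious(seq, context_counts, vocab):
    """Return (index, token) of the position with the highest surprise score."""
    scores = masked_scores(seq, context_counts, vocab)
    idx = max(range(len(scores)), key=scores.__getitem__)
    return idx, seq[idx]


# Hypothetical message-type tokens; no labels are used, only normal traffic.
normal_traffic = [["SYN", "SYN-ACK", "ACK", "DATA", "FIN"]] * 20
model_counts, model_vocab = train(normal_traffic)
print(most_suspicious(["SYN", "SYN-ACK", "RST", "DATA", "FIN"],
                      model_counts, model_vocab))
```

The training step sees only failure-free sequences, which mirrors the label-free setting described in the abstract; a real system would replace the count table with a trained masked language model.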

Red Teaming Models for Hyperspectral Image Analysis Using Explainable AI

Mar 14, 2024

Abstract: Remote sensing (RS) applications in the space domain demand machine learning (ML) models that are reliable, robust, and quality-assured, making red teaming a vital approach for identifying and exposing potential flaws and biases. Since both fields advance independently, there is a notable gap in integrating red teaming strategies into RS. This paper introduces a methodology for examining ML models operating on hyperspectral images within the HYPERVIEW challenge, focusing on the estimation of soil parameters. We use post-hoc explanation methods from the Explainable AI (XAI) domain to critically assess the best-performing model, which won the HYPERVIEW challenge and served as an inspiration for the model deployed on board the INTUITION-1 hyperspectral mission. Our approach effectively red teams the model by pinpointing and validating key shortcomings, and by constructing a model that achieves comparable performance using just 1% of the input features, with a performance loss of at most 5%. Additionally, we propose a novel way of visualizing explanations that integrates domain-specific information about hyperspectral bands (wavelengths) and data transformations to better suit the interpretation of models for hyperspectral image analysis.

A Multibranch Convolutional Neural Network for Hyperspectral Unmixing

Aug 03, 2022

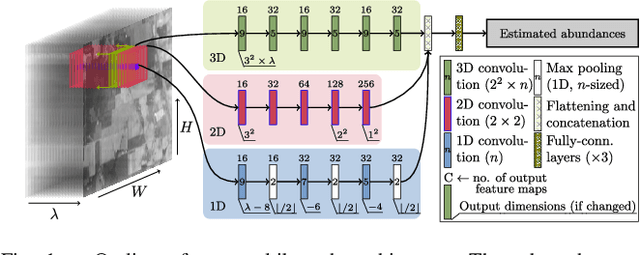

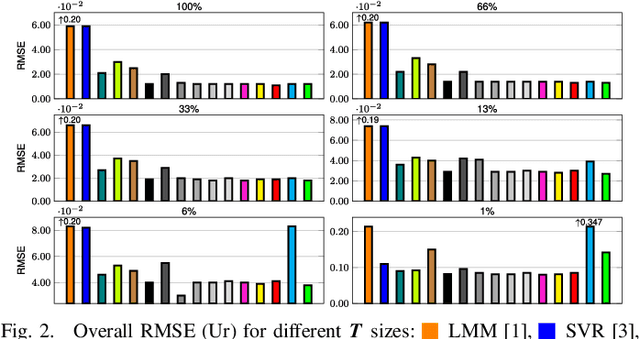

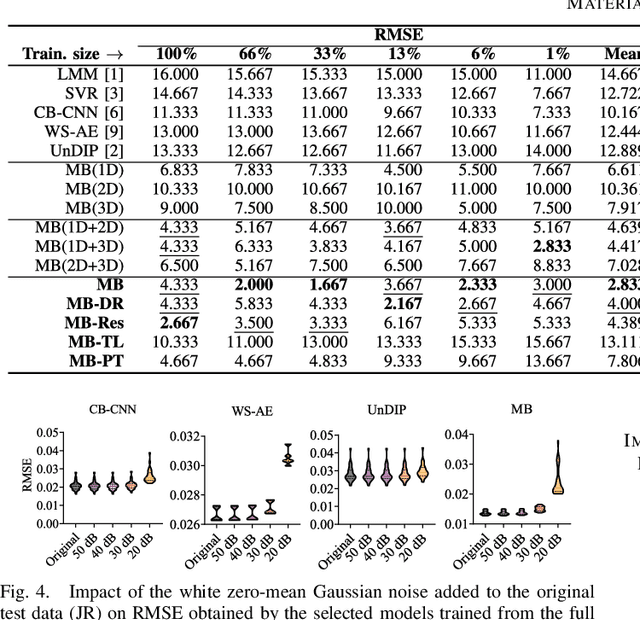

Abstract: Hyperspectral unmixing remains one of the most challenging tasks in hyperspectral data analysis. Deep learning has been blooming in the field: it has been shown to outperform classic unmixing techniques and can be effectively deployed onboard Earth observation satellites equipped with hyperspectral imagers. In this letter, we follow this research pathway and propose a multi-branch convolutional neural network that benefits from fusing spectral, spatial, and spectral-spatial features in the unmixing process. The results of our experiments, backed up with the ablation study, revealed that our techniques outperform others from the literature and lead to higher-quality fractional abundance estimation. We also investigated the influence of reducing the training sets on the capabilities of all algorithms, as well as their robustness against noise, because capturing large and representative ground-truth sets is time-consuming and costly in practice, especially in emerging Earth observation scenarios.
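
For readers unfamiliar with the term, fractional abundances are often introduced through the classical linear mixing model, in which a pixel spectrum is a weighted sum of pure endmember spectra with non-negative weights that sum to one. The sketch below is that textbook baseline restricted to two endmembers, not the multi-branch network proposed in the letter; the spectra and the function name are made up for illustration.

```python
def unmix_two_endmembers(pixel, e1, e2):
    """Least-squares abundances (a1, a2) with a1 + a2 = 1 and 0 <= a1 <= 1.

    Model (an assumption of this sketch): pixel = a1 * e1 + (1 - a1) * e2.
    """
    diff = [a - b for a, b in zip(e1, e2)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("endmembers must differ")
    # Project (pixel - e2) onto (e1 - e2) to get the unconstrained optimum.
    a1 = sum((p - b) * d for p, b, d in zip(pixel, e2, diff)) / denom
    # Clip to [0, 1]; for this one-dimensional problem that is the
    # constrained least-squares solution.
    a1 = min(1.0, max(0.0, a1))
    return a1, 1.0 - a1


# Hypothetical three-band endmember spectra and a 30% / 70% mixture.
soil = [0.1, 0.4, 0.8]
water = [0.6, 0.5, 0.2]
mixed = [0.3 * s + 0.7 * w for s, w in zip(soil, water)]
print(unmix_two_endmembers(mixed, soil, water))
```

With more endmembers or noisy data, the same model is usually solved with a constrained least-squares routine rather than this closed form.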

* 14 pages (including supplementary material), published in IEEE Geoscience and Remote Sensing Letters

Graph Neural Networks Extract High-Resolution Cultivated Land Maps from Sentinel-2 Image Series

Aug 03, 2022

Abstract: Maintaining farm sustainability by optimizing agricultural management practices helps build a more planet-friendly environment. Emerging satellite missions can acquire multi- and hyperspectral imagery, which captures more detailed spectral information about the scanned area and hence allows us to benefit from subtle spectral features during analysis in agricultural applications. We introduce an approach for extracting 2.5 m cultivated land maps from 10 m Sentinel-2 multispectral image series, which benefits from a compact graph convolutional neural network. The experiments indicate that our models not only outperform classical and deep machine learning techniques by delivering higher-quality segmentation maps, but also dramatically reduce the memory footprint when compared to U-Nets (almost 8k trainable parameters in our models, versus up to 31M parameters in U-Nets). Such memory frugality is pivotal in missions which allow us to uplink a model to the AI-powered satellite once it is in orbit, as sending large nets is impossible due to time constraints.

* 7 pages (including supplementary material), published in IEEE Geoscience and Remote Sensing Letters

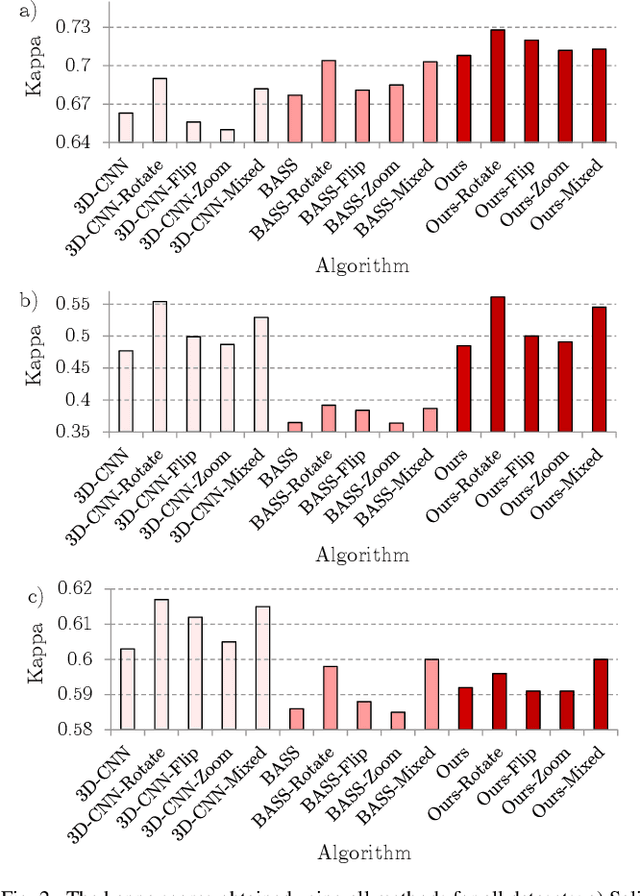

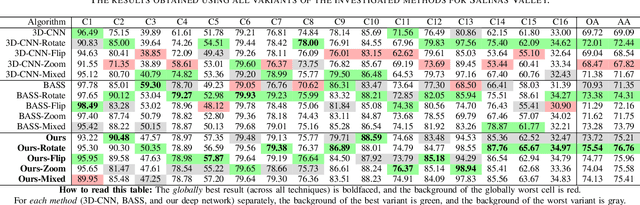

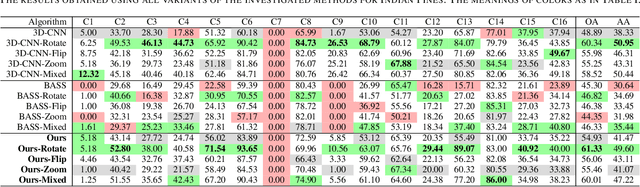

Segmenting Hyperspectral Images Using Spectral-Spatial Convolutional Neural Networks With Training-Time Data Augmentation

Jul 27, 2019

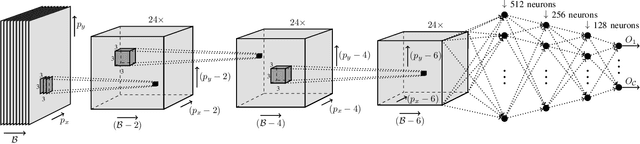

Abstract: Hyperspectral imaging provides detailed information about the scanned objects, as it captures their spectral characteristics within a large number of wavelength bands. Classification of such data has become an active research topic due to its wide applicability in a variety of fields. Deep learning has established the state of the art in the area, and it constitutes the current research mainstream. In this letter, we introduce a new spectral-spatial convolutional neural network that benefits from a battery of data augmentation techniques, which help deal with the real-life problem of scarce ground-truth training data. Our rigorous experiments showed that the proposed method outperforms other spectral-spatial techniques from the literature, and delivers precise hyperspectral classification in real time.
