Michal Myller

Segmenting Hyperspectral Images Using Spectral-Spatial Convolutional Neural Networks With Training-Time Data Augmentation

Jul 27, 2019

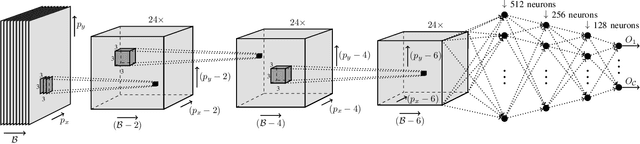

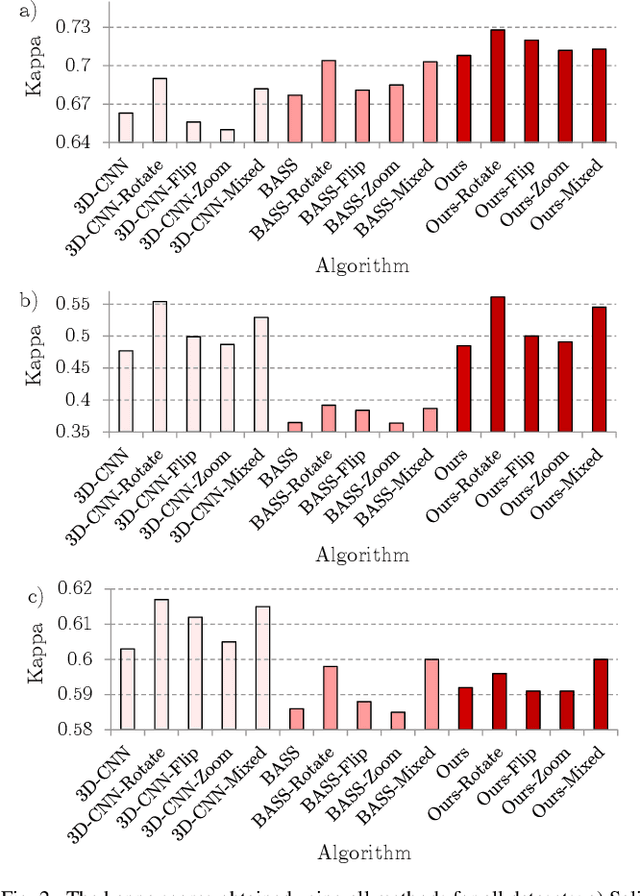

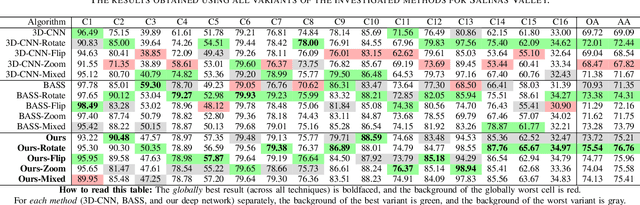

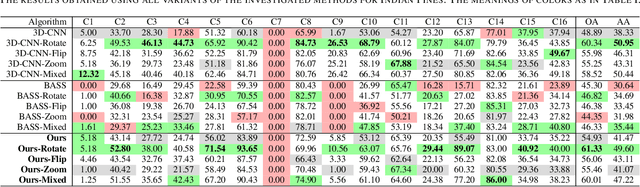

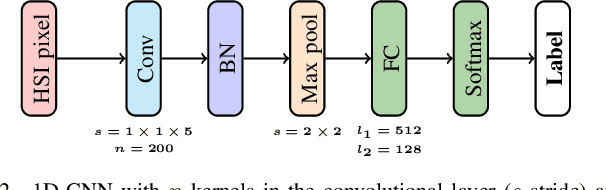

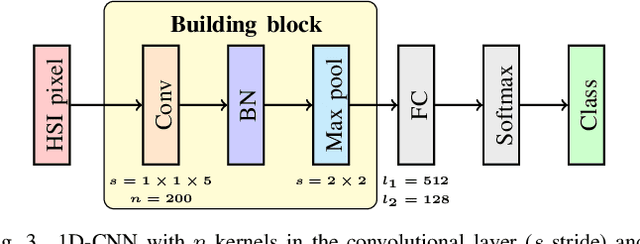

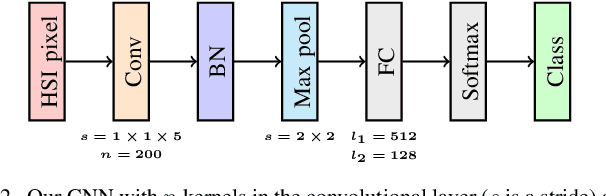

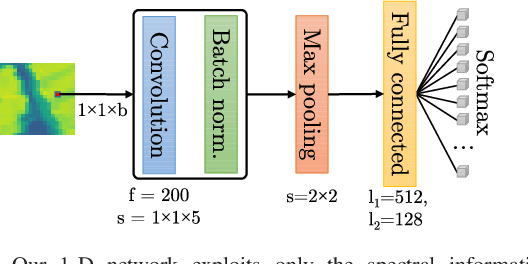

Abstract: Hyperspectral imaging provides detailed information about the scanned objects, as it captures their spectral characteristics within a large number of wavelength bands. Classification of such data has become an active research topic due to its wide applicability in a variety of fields. Deep learning has established the state of the art in the area, and it constitutes the current research mainstream. In this letter, we introduce a new spectral-spatial convolutional neural network, benefiting from a battery of data augmentation techniques that help deal with the real-life problem of scarce ground-truth training data. Our rigorous experiments showed that the proposed method outperforms other spectral-spatial techniques from the literature, and delivers precise hyperspectral classification in real time.

Unsupervised Segmentation of Hyperspectral Images Using 3D Convolutional Autoencoders

Jul 20, 2019

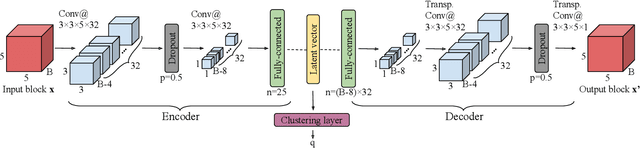

Abstract: Hyperspectral image analysis has become an important topic widely researched by the remote sensing community. Classification and segmentation of such imagery help understand the underlying materials within a scanned scene, since hyperspectral images convey detailed information captured in a number of spectral bands. Although deep learning has established the state of the art in the field, it remains challenging to train well-generalizing models due to the lack of ground-truth data. In this letter, we tackle this problem and propose an end-to-end approach to segment hyperspectral images in a fully unsupervised way. We introduce a new deep architecture which couples 3D convolutional autoencoders with clustering. Our multi-faceted experimental study, performed over benchmark and real-life data, revealed that our approach delivers high-quality segmentation without any prior class labels.

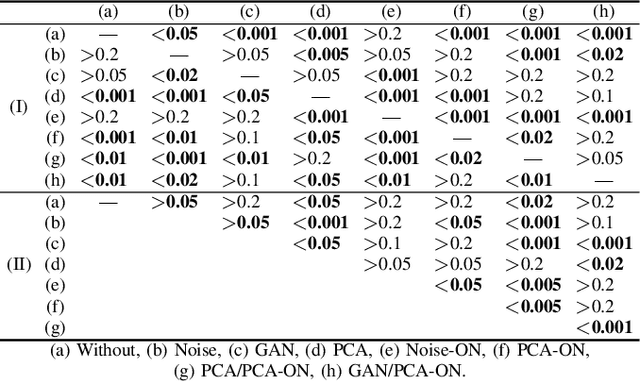

Transfer Learning for Segmenting Dimensionally-Reduced Hyperspectral Images

Jun 23, 2019

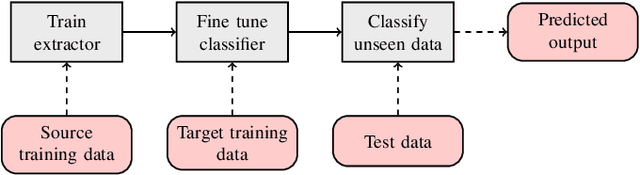

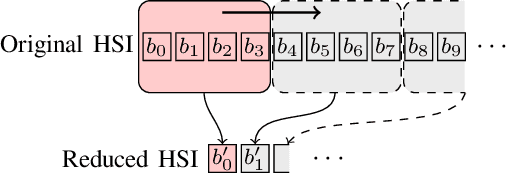

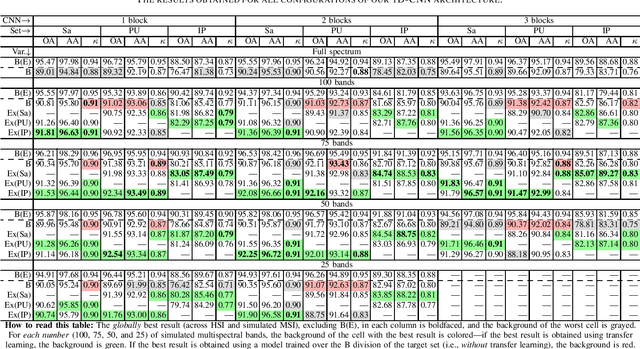

Abstract: Deep learning has established the state of the art in multiple fields, including hyperspectral image analysis. However, training large-capacity learners to segment such imagery requires representative training sets. Acquiring such data is human-dependent and time-consuming, especially in Earth observation scenarios, where the hyperspectral data transfer is very costly and time-constrained. In this letter, we show how to effectively deal with a limited number and size of available hyperspectral ground-truth sets, and apply transfer learning for building deep feature extractors. Also, we exploit spectral dimensionality reduction to make our technique applicable over hyperspectral data acquired using different sensors, which may capture different numbers of hyperspectral bands. The experiments, performed over several benchmarks and backed up with statistical tests, indicated that our approach allows us to effectively train well-generalizing deep convolutional neural nets even using significantly reduced data.
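The role of spectral dimensionality reduction here can be illustrated with a minimal sketch. It assumes a simple band-averaging scheme, which the abstract does not specify: spectra from sensors with different band counts are mapped to a common length so that one feature extractor can accept both. The function name `reduce_bands` and the target length are hypothetical.

```python
def reduce_bands(spectrum, n_out):
    """Average contiguous groups of bands so the result has n_out values.

    Assumed scheme for illustration only; the abstract does not state
    which reduction the authors use.
    """
    n_in = len(spectrum)
    if not 0 < n_out <= n_in:
        raise ValueError("n_out must be between 1 and the number of input bands")
    reduced = []
    for i in range(n_out):
        # Integer arithmetic gives group boundaries that cover every band once.
        start = i * n_in // n_out
        stop = (i + 1) * n_in // n_out
        group = spectrum[start:stop]
        reduced.append(sum(group) / len(group))
    return reduced


# Two hypothetical sensors with 200 and 103 bands end up with the same length.
pixel_a = reduce_bands([float(b) for b in range(200)], 75)
pixel_b = reduce_bands([float(b) for b in range(103)], 75)
```

Once both sensors produce vectors of the same length, a network pre-trained on one data set can, in principle, be fine-tuned on the other without changing its input layer.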

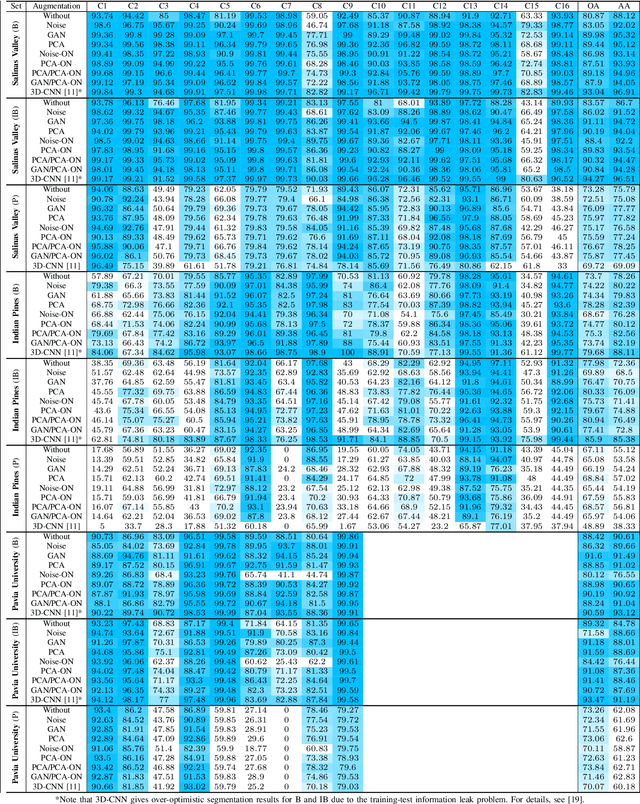

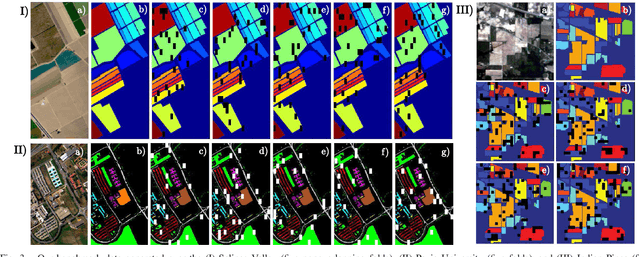

Hyperspectral Data Augmentation

Mar 13, 2019

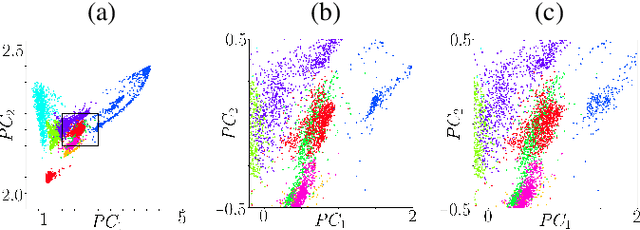

Abstract: Data augmentation is a popular technique which helps improve generalization capabilities of deep neural networks. It plays a pivotal role in remote-sensing scenarios in which the amount of high-quality ground-truth data is limited, and acquiring new examples is costly or impossible. This is a common problem in hyperspectral imaging, where manual annotation of image data is difficult, expensive, and prone to human bias. In this letter, we propose online data augmentation of hyperspectral data which is executed during inference rather than before the training of deep networks. This is in contrast to all other state-of-the-art hyperspectral augmentation algorithms, which increase the size (and representativeness) of training sets. Additionally, we introduce a new principal component analysis-based augmentation. The experiments revealed that our data augmentation algorithms improve generalization of deep networks and work in real time, and that the online approach can be effectively combined with offline techniques to enhance the classification accuracy.
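Inference-time augmentation of this kind can be sketched as follows. This is an illustration under stated assumptions, not the authors' algorithm: the augmentation is plain Gaussian noise on the spectrum, the predictions are combined by averaging class probabilities, and `predict_proba` stands for any hypothetical classifier that maps a spectrum to a list of class probabilities.

```python
import random


def tta_predict(predict_proba, spectrum, n_aug=4, noise=0.01, seed=0):
    """Classify a pixel together with noisy copies of it and average the results.

    predict_proba is a hypothetical callable: spectrum -> list of class
    probabilities. Noise-based augmentation and probability averaging are
    assumptions made for this sketch.
    """
    rng = random.Random(seed)
    # The original spectrum is always part of the ensemble.
    variants = [list(spectrum)]
    for _ in range(n_aug):
        variants.append([v + rng.gauss(0.0, noise) for v in spectrum])
    probs = [predict_proba(v) for v in variants]
    n_classes = len(probs[0])
    mean = [sum(p[k] for p in probs) / len(probs) for k in range(n_classes)]
    # Return the winning class index and the averaged probabilities.
    label = max(range(n_classes), key=mean.__getitem__)
    return label, mean
```

Because the training set is left untouched, a scheme like this can be layered on top of a model that was already trained with offline augmentation.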

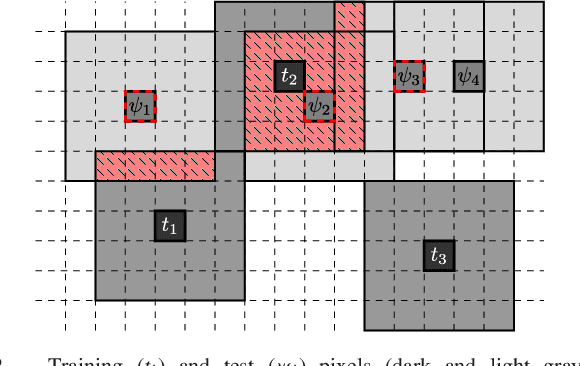

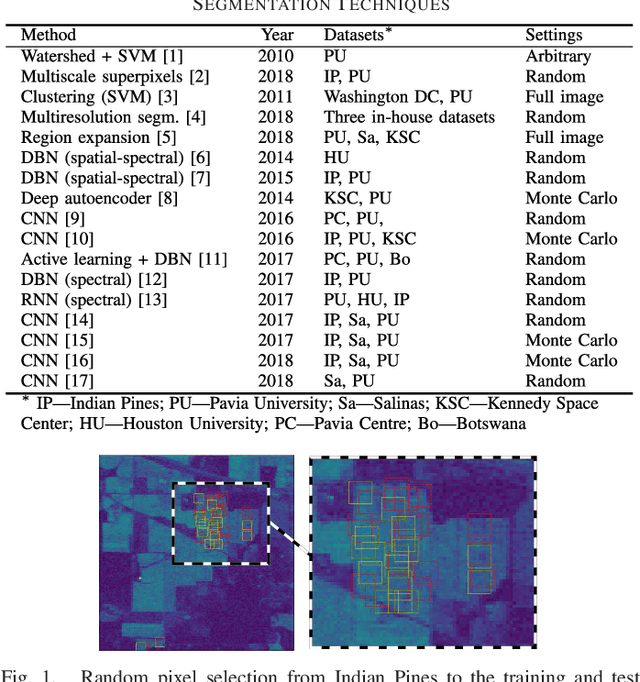

Validating Hyperspectral Image Segmentation

Nov 08, 2018

Abstract: Hyperspectral satellite imaging attracts enormous research attention in the remote sensing community, hence automated approaches for precise segmentation of such imagery are being rapidly developed. In this letter, we share our observations on the strategy for validating hyperspectral image segmentation algorithms currently followed in the literature, and show that it can lead to over-optimistic experimental insights. We introduce a new routine for generating segmentation benchmarks, and use it to elaborate ready-to-use hyperspectral training-test data partitions. They can be utilized for fair validation of new and existing algorithms without any training-test data leakage.
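One way to picture a leakage-free partition is a patch-based split, sketched below. This is a minimal illustration and not necessarily the routine the authors propose: training pixels come from whole randomly chosen patches, and test pixels lying within a margin of any training patch are discarded. The function `patch_split` and all of its parameters are hypothetical.

```python
import random


def patch_split(height, width, patch, n_train_patches, margin=0, seed=0):
    """Split pixel coordinates into training and test sets by whole patches."""
    rng = random.Random(seed)
    # Tile the image into non-overlapping patch x patch blocks.
    blocks = [(r, c)
              for r in range(0, height - patch + 1, patch)
              for c in range(0, width - patch + 1, patch)]
    chosen = rng.sample(blocks, n_train_patches)
    train = {(r + dr, c + dc)
             for r, c in chosen
             for dr in range(patch)
             for dc in range(patch)}
    # Test pixels within `margin` of a training patch are dropped, so a window
    # of radius `margin` around a test pixel never contains a training pixel.
    blocked = {(r + dr, c + dc)
               for r, c in chosen
               for dr in range(-margin, patch + margin)
               for dc in range(-margin, patch + margin)}
    every_pixel = {(r, c) for r in range(height) for c in range(width)}
    test = every_pixel - blocked
    return train, test


train, test = patch_split(20, 20, patch=5, n_train_patches=3, margin=2, seed=1)
```

By contrast, sampling training and test pixels at random from the same image lets the spatial neighbourhoods used by spectral-spatial models overlap, which is the kind of leakage the abstract warns about.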
