Luis A. Pineda

Entropic Hetero-Associative Memory

Nov 02, 2024

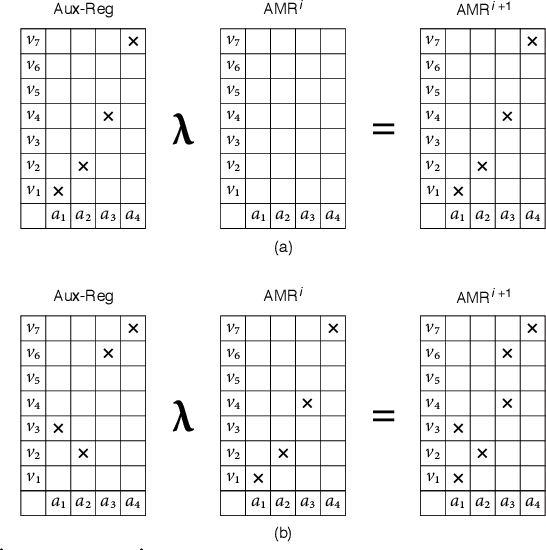

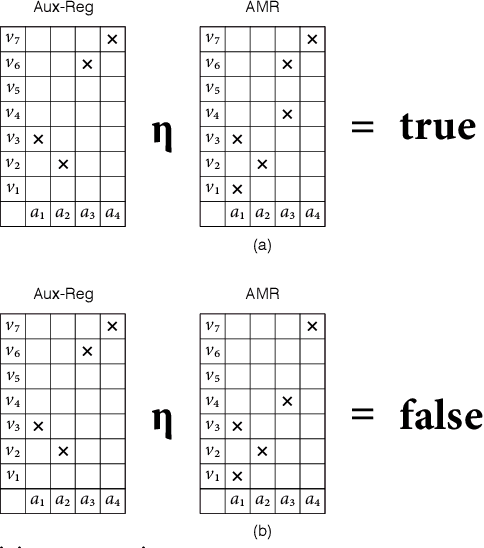

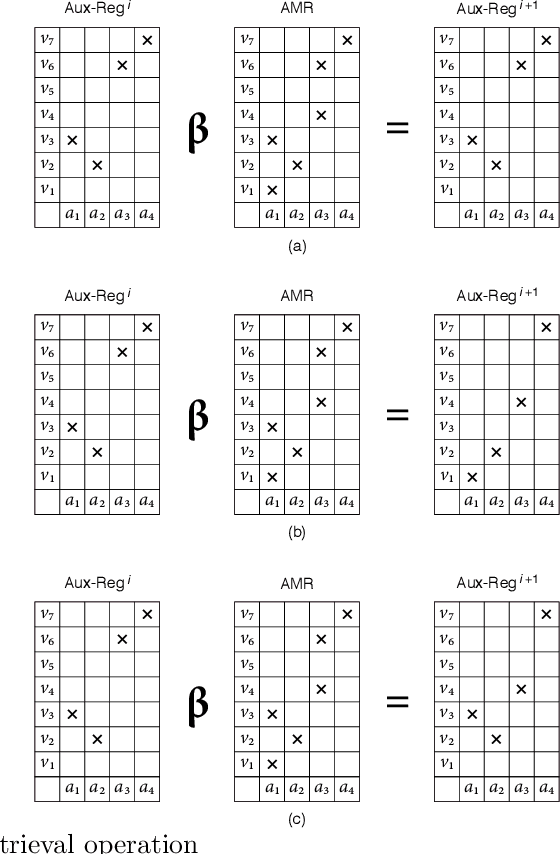

Abstract: The Entropic Associative Memory holds objects in a 2D relation or "memory plane" using a finite table as the medium. Memory objects are stored by simultaneously reinforcing the cells used by the cue, implementing a form of Hebb's learning rule. Stored objects are "overlapped" on the medium, hence the memory is indeterminate and has an entropy value at each state. The retrieval operation constructs an object from the cue and this indeterminate content. In this paper we present the extension to the hetero-associative case, in which these properties are preserved. Pairs of hetero-associated objects, possibly of different domains and/or modalities, are held in a 4D relation. The memory retrieval operation selects a largely indeterminate 2D memory plane that is specific to the input cue; however, there is no cue left to retrieve an object from that plane. We propose three incremental methods to address this missing-cue problem, which we call random, sample and test, and search and test. The model is assessed with composite recollections consisting of manuscript digits and letters selected from the MNIST and EMNIST corpora, respectively, such that cue digits retrieve their associated letters and vice versa. We show the memory performance and illustrate the memory retrieval operation using all three methods. The system shows promise for storing, recognizing and retrieving very large sets of objects with very limited computing resources.
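The 4D relation and the cue-specific 2D plane described in this abstract can be pictured with a small sketch. It is only an illustration under stated assumptions, not the paper's implementation: objects are assumed to be functions with one value per column, the helper names (`one_hot`, `store_pair`, `project`, `retrieve_random`) are hypothetical, and the sampling step is a loose reading of the "random" method, whose details the abstract does not give.

```python
import numpy as np

def one_hot(values, n_rows):
    """Encode one value per column as a 0/1 table of shape (n_cols, n_rows)."""
    values = np.asarray(values)
    table = np.zeros((values.size, n_rows))
    table[np.arange(values.size), values] = 1.0
    return table

def store_pair(memory, a, b):
    """Reinforce every 4D cell jointly used by a and b (Hebbian-style counts).

    memory has shape (cols_a, rows_a, cols_b, rows_b) and is updated in place.
    """
    memory += np.einsum("ij,kl->ijkl", a, b)
    return memory

def project(memory, a):
    """Select the 2D plane over b's domain that is specific to the cue a."""
    return np.einsum("ij,ijkl->kl", a, memory)

def retrieve_random(plane, rng):
    """Assumed 'random' strategy: sample one value per column of the plane,
    with probability proportional to the accumulated weights."""
    out = []
    for col in plane:
        total = col.sum()
        # Fall back to a uniform choice when a column holds no weight.
        p = col / total if total > 0 else np.full(col.size, 1.0 / col.size)
        out.append(rng.choice(col.size, p=p))
    return np.array(out)

rng = np.random.default_rng(0)
memory = np.zeros((3, 4, 2, 5))      # a: 3 columns x 4 rows, b: 2 columns x 5 rows
a = one_hot([0, 2, 1], 4)
b = one_hot([4, 0], 5)
store_pair(memory, a, b)
recovered = retrieve_random(project(memory, a), rng)
```

With a single stored pair the projected plane holds weight only on the cells of `b`, so the sampled object coincides with `b`; as more pairs are stored the plane becomes indeterminate, which is the situation the three proposed methods are meant to handle.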

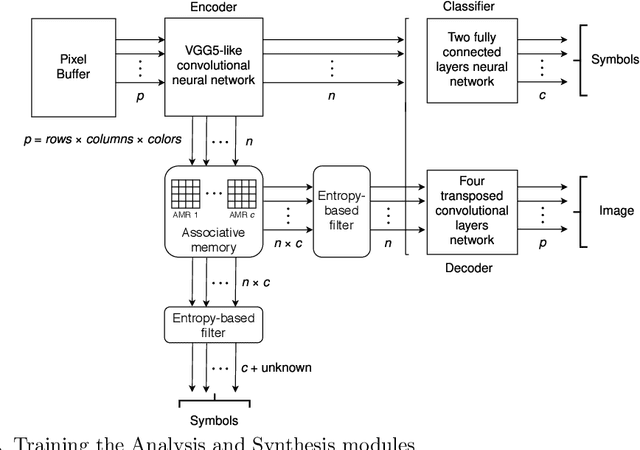

Entropic associative memory for real world images

May 21, 2024

Abstract: The entropic associative memory (EAM) is a computational model of natural memory incorporating some of its putative properties: being associative, distributed, declarative, abstractive and constructive. Previous experiments satisfactorily tested the model on structured, homogeneous and conventional data: images of manuscript digits and letters, images of clothing, and phone representations. In this work we show that EAM appropriately stores, recognizes and retrieves complex and unconventional images of animals and vehicles. Additionally, the memory system generates meaningful retrieval association chains for such complex images. The retrieved objects can be seen as proper memories, associated recollections or products of imagination.

Entropic Associative Memory for Manuscript Symbols

Feb 17, 2022

Abstract: Manuscript symbols can be stored, recognized and retrieved from an entropic digital memory that is associative and distributed yet declarative; memory retrieval is a constructive operation, memory cues to objects not contained in the memory are rejected directly without search, and memory operations can be performed through parallel computations. Manuscript symbols, both letters and numerals, are represented in Associative Memory Registers that have an associated entropy. The memory recognition operation obeys an entropy trade-off between precision and recall, and the entropy level affects the quality of the objects recovered through the memory retrieval operation. The present proposal is contrasted in several dimensions with neural network models of associative memory. We discuss the operational characteristics of the entropic associative memory for retrieving objects with both complete and incomplete information, such as severe occlusions. The experiments reported in this paper add evidence of the potential of this framework for developing practical applications and computational models of natural memory.

Deliberative and Conceptual Inference in Service Robots

Dec 13, 2020

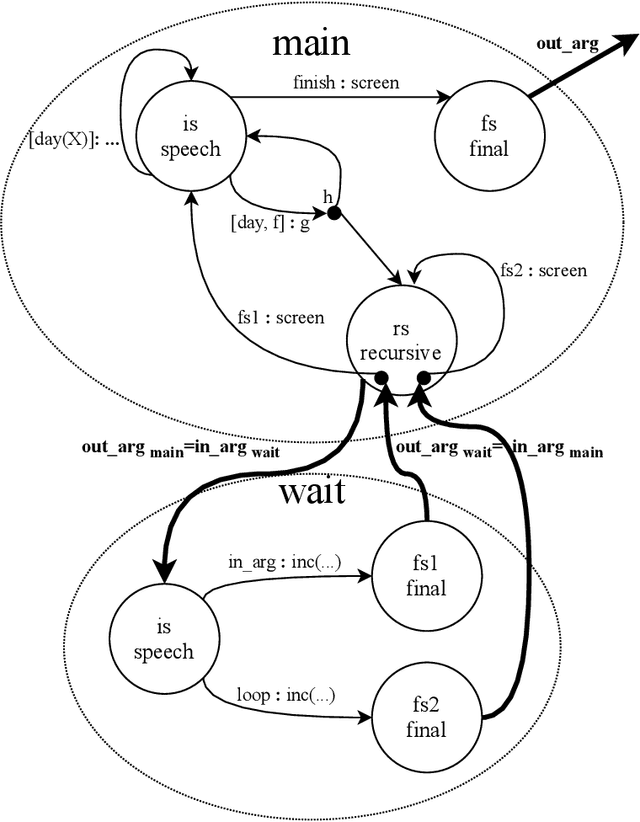

Abstract: Service robots need to reason to support people in daily life situations. Reasoning is an expensive resource that should be used on demand whenever the expectations of the robot do not match the situation of the world and the execution of the task breaks down; in such scenarios the robot must perform the common sense daily life inference cycle consisting of diagnosing what happened, deciding what to do about it, and inducing and executing a plan, repeating this behavior until the service task can be resumed. Here we examine two strategies to implement this cycle: (1) a pipeline strategy involving abduction, decision-making and planning, which we call deliberative inference, and (2) the use of the knowledge and preferences stored in the robot's knowledge-base, which we call conceptual inference. The former involves an explicit definition of a problem space that is explored through heuristic search, and the latter is based on conceptual knowledge, including the human user's preferences, and its representation requires a non-monotonic knowledge-based system. We compare the strengths and limitations of both approaches. We also describe a service robot conceptual model and architecture capable of supporting the daily life inference cycle during the execution of a robotics service task. The model is centered on the declarative specification and interpretation of the robot's communication and task structure. We also show the implementation of this framework in the fully autonomous robot Golem-III. The framework is illustrated with two demonstration scenarios.

An Entropic Associative Memory

Sep 28, 2020

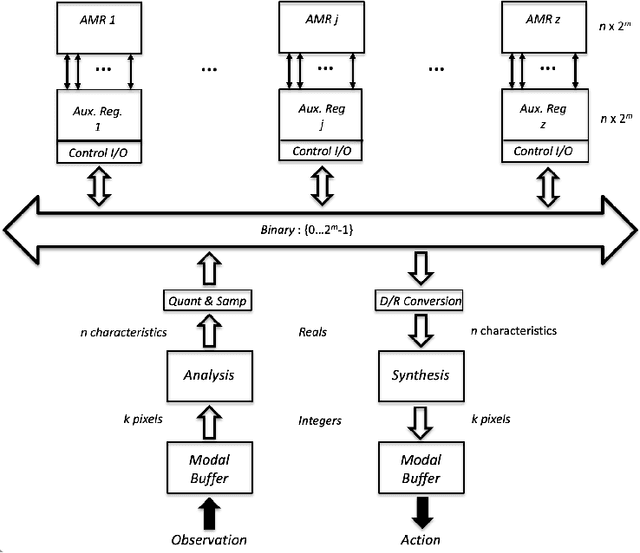

Abstract: Natural memories are associative, declarative and distributed. Symbolic computing memories resemble natural memories in their declarative character, and information can be stored and recovered explicitly; however, they lack the associative and distributed properties of natural memories. Sub-symbolic memories developed within the connectionist or artificial neural networks paradigm are associative and distributed, but are unable to express symbolic structure, and information cannot be stored and retrieved explicitly; hence, they lack the declarative property. To address this dilemma, we use Relational-Indeterminate Computing to model associative memory registers that hold distributed representations of individual objects. This mode of computing has an intrinsic computing entropy which measures the indeterminacy of representations. This parameter determines the operational characteristics of the memory. Associative registers are embedded in an architecture that maps concrete images expressed in modality-specific buffers into abstract representations, and vice versa, and the memory system as a whole fulfills the three properties of natural memories. The system has been used to model a visual memory holding the representations of handwritten digits, and recognition and recall experiments show that there is a range of entropy values, not too low and not too high, in which associative memory registers have a satisfactory performance. The similarity between the cue and the object recovered in memory retrieval operations depends on the entropy of the memory register holding the representation of the corresponding object. The experiments were implemented in a simulation using a standard computer, but a parallel architecture may be built where the memory operations would take a very small number of computing steps.
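One way to make the "computing entropy" of a register concrete is sketched below. The abstract does not state the formula, so this assumes a plausible reading: the register is a binary table with one argument per column, and its entropy is the average over columns of the base-2 logarithm of the number of marked cells. The function name `register_entropy` is hypothetical.

```python
import numpy as np

def register_entropy(table):
    """Average indeterminacy of a binary table, in bits per column.

    Assumption: a column with k marked cells contributes log2(k) bits;
    an empty column is treated as contributing 0 bits.
    """
    counts = np.asarray(table, dtype=bool).sum(axis=0)
    counts = np.maximum(counts, 1)   # avoid log2(0) for empty columns
    return float(np.mean(np.log2(counts)))

# A register holding a single object is fully determinate.
single = np.eye(4, dtype=bool)
# A register with every cell marked is maximally indeterminate.
full = np.ones((4, 4), dtype=bool)

low = register_entropy(single)
high = register_entropy(full)
```

Under this assumed definition, a register holding one object has entropy 0 and a fully marked register with four rows has entropy 2 bits, which brackets the intermediate range the abstract reports as giving satisfactory performance.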

Entropy, Computing and Rationality

Sep 21, 2020

Abstract: Making decisions freely presupposes that there is some indeterminacy in the environment and in the decision making engine. The former is reflected in the behavioral changes due to communicating: few changes indicate rigid environments; productive changes manifest a moderate indeterminacy, but a large communicating effort with few productive changes characterizes a chaotic environment. Hence, communicating, effective decision making and productive behavioral changes are related. The entropy measures the indeterminacy of the environment, and there is an entropy range in which communicating supports effective decision making. This conjecture is referred to here as the Potential Productivity of Decisions. The computing engine that is causal to decision making should also have some indeterminacy. However, computations performed by standard Turing Machines are predetermined. To overcome this limitation, an entropic mode of computing that is called here Relational-Indeterminate is presented. Its implementation in a table format has been used to model an associative memory. The present theory and experiment suggest the Entropy Trade-off: there is an entropy range in which computing is effective, but if the entropy is too low computations are too rigid and if it is too high computations are unfeasible. The entropy trade-off of computing engines corresponds to the potential productivity of decisions of the environment. The theory is referred to an Interaction-Oriented Cognitive Architecture. Memory, perception, action and thought involve a level of indeterminacy, and decision making may be free to that degree. The overall theory supports an ecological view of rationality. The entropy of the brain has been measured in neuroscience studies, and the present theory supports the view that the brain is an entropic machine. The paper concludes with a number of predictions that may be tested empirically.

The Mode of Computing

Mar 25, 2019

Abstract: The Turing Machine is the paradigmatic case of computing machines, but there are others, such as Artificial Neural Networks, Table Computing, Relational-Indeterminate computing and diverse forms of analogical computing, each of which is based on a particular underlying intuition of the phenomenon of computing. This variety can be captured in terms of system levels, re-interpreting and generalizing Newell's hierarchy, which includes the knowledge level at the top and the symbol level immediately below it. In this re-interpretation the knowledge level consists of human knowledge and the symbol level is generalized into a new level that here is called The Mode of Computing. Each computing paradigm uses a particular mode, and a central question for Cognition is what the mode of natural computing is. The mode of computing provides a novel perspective on the phenomena of computing, the representational and non-representational views of cognition, and consciousness.

A Distributed Extension of the Turing Machine

Mar 28, 2018

Abstract: The Turing Machine has two implicit properties that depend on its underlying notion of computing: the format is fully determinate and computations are information preserving. Distributed representations lack these properties and cannot be fully captured by Turing's standard model. To address this limitation, a distributed extension of the Turing Machine is introduced in this paper. In the extended machine, functions and abstractions are expressed extensionally and computations are entropic. The machine is applied to the definition of an associative memory, with its corresponding memory register, recognition and retrieval operations. The memory is tested with an experiment for storing and recognizing handwritten digits, with satisfactory results. The experiment can be seen as a proof of concept that information can be stored and processed effectively in a highly distributed fashion using a symbolic but not fully determinate format. The new machine augments the symbolic mode of computing, with consequences for the way Church's Thesis is understood. The paper concludes with a discussion of some implications of the extended machine for Artificial Intelligence and Cognition.
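The register, recognition and retrieval operations named in this abstract can be illustrated with a binary table. This is a minimal sketch under assumptions the abstract does not spell out: objects are functions with one value per column, registering is a cell-wise OR, recognition checks that every cell of the cue is marked in the memory, and retrieval picks one marked cell per column at random. All function names are hypothetical.

```python
import numpy as np

def to_relation(values, n_rows):
    """Express a function extensionally: one marked cell per column."""
    values = np.asarray(values)
    table = np.zeros((n_rows, values.size), dtype=bool)
    table[values, np.arange(values.size)] = True
    return table

def register(memory, cue):
    """Overlap the cue on the memory (cell-wise OR)."""
    return memory | cue

def recognize(memory, cue):
    """Accept the cue only if every cell it marks is also marked in memory."""
    return bool(np.all(~cue | memory))

def retrieve(memory, cue, rng):
    """Construct an object from the memory, or reject the cue without search."""
    if not recognize(memory, cue):
        return None
    n_cols = memory.shape[1]
    return np.array([rng.choice(np.flatnonzero(memory[:, j])) for j in range(n_cols)])

rng = np.random.default_rng(0)
memory = np.zeros((4, 3), dtype=bool)
memory = register(memory, to_relation([0, 1, 2], 4))
memory = register(memory, to_relation([1, 1, 3], 4))

accepted = recognize(memory, to_relation([1, 1, 2], 4))   # blend of stored objects
rejected = recognize(memory, to_relation([3, 1, 2], 4))   # uses an unmarked cell
```

In this sketch the cue `[1, 1, 2]` is accepted even though it was never stored, because overlapping the two stored objects makes the format not fully determinate; the cue `[3, 1, 2]` is rejected by a single table comparison.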
