Arturo Rodríguez

Deliberative and Conceptual Inference in Service Robots

Dec 13, 2020

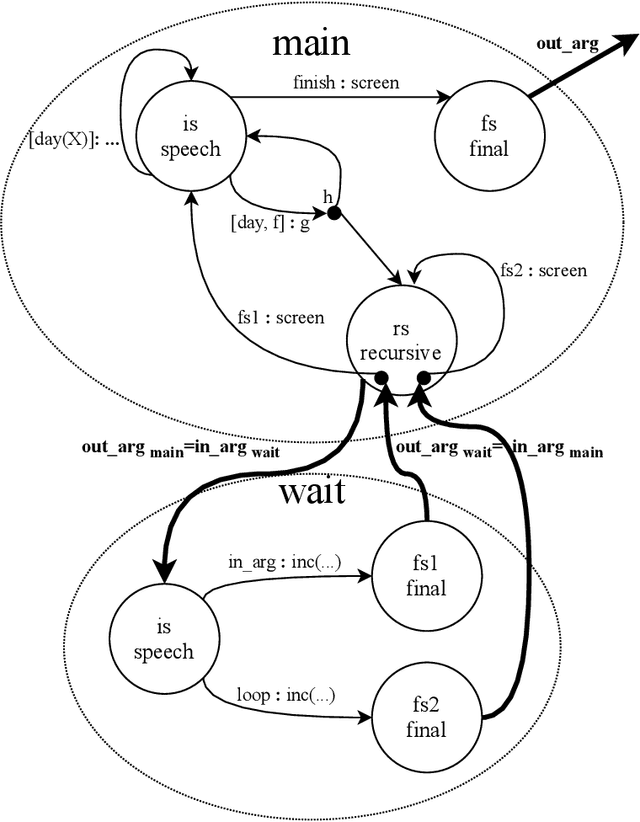

Abstract: Service robots need to reason to support people in daily life situations. Reasoning is an expensive resource that should be used on demand, whenever the robot's expectations do not match the state of the world and the execution of the task breaks down. In such scenarios the robot must perform the common sense daily life inference cycle, which consists of diagnosing what happened, deciding what to do about it, and inducing and executing a plan, repeating this behavior until the service task can be resumed. Here we examine two strategies to implement this cycle: (1) a pipeline strategy involving abduction, decision-making and planning, which we call deliberative inference, and (2) the use of the knowledge and preferences stored in the robot's knowledge base, which we call conceptual inference. The former involves an explicit definition of a problem space that is explored through heuristic search; the latter is based on conceptual knowledge, including the human user's preferences, and its representation requires a non-monotonic knowledge-based system. We compare the strengths and limitations of both approaches. We also describe a service robot conceptual model and architecture capable of supporting the daily life inference cycle during the execution of a robotics service task. The model is centered on the declarative specification and interpretation of the robot's communication and task structure. We also show the implementation of this framework in the fully autonomous robot Golem-III. The framework is illustrated with two demonstration scenarios.
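As an illustration only, the daily life inference cycle described in the abstract (diagnose, decide, plan, execute, repeat until the task can be resumed) could be sketched as the loop below. This is a minimal sketch under stated assumptions, not the Golem-III implementation: the function names `daily_life_inference_cycle`, `expectation_met`, `diagnose`, `decide`, `plan`, `execute`, the `max_rounds` bound, and the toy shelf scenario in `demo` are all hypothetical.

```python
from typing import Callable, List


def daily_life_inference_cycle(
    expectation_met: Callable[[], bool],
    diagnose: Callable[[], str],
    decide: Callable[[str], str],
    plan: Callable[[str], List[str]],
    execute: Callable[[str], None],
    max_rounds: int = 10,  # hypothetical safety bound, not from the paper
) -> bool:
    """Repeat diagnose -> decide -> plan -> execute until expectations hold."""
    for _ in range(max_rounds):
        if expectation_met():
            return True  # the service task can be resumed
        diagnosis = diagnose()        # what happened?
        goal = decide(diagnosis)      # what to do about it?
        for action in plan(goal):     # induce a plan for the chosen goal
            execute(action)           # carry out each step of the plan
    return expectation_met()


def demo():
    """Toy scenario (hypothetical): an object is missing from its shelf."""
    world = {"coke_on_shelf": False}
    log: List[str] = []

    def expectation_met() -> bool:
        return world["coke_on_shelf"]

    def diagnose() -> str:
        return "coke was misplaced"

    def decide(diagnosis: str) -> str:
        return "put coke on shelf"

    def plan(goal: str) -> List[str]:
        return ["find coke", "take coke", "deliver coke to shelf"]

    def execute(action: str) -> None:
        log.append(action)
        if action == "deliver coke to shelf":
            world["coke_on_shelf"] = True

    resumed = daily_life_inference_cycle(
        expectation_met, diagnose, decide, plan, execute
    )
    return resumed, log
```

In this sketch the reasoning steps run only when an expectation fails, which mirrors the abstract's point that inference should be used on demand. Either strategy named in the abstract, deliberative or conceptual, could in principle sit behind the `diagnose`, `decide`, and `plan` callables.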
