Ludek Zalud

Localizing Multiple Radiation Sources Actively with a Particle Filter

May 24, 2023

Abstract:The article discusses the localization of radiation sources whose number and other relevant parameters are not known in advance. The data collection is ensured by an autonomous mobile robot that performs a survey in a defined region of interest populated with static obstacles. The measurement trajectory is information-driven rather than pre-planned. The localization exploits a regularized particle filter estimating the sources' parameters continuously. The dynamic robot control switches between two modes, one attempting to minimize the Shannon entropy and the other aiming to reduce the variance of expected measurements in unexplored parts of the target area; both of the modes maintain safe clearance from the obstacles. The performance of the algorithms was tested in a simulation study based on real-world data acquired previously from three radiation sources exhibiting various activities. Our approach reduces the time necessary to explore the region and to find the sources by approximately 40 %; at present, however, the method is unable to reliably localize sources that have a relatively low intensity. In this context, additional research has been planned to increase the credibility and robustness of the procedure and to improve the robotic platform autonomy.
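The abstract does not say how the Shannon entropy is evaluated. As a minimal sketch, assuming it is taken over the normalized particle weights of the filter, the quantity an entropy-minimizing control mode would try to reduce could be computed as follows. The function names and the example weights are hypothetical and are not taken from the paper.

```python
import math


def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return [w / total for w in weights]


def shannon_entropy(weights):
    """Shannon entropy (in nats) of a discrete weight distribution.

    Assumption: the belief is represented by particle weights; zero
    weights contribute nothing to the sum.
    """
    p = normalize(weights)
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


# Hypothetical weights: a uniform belief is maximally uncertain,
# a peaked belief (one dominant particle) has lower entropy.
uniform = shannon_entropy([1.0, 1.0, 1.0, 1.0])
peaked = shannon_entropy([0.97, 0.01, 0.01, 0.01])
```

Under this assumption, a measurement position that is expected to concentrate the weights on fewer particles lowers the entropy, which is what makes the trajectory information-driven.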

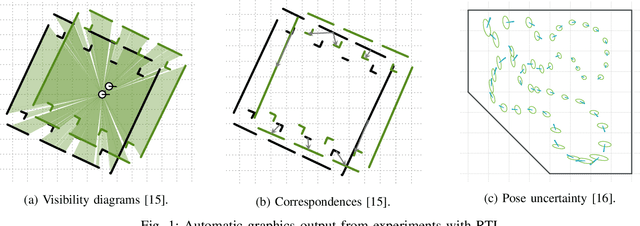

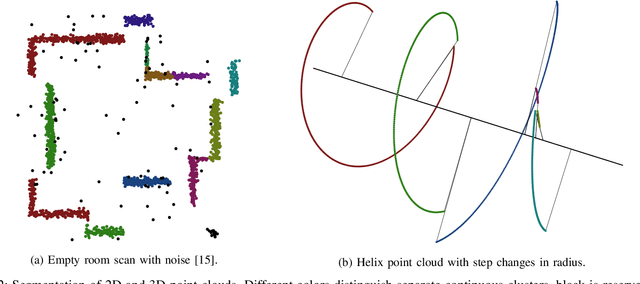

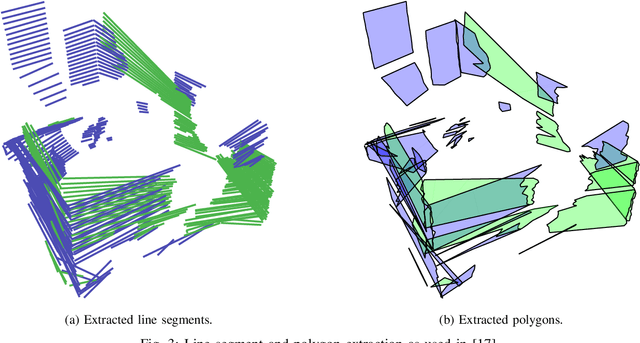

Robotic Template Library

Jul 01, 2021

Abstract:Robotic Template Library (RTL) is a set of tools for geometry and point cloud processing, especially in robotic applications. The software package covers basic objects such as vectors, line segments, quaternions, and rigid transformations; however, its main contribution lies in the more advanced modules: the segmentation module for batch or stream clustering of point clouds, the fast vectorization module for approximation of continuous point clouds by geometric objects of higher grade, and the LaTeX export module enabling automated generation of high-quality visual outputs. It is a header-only library written in C++17, uses the Eigen library as a linear algebra back-end, and is designed with high computational performance in mind. RTL can be used in robotic tasks such as motion planning, map building, object recognition, and many others, but the point cloud processing utilities are general enough to be employed in any field touching object reconstruction and computer vision applications as well.

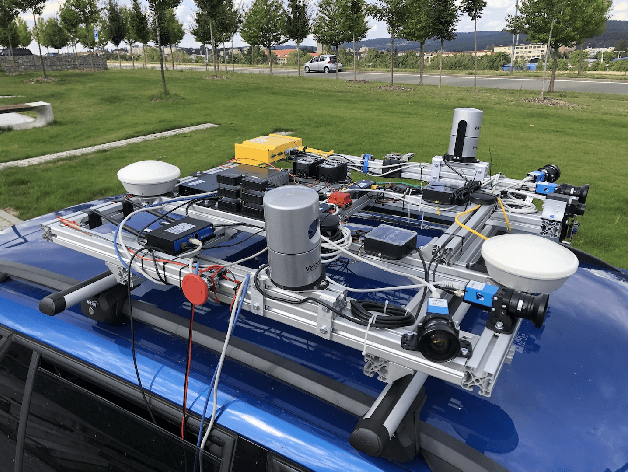

Brno Urban Dataset: Winter Extension

Jun 05, 2021

Abstract:Research on autonomous driving is advancing dramatically and requires new data and techniques to progress even further. To reflect this pressure, we present an extension of our recent work, the Brno Urban Dataset (BUD). The new data focus on winter conditions in various snow-covered environments and feature additional LiDAR and radar sensors for object detection in front of the vehicle. The improvement affects the old data as well: we provide YOLO detection annotations for all old RGB images in the dataset. The detections are also transferred by our original algorithm into the infrared (IR) images captured by the thermal camera. To the best of our knowledge, this makes the dataset the largest source of machine-annotated thermal images currently available. The dataset is published under the MIT license at https://github.com/Robotics-BUT/Brno-Urban-Dataset.

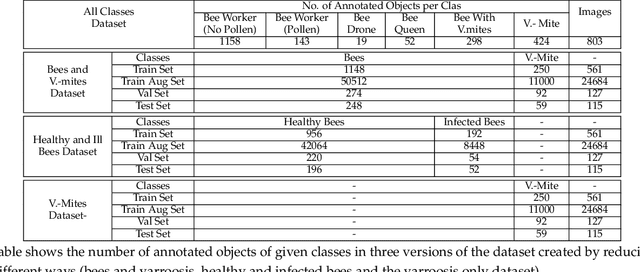

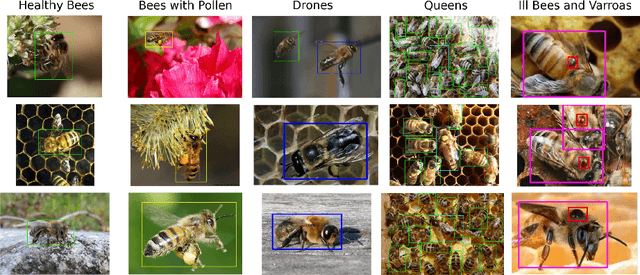

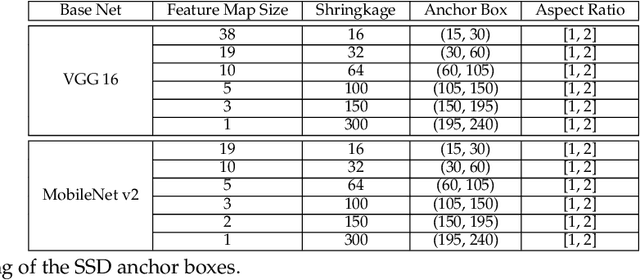

Visual diagnosis of the Varroa destructor parasitic mite in honeybees using object detector techniques

Feb 26, 2021

Abstract:The Varroa destructor mite is one of the most dangerous honey bee (Apis mellifera) parasites worldwide, and bee colonies have to be monitored regularly in order to control its spread. Here we present an object-detector-based method for monitoring the health state of bee colonies. This method has the potential for online measurement and processing. In our experiment, we compare the YOLO and SSD object detectors along with the Deep SVDD anomaly detector. On a custom dataset with 600 ground-truth images of healthy and infected bees in various scenes, the detectors reached F1 scores of up to 0.874 in infected bee detection and up to 0.727 in detection of the Varroa destructor mite itself. The results demonstrate the potential of this approach, which will later be used in a real-time, computer-vision-based honey bee inspection system. To the best of our knowledge, this study is the first to use object detectors for this purpose. We expect that the performance of these object detectors will enable us to inspect the health status of honey bee colonies.
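For readers unfamiliar with the metric, the F1 scores quoted above are the harmonic mean of precision and recall. The sketch below shows the standard definition computed from detection counts. The function name and the counts are invented for illustration only and are not results from the study.

```python
def f1_score(true_pos, false_pos, false_neg):
    """F1 score: harmonic mean of precision and recall.

    Returns 0.0 when precision or recall is undefined (no predicted
    or no actual positives), a common convention.
    """
    predicted = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for a detector (illustrative, not from the paper):
# 80 correct detections, 10 false alarms, 20 missed objects.
example_f1 = f1_score(true_pos=80, false_pos=10, false_neg=20)
```

Because it is a harmonic mean, F1 stays low unless both precision and recall are high, which is why it is a common single-number summary for detectors.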

Atlas Fusion -- Modern Framework for Autonomous Agent Sensor Data Fusion

Oct 22, 2020

Abstract:In this paper, we present our new sensor fusion framework for self-driving cars and other autonomous robots. We have designed our framework as a universal and scalable platform for building a robust 3D model of the agent's surrounding environment by fusing data from a wide range of sensors into a data model that can serve as a basis for decision-making and planning algorithms. Our software currently covers the data fusion of RGB and thermal cameras, 3D LiDARs, a 3D IMU, and GNSS positioning. The framework covers a complete pipeline: data loading, filtering, preprocessing, environment model construction, visualization, and data storage. The architecture allows the community to modify the existing setup or to extend our solution with new ideas. The entire software is fully compatible with ROS (Robot Operating System), which allows the framework to cooperate with other ROS-based software. The source code is fully available as open source under the MIT license. See https://github.com/Robotics-BUT/Atlas-Fusion.

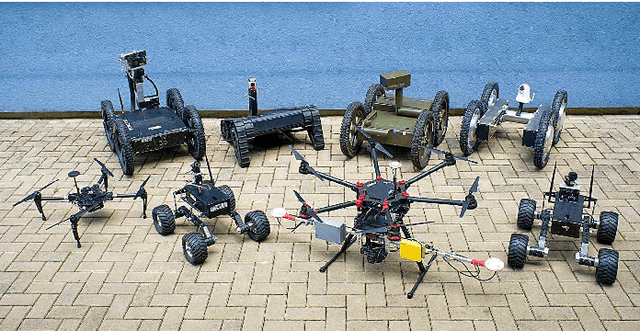

Using an Automated Heterogeneous Robotic System for Radiation Surveys

Jun 29, 2020

Abstract:During missions involving radiation exposure, unmanned robotic platforms may embody a valuable tool, especially thanks to their capability of replacing human operators in certain tasks to eliminate the health risks associated with such an environment. Moreover, rapid development of the technology allows us to increase the automation rate, making the human operator generally less important within the entire process. This article presents a multi-robotic system designed for highly automated radiation mapping and source localization. Our approach includes a three-phase procedure comprising sequential deployment of two diverse platforms, namely, an unmanned aircraft system (UAS) and an unmanned ground vehicle (UGV), to perform aerial photogrammetry, aerial radiation mapping, and terrestrial radiation mapping. The central idea is to produce a sparse dose rate map of the entire study site via the UAS and, subsequently, to perform detailed UGV-based mapping in limited radiation-contaminated regions. To accomplish these tasks, we designed numerous methods and data processing algorithms to facilitate, for example, digital elevation model (DEM)-based terrain following for the UAS, automatic selection of the regions of interest, obstacle map-based UGV trajectory planning, and source localization. The overall usability of the multi-robotic system was demonstrated by means of a one-day, authentic experiment, namely, a fictitious car accident including the loss of several radiation sources. The ability of the system to localize radiation hotspots and individual sources has been verified.

Brno Urban Dataset -- The New Data for Self-Driving Agents and Mapping Tasks

Sep 15, 2019

Abstract:Autonomous driving is a dynamically growing field of research, where the quality and amount of experimental data are critical. Although several rich datasets are available these days, the demands of researchers and the technical possibilities are evolving. In this paper, we present a new dataset recorded in Brno, Czech Republic. It offers data from four WUXGA cameras, two 3D LiDARs, an inertial measurement unit, an infrared camera, and especially a differential RTK GNSS receiver with centimetre accuracy, which, to the best knowledge of the authors, is not available in any other public dataset so far. In addition, all the data are timestamped with sub-millisecond precision to allow a wider range of applications. At the time of publishing this paper, recordings of more than 350 km of rides in varying environments are shared at: https://github.com/RoboticsBUT/Brno-Urban-Dataset.
