Lucas Weitzendorf

Multi-Head RAG: Solving Multi-Aspect Problems with LLMs

Jun 07, 2024

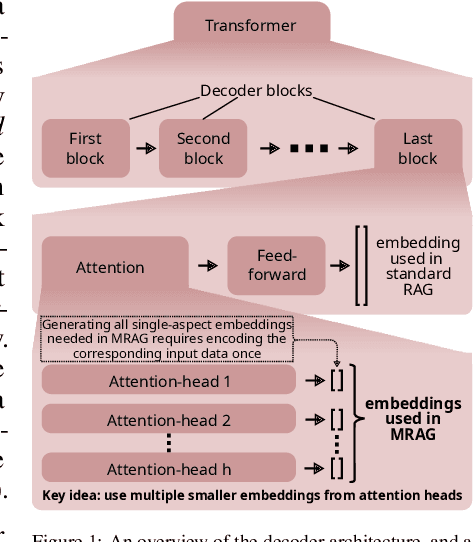

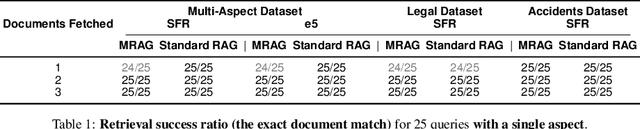

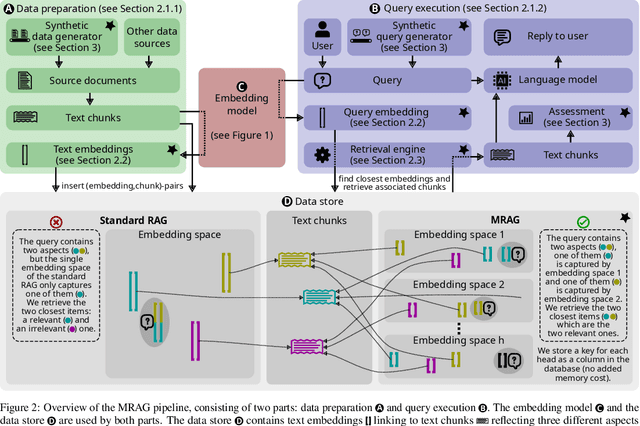

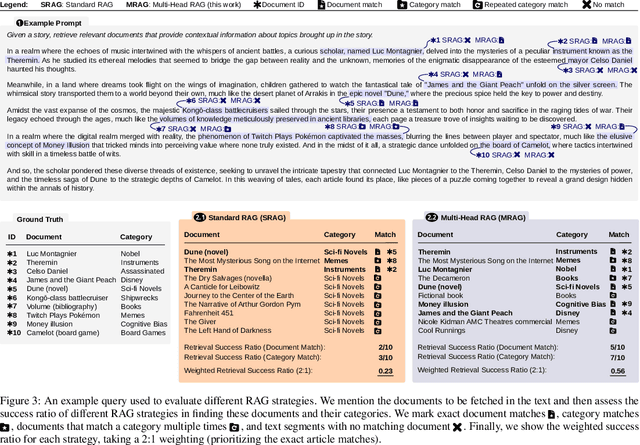

Abstract: Retrieval Augmented Generation (RAG) enhances the abilities of Large Language Models (LLMs) by enabling the retrieval of documents into the LLM context to provide more accurate and relevant responses. Existing RAG solutions do not focus on queries that may require fetching multiple documents with substantially different contents. Such queries occur frequently, but are challenging because the embeddings of these documents may be distant in the embedding space, making it hard to retrieve them all. This paper introduces Multi-Head RAG (MRAG), a novel scheme designed to address this gap with a simple yet powerful idea: leveraging activations of the Transformer's multi-head attention layer, instead of the decoder layer, as keys for fetching multi-aspect documents. The driving motivation is that different attention heads can learn to capture different data aspects. Harnessing the corresponding activations results in embeddings that represent various facets of data items and queries, improving the retrieval accuracy for complex queries. We provide an evaluation methodology and metrics, synthetic datasets, and real-world use cases to demonstrate MRAG's effectiveness, showing improvements of up to 20% in relevance over standard RAG baselines. MRAG can be seamlessly integrated with existing RAG frameworks and benchmarking tools like RAGAS as well as different classes of data stores.
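The core idea, using per-head activations as separate retrieval keys, can be illustrated with a small sketch. This is not the authors' implementation: the function names, the cosine scoring, and the simple vote-counting merge are all assumptions made for illustration, and the "embeddings" are assumed to be concatenated per-head vectors of equal width.

```python
import numpy as np

def split_heads(embedding, num_heads):
    """Split a concatenated multi-head vector into equal per-head chunks."""
    return np.split(np.asarray(embedding, dtype=float), num_heads)

def cosine(a, b):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

def multi_head_retrieve(query_emb, doc_embs, num_heads, per_head_k=1):
    """Retrieve documents head by head, then merge by vote count.

    Hypothetical merge rule: each head votes for its per_head_k most
    similar documents; documents are returned by descending votes.
    """
    q_heads = split_heads(query_emb, num_heads)
    d_heads = [split_heads(d, num_heads) for d in doc_embs]
    votes = {}
    for h in range(num_heads):
        sims = [cosine(q_heads[h], d[h]) for d in d_heads]
        best = np.argsort(sims)[::-1][:per_head_k]
        for i in best:
            votes[int(i)] = votes.get(int(i), 0) + 1
    return sorted(votes, key=lambda i: (-votes[i], i))

# Toy data: two heads of width 2; each document matches the query in one head only.
query = [1, 0, 0, 1]
docs = [[1, 0, 1, 0], [0, 1, 0, 1], [-1, 0, 0, -1]]
result = multi_head_retrieve(query, docs, num_heads=2)
```

In this toy case each head surfaces a different document, which is the multi-aspect behaviour the abstract describes; a single similarity over the full vector would score the two matching documents identically and could not tell which aspect each one covers.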

From a Point Cloud to a Simulation Model: Bayesian Segmentation and Entropy based Uncertainty Estimation for 3D Modelling

Feb 04, 2021

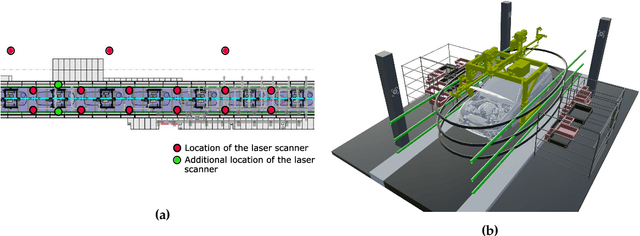

Abstract: The 3D modelling of indoor environments and the generation of process simulations play an important role in factory and assembly planning. In brownfield planning cases, existing data are often outdated and incomplete, especially for older plants, which were mostly planned in 2D. Thus, current environment models cannot be generated directly on the basis of existing data, and a holistic approach to building such a factory model in a highly automated fashion is mostly non-existent. Major steps in generating an environment model in a production plant include data collection and pre-processing, object identification, and pose estimation. In this work, we elaborate a methodical workflow, which starts with the digitalization of large-scale indoor environments and ends with the generation of a static environment or simulation model. The object identification step is realized using a Bayesian neural network capable of point cloud segmentation. We elaborate how the information on network uncertainty generated by a Bayesian segmentation framework can be used to build a more accurate environment model. The steps of data collection and point cloud segmentation as well as the resulting model accuracy are evaluated on a real-world data set collected at the assembly line of a large-scale automotive production plant. The segmentation network is further evaluated on the publicly available Stanford Large-Scale 3D Indoor Spaces data set. The Bayesian segmentation network clearly surpasses the performance of the frequentist baseline and allows us to increase the accuracy of the model placement in a simulation scene considerably.
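One common way to turn a Bayesian segmentation network's outputs into a per-point uncertainty is the predictive entropy of the averaged class probabilities. The sketch below assumes the network yields several stochastic softmax samples per point; the array layout, function names, and thresholding step are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def predictive_entropy(mc_probs):
    """Entropy (in nats) of the mean class distribution for each point.

    mc_probs: array of shape (num_samples, num_points, num_classes)
    holding softmax outputs from repeated stochastic forward passes.
    """
    mean_probs = np.mean(np.asarray(mc_probs, dtype=float), axis=0)
    return -np.sum(mean_probs * np.log(mean_probs + 1e-12), axis=-1)

def confident_mask(entropy, threshold):
    """Hypothetical filter: keep points whose entropy is at most threshold."""
    return entropy <= threshold

# Toy data: 2 samples, 2 points, 2 classes.
# Point 0 is always class 0; point 1 flips between the classes.
mc_probs = np.array([
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
])
entropy = predictive_entropy(mc_probs)
mask = confident_mask(entropy, threshold=0.1)
```

Points where the samples disagree receive high entropy and could be excluded before fitting object poses, which is one plausible way uncertainty might improve model placement.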
