Christina Petschnigg

From a Point Cloud to a Simulation Model: Bayesian Segmentation and Entropy based Uncertainty Estimation for 3D Modelling

Feb 04, 2021

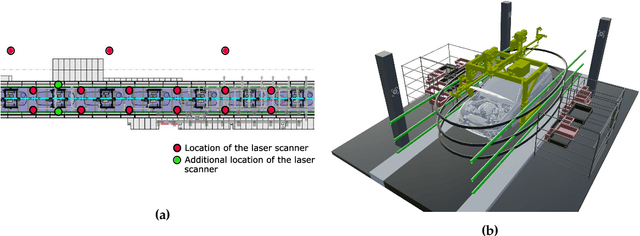

Abstract: The 3D modelling of indoor environments and the generation of process simulations play an important role in factory and assembly planning. In brownfield planning cases, existing data are often outdated and incomplete, especially for older plants, which were mostly planned in 2D. Thus, current environment models cannot be generated directly from existing data, and a holistic approach to building such a factory model in a highly automated fashion is mostly non-existent. Major steps in generating an environment model of a production plant include data collection and pre-processing, object identification, and pose estimation. In this work, we elaborate a methodical workflow that starts with the digitalization of large-scale indoor environments and ends with the generation of a static environment or simulation model. The object identification step is realized using a Bayesian neural network capable of point cloud segmentation. We elaborate how the uncertainty information produced by a Bayesian segmentation framework can be used to build a more accurate environment model. The steps of data collection and point cloud segmentation, as well as the resulting model accuracy, are evaluated on a real-world data set collected at the assembly line of a large-scale automotive production plant. The segmentation network is further evaluated on the publicly available Stanford Large-Scale 3D Indoor Spaces data set. The Bayesian segmentation network clearly surpasses the performance of the frequentist baseline and allows us to increase the accuracy of the model placement in a simulation scene considerably.
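The abstract does not spell out how the entropy-based uncertainty named in the title is computed. A common way to obtain such an estimate, shown here only as a minimal sketch and not as the authors' implementation, is to average the class probabilities over several stochastic forward passes of the network and take the entropy of the averaged distribution for each point. The function names `predictive_entropy` and `confident_mask`, the array layout `(T, N, C)`, and the threshold of 0.5 are assumptions made for illustration.

```python
import numpy as np

def predictive_entropy(probs):
    """Per-point predictive entropy from sampled class probabilities.

    probs: array of shape (T, N, C) holding softmax outputs from T
    stochastic forward passes for N points and C classes (assumed layout).
    Returns the mean probabilities (N, C) and the entropy (N,).
    """
    mean_probs = probs.mean(axis=0)
    eps = 1e-12  # avoids log(0) for classes with zero probability
    entropy = -np.sum(mean_probs * np.log(mean_probs + eps), axis=1)
    return mean_probs, entropy

def confident_mask(entropy, num_classes, threshold=0.5):
    """Boolean mask of points whose entropy, normalized by log(C),
    lies below a hypothetical threshold."""
    return entropy / np.log(num_classes) < threshold

# Toy data: random logits stand in for real network outputs.
rng = np.random.default_rng(0)
T, N, C = 10, 5, 4
logits = rng.normal(size=(T, N, C))
probs = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)

mean_probs, entropy = predictive_entropy(probs)
labels = mean_probs.argmax(axis=1)   # predicted class per point
keep = confident_mask(entropy, C)    # points considered reliable
print(labels, entropy.round(3), keep)
```

Points with high entropy could then be excluded before a downstream step such as fitting object poses, which is one plausible way uncertainty information might improve model placement; the threshold would need tuning on real data.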

Uncertainty Estimation in Deep Neural Networks for Point Cloud Segmentation in Factory Planning

Dec 13, 2020

Abstract: The digital factory undoubtedly offers great potential for future production systems in terms of efficiency and effectiveness. A key step towards realizing the digital copy of a real factory is understanding complex indoor environments on the basis of 3D data. In order to generate an accurate factory model including the major components, i.e. building parts, product assets and process details, the 3D data collected during digitalization can be processed with advanced deep learning methods. In this work, we propose a fully Bayesian and an approximate Bayesian neural network for point cloud segmentation. This allows us to analyze how different ways of estimating uncertainty in these networks improve segmentation results on raw 3D point clouds. We achieve superior model performance for both the Bayesian and the approximate Bayesian model compared to the frequentist one. This performance difference becomes even more striking when the networks' uncertainty is incorporated into their predictions. For evaluation, we use the scientific data set S3DIS as well as a data set collected by the authors at a German automotive production plant. The methods proposed in this work lead to more accurate segmentation results, and the incorporation of uncertainty information makes this approach especially applicable to safety-critical applications.
