Luca Pasquini

Analysis of the MICCAI Brain Tumor Segmentation -- Metastases (BraTS-METS) 2025 Lighthouse Challenge: Brain Metastasis Segmentation on Pre- and Post-treatment MRI

Apr 16, 2025

Abstract:Despite continuous advancements in cancer treatment, brain metastatic disease remains a significant complication of primary cancer and is associated with an unfavorable prognosis. One approach for improving diagnosis, management, and outcomes is to implement algorithms based on artificial intelligence for the automated segmentation of both pre- and post-treatment MRI brain images. Such algorithms rely on volumetric criteria for lesion identification and treatment response assessment, which are still not available in clinical practice. Therefore, it is critical to establish tools for rapid volumetric segmentation methods that can be translated to clinical practice and that are trained on high-quality annotated data. The BraTS-METS 2025 Lighthouse Challenge aims to address this critical need by establishing inter-rater and intra-rater variability in dataset annotation, generating high-quality annotated datasets from four individual instances of segmentation by neuroradiologists, each recorded on video (two instances "from scratch" and two instances after AI pre-segmentation). This high-quality annotated dataset will be used for the testing phase of the 2025 Lighthouse Challenge and will be publicly released at the completion of the challenge. The 2025 Lighthouse Challenge will also release the 2023 and 2024 segmented datasets that were annotated using an established pipeline of pre-segmentation, student annotation, checking by two neuroradiologists, and finalization by one neuroradiologist. It builds upon its previous edition by including post-treatment cases in the dataset. Using these high-quality annotated datasets, the 2025 Lighthouse Challenge plans to test benchmark algorithms for automated segmentation of pre- and post-treatment brain metastases (BM), trained on diverse and multi-institutional datasets of MRI images obtained from patients with brain metastases.

Deep neuroevolution to predict primary brain tumor grade from functional MRI adjacency matrices

Nov 26, 2022

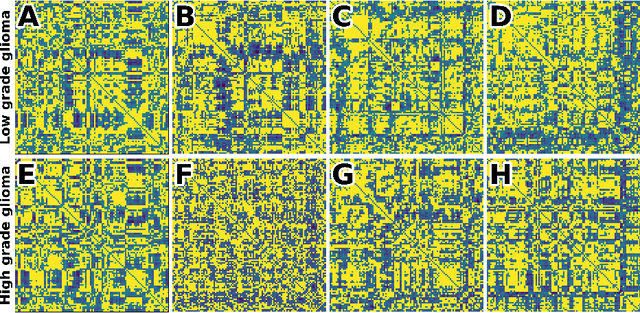

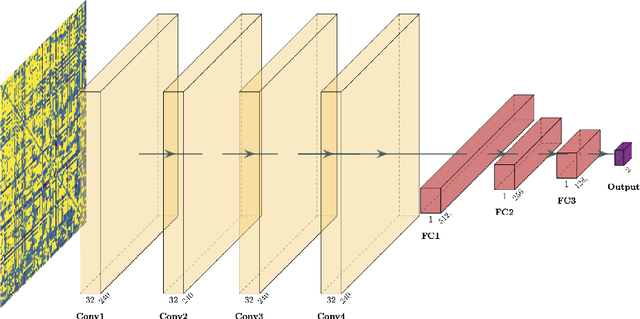

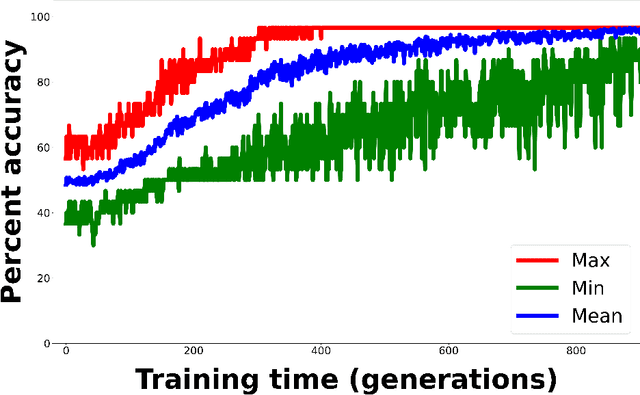

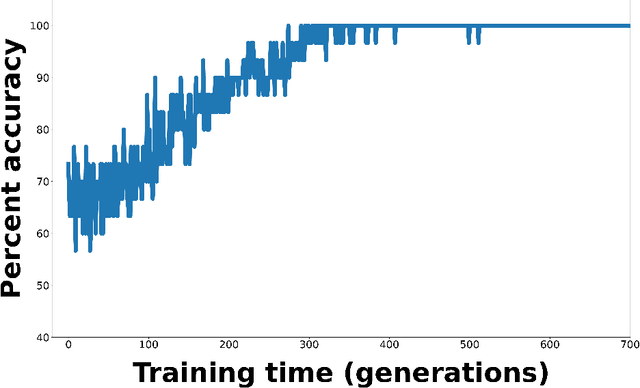

Abstract:Whereas MRI produces anatomic information about the brain, functional MRI (fMRI) tells us about neural activity within the brain, including how various regions communicate with each other. The full chorus of conversations within the brain is summarized elegantly in the adjacency matrix. Although information-rich, adjacency matrices typically provide little in the way of intuition. Whereas trained radiologists viewing anatomic MRI can readily distinguish between different kinds of brain cancer, a similar determination using adjacency matrices would exceed any expert's grasp. Artificial intelligence (AI) in radiology usually analyzes anatomic imaging, providing assistance to radiologists. For non-intuitive data types such as adjacency matrices, AI moves beyond the role of helpful assistant, emerging as indispensable. We sought here to show that AI can learn to discern between two important brain tumor types, high-grade glioma (HGG) and low-grade glioma (LGG), based on adjacency matrices. We trained a convolutional neural network (CNN) with the method of deep neuroevolution (DNE), because of the latter's recent promising results; DNE has produced remarkably accurate CNNs even when relying on small and noisy training sets, or performing nuanced tasks. After training on just 30 adjacency matrices, our CNN could tell HGG apart from LGG with perfect testing set accuracy. Saliency maps revealed that the network learned highly sophisticated and complex features to achieve its success. Hence, we have shown that it is possible for AI to recognize brain tumor type from functional connectivity. In future work, we will apply DNE to other noisy and somewhat cryptic forms of medical data, including further explorations with fMRI.
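For readers unfamiliar with adjacency matrices, the following is a minimal sketch of one common way to build one: pairwise Pearson correlation between regional time series. The abstract does not state how the matrices were computed, so the correlation choice, the function names `pearson` and `adjacency_matrix`, and the toy signals are all assumptions made for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length signals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    # Note: a constant signal has zero norm and would raise ZeroDivisionError.
    return cov / (norm_x * norm_y)


def adjacency_matrix(series):
    """Square matrix whose (i, j) entry is the correlation of regions i and j."""
    n = len(series)
    return [
        [1.0 if i == j else pearson(series[i], series[j]) for j in range(n)]
        for i in range(n)
    ]


# Toy signals for three hypothetical brain regions (not real fMRI data).
signals = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],  # rises in step with region 0
    [4.0, 3.0, 2.0, 1.0],  # falls as region 0 rises
]
adj = adjacency_matrix(signals)
for row in adj:
    print([round(value, 2) for value in row])
```

Each row describes how strongly one region co-varies with every other region, which is why the matrix is information-rich but hard to read by eye.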

Deep reinforcement learning for fMRI prediction of Autism Spectrum Disorder

Jun 17, 2022

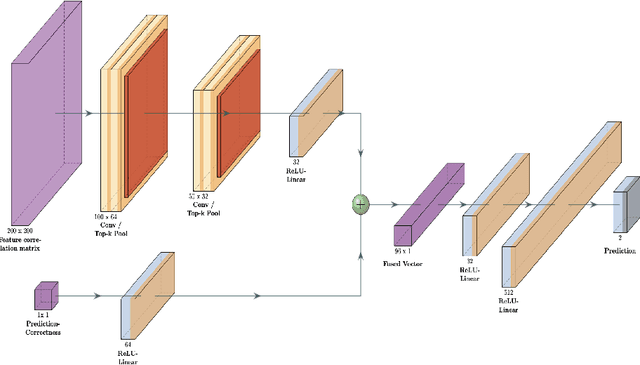

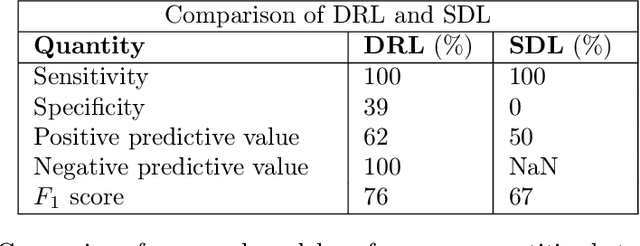

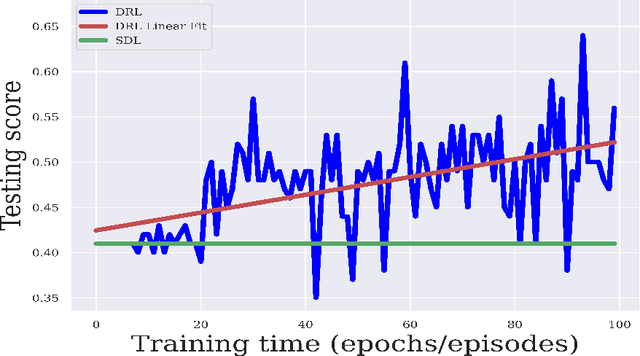

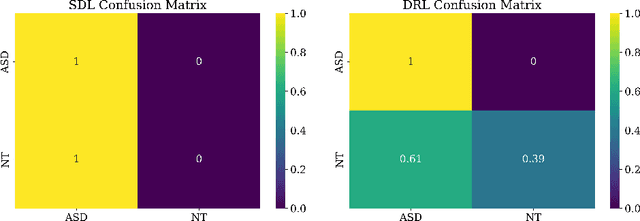

Abstract:Purpose: Because functional MRI (fMRI) data sets are in general small, we sought a data-efficient approach to resting state fMRI classification of autism spectrum disorder (ASD) versus neurotypical (NT) controls. We hypothesized that a Deep Reinforcement Learning (DRL) classifier could learn effectively on a small fMRI training set. Methods: We trained a DRL classifier on 100 graph-label pairs from the Autism Brain Imaging Data Exchange (ABIDE) database. For comparison, we trained a Supervised Deep Learning (SDL) classifier on the same training set. Results: DRL significantly outperformed SDL, with a p-value of 2.4 x 10^(-7). DRL achieved superior results for a variety of classifier performance metrics, including an F1 score of 76, versus 67 for SDL. Whereas SDL quickly overfit the training data, DRL learned in a progressive manner that generalized to the separate testing set. Conclusion: DRL can learn to classify ASD versus NT in a data-efficient manner, doing so for a small training set. Future work will involve optimizing the neural network for data efficiency and applying the approach to other fMRI data sets, namely for brain cancer patients.
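The F1 scores quoted above appear to be on a 0-100 scale. As a reminder of what the metric measures, here is a minimal sketch that computes F1 from confusion-matrix counts. The function name `f1_score` and the counts are hypothetical and are not taken from the paper.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 is the harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for illustration only.
score = f1_score(tp=8, fp=2, fn=2)
print(f"F1 = {100 * score:.0f}")
```

Because F1 ignores true negatives, it is a reasonable summary when the positive class (here, ASD) is the one of interest.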

Deep learning can differentiate IDH-mutant from IDH-wild type GBM

Feb 24, 2021

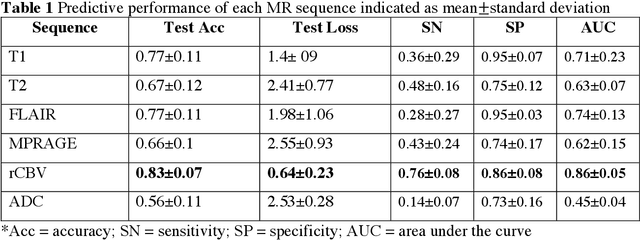

Abstract:Background: Distinction of IDH mutant and wildtype GBMs is challenging on MRI, since conventional imaging shows considerable overlap. While a few studies have employed deep learning in a mixed low/high grade glioma population, a GBM-specific model is still lacking in the literature. Our objective was to develop a deep-learning model for IDH prediction in GBM by using Convolutional Neural Networks (CNN) on multiparametric MRI. Methods: We included 100 adult patients with pathologically proven GBM and IDH testing. MRI data included: morphologic sequences, rCBV and ADC maps. Tumor area was obtained by a bounding box function on the axial slice with widest tumor extension on T2 images and was projected on every sequence. Data was split into training and test (80:20) sets. A 4-block 2D CNN architecture was implemented for IDH prediction on every MRI sequence. IDH mutation probability was calculated with a softmax activation function from the last dense layer. Highest performance was calculated accounting for model accuracy and categorical cross-entropy loss (CCEL) in the test cohort. Results: Our model achieved the following performance: T1 (accuracy 77%, CCEL 1.4), T2 (accuracy 67%, CCEL 2.41), FLAIR (accuracy 77%, CCEL 1.98), MPRAGE (accuracy 66%, CCEL 2.55), rCBV (accuracy 83%, CCEL 0.64). ADC achieved lower performance. Conclusion: We built a GBM-tailored deep-learning model for IDH mutation prediction, achieving accuracy of 83% with rCBV maps. High predictivity of perfusion images may reflect the known correlation between IDH, hypoxia inducible factor (HIF) and neoangiogenesis. This model may set a path for non-invasive evaluation of IDH mutation in GBM.
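The two quantities reported per sequence, softmax probability and categorical cross-entropy loss (CCEL), can be illustrated with a minimal standard-library sketch. This is not the authors' implementation: the class order, the logit values, and the function names are assumptions chosen for the example.

```python
import math


def softmax(logits):
    """Turn raw scores from a final dense layer into probabilities."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(probs, true_index, eps=1e-12):
    """Negative log-probability assigned to the correct class."""
    return -math.log(max(probs[true_index], eps))


# Hypothetical logits for the classes [IDH-wildtype, IDH-mutant].
logits = [0.3, 1.2]
probs = softmax(logits)
loss = categorical_cross_entropy(probs, true_index=1)
print(f"P(mutant) = {probs[1]:.3f}, loss = {loss:.3f}")
```

A lower CCEL means the model puts more probability on the correct class, which is why a sequence can be compared on both accuracy and loss, as in the results above.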

Comparison of Machine Learning Classifiers to Predict Patient Survival and Genetics of GBM: Towards a Standardized Model for Clinical Implementation

Feb 10, 2021

Abstract:Radiomic models have been shown to outperform clinical data for outcome prediction in glioblastoma (GBM). However, clinical implementation is limited by lack of parameter standardization. We aimed to compare nine machine learning classifiers, with different optimization parameters, to predict overall survival (OS), isocitrate dehydrogenase (IDH) mutation, O-6-methylguanine-DNA-methyltransferase (MGMT) promoter methylation, epidermal growth factor receptor (EGFR) VII amplification and Ki-67 expression in GBM patients, based on radiomic features from conventional and advanced MR. 156 adult patients with pathologic diagnosis of GBM were included. Three tumoral regions were analyzed: contrast-enhancing tumor, necrosis and non-enhancing tumor, selected by manual segmentation. Radiomic features were extracted with a custom version of Pyradiomics, and selected through the Boruta algorithm. A Grid Search algorithm was applied when computing K-fold cross-validation (K=10) repeated 4 times to get the highest mean and lowest spread of accuracy. Once optimal parameters were identified, model performances were assessed in terms of Area Under The Curve-Receiver Operating Characteristics (AUC-ROC). Metaheuristic and ensemble classifiers showed the best performance across tasks. xGB obtained maximum accuracy for OS (74.5%), AB for IDH mutation (88%), MGMT methylation (71.7%), Ki-67 expression (86.6%), and EGFR amplification (81.6%). Best performing features shed light on possible correlations between MR and tumor histology.
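The tuning procedure described above (grid search over repeated K-fold cross-validation, K=10, 4 repeats) can be sketched with the standard library alone. This is a sketch under stated assumptions, not the authors' pipeline: `repeated_kfold`, `grid_search`, and `toy_evaluate` are hypothetical names, the `depth` parameter is invented, and ranking by highest mean with ties broken by lowest spread is one possible reading of "highest mean and lowest spread".

```python
import random
import statistics


def repeated_kfold(n_samples, k=10, repeats=4, seed=0):
    """Yield (train, test) index lists for `repeats` shuffled K-fold splits."""
    rng = random.Random(seed)
    for _ in range(repeats):
        order = list(range(n_samples))
        rng.shuffle(order)
        folds = [order[i::k] for i in range(k)]
        for i, test in enumerate(folds):
            train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
            yield train, test


def grid_search(param_grid, evaluate, n_samples, k=10, repeats=4):
    """Score every parameter set; rank by highest mean, then lowest spread."""
    results = []
    for params in param_grid:
        scores = [
            evaluate(params, train, test)
            for train, test in repeated_kfold(n_samples, k, repeats)
        ]
        results.append((statistics.mean(scores), statistics.pstdev(scores), params))
    return sorted(results, key=lambda r: (-r[0], r[1]))


def toy_evaluate(params, train, test):
    """Stand-in for 'fit on train, return accuracy on test' (hypothetical)."""
    return 1.0 - abs(params["depth"] - 3) / 10


grid = [{"depth": d} for d in (1, 3, 5)]
ranking = grid_search(grid, toy_evaluate, n_samples=156)
best_mean, best_spread, best_params = ranking[0]
print(best_params, round(best_mean, 3), round(best_spread, 3))
```

In practice `toy_evaluate` would be replaced by a function that fits a classifier on the training indices and returns its accuracy on the test indices; with 156 samples and K=10, each of the 40 test folds holds 15 or 16 patients.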
