Louis Soum-Fontez

Open-Set 3D object detection in LiDAR data as an Out-of-Distribution problem

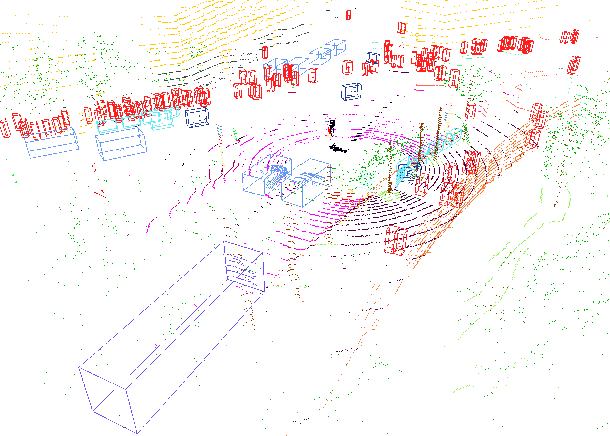

Oct 31, 2024

Abstract: 3D Object Detection from LiDAR data has achieved industry-ready performance in controlled environments through advanced deep learning methods. However, these neural network models are limited to a finite set of inlier object categories. Our work redefines the open-set 3D Object Detection problem in LiDAR data as an Out-Of-Distribution (OOD) problem in order to detect outlier objects. This approach brings additional information compared with traditional object detection. We establish a comparative benchmark and show that two-stage OOD methods, notably autolabelling, give promising results for 3D OOD Object Detection. Our contributions include setting a rigorous evaluation protocol by examining how hyperparameters are evaluated and by assessing strategies for generating additional data to train an OOD-aware 3D object detector. This comprehensive analysis is essential for developing robust 3D object detection systems that can perform reliably in diverse and unpredictable real-world scenarios.

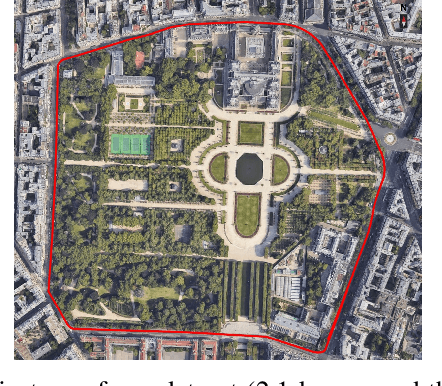

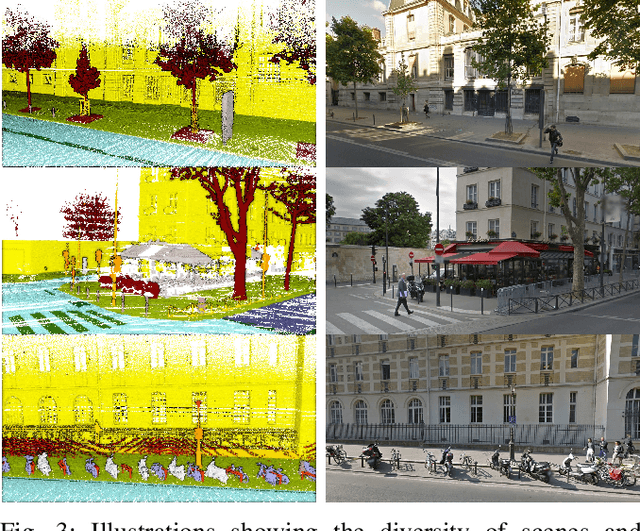

ParisLuco3D: A high-quality target dataset for domain generalization of LiDAR perception

Oct 25, 2023

Abstract: LiDAR is a sensor system that supports autonomous driving by gathering precise geometric information about the scene. Exploiting this information for perception is interesting as the amount of available data increases. As the quantitative performance of various perception tasks has improved, the focus has shifted from source-to-source perception to domain adaptation and domain generalization for perception. These new goals require access to a large variety of domains for evaluation. Unfortunately, the various annotation strategies of data providers complicate the computation of cross-domain performance based on the available data. This paper provides a novel dataset, specifically designed for cross-domain evaluation, to make it easier to evaluate the performance of various source datasets. Alongside the dataset, a flexible online benchmark is provided to ensure a fair comparison across methods.

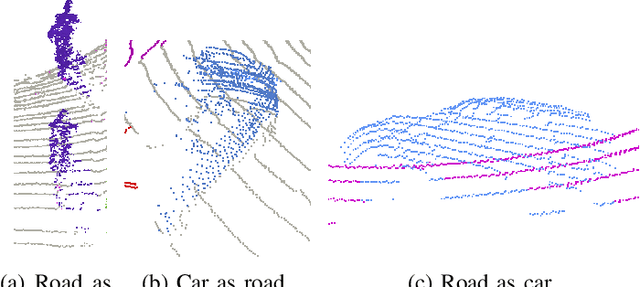

MDT3D: Multi-Dataset Training for LiDAR 3D Object Detection Generalization

Aug 02, 2023

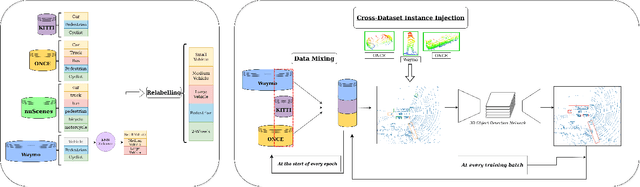

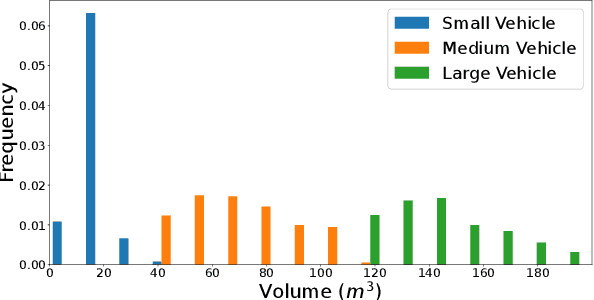

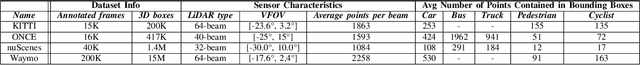

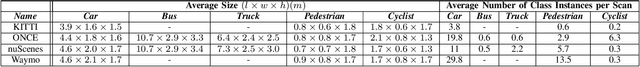

Abstract: Supervised 3D Object Detection models have shown steadily improving performance in single-domain cases, where the training data comes from the same environment and sensor as the testing data. However, in real-world scenarios, data from the target domain may not be available for finetuning or for domain adaptation methods. Indeed, 3D object detection models trained on a source dataset with a specific point distribution have shown difficulties in generalizing to unseen datasets. Therefore, we leverage the information available from several annotated source datasets with our Multi-Dataset Training for 3D Object Detection (MDT3D) method to increase the robustness of 3D object detection models when tested in a new environment with a different sensor configuration. To tackle the labelling gap between datasets, we use a new label mapping based on coarse labels. Furthermore, we show how we manage the mix of datasets during training, and finally introduce a new cross-dataset augmentation method: cross-dataset object injection. We demonstrate that this training paradigm improves different types of 3D object detection models. The source code and additional results for this research project will be publicly available on GitHub: https://github.com/LouisSF/MDT3D
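The idea of a label mapping based on coarse labels can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the dataset names (`dataset_a`, `dataset_b`), the fine-grained class names, the coarse categories, and the helper functions are hypothetical and are not taken from the MDT3D code or paper. The sketch only shows the general pattern of projecting each dataset's own label set onto a shared, coarser vocabulary so that several datasets can be mixed during training.

```python
from typing import Dict, List, Optional

# Hypothetical per-dataset tables mapping fine labels to shared coarse labels.
# The actual mapping used by MDT3D is not given in the abstract.
COARSE_LABEL_MAP: Dict[str, Dict[str, str]] = {
    "dataset_a": {
        "Car": "vehicle",
        "Van": "vehicle",
        "Pedestrian": "pedestrian",
        "Cyclist": "two_wheeler",
    },
    "dataset_b": {
        "car": "vehicle",
        "bus": "vehicle",
        "pedestrian": "pedestrian",
        "bicycle": "two_wheeler",
        "motorcycle": "two_wheeler",
    },
}


def to_coarse(dataset: str, fine_label: str) -> Optional[str]:
    """Return the coarse label for a fine label, or None if it has no mapping."""
    return COARSE_LABEL_MAP[dataset].get(fine_label)


def remap_annotations(dataset: str, fine_labels: List[str]) -> List[str]:
    """Map a list of fine labels to coarse labels, dropping unmapped classes."""
    coarse = (to_coarse(dataset, label) for label in fine_labels)
    return [label for label in coarse if label is not None]


if __name__ == "__main__":
    print(remap_annotations("dataset_a", ["Car", "Cyclist"]))
    print(remap_annotations("dataset_b", ["bus", "traffic_cone", "bicycle"]))
```

In this sketch, classes without a coarse counterpart are simply dropped; a real pipeline might instead mark them as ignored regions, which is a design choice the abstract does not specify.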
