Loren Terveen

Leveraging Recommender Systems to Reduce Content Gaps on Peer Production Platforms

Jul 18, 2023

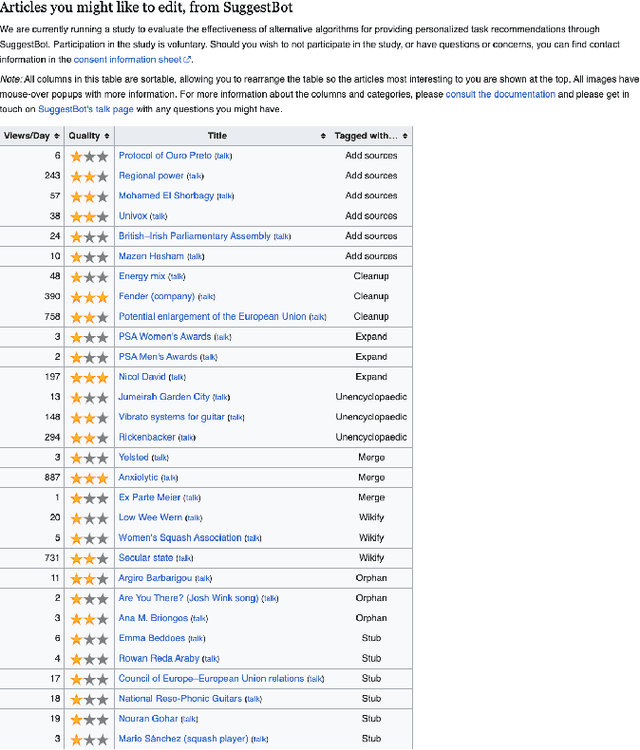

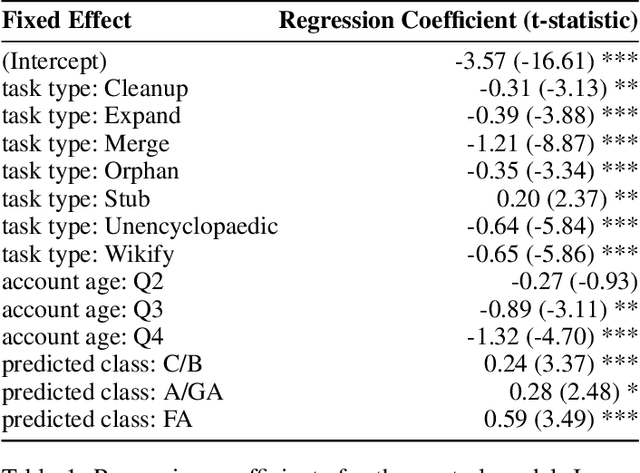

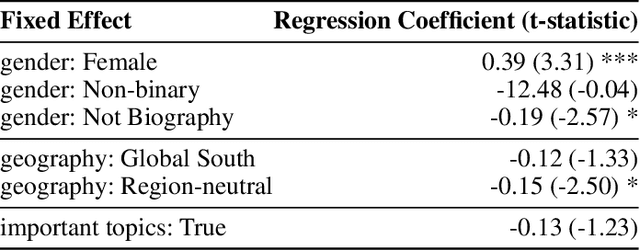

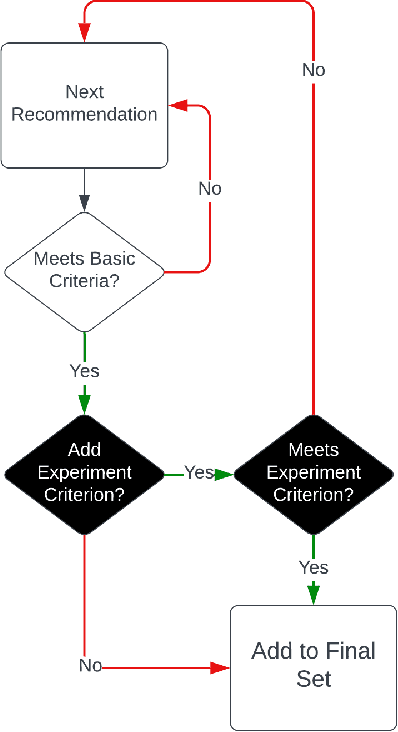

Abstract: Peer production platforms like Wikipedia commonly suffer from content gaps. Prior research suggests recommender systems can help solve this problem by guiding editors towards underrepresented topics. However, it remains unclear whether this approach would result in less relevant recommendations, leading to reduced overall engagement with recommended items. To answer this question, we first conducted offline analyses (Study 1) on SuggestBot, a task-routing recommender system for Wikipedia, then did a three-month controlled experiment (Study 2). Our results show that presenting users with articles from underrepresented topics increased the proportion of work done on those articles without significantly reducing overall recommendation uptake. We discuss the implications of our results, including how ignoring the article discovery process can artificially narrow recommendations. We draw parallels between this phenomenon and the common issue of "filter bubbles" to show how any platform that employs recommender systems is susceptible to it.

"Some other poor soul's problems": a peer recommendation intervention for health-related social support

Sep 12, 2022

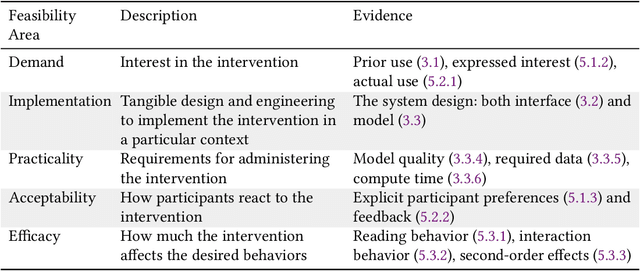

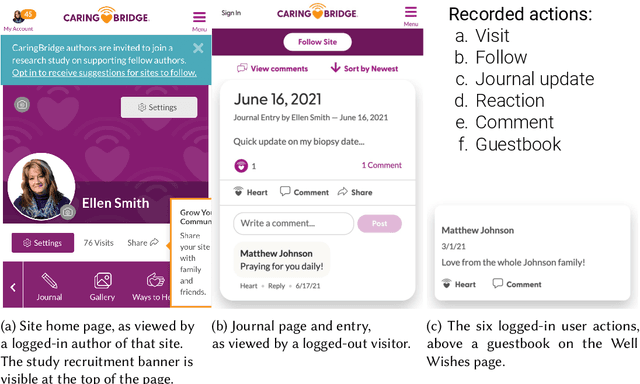

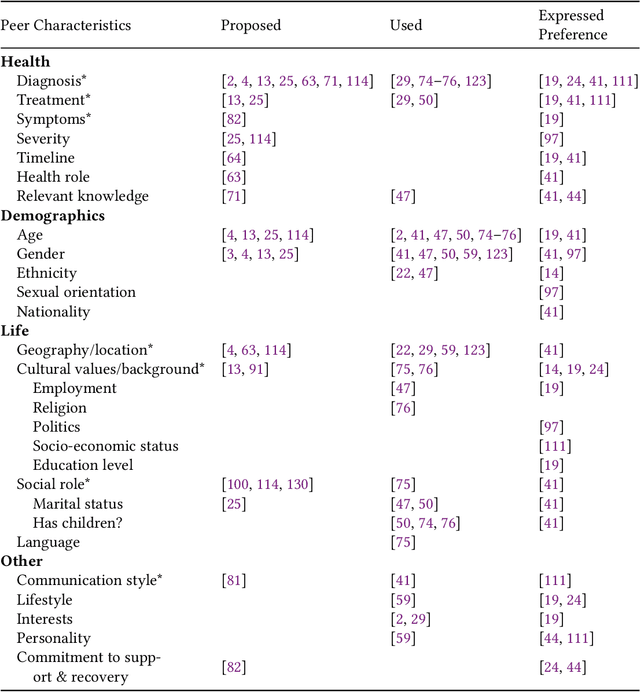

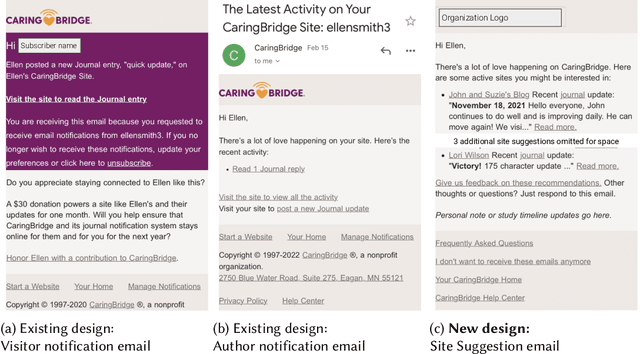

Abstract: Online health communities (OHCs) offer the promise of connecting with supportive peers. Forming these connections first requires finding relevant peers - a process that can be time-consuming. Peer recommendation systems are a computational approach to make finding peers easier during a health journey. By encouraging OHC users to alter their online social networks, peer recommendations could increase available support. But these benefits are hypothetical and based on mixed, observational evidence. To experimentally evaluate the effect of peer recommendations, we conceptualize these systems as health interventions designed to increase specific beneficial connection behaviors. In this paper, we designed a peer recommendation intervention to increase two behaviors: reading about peer experiences and interacting with peers. We conducted an initial feasibility assessment of this intervention by conducting a 12-week field study in which 79 users of CaringBridge received weekly peer recommendations via email. Our results support the usefulness and demand for peer recommendation and suggest benefits to evaluating larger peer recommendation interventions. Our contributions include practical guidance on the development and evaluation of peer recommendation interventions for OHCs.

Keeping Community in the Loop: Understanding Wikipedia Stakeholder Values for Machine Learning-Based Systems

Jan 14, 2020

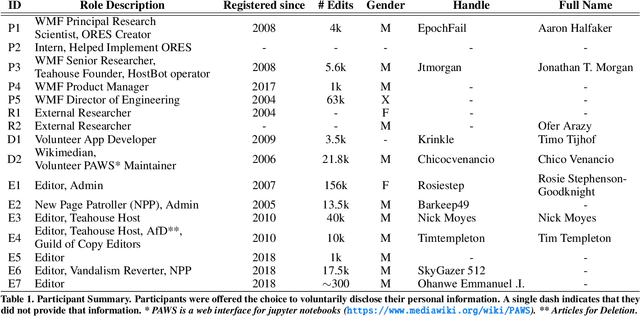

Abstract: On Wikipedia, sophisticated algorithmic tools are used to assess the quality of edits and take corrective actions. However, algorithms can fail to solve the problems they were designed for if they conflict with the values of communities who use them. In this study, we take a Value-Sensitive Algorithm Design approach to understanding a community-created and -maintained machine learning-based algorithm called the Objective Revision Evaluation System (ORES), a quality prediction system used in numerous Wikipedia applications and contexts. Five major values converged across stakeholder groups that ORES (and its dependent applications) should: (1) reduce the effort of community maintenance, (2) maintain human judgement as the final authority, (3) support differing peoples' differing workflows, (4) encourage positive engagement with diverse editor groups, and (5) establish trustworthiness of people and algorithms within the community. We reveal tensions between these values and discuss implications for future research to improve algorithms like ORES.
