Loki Natarajan

Sedentary Behavior Estimation with Hip-worn Accelerometer Data: Segmentation, Classification and Thresholding

Jul 05, 2022

Abstract: Cohort studies increasingly use accelerometers to estimate physical activity and sedentary behavior. These devices tend to be less error-prone than self-report, can capture activity throughout the day, and are economical. However, previous methods for estimating sedentary behavior from hip-worn data are often invalid or suboptimal under free-living conditions and subject-to-subject variation. In this paper, we propose a local Markov switching model that takes these conditions into account, and introduce a general procedure for posture classification and sedentary behavior analysis that fits the model naturally. Our method combines changepoint detection in time series with a two-stage classification step that labels data into three classes (sitting, standing, stepping). Through a rigorous training-testing paradigm, we show that our approach achieves > 80% accuracy. In addition, our method is robust and easy to interpret.
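The abstract names a segmentation step followed by a two-stage classification into sitting, standing, and stepping, but gives no implementation details. The sketch below only illustrates the general shape of such a pipeline and is not the authors' method: it swaps in plain binary segmentation on the signal mean for the local Markov switching model, and it uses fixed thresholds for classification. The features `activity_mean` and `tilt_mean`, the thresholds `step_thr` and `sit_thr`, and all function names are hypothetical.

```python
def sse(values):
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(x, min_size):
    """Return (gain, index) of the split that most reduces squared error."""
    total = sse(x)
    best_gain, best_idx = 0.0, None
    for i in range(min_size, len(x) - min_size + 1):
        gain = total - (sse(x[:i]) + sse(x[i:]))
        if gain > best_gain:
            best_gain, best_idx = gain, i
    return best_gain, best_idx


def segment(x, min_size=5, min_gain=1.0, offset=0):
    """Binary segmentation: recursively split where the mean shifts.

    Returns a sorted list of changepoint indices into the original series.
    """
    x = list(x)
    if len(x) < 2 * min_size:
        return []
    gain, idx = best_split(x, min_size)
    if idx is None or gain < min_gain:
        return []
    left = segment(x[:idx], min_size, min_gain, offset)
    right = segment(x[idx:], min_size, min_gain, offset + idx)
    return left + [offset + idx] + right


def classify_segment(activity_mean, tilt_mean, step_thr=0.5, sit_thr=45.0):
    """Two-stage labeling with hypothetical features and thresholds.

    Stage 1 separates stepping from stationary segments by activity level.
    Stage 2 separates sitting from standing by an assumed tilt feature.
    """
    if activity_mean >= step_thr:
        return "stepping"
    return "sitting" if tilt_mean >= sit_thr else "standing"
```

Classifying whole segments rather than individual samples means every sample between two changepoints receives the same label, which is one plausible reason to segment before classifying.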

Utilizing stability criteria in choosing feature selection methods yields reproducible results in microbiome data

Nov 30, 2020

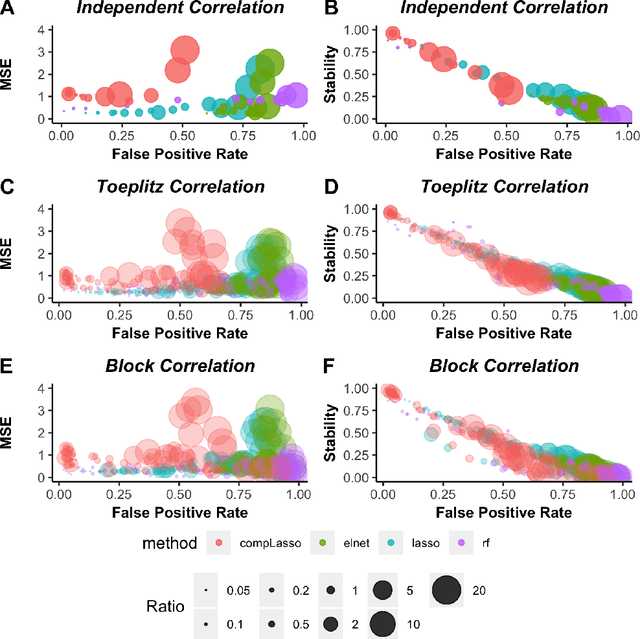

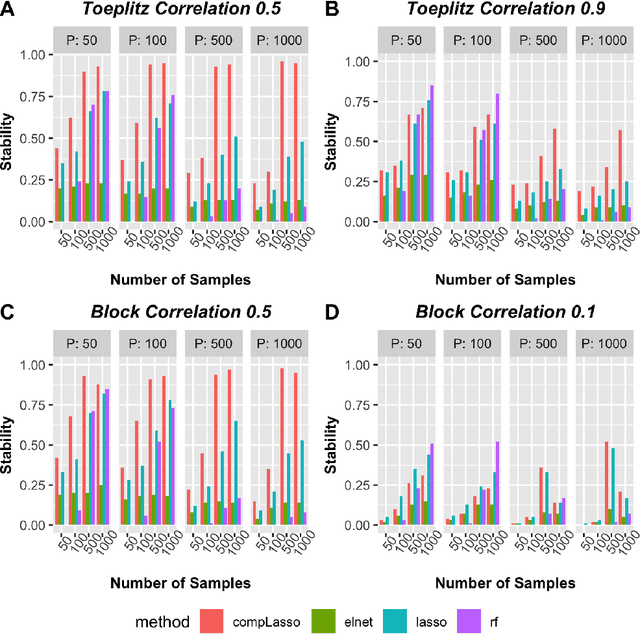

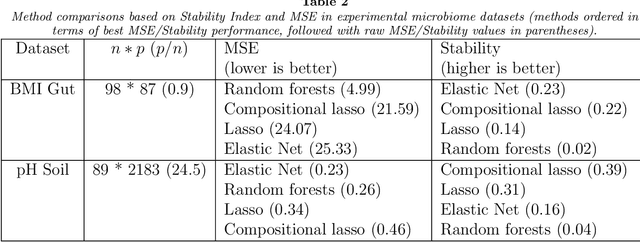

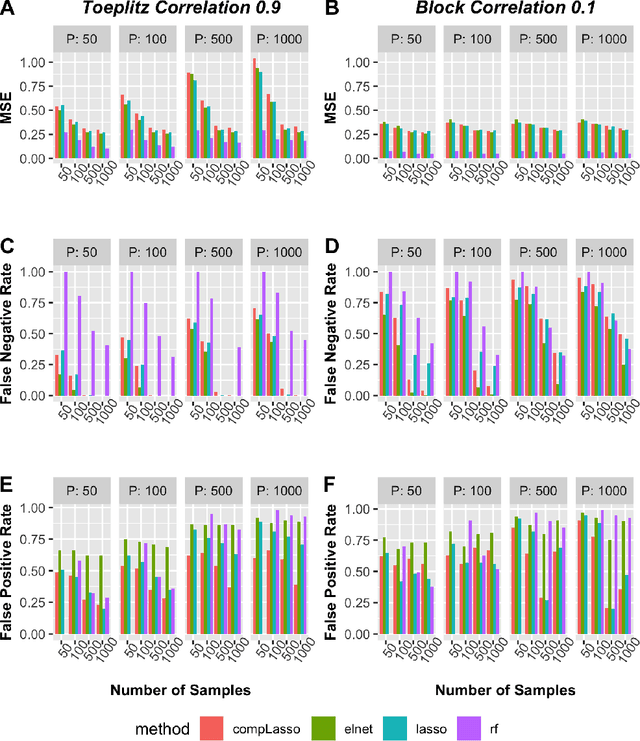

Abstract: Feature selection is indispensable in microbiome data analysis, but it can be particularly challenging because microbiome data sets are high-dimensional, underdetermined, sparse and compositional. Considerable effort has recently gone into developing new feature selection methods that handle these data characteristics, but almost all methods were evaluated on the performance of model predictions. Little attention has been paid to a fundamental question: how appropriate are those evaluation criteria? Most feature selection methods control the model fit, but the ability to identify meaningful subsets of features cannot be evaluated on prediction accuracy alone. If tiny changes to the training data lead to large changes in the chosen feature subset, then many of the biological features that an algorithm has found are likely to be data artifacts rather than real biological signal. This crucial need to identify relevant and reproducible features motivates reproducibility evaluation criteria such as Stability, which quantifies how robust a method is to perturbations in the data. In our paper, we compare the performance of the popular model prediction metric MSE and the proposed reproducibility criterion Stability in evaluating four widely used feature selection methods in both simulations and experimental microbiome applications. We conclude that Stability is a preferred feature selection criterion over MSE because it better quantifies the reproducibility of the feature selection method.
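The idea of scoring a feature selection method by how much its chosen subset changes under perturbed training data can be sketched as follows. The abstract does not define the Stability index, so this example uses the mean pairwise Jaccard similarity across bootstrap resamples as a stand-in; it is an assumption, not the paper's criterion. The function names and the `select` callback (any function mapping a list of rows to a set of feature identifiers) are hypothetical.

```python
import random
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity of two feature subsets (1.0 if both are empty)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def selection_stability(subsets):
    """Mean pairwise Jaccard similarity over a collection of feature subsets.

    1.0 means every resample selected the same features;
    0.0 means no two resamples shared a feature.
    """
    pairs = list(combinations(subsets, 2))
    if not pairs:
        raise ValueError("need at least two feature subsets")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def bootstrap_subsets(rows, select, n_boot=20, seed=0):
    """Run `select` on n_boot bootstrap resamples of `rows`."""
    rng = random.Random(seed)
    n = len(rows)
    return [
        select([rows[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    ]
```

A method can reach a low MSE while selecting a different subset on every resample; a score like this one exposes that instability, which prediction error alone does not.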
