Lloyd Windrim

Changes in Real Time: Online Scene Change Detection with Multi-View Fusion

Nov 15, 2025

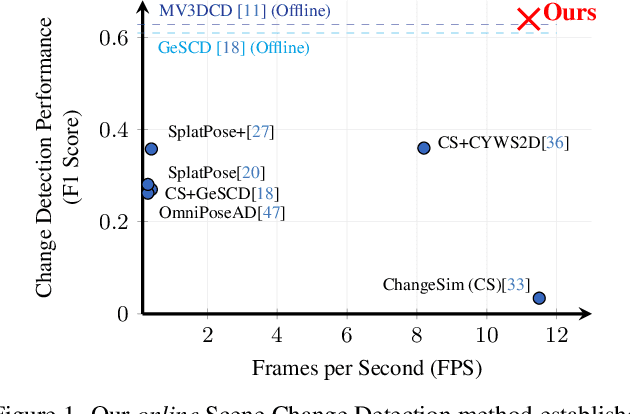

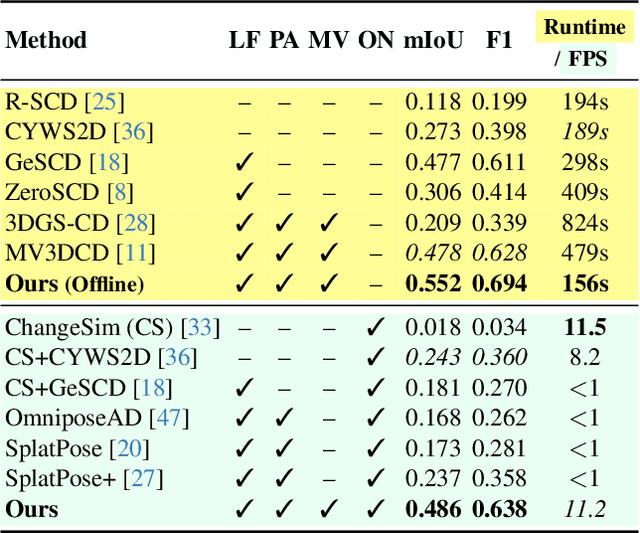

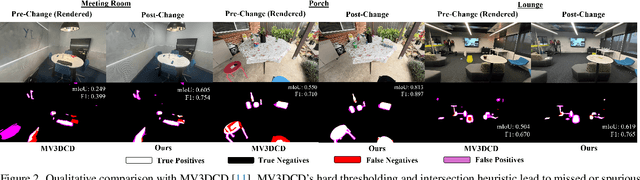

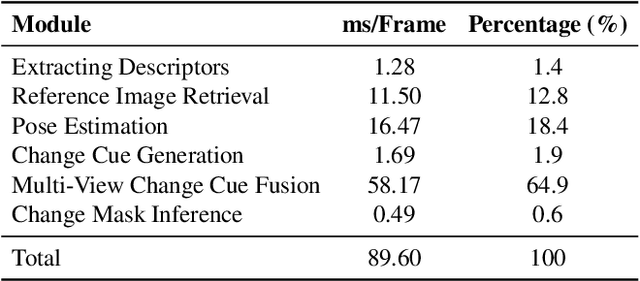

Abstract: Online Scene Change Detection (SCD) is an extremely challenging problem that requires an agent to detect relevant changes on the fly while observing the scene from unconstrained viewpoints. Existing online SCD methods are significantly less accurate than offline approaches. We present the first online SCD approach that is pose-agnostic, label-free, and ensures multi-view consistency, while operating at over 10 FPS and achieving new state-of-the-art performance, surpassing even the best offline approaches. Our method introduces a new self-supervised fusion loss to infer scene changes from multiple cues and observations, PnP-based fast pose estimation against the reference scene, and a fast change-guided update strategy for the 3D Gaussian Splatting scene representation. Extensive experiments on complex real-world datasets demonstrate that our approach outperforms both online and offline baselines.

Multi-View Pose-Agnostic Change Localization with Zero Labels

Dec 05, 2024

Abstract: Autonomous agents often require accurate methods for detecting and localizing changes in their environment, particularly when observations are captured from unconstrained and inconsistent viewpoints. We propose a novel label-free, pose-agnostic change detection method that integrates information from multiple viewpoints to construct a change-aware 3D Gaussian Splatting (3DGS) representation of the scene. With as few as 5 images of the post-change scene, our approach can learn additional change channels in a 3DGS and produce change masks that outperform single-view techniques. Our change-aware 3D scene representation additionally enables the generation of accurate change masks for unseen viewpoints. Experimental results demonstrate state-of-the-art performance in complex multi-object scenes, achieving a 1.7$\times$ and 1.6$\times$ improvement in Mean Intersection Over Union and F1 score respectively over other baselines. We also contribute a new real-world dataset to benchmark change detection in diverse challenging scenes in the presence of lighting variations.
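For readers unfamiliar with the two metrics quoted in this abstract, the following is a minimal sketch of how Intersection over Union and F1 can be computed for a single binary change mask. The function name `change_mask_scores` and the nested-list mask format are illustrative assumptions, not taken from the paper; how the paper averages scores (per image, per scene, or per class) is not stated in the abstract.

```python
def change_mask_scores(pred, truth):
    """Return (IoU, F1) for two binary masks given as nested lists of 0/1.

    Hypothetical helper for illustration; not the paper's evaluation code.
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # changed pixel correctly detected
            elif p:
                fp += 1  # predicted change where there is none
            elif t:
                fn += 1  # missed change
    union = tp + fp + fn
    if union == 0:
        # Both masks are empty: treat as perfect agreement (a convention, assumed here).
        return 1.0, 1.0
    iou = tp / union
    f1 = 2 * tp / (2 * tp + fp + fn)
    return iou, f1


if __name__ == "__main__":
    predicted = [[1, 1], [0, 0]]
    ground_truth = [[1, 0], [0, 0]]
    print(change_mask_scores(predicted, ground_truth))
```

A mean IoU would then be the average of the per-mask IoU values over whatever set of masks the benchmark defines.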

Unsupervised ore/waste classification on open-cut mine faces using close-range hyperspectral data

Feb 09, 2023

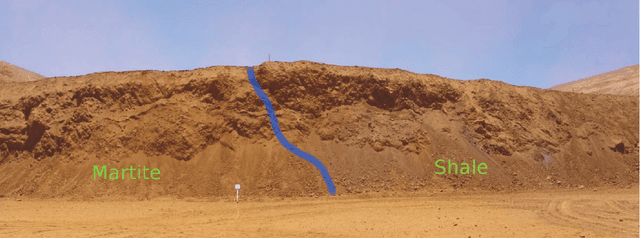

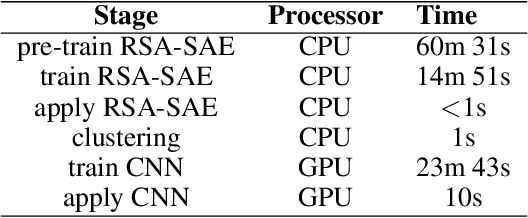

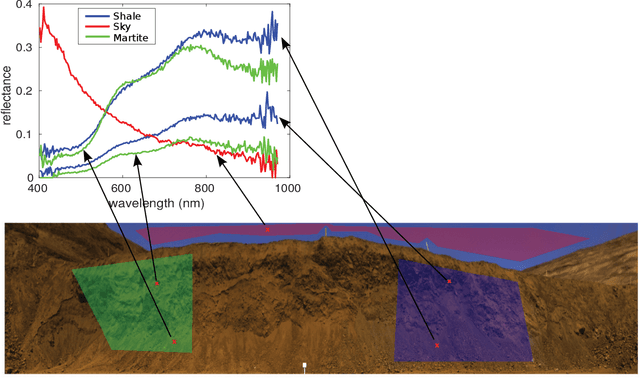

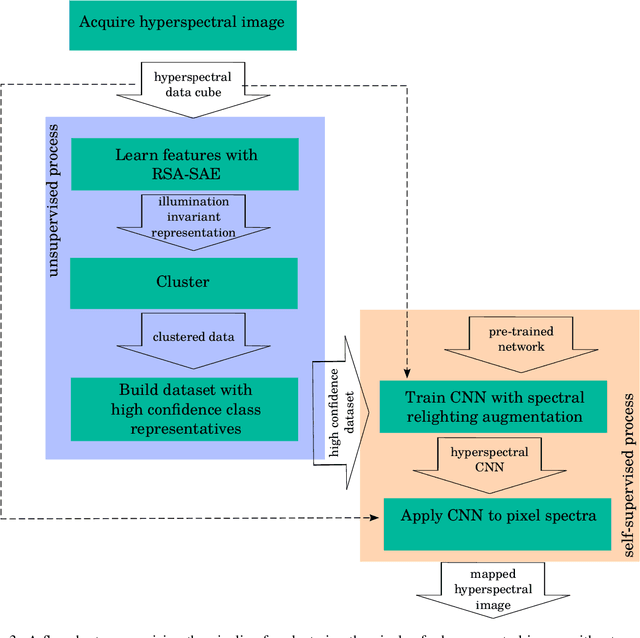

Abstract: The remote mapping of minerals and discrimination of ore and waste on surfaces are important tasks for geological applications such as those in mining. Such tasks have become possible using ground-based, close-range hyperspectral sensors which can remotely measure the reflectance properties of the environment with high spatial and spectral resolution. However, autonomous mapping of mineral spectra measured on an open-cut mine face remains a challenging problem due to the subtleness of differences in spectral absorption features between mineral and rock classes as well as variability in the illumination of the scene. An additional layer of difficulty arises when there is no annotated data available to train a supervised learning algorithm. A pipeline for unsupervised mapping of spectra on a mine face is proposed which draws from several recent advances in the hyperspectral machine learning literature. The proposed pipeline brings together unsupervised and self-supervised algorithms in a unified system to map minerals on a mine face without the need for human-annotated training data. The pipeline is evaluated with a hyperspectral image dataset of an open-cut mine face comprising mineral ore martite and non-mineralised shale. The combined system is shown to produce a superior map to its constituent algorithms, and the consistency of its mapping capability is demonstrated using data acquired at two different times of day.

* Manuscript has been accepted for publication in Geoscience Frontiers. Keywords: Hyperspectral imaging, remote sensing, mineral mapping, machine learning, convolutional neural networks, transfer learning, data augmentation, illumination invariance

Development of a High Fidelity Simulator for Generalised Photometric Based Space Object Classification using Machine Learning

Apr 26, 2020

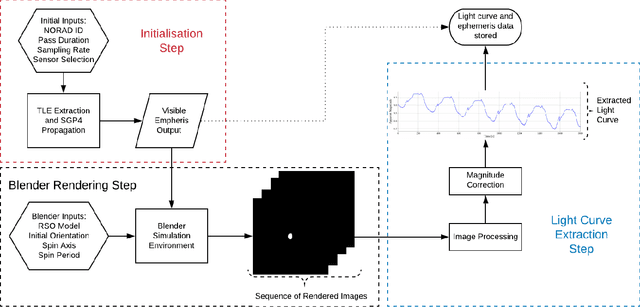

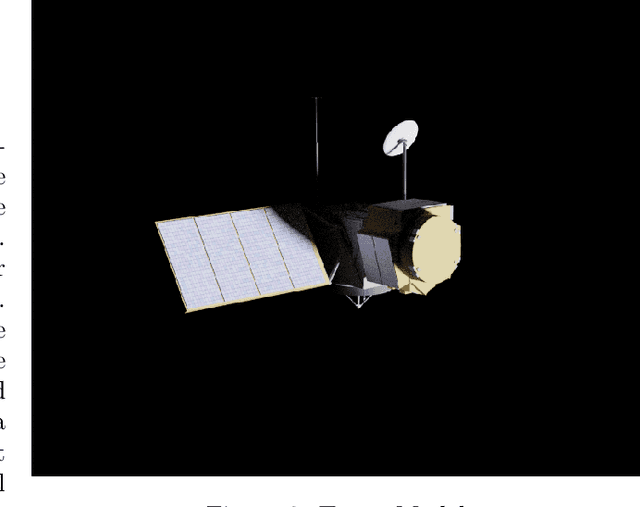

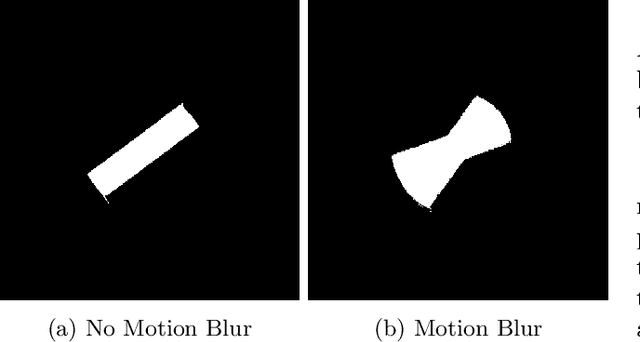

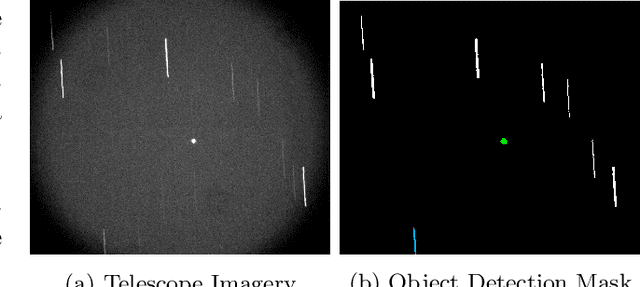

Abstract: This paper presents the initial stages in the development of a deep learning classifier for generalised Resident Space Object (RSO) characterisation that combines high-fidelity simulated light curves with transfer learning to improve the performance of object characterisation models that are trained on real data. The classification and characterisation of RSOs is a significant goal in Space Situational Awareness (SSA) in order to improve the accuracy of orbital predictions. The specific focus of this paper is the development of a high-fidelity simulation environment for generating realistic light curves. The simulator takes in a textured geometric model of an RSO as well as the object's ephemeris and uses Blender to generate photo-realistic images of the RSO that are then processed to extract the light curve. Simulated light curves have been compared with real light curves extracted from telescope imagery to provide validation for the simulation environment. Future work will involve further validation and the use of the simulator to generate a dataset of realistic light curves for the purpose of training neural networks.
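The abstract does not say how rendered images are processed into a light curve. As a rough illustration only, one simple option is to sum the background-subtracted brightness of each frame, giving one flux value per time step. The function name `extract_light_curve`, the nested-list frame format, and the median background estimate are all assumptions made for this sketch.

```python
from statistics import median


def extract_light_curve(frames):
    """Return one integrated flux value per frame.

    `frames` is a sequence of 2D grayscale images (nested lists of numbers).
    Hypothetical sketch: the median pixel is used as the background level,
    which assumes the object covers less than half of each frame.
    """
    curve = []
    for frame in frames:
        pixels = [value for row in frame for value in row]
        background = median(pixels)
        # Sum only the brightness above the background level.
        flux = sum(max(value - background, 0.0) for value in pixels)
        curve.append(flux)
    return curve


if __name__ == "__main__":
    dim_frame = [[0, 0, 0], [0, 5, 0], [0, 0, 0]]
    bright_frame = [[1, 1, 1], [1, 11, 1], [1, 1, 1]]
    print(extract_light_curve([dim_frame, bright_frame]))
```

Real pipelines would typically use aperture photometry around the object and convert flux to magnitude, but the per-frame brightness series above is the basic shape of the output.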

* This paper is a pre-print that appeared in Proceedings of 70th International Astronautical Congress (IAC), 2019

Forest Tree Detection and Segmentation using High Resolution Airborne LiDAR

Oct 30, 2018

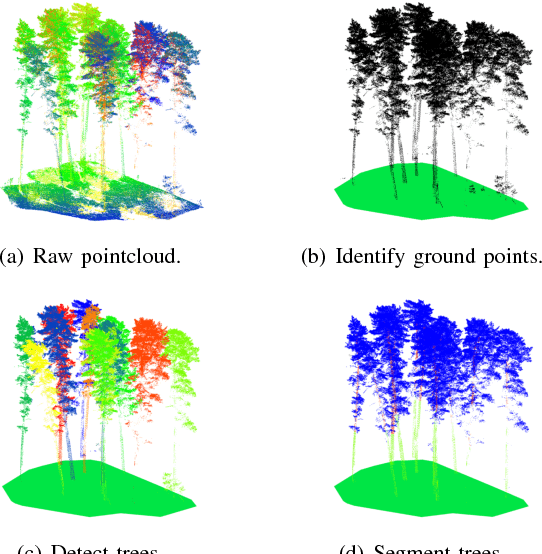

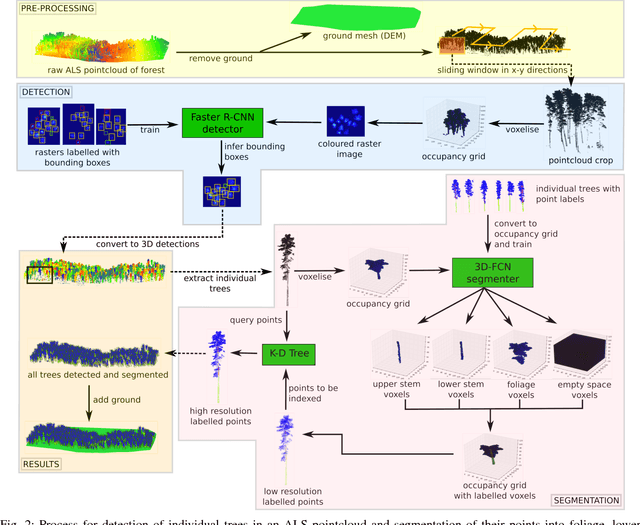

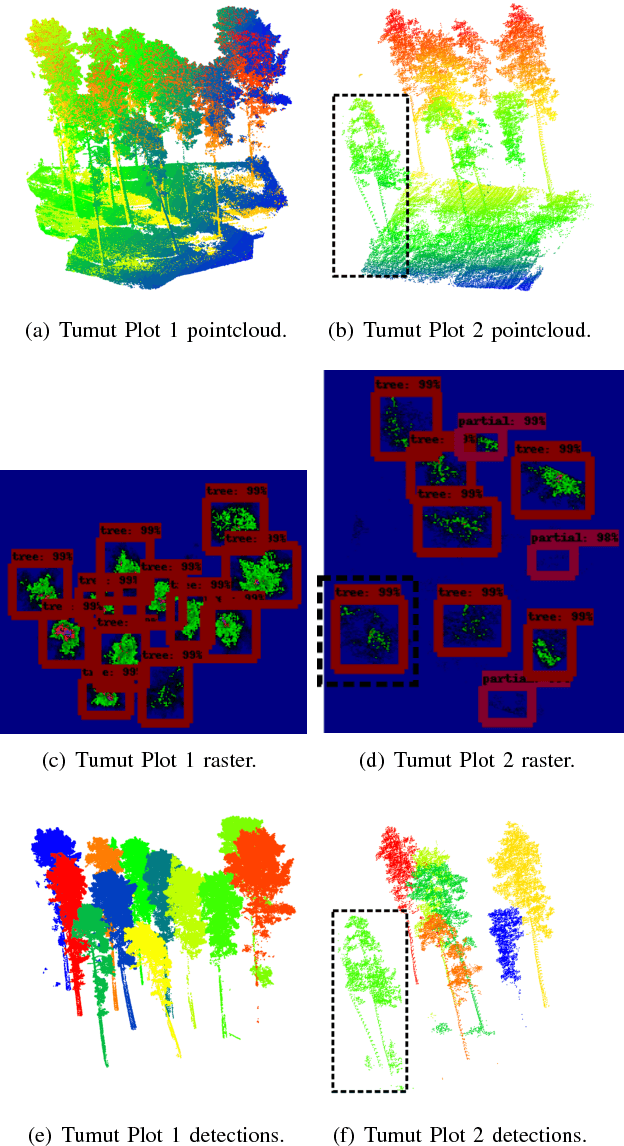

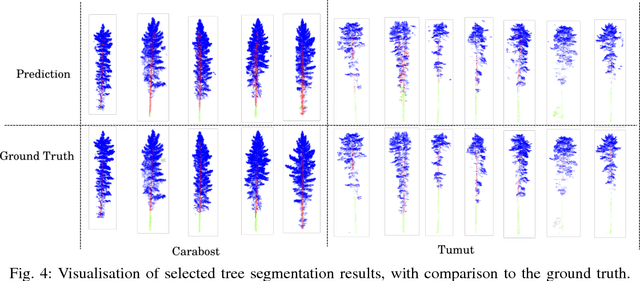

Abstract: This paper presents an autonomous approach to tree detection and segmentation in high resolution airborne LiDAR that utilises state-of-the-art region-based CNN and 3D-CNN deep learning algorithms. If the number of training examples for a site is low, it is shown to be beneficial to transfer a segmentation network learnt from a different site with more training data and fine-tune it. The algorithm was validated using airborne laser scanning over two different commercial pine plantations. The results show that the proposed approach performs favourably in comparison to other methods for tree detection and segmentation.

Hyperspectral CNN Classification with Limited Training Samples

Nov 28, 2016

Abstract: Hyperspectral imaging sensors are becoming increasingly popular in robotics applications such as agriculture and mining, and allow per-pixel thematic classification of materials in a scene based on their unique spectral signatures. Recently, convolutional neural networks have shown remarkable performance for classification tasks, but require substantial amounts of labelled training data. This data must sufficiently cover the variability expected to be encountered in the environment. For hyperspectral data, one of the main variations encountered outdoors is due to incident illumination, which can change in spectral shape and intensity depending on the scene geometry. For example, regions occluded from the sun have a lower intensity, and their incident irradiance is skewed towards shorter wavelengths. In this work, a data augmentation strategy based on relighting is used during training of a hyperspectral convolutional neural network. It allows training to occur in the outdoor environment given only a small labelled region, which does not need to sufficiently represent the geometric variability of the entire scene. This is important for applications where obtaining large amounts of training data is laborious, hazardous or difficult, such as labelling pixels within shadows. Radiometric normalisation approaches for pre-processing the hyperspectral data are analysed and it is shown that methods based on the raw pixel data are sufficient to be used as input for the classifier. This removes the need for external hardware such as calibration boards, which can restrict the use of hyperspectral sensors in robotics. Experiments to evaluate the classification system are carried out on two datasets captured from a field-based platform.
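To make the relighting idea concrete, here is a toy sketch that turns a sunlit spectrum into a plausible shadowed one by lowering its overall intensity and tilting it towards shorter wavelengths, as the abstract describes qualitatively. The power-law tilt, the function name `relight_to_shadow`, and the parameters `scale` and `alpha` are assumptions chosen for illustration; the abstract does not specify the paper's actual relighting model.

```python
def relight_to_shadow(spectrum, wavelengths_nm, scale=0.3, alpha=1.0):
    """Return a synthetic 'shadowed' copy of a per-pixel spectrum.

    Hypothetical model: each band is multiplied by
    scale * (longest_wavelength / wavelength) ** alpha,
    so the longest band is dimmed by `scale` and shorter bands are dimmed less.
    """
    longest = max(wavelengths_nm)
    return [
        value * scale * (longest / wavelength) ** alpha
        for value, wavelength in zip(spectrum, wavelengths_nm)
    ]


if __name__ == "__main__":
    sunlit = [1.0, 1.0, 1.0]
    bands = [400.0, 500.0, 1000.0]
    # Augmented training sample: same label, different simulated illumination.
    print(relight_to_shadow(sunlit, bands))
```

During training, such relit copies of the small labelled region would be added alongside the originals so the classifier sees the same material under several illumination conditions.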
