Liupu Wang

Improving Generalization of Neural Vehicle Routing Problem Solvers Through the Lens of Model Architecture

Jun 10, 2024

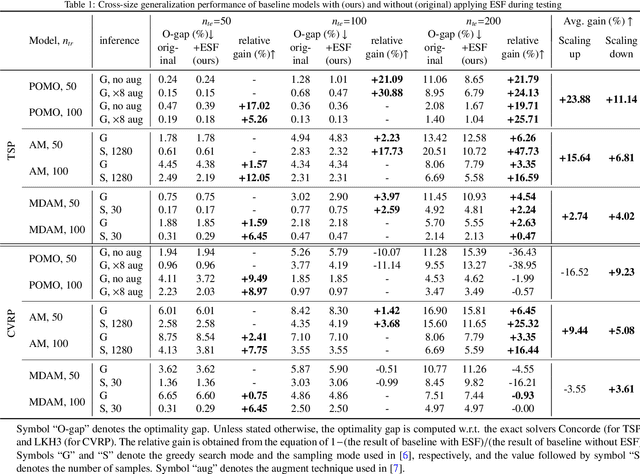

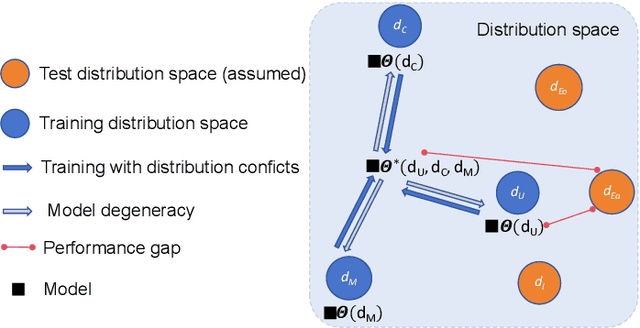

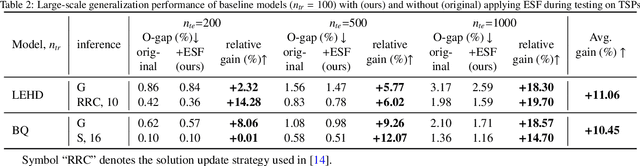

Abstract:Neural models produce promising results when solving Vehicle Routing Problems (VRPs), but often fall short in generalization. Recent attempts to enhance model generalization often incur unnecessarily large training cost or cannot be directly applied to other models solving different VRP variants. To address these issues, we take a novel perspective on model architecture in this study. Specifically, we propose a plug-and-play Entropy-based Scaling Factor (ESF) and a Distribution-Specific (DS) decoder to enhance the size and distribution generalization, respectively. ESF adjusts the attention weight pattern of the model towards familiar ones discovered during training when solving VRPs of varying sizes. The DS decoder explicitly models VRPs of multiple training distribution patterns through multiple auxiliary light decoders, expanding the model representation space to encompass a broader range of distributional scenarios. We conduct extensive experiments on both synthetic and widely recognized real-world benchmarking datasets and compare the performance with seven baseline models. The results demonstrate the effectiveness of using ESF and DS decoder to obtain a more generalizable model and showcase their applicability to solve different VRP variants, i.e., travelling salesman problem and capacitated VRP. Notably, our proposed generic components require minimal computational resources, and can be effortlessly integrated into conventional generalization strategies to further elevate model generalization.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge