Liping Jiang

3D EAGAN: 3D edge-aware attention generative adversarial network for prostate segmentation in transrectal ultrasound images

Nov 07, 2023

Abstract: Automatic prostate segmentation in transrectal ultrasound (TRUS) images remains challenging because prostates in TRUS images have ambiguous boundaries and an inhomogeneous intensity distribution. Although many prostate segmentation methods have been proposed, they still need improvement because they are insufficiently sensitive to edge information. The objective of this study is therefore to devise an effective prostate segmentation method that overcomes this limitation and segments prostates in TRUS images accurately. This paper proposes a prostate segmentation method based on a 3D edge-aware attention generative adversarial network (3D EAGAN), which consists of an edge-aware segmentation network (EASNet) that performs the prostate segmentation and a discriminator network that distinguishes predicted prostates from real prostates. EASNet is composed of an encoder-decoder U-Net backbone, a detail compensation module, four 3D spatial and channel attention modules, an edge enhance module, and a global feature extractor. The detail compensation module compensates for the detailed information lost in the encoder's down-sampling process. The features of the detail compensation module are selectively enhanced by the 3D spatial and channel attention modules. Furthermore, the edge enhance module guides the shallow layers of EASNet to focus on contour and edge information in prostates. Finally, features from the shallow layers and hierarchical features from the decoder are fused through the global feature extractor to predict the prostate segmentation.

Improving Hypernasality Estimation with Automatic Speech Recognition in Cleft Palate Speech

Aug 10, 2022

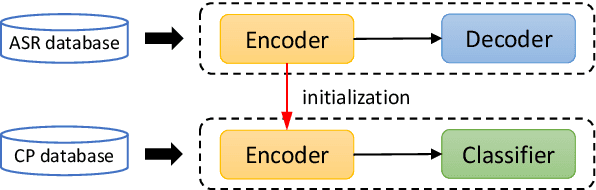

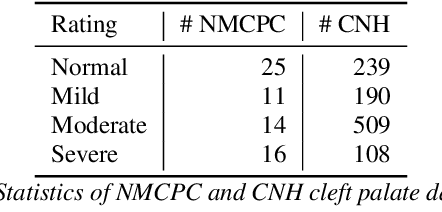

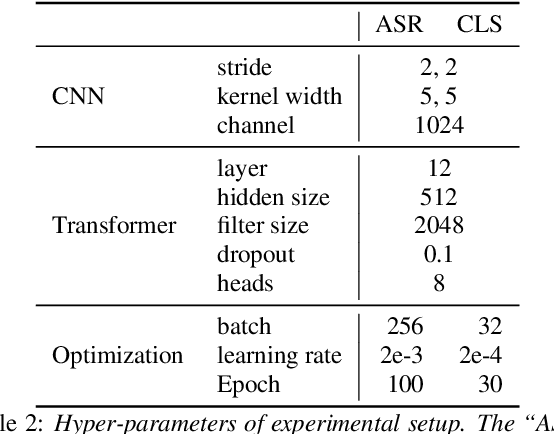

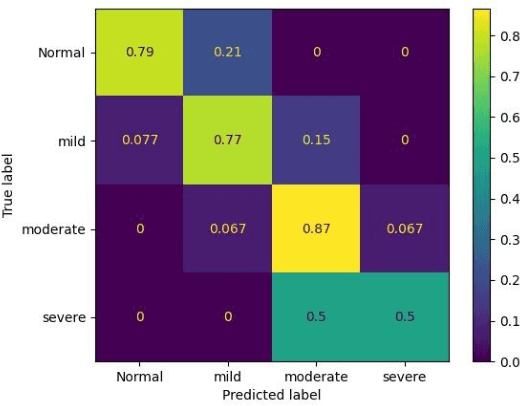

Abstract: Hypernasality is an abnormal resonance in human speech production that occurs especially in patients with craniofacial anomalies such as cleft palate. In clinical practice, hypernasality estimation is crucial to cleft palate diagnosis, as its results determine the subsequent surgery and any additional speech therapy. An automatic hypernasality assessment method would therefore help speech-language pathologists make precise diagnoses. Existing methods for hypernasality estimation conduct acoustic analysis only on low-resource cleft palate datasets, using statistical or neural network-based features. In this paper, we propose a novel approach that uses an automatic speech recognition (ASR) model to improve hypernasality estimation. Specifically, we first pre-train an encoder-decoder framework with an ASR objective on a speech-to-text dataset, and then fine-tune the ASR encoder on the cleft palate dataset for hypernasality estimation. With this design, our hypernasality estimation model inherits two advantages of the ASR model: 1) compared with the low-resource cleft palate dataset, the ASR task usually includes large-scale speech data from the general domain, which enables better model generalization; 2) the text annotations in the ASR dataset guide the model to extract better acoustic features. Experimental results on two cleft palate datasets demonstrate that our method achieves superior performance compared with previous approaches.
