Linyuan Jing

Deep neural networks can predict mortality from 12-lead electrocardiogram voltage data

May 03, 2019

Abstract: The electrocardiogram (ECG) is a widely used medical test, typically consisting of 12 voltage-versus-time traces collected from surface recordings over the heart. Here we hypothesize that a deep neural network can predict an important future clinical event (one-year all-cause mortality) from ECG voltage-time traces. We show good performance for predicting one-year mortality, with an average AUC of 0.85 from a model cross-validated on 1,775,926 12-lead resting ECGs that were collected over a 34-year period in a large regional health system. Even within the large subset of ECGs interpreted as 'normal' by a physician (n=297,548), the model's performance in predicting one-year mortality remained high (AUC=0.84), and a Cox proportional hazards model revealed a hazard ratio of 6.6 (p<0.005) between the two predicted groups (dead vs alive one year after ECG) over a 30-year follow-up period. A blinded survey of three cardiologists suggested that the patterns captured by the model were generally not visually apparent to cardiologists, even after they were shown 240 paired examples of labeled true positives (dead) and true negatives (alive). In summary, deep learning can add significant prognostic information to the interpretation of 12-lead resting ECGs, even in cases that are interpreted as 'normal' by physicians.
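The AUC values quoted in this abstract can be read as the probability that a randomly chosen patient who died receives a higher predicted risk than a randomly chosen patient who survived. The following is a minimal sketch of that rank-based definition, not the authors' evaluation code; the function name `roc_auc` and the six labels and scores are hypothetical and chosen only for illustration.

```python
def roc_auc(labels, scores):
    """Rank-based AUC: fraction of (positive, negative) pairs in which the
    positive example has the higher score; ties count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 1 = died within one year, 0 = alive at one year.
labels = [1, 0, 1, 0, 0, 1]
scores = [0.9, 0.2, 0.6, 0.7, 0.1, 0.8]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

This pairwise form is quadratic in the number of examples, so it is suitable only for small illustrations; at the scale of the dataset described above, a sort-based implementation would be the practical choice.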

Interpretable Neural Networks for Predicting Mortality Risk using Multi-modal Electronic Health Records

Jan 23, 2019

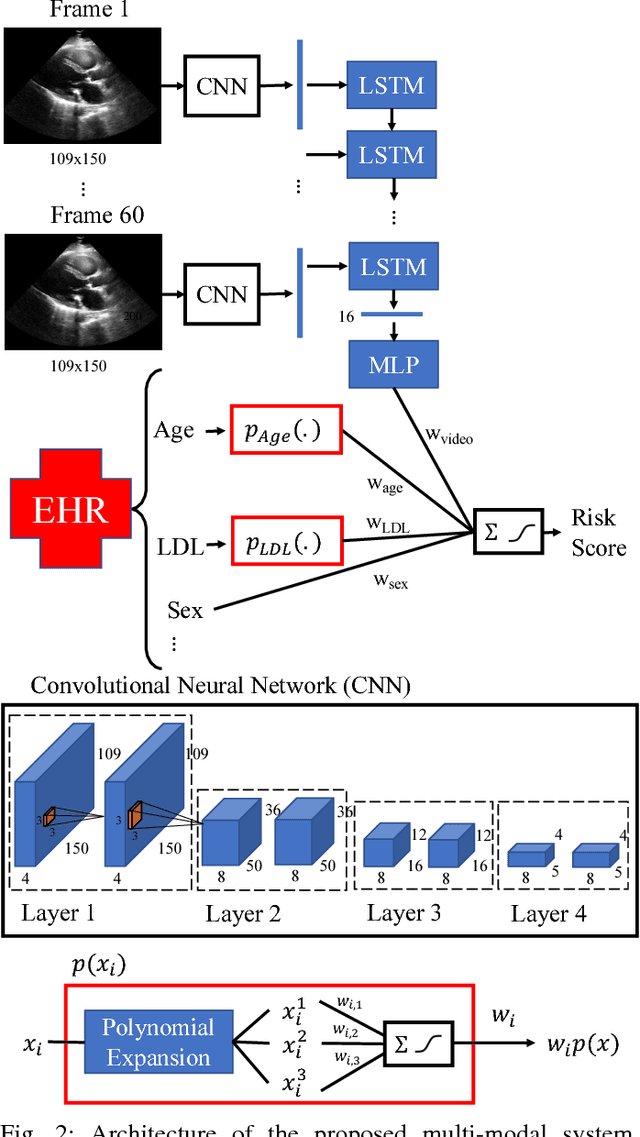

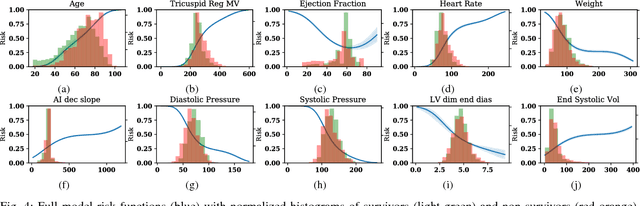

Abstract: We present an interpretable neural network for predicting an important clinical outcome (1-year mortality) from multi-modal Electronic Health Record (EHR) data. Our approach builds on prior multi-modal machine learning models by enabling visualization of how individual factors contribute to the overall outcome risk, assuming other factors remain constant, which was previously impossible. We demonstrate the value of this approach using a large multi-modal clinical dataset that includes both EHR data and 31,278 echocardiographic videos of the heart from 26,793 patients. We generated separate models for (i) clinical data only (CD) (e.g. age, sex, diagnoses and laboratory values), (ii) numeric variables derived from the videos, which we call echocardiography-derived measures (EDM), and (iii) CD+EDM+raw videos (pixel data). The interpretable multi-modal model maintained performance compared to non-interpretable models (Random Forest, XGBoost), and also performed significantly better than a model using a single modality (average AUC=0.82). The new model also facilitated clinically relevant insights and multi-modal variable importance rankings, which had previously been impossible.
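The idea of showing how one factor changes predicted risk while the other factors stay constant can be illustrated with a one-at-a-time sweep. The sketch below is not the architecture described in the abstract: the logistic model, its weights, the bias, and the feature names `age` and `ejection_fraction` are all hypothetical placeholders used only to show the mechanics.

```python
import math

# Hypothetical toy risk model (NOT the paper's network): a logistic
# function of a weighted sum of two made-up clinical features.
WEIGHTS = {"age": 0.04, "ejection_fraction": -0.05}
BIAS = -1.0


def predict_risk(features):
    """Return a risk in (0, 1) for a dict of feature name -> value."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def sweep_feature(baseline, name, values):
    """Vary one feature over `values`, holding every other feature at its
    baseline value, and return (value, risk) pairs."""
    curve = []
    for v in values:
        x = dict(baseline)  # copy so the baseline is never modified
        x[name] = v
        curve.append((v, predict_risk(x)))
    return curve


# Hypothetical baseline patient.
baseline = {"age": 60, "ejection_fraction": 55}
age_curve = sweep_feature(baseline, "age", [40, 60, 80])
for value, risk in age_curve:
    print(f"age={value}: predicted risk={risk:.3f}")
```

Plotting such (value, risk) pairs for each input gives a per-factor view of risk. The curve depends on the chosen baseline whenever the underlying model contains interactions between features.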

A deep neural network predicts survival after heart imaging better than cardiologists

Nov 26, 2018

Abstract: Predicting future clinical events, such as death, is an important task in medicine that helps physicians guide appropriate action. Neural networks have particular promise to assist with medical prediction tasks related to clinical imaging by learning patterns from large datasets. Significant advances have been made in predicting complex diagnoses from medical imaging [1-5]. Predicting future events, then, is a natural but relatively unexplored extension of those efforts. Moreover, neural networks have not yet been applied on a large scale to medical videos, such as ultrasound of the heart (echocardiography). Here we show that a large dataset of 723,754 clinically acquired echocardiographic videos (approx. 45 million images) linked to longitudinal follow-up data in 27,028 patients can be used to train a deep neural network to predict 1-year survival with good accuracy. We also demonstrate that prediction accuracy can be further improved by adding highly predictive clinical variables from the electronic health record. Finally, in a blinded, independent test set, the trained neural network was more accurate in discriminating 1-year survival outcomes than two expert cardiologists. These results therefore highlight the potential of neural networks to add new predictive power to clinical image interpretations.
