Joshua V. Stough

Bayesian Optimization of 2D Echocardiography Segmentation

Nov 17, 2022

Abstract: Bayesian Optimization (BO) is a well-studied hyperparameter tuning technique that is more efficient than grid search for high-cost, high-parameter machine learning problems. Echocardiography is a ubiquitous modality for evaluating heart structure and function in cardiology. In this work, we use BO to optimize the architectural and training-related hyperparameters of a previously published deep fully convolutional neural network model for multi-structure segmentation in echocardiography. In a fair comparison, the resulting model outperforms this recent state-of-the-art model on the annotated CAMUS dataset in both apical two- and four-chamber echo views. We report mean Dice overlaps of 0.95, 0.96, and 0.93 on left ventricular (LV) endocardium, LV epicardium, and left atrium, respectively. We also observe significant improvement in derived clinical indices, including smaller median absolute errors for LV end-diastolic volume (4.9 mL vs. 6.7 mL), end-systolic volume (3.1 mL vs. 5.2 mL), and ejection fraction (2.6% vs. 3.7%), and much tighter limits of agreement, which were already within inter-rater variability for non-contrast echo. These results demonstrate the benefits of BO for echocardiography segmentation over a recent state-of-the-art framework, although validation using large-scale independent clinical data is required.
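For readers unfamiliar with the metrics quoted in this abstract, the following minimal sketch shows the standard definitions of Dice overlap and ejection fraction. It is not the authors' code: the function names `dice_overlap` and `ejection_fraction`, the nested-list mask representation, and the example values are assumptions made purely for illustration.

```python
def dice_overlap(mask_a, mask_b):
    """Dice overlap 2|A ∩ B| / (|A| + |B|) for two binary masks.

    Masks are nested lists of 0/1 values with the same shape
    (a hypothetical representation chosen for this sketch).
    """
    flat_a = [bool(v) for row in mask_a for v in row]
    flat_b = [bool(v) for row in mask_b for v in row]
    intersection = sum(a and b for a, b in zip(flat_a, flat_b))
    total = sum(flat_a) + sum(flat_b)
    # Two empty masks are treated as a perfect match by convention.
    return 1.0 if total == 0 else 2.0 * intersection / total


def ejection_fraction(edv_ml, esv_ml):
    """Ejection fraction in percent from end-diastolic and end-systolic volumes (mL)."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv_ml - esv_ml) / edv_ml


# Illustrative values only; these are not taken from the paper.
predicted = [[1, 1], [0, 0]]
reference = [[1, 0], [0, 0]]
print(dice_overlap(predicted, reference))
print(ejection_fraction(120.0, 50.0))
```

A Dice value of 1 means the predicted and reference masks coincide exactly, while 0 means they share no pixels. Ejection fraction depends on both volumes, so errors in either segmentation-derived volume carry through to it.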

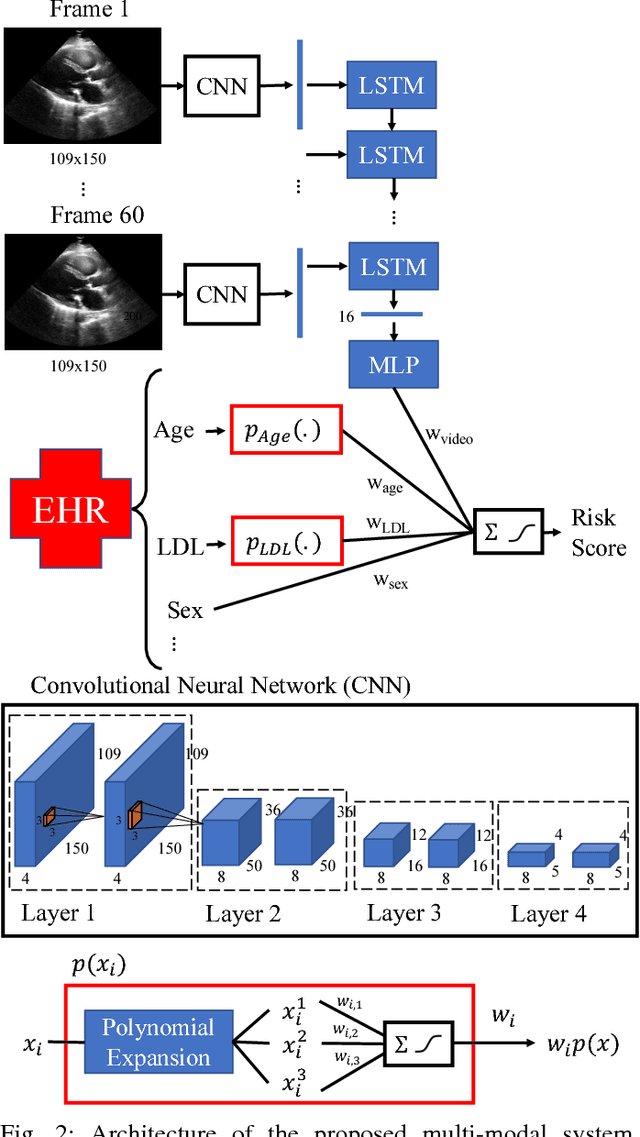

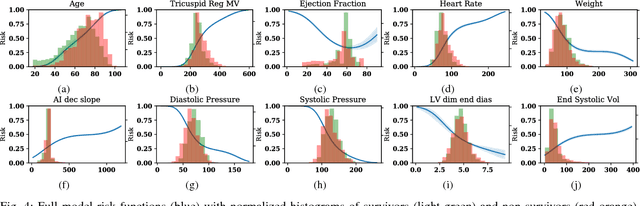

Interpretable Neural Networks for Predicting Mortality Risk using Multi-modal Electronic Health Records

Jan 23, 2019

Abstract: We present an interpretable neural network for predicting an important clinical outcome (1-year mortality) from multi-modal Electronic Health Record (EHR) data. Our approach builds on prior multi-modal machine learning models by enabling visualization of how individual factors contribute to the overall outcome risk, assuming other factors remain constant, which was previously impossible. We demonstrate the value of this approach using a large multi-modal clinical dataset including both EHR data and 31,278 echocardiographic videos of the heart from 26,793 patients. We generated separate models for (i) clinical data only (CD) (e.g., age, sex, diagnoses, and laboratory values), (ii) numeric variables derived from the videos, which we call echocardiography-derived measures (EDM), and (iii) CD+EDM+raw videos (pixel data). The interpretable multi-modal model maintained performance compared to non-interpretable models (Random Forest, XGBoost), and also performed significantly better than a model using a single modality (average AUC=0.82). The new model also facilitated clinically relevant insights and multi-modal variable importance rankings, which were previously impossible.
