Linas Vepstas

Sheaves: A Topological Approach to Big Data

Jan 04, 2019

Abstract: This document develops general concepts useful for extracting knowledge embedded in large graphs or datasets that have pair-wise relationships, such as cause-effect-type relations. Almost no underlying assumptions are made, other than that the data can be presented in terms of pair-wise relationships between objects/events. This assumption is used to mine for patterns in the dataset, defining a reduced graph or dataset that boils down or concentrates information into a more compact form. The resulting extracted structure or set of patterns is manifestly symbolic in nature, as it captures and encodes the graph structure of the dataset in terms of a (generative) grammar. This structure is identified as having the formal mathematical structure of a sheaf. In essence, this paper introduces the basic concepts of sheaf theory into the domain of graphical datasets.

Symbol Grounding via Chaining of Morphisms

Mar 13, 2017

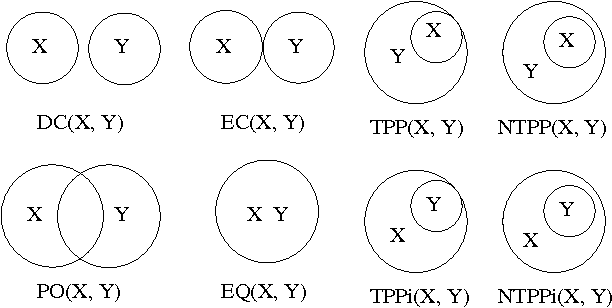

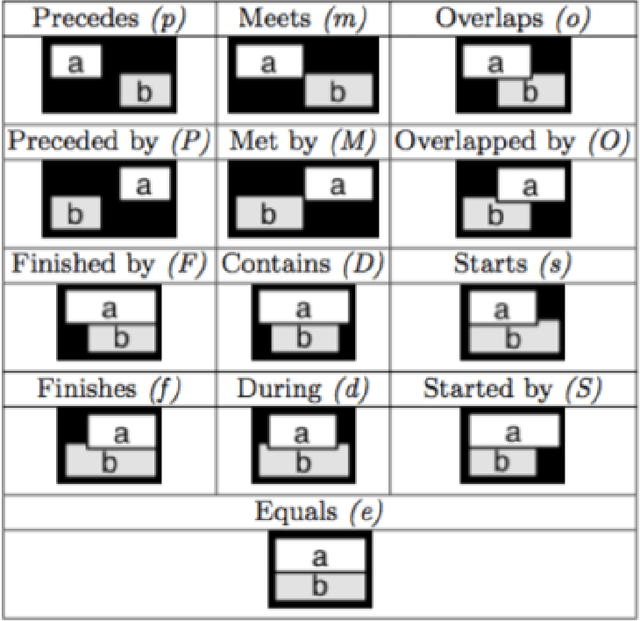

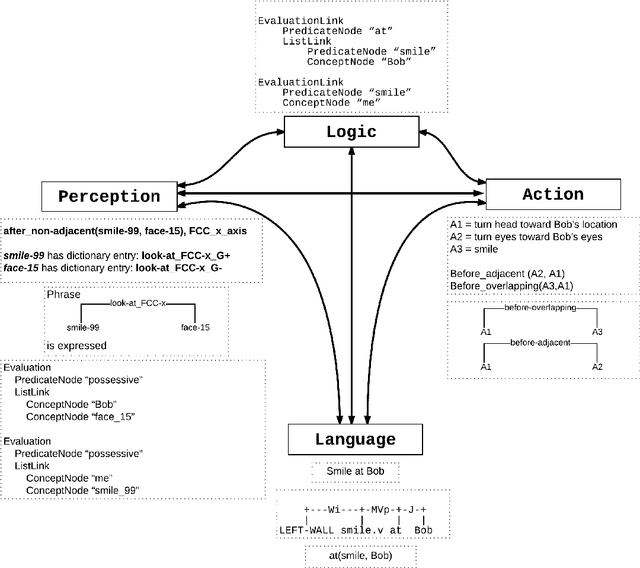

Abstract: A new model of symbol grounding is presented, in which the structures of natural language, logical semantics, perception and action are represented categorically, and symbol grounding is modeled via the composition of morphisms between the relevant categories. This model gives conceptual insight into the fundamentally systematic nature of symbol grounding, and also connects naturally to practical real-world AI systems in current research and commercial use. Specifically, it is argued that the structure of linguistic syntax can be modeled as a certain asymmetric monoidal category, as e.g. implicit in the link grammar formalism; the structure of spatiotemporal relationships and action plans can be modeled similarly using "image grammars" and "action grammars"; and common-sense logical semantic structure can be modeled using dependently-typed lambda calculus with uncertain truth values. Given these formalisms, the grounding of linguistic descriptions in spatiotemporal perceptions and coordinated actions consists of following morphisms from language to logic through to spacetime and body (for comprehension), and vice versa (for generation). The mapping is indicated between the spatial relationships in the Region Connection Calculus and Allen Interval Algebra and corresponding entries in the link grammar syntax parsing dictionary. Further, the abstractions introduced here are shown to naturally model the structures and systems currently being deployed in the context of using the OpenCog cognitive architecture to control Hanson Robotics humanoid robots.

Learning Language from a Large (Unannotated) Corpus

Jan 14, 2014

Abstract: A novel approach to the fully automated, unsupervised extraction of dependency grammars and associated syntax-to-semantic-relationship mappings from large text corpora is described. The suggested approach builds on the authors' prior work with the Link Grammar, RelEx and OpenCog systems, as well as on a number of prior papers and approaches from the statistical language learning literature. If successful, this approach would enable the mining of all the information needed to power a natural language comprehension and generation system, directly from a large, unannotated corpus.

Durkheim Project Data Analysis Report

Oct 24, 2013

Abstract: This report describes the suicidality prediction models created under the DARPA DCAPS program in association with the Durkheim Project [http://durkheimproject.org/]. The models were built primarily from unstructured text (free-format clinician notes) for several hundred patient records obtained from the Veterans Health Administration (VHA). The models were constructed using a genetic programming algorithm applied to bag-of-words and bag-of-phrases datasets. The influence of additional structured data was explored but was found to be minor. Given the small dataset size, classification between cohorts was high fidelity (98%). Cross-validation suggests these models are reasonably predictive, with an accuracy of 50% to 69% on five rotating folds, with ensemble averages of 58% to 67%. One particularly noteworthy result is that word-pairs can dramatically improve classification accuracy; but this is the case only when one of the words in the pair is already known to have a high predictive value. By contrast, the set of all possible word-pairs does not improve on a simple bag-of-words model.
