Likith Reddy

LRH-Net: A Multi-Level Knowledge Distillation Approach for Low-Resource Heart Network

Apr 11, 2022

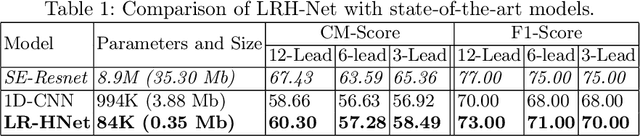

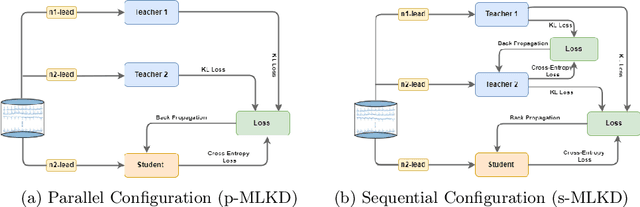

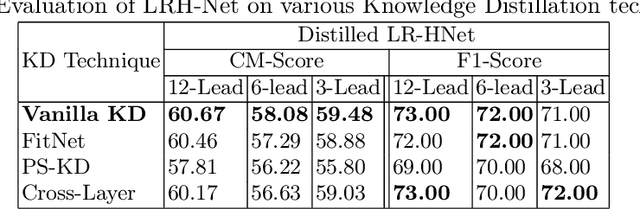

Abstract: An electrocardiogram (ECG) monitors the electrical activity generated by the heart and is used to detect fatal cardiovascular diseases (CVDs). Conventionally, to capture the precise electrical activity, clinical experts use multiple-lead ECGs (typically 12 leads). In recent times, large deep learning models have been used to detect these diseases. However, such models demand heavy compute resources, including large memory and long inference times. To alleviate these shortcomings, we propose a low-parameter model, named Low Resource Heart-Network (LRH-Net), which uses fewer leads to detect ECG anomalies in a resource-constrained environment. A multi-level knowledge distillation process is applied on top of that to improve the generalization performance of our proposed model. This process distills knowledge from higher-parameter (teacher) models trained on multiple leads into LRH-Net, which is trained on a reduced number of leads, to reduce the performance gap. The proposed model is evaluated on the PhysioNet-2020 challenge dataset with constrained input. LRH-Net has 106x fewer parameters than our teacher model for detecting CVDs. The performance of LRH-Net was scaled up to 3.2% and the inference time scaled down by 75% compared to the teacher model. In contrast to compute- and parameter-intensive deep learning techniques, the proposed methodology uses a subset of ECG leads with the low-resource LRH-Net, making it eminently suitable for deployment on edge devices.
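
The abstract does not give the loss used for distillation, so the following is only a minimal sketch of a generic single teacher-to-student distillation step (temperature-softened softmax targets combined with a hard-label cross-entropy). The function names, the `temperature` and `alpha` parameters, and the single-label softmax setting are all assumptions made for illustration; the paper's multi-level procedure and its ECG label setup may differ.

```python
import math

def softmax(logits, temperature=1.0):
    # Temperature-scaled softmax, shifted by the max for numerical stability.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) for two discrete distributions of equal length.
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    # Hypothetical helper: weighted sum of hard-label cross-entropy and
    # the KL term between softened teacher and student distributions.
    hard = -math.log(softmax(student_logits)[label] + 1e-12)
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft term's gradient scale comparable
    # across temperatures.
    soft = temperature ** 2 * kl_divergence(p_teacher, p_student)
    return alpha * hard + (1 - alpha) * soft

if __name__ == "__main__":
    teacher = [2.0, 0.0, -1.0]   # made-up logits from a larger model
    student = [0.5, 0.3, -0.2]   # made-up logits from a smaller model
    print(distillation_loss(student, teacher, label=0))
```

In this sketch, a student whose logits match the teacher's contributes no soft-target penalty, so only the hard-label term remains.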

mulEEG: A Multi-View Representation Learning on EEG Signals

Apr 07, 2022

Abstract: Modeling effective representations using multiple views that positively influence each other is challenging, and the existing methods perform poorly on Electroencephalogram (EEG) signals for sleep-staging tasks. In this paper, we propose a novel multi-view self-supervised method (mulEEG) for unsupervised EEG representation learning. Our method attempts to effectively utilize the complementary information available in multiple views to learn better representations. We introduce a diverse loss that further encourages complementary information across multiple views. With no access to labels, our method beats supervised training while outperforming multi-view baseline methods on transfer learning experiments carried out on sleep-staging tasks. We posit that our method was able to learn better representations by using complementary multi-views.

IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification

Apr 06, 2022

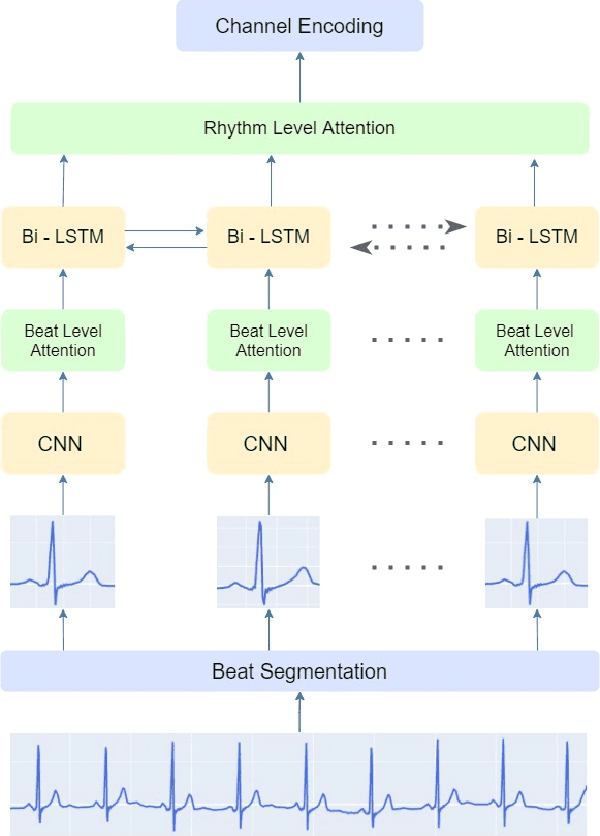

Abstract: Early detection of cardiovascular diseases is crucial for effective treatment, and an electrocardiogram (ECG) is pivotal for diagnosis. The accuracy of deep-learning-based methods for ECG signal classification has progressed in recent years to reach cardiologist-level performance. In clinical settings, a cardiologist makes a diagnosis based on the standard 12-channel ECG recording. Automatic analysis of ECG recordings from a multiple-channel perspective has not been given enough attention, so it is essential to analyze an ECG recording from a multiple-channel perspective. We propose a model that leverages the multiple-channel information available in the standard 12-channel ECG recordings and learns patterns at the beat, rhythm, and channel level. The experimental results show that our model achieved a macro-averaged ROC-AUC score of 0.9216, mean accuracy of 88.85%, and a maximum F1 score of 0.8057 on the PTB-XL dataset. The attention visualization results from the interpretable model are compared against the cardiologist's guidelines to validate the correctness and usability.
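
For readers unfamiliar with the macro-averaged ROC-AUC metric reported above, the sketch below shows one common way to compute it: the AUC is calculated separately for each class and the per-class values are averaged with equal weight. The function names and the toy labels and scores are illustrative assumptions, not the authors' evaluation code or data.

```python
def binary_auc(labels, scores):
    # AUC as the probability that a random positive outranks a random
    # negative, counting ties as half (pairwise Mann-Whitney form).
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def macro_auc(label_matrix, score_matrix):
    # Rows are recordings, columns are classes; every class gets equal
    # weight regardless of how many positives it has.
    n_classes = len(label_matrix[0])
    per_class = []
    for c in range(n_classes):
        labels = [row[c] for row in label_matrix]
        scores = [row[c] for row in score_matrix]
        per_class.append(binary_auc(labels, scores))
    return sum(per_class) / n_classes

if __name__ == "__main__":
    # Toy multi-label example with two classes (made-up values).
    y_true = [[1, 0], [0, 1], [1, 1], [0, 0]]
    y_score = [[0.9, 0.2], [0.1, 0.8], [0.8, 0.3], [0.3, 0.6]]
    print(macro_auc(y_true, y_score))
```

The pairwise form is quadratic in the number of examples, which is fine for a small illustration; a rank-based implementation would be preferable for a full dataset.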
