Liat Ben-Sira

SegQC: a segmentation network-based framework for multi-metric segmentation quality control and segmentation error detection in volumetric medical images

Nov 12, 2024

Abstract: Quality control of structure segmentation in volumetric medical images is important for identifying segmentation errors in clinical practice and for facilitating model development. This paper introduces SegQC, a novel framework for segmentation quality estimation and segmentation error detection. SegQC computes an estimate of the quality of a segmentation in volumetric scans and in their individual slices, and identifies possible segmentation error regions within a slice. The key components include: 1. SegQC-Net, a deep network that takes a scan and its segmentation mask as input and outputs segmentation error probabilities for each voxel in the scan; 2. three new segmentation quality metrics, two overlap metrics and a structure size metric, computed from the segmentation error probabilities; 3. a new method for detecting possible segmentation errors in scan slices, computed from the segmentation error probabilities. We introduce a new evaluation scheme to measure segmentation error discrepancies based on an expert radiologist's corrections of automatically produced segmentations, which yields smaller observer variability and is closer to actual segmentation errors. We demonstrate SegQC on three fetal structures in 198 fetal MRI scans: the fetal brain, the fetal body and the placenta. To assess the benefits of SegQC, we compare it to unsupervised Test Time Augmentation (TTA)-based quality estimation. Our studies indicate that SegQC outperforms TTA-based quality estimation in terms of Pearson correlation and MAE for fetal body and fetal brain segmentation. Our segmentation error detection method achieved recall and precision rates of 0.77 and 0.48 for the fetal body, and 0.74 and 0.55 for the fetal brain, respectively. SegQC improves the estimation of segmentation metrics for whole scans and individual slices, and also detects error regions.
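The abstract above compares quality estimators by Pearson correlation and MAE between estimated and actual quality scores. The following is a minimal sketch of those two measures, not the authors' evaluation code; the function names and the example Dice values are hypothetical and chosen only for illustration.

```python
import numpy as np


def pearson_r(estimated, reference):
    """Pearson correlation between estimated and reference quality scores."""
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    est_c = est - est.mean()
    ref_c = ref - ref.mean()
    denom = np.sqrt((est_c ** 2).sum() * (ref_c ** 2).sum())
    return float((est_c * ref_c).sum() / denom)


def mean_absolute_error(estimated, reference):
    """Mean absolute error between estimated and reference quality scores."""
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    return float(np.abs(est - ref).mean())


# Hypothetical per-scan Dice values (not taken from the paper).
estimated_dice = [0.95, 0.90, 0.80, 0.70]
reference_dice = [0.94, 0.92, 0.78, 0.73]

print("Pearson r:", pearson_r(estimated_dice, reference_dice))
print("MAE:", mean_absolute_error(estimated_dice, reference_dice))
```

A higher correlation together with a lower MAE would indicate that an estimator tracks the actual segmentation quality more closely.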

Test-time augmentation-based active learning and self-training for label-efficient segmentation

Aug 21, 2023

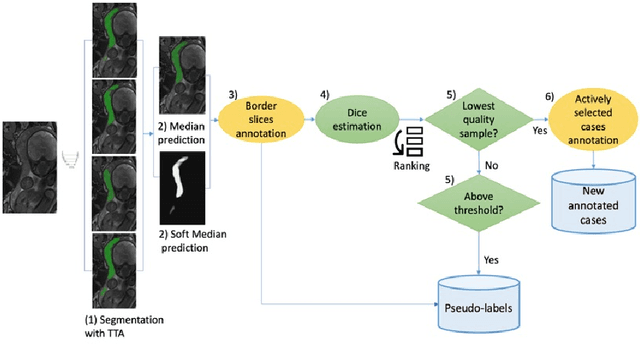

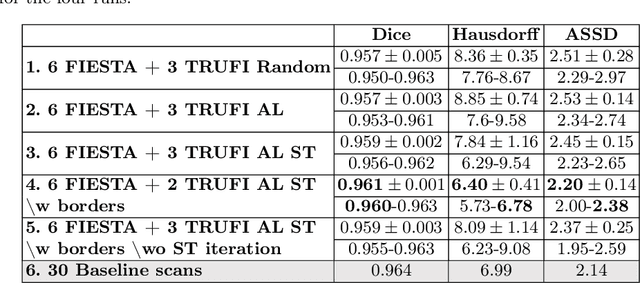

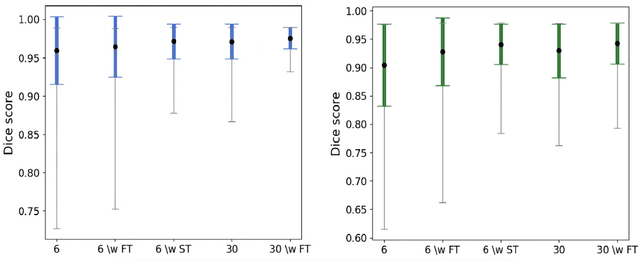

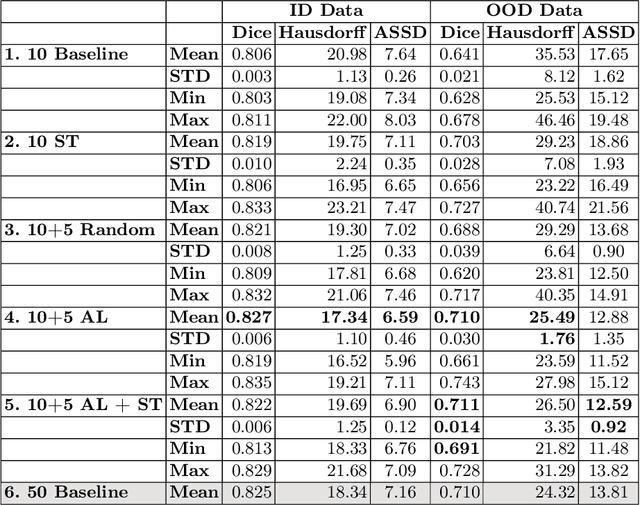

Abstract: Deep learning techniques depend on large datasets whose annotation is time-consuming. To reduce the annotation burden, self-training (ST) and active-learning (AL) methods have been developed, as well as methods that combine them in an iterative fashion. However, it remains unclear when each method is the most useful, and when it is advantageous to combine them. In this paper, we propose a new method that combines ST with AL using Test-Time Augmentations (TTA). First, TTA is performed on an initial teacher network. Then, cases for annotation are selected based on the lowest estimated Dice score. Cases with high estimated scores are used as soft pseudo-labels for ST. The selected annotated cases are trained with existing annotated cases and ST cases with border slice annotations. We demonstrate the method on MRI fetal body and placenta segmentation tasks with different data variability characteristics. Our results indicate that ST is highly effective for both tasks, boosting performance for in-distribution (ID) and out-of-distribution (OOD) data. However, while self-training improved the performance of single-sequence fetal body segmentation when combined with AL, it slightly deteriorated the performance of multi-sequence placenta segmentation on ID data. AL was helpful for the high-variability placenta data, but did not improve upon random selection for the single-sequence body data. For fetal body segmentation sequence transfer, combining AL with ST following an ST iteration yielded a Dice of 0.961 with only 6 original scans and 2 new sequence scans. Results using only 15 high-variability placenta cases were similar to those using 50 cases. Code is available at: https://github.com/Bella31/TTA-quality-estimation-ST-AL
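The case-selection step described in the abstract above can be sketched as follows. This is an illustrative sketch only, not the code from the linked repository: it assumes the TTA-estimated Dice scores are already available as a dictionary, and the function name, the `n_annotate` budget and the `pseudo_label_threshold` cutoff are hypothetical.

```python
def split_cases(estimated_dice, n_annotate, pseudo_label_threshold):
    """Split unlabeled cases by their estimated Dice score.

    estimated_dice: dict mapping case id -> estimated Dice (assumed to come from TTA).
    Returns (cases to send for annotation, cases to use as pseudo-labels).
    """
    # Rank cases from lowest to highest estimated quality.
    ranked = sorted(estimated_dice, key=estimated_dice.get)

    # Active learning: the lowest-scoring cases go to the annotator.
    to_annotate = ranked[:n_annotate]

    # Self-training: remaining cases with a high estimated score become pseudo-labels.
    pseudo_labeled = [
        case for case in ranked[n_annotate:]
        if estimated_dice[case] >= pseudo_label_threshold
    ]
    return to_annotate, pseudo_labeled


# Hypothetical scores for four unlabeled scans.
scores = {"case_a": 0.62, "case_b": 0.95, "case_c": 0.81, "case_d": 0.97}
annotate, pseudo = split_cases(scores, n_annotate=1, pseudo_label_threshold=0.9)
print(annotate)  # ['case_a']
print(pseudo)    # ['case_b', 'case_d']
```

Cases that are neither among the lowest-scoring nor above the threshold (here `case_c`) are left out of both sets in this sketch.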

Automatic fetal fat quantification from MRI

Sep 08, 2022

Abstract: Normal fetal adipose tissue (AT) development is essential for perinatal well-being. AT, or simply fat, stores energy in the form of lipids. Malnourishment may result in excessive or depleted adiposity. Although previous studies showed a correlation between the amount of AT and perinatal outcome, prenatal assessment of AT is limited by a lack of quantitative methods. Using magnetic resonance imaging (MRI), 3D fat- and water-only images of the entire fetus can be obtained from two-point Dixon images to enable AT lipid quantification. This paper is the first to present a methodology for developing a deep learning (DL)-based method for fetal fat segmentation based on Dixon MRI. It optimizes radiologists' manual fetal fat delineation time to produce an annotated training dataset. It consists of two steps: 1) model-based semi-automatic fetal fat segmentations, reviewed and corrected by a radiologist; 2) automatic fetal fat segmentation using DL networks trained on the resulting annotated dataset. Three DL networks were trained. We show a significant improvement in segmentation times (3:38 hours to < 1 hour) and observer variability (Dice of 0.738 to 0.906) compared to manual segmentation. Automatic segmentation of 24 test cases with the 3D Residual U-Net, nn-UNet and SWIN-UNetR transformer networks yields mean Dice scores of 0.863, 0.787 and 0.856, respectively. These results are better than the manual observer variability, and comparable to automatic adult and pediatric fat segmentation. A radiologist reviewed and corrected six new independent cases segmented using the best-performing network, resulting in a Dice score of 0.961 and a significantly reduced correction time of 15:20 minutes. Using these novel segmentation methods and a short MRI acquisition time, whole-body subcutaneous lipids can be quantified for individual fetuses in the clinic and in large-cohort research.
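The Dice scores reported in the abstract above measure the overlap between two segmentations, for example two observers' delineations or an automatic result and its corrected version. A minimal sketch of the standard Dice coefficient for binary masks follows; the function name and the toy masks are illustrative, and returning 1.0 for two empty masks is a convention assumed here rather than something stated in the abstract.

```python
import numpy as np


def dice_score(mask_a, mask_b):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return float(2.0 * np.logical_and(a, b).sum() / total)


# Toy 2x2 masks: one overlapping voxel, three foreground voxels in total.
observer_1 = [[1, 1], [0, 0]]
observer_2 = [[1, 0], [0, 0]]
print(dice_score(observer_1, observer_2))
```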

Automatic linear measurements of the fetal brain on MRI with deep neural networks

Jun 15, 2021

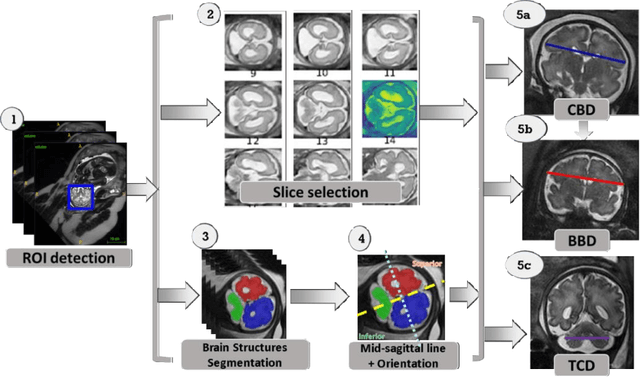

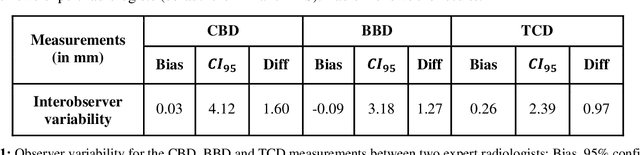

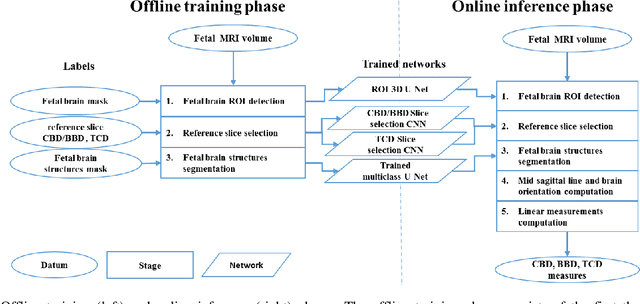

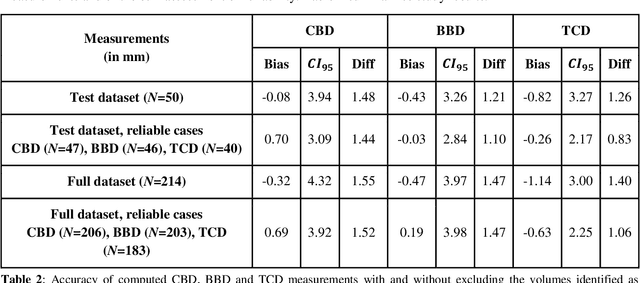

Abstract: Timely, accurate and reliable assessment of fetal brain development is essential to reduce short- and long-term risks to fetus and mother. Fetal MRI is increasingly used for fetal brain assessment. Three key biometric linear measurements important for fetal brain evaluation are Cerebral Biparietal Diameter (CBD), Bone Biparietal Diameter (BBD), and Trans-Cerebellum Diameter (TCD). They are obtained manually by expert radiologists on reference slices, which is time-consuming and prone to human error. The aim of this study was to develop a fully automatic method for computing the CBD, BBD and TCD measurements from fetal brain MRI. The input is fetal brain MRI volumes, which may include the fetal body and the mother's abdomen. The outputs are the measurement values and the reference slices on which the measurements were computed. The method, which follows the manual measurement principle, consists of five stages: 1) computation of a Region Of Interest that includes the fetal brain with an anisotropic 3D U-Net classifier; 2) reference slice selection with a Convolutional Neural Network; 3) slice-wise fetal brain structure segmentation with a multiclass U-Net classifier; 4) computation of the fetal brain midsagittal line and fetal brain orientation; and 5) computation of the measurements. Experimental results on 214 volumes for CBD, BBD and TCD measurements yielded a mean $L_1$ difference of 1.55mm, 1.45mm and 1.23mm respectively, and a Bland-Altman 95% confidence interval ($CI_{95}$) of 3.92mm, 3.98mm and 2.25mm respectively. These results are similar to the manual inter-observer variability. The proposed automatic method for computing biometric linear measurements of the fetal brain from MR imaging achieves human-level performance. It has the potential to be a useful method for the assessment of fetal brain biometry in normal and pathological cases, and to improve routine clinical practice.
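The agreement measures quoted in the abstract above can be illustrated with a short sketch. It assumes that the reported $CI_{95}$ is the half-width of the Bland-Altman limits of agreement, that is, 1.96 times the standard deviation of the automatic-minus-manual differences; the abstract does not spell out the exact definition, so treat that as an assumption. The function name and the measurement values are hypothetical.

```python
import numpy as np


def agreement_stats(automatic_mm, manual_mm):
    """Return (mean L1 difference, bias, Bland-Altman 95% half-width) in mm."""
    auto = np.asarray(automatic_mm, dtype=float)
    manual = np.asarray(manual_mm, dtype=float)
    diff = auto - manual

    mean_l1 = float(np.abs(diff).mean())          # mean absolute difference
    bias = float(diff.mean())                     # systematic offset
    half_width = float(1.96 * diff.std(ddof=1))   # assumed CI_95 definition
    return mean_l1, bias, half_width


# Hypothetical measurements in mm (not from the paper).
automatic = [10.0, 12.0, 14.0]
manual = [9.0, 13.0, 14.0]
mean_l1, bias, half_width = agreement_stats(automatic, manual)
print(mean_l1, bias, half_width)
```

Under this assumed definition, the limits of agreement would be `bias - half_width` and `bias + half_width`.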
