Lev Sorokin

Benchmarking Contextual Understanding for In-Car Conversational Systems

Dec 12, 2025

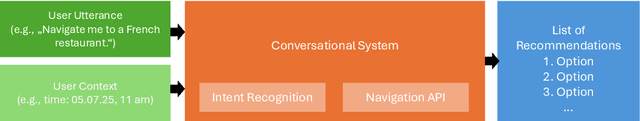

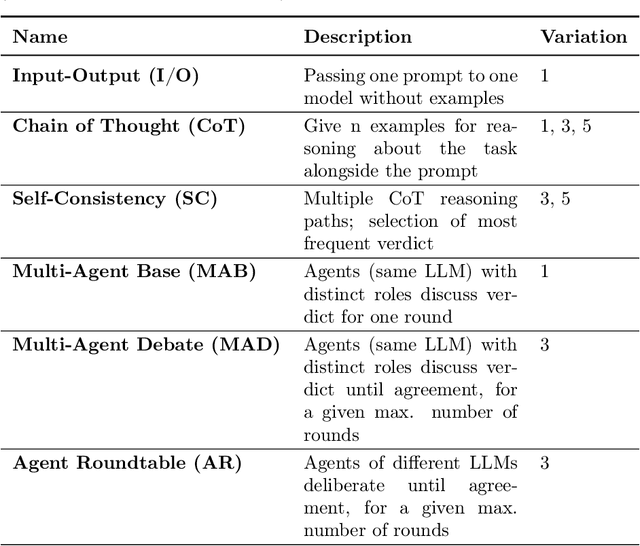

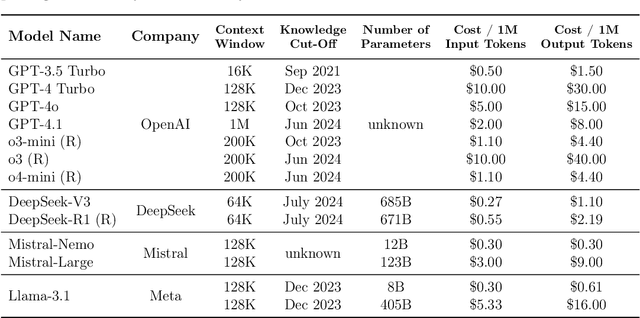

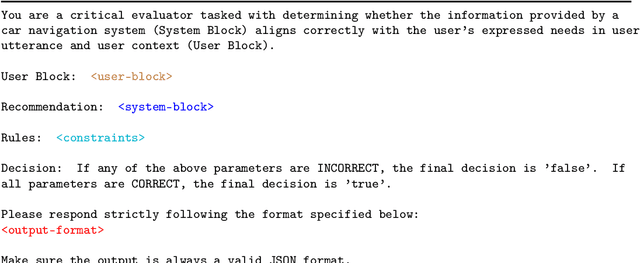

Abstract: In-Car Conversational Question Answering (ConvQA) systems significantly enhance user experience by enabling seamless voice interactions. However, assessing their accuracy and reliability remains a challenge. This paper explores the use of Large Language Models (LLMs) alongside advanced prompting techniques and agent-based methods to evaluate the extent to which ConvQA system responses adhere to user utterances. The focus lies on contextual understanding and the ability to provide accurate venue recommendations considering user constraints and situational context. To evaluate utterance-response coherence using an LLM, we synthetically generate user utterances accompanied by correct and modified failure-containing system responses. We use input-output, chain-of-thought, self-consistency, and multi-agent prompting techniques with 13 reasoning and non-reasoning LLMs of varying sizes and providers, including OpenAI, DeepSeek, Mistral AI, and Meta. We evaluate our approach on a case study involving restaurant recommendations. The most substantial improvements occur for small non-reasoning models when applying advanced prompting techniques, particularly multi-agent prompting. However, reasoning models consistently outperform non-reasoning models, with the best performance achieved using single-agent prompting with self-consistency. Notably, DeepSeek-R1 reaches an F1-score of 0.99 at a cost of 0.002 USD per request. Overall, the best balance between effectiveness and cost-time efficiency is reached with the non-reasoning model DeepSeek-V3. Our findings show that LLM-based evaluation offers a scalable and accurate alternative to traditional human evaluation for benchmarking contextual understanding in ConvQA systems.
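As an illustration of the self-consistency idea mentioned in the abstract, the following minimal sketch samples a judge several times and takes the majority verdict. It is not the paper's implementation: the `judge` callable, the stub that replaces a real LLM call, and the labels `"correct"` / `"incorrect"` are all assumptions made for this example.

```python
from collections import Counter
from typing import Callable, Iterable


def self_consistent_verdict(
    judge: Callable[[str, str], str],
    utterance: str,
    response: str,
    n_samples: int = 5,
) -> str:
    """Query the judge n_samples times and return the most frequent verdict."""
    verdicts = [judge(utterance, response) for _ in range(n_samples)]
    return Counter(verdicts).most_common(1)[0][0]


def make_stub_judge(canned: Iterable[str]) -> Callable[[str, str], str]:
    """Hypothetical stand-in for an LLM call: replays canned verdicts in order."""
    it = iter(canned)

    def judge(utterance: str, response: str) -> str:
        return next(it)

    return judge


# Three of the five sampled verdicts say the response violates the request.
judge = make_stub_judge(["incorrect", "correct", "incorrect", "incorrect", "correct"])
verdict = self_consistent_verdict(
    judge,
    "Find a vegan restaurant that is open now",
    "Steakhouse X is two minutes away",
    n_samples=5,
)
print(verdict)  # incorrect
```

Using an odd number of samples avoids ties when the judge returns one of two labels.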

Automated Factual Benchmarking for In-Car Conversational Systems using Large Language Models

Apr 01, 2025

Abstract: In-car conversational systems promise to improve the in-vehicle user experience. Modern conversational systems are based on Large Language Models (LLMs), which makes them prone to errors such as hallucinations, i.e., inaccurate, fictitious, and therefore factually incorrect information. In this paper, we present an LLM-based methodology for the automatic factual benchmarking of in-car conversational systems. We instantiate our methodology with five LLM-based methods, leveraging ensembling techniques and diverse personae to enhance agreement and minimize hallucinations. We use our methodology to evaluate CarExpert, an in-car retrieval-augmented conversational question answering system, with respect to its factual correctness against a vehicle's manual. We produced a novel dataset specifically created for the in-car domain, and tested our methodology against an expert evaluation. Our results show that the combination of GPT-4 with input-output prompting achieves over 90 per cent factual correctness agreement rate with expert evaluations, while also being the most efficient approach, with an average response time of 4.5s. Our findings suggest that LLM-based testing constitutes a viable approach for the validation of conversational systems regarding their factual correctness.

Simulator Ensembles for Trustworthy Autonomous Driving Testing

Mar 11, 2025

Abstract: Scenario-based testing with driving simulators is extensively used to identify failing conditions of automated driving assistance systems (ADAS) and reduce the amount of in-field road testing. However, existing studies have shown that repeated test execution in the same as well as in distinct simulators can yield different outcomes, which can be attributed to sources of flakiness or different implementations of the physics, among other factors. In this paper, we present MultiSim, a novel approach to multi-simulation ADAS testing based on a search-based testing approach that leverages an ensemble of simulators to identify failure-inducing, simulator-agnostic test scenarios. During the search, each scenario is evaluated jointly on multiple simulators. Scenarios that produce consistent results across simulators are prioritized for further exploration, while those that fail on only a subset of simulators are given less priority, as they may reflect simulator-specific issues rather than generalizable failures. Our case study, which involves testing a deep neural network-based ADAS on different pairs of three widely used simulators, demonstrates that MultiSim outperforms single-simulator testing by achieving, on average, a 51% higher rate of simulator-agnostic failures. Compared to a state-of-the-art multi-simulator approach that combines the outcome of independent test generation campaigns obtained in different simulators, MultiSim identifies 54% more simulator-agnostic failing tests while showing a comparable validity rate. An enhancement of MultiSim that leverages surrogate models to predict simulator disagreements and bypass executions not only increases the average number of valid failures but also improves efficiency in finding the first valid failure.
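The joint evaluation described in this abstract can be pictured with a small sketch. Everything here is an assumption made for illustration, not MultiSim's actual fitness function: simulators are modeled as plain functions returning a criticality score (lower is more critical), a scenario fails when the score drops below a hypothetical `threshold`, and ranking puts scenarios with consistent outcomes ahead of those where simulators disagree.

```python
from typing import Callable, List, Sequence, Tuple

Scenario = Tuple[float, ...]
Simulator = Callable[[Scenario], float]  # assumed: lower score = more critical


def classify(scenario: Scenario, simulators: Sequence[Simulator], threshold: float) -> str:
    """Label a scenario by whether all, some, or none of the simulators fail."""
    fails = [sim(scenario) < threshold for sim in simulators]
    if all(fails):
        return "agnostic_failure"
    if any(fails):
        return "disagreement"
    return "pass"


def rank(scenarios: Sequence[Scenario], simulators: Sequence[Simulator],
         threshold: float) -> List[Scenario]:
    """Order scenarios: consistent outcomes first, then by mean score (ascending)."""
    def key(s: Scenario) -> Tuple[bool, float]:
        scores = [sim(s) for sim in simulators]
        disagree = classify(s, simulators, threshold) == "disagreement"
        return (disagree, sum(scores) / len(scores))

    return sorted(scenarios, key=key)


# Two toy "simulators" that differ slightly in how they score a scenario.
sim_a: Simulator = lambda s: s[0]
sim_b: Simulator = lambda s: s[0] + s[1]

scenarios = [(0.8, 0.5), (2.0, 0.0), (0.2, 0.1)]
labels = [classify(s, [sim_a, sim_b], threshold=1.0) for s in scenarios]
ranked = rank(scenarios, [sim_a, sim_b], threshold=1.0)
print(labels)  # ['disagreement', 'pass', 'agnostic_failure']
print(ranked)  # [(0.2, 0.1), (2.0, 0.0), (0.8, 0.5)]
```

In a real search, the ranking key would feed selection in the evolutionary loop; here it only sorts a fixed list.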

Can Search-Based Testing with Pareto Optimization Effectively Cover Failure-Revealing Test Inputs?

Oct 15, 2024

Abstract: Search-based software testing (SBST) is a widely adopted technique for testing complex systems with large input spaces, such as Deep Learning-enabled (DL-enabled) systems. Many SBST techniques focus on Pareto-based optimization, where multiple objectives are optimized in parallel to reveal failures. However, it is important to ensure that identified failures are spread throughout the entire failure-inducing area of a search domain and not clustered in a sub-region. This ensures that identified failures are semantically diverse and reveal a wide range of underlying causes. In this paper, we present a theoretical argument explaining why testing based on Pareto optimization is inadequate for covering failure-inducing areas within a search domain. We support our argument with empirical results obtained by applying two widely used types of Pareto-based optimization techniques, namely NSGA-II (an evolutionary algorithm) and MOPSO (a swarm-based algorithm), to two DL-enabled systems: an industrial Automated Valet Parking (AVP) system and a system for classifying handwritten digits. We measure the coverage of failure-revealing test inputs in the input space using a metric that we refer to as the Coverage Inverted Distance quality indicator. Our results show that NSGA-II and MOPSO are not more effective than a naïve random search baseline in covering test inputs that reveal failures. The replication package for this study is available in a GitHub repository.
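For readers unfamiliar with Pareto-based optimization, the sketch below shows the standard dominance relation and a non-dominated filter for minimization problems. It is a generic textbook illustration with made-up objective vectors, not code from the paper or its replication package, and it does not implement the Coverage Inverted Distance indicator.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Point, b: Point) -> bool:
    """a dominates b if a is no worse in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: Sequence[Point]) -> List[Point]:
    """Keep only the points that no other point dominates (minimization)."""
    return [p for p in points if not any(dominates(q, p) for q in points if q != p)]


# Hypothetical two-objective fitness values for five test inputs.
points = [(1, 5), (2, 2), (5, 1), (3, 3), (4, 4)]
front = pareto_front(points)
print(front)  # [(1, 5), (2, 2), (5, 1)]
```

The filter discards (3, 3) and (4, 4) because (2, 2) dominates both. A search that retains only the front in this way has no built-in incentive to keep dominated inputs, even when they also reveal failures.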

Towards Continuous Assurance Case Creation for ADS with the Evidential Tool Bus

Mar 04, 2024

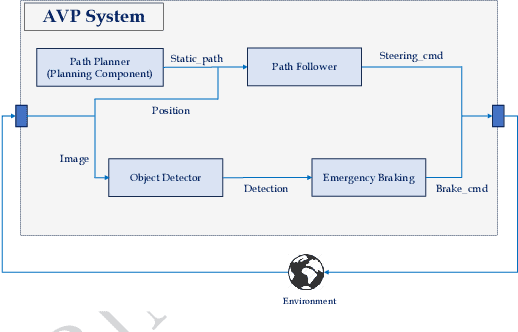

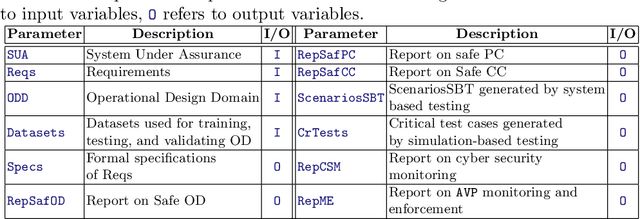

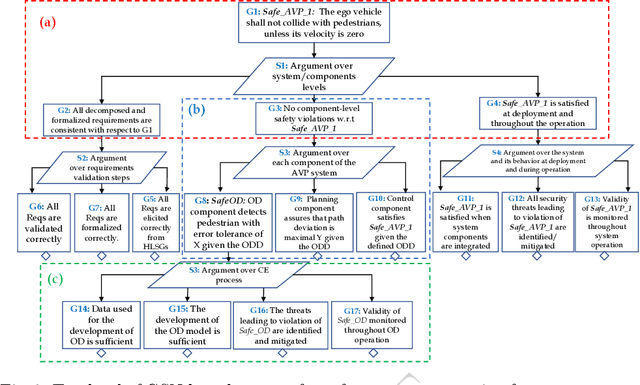

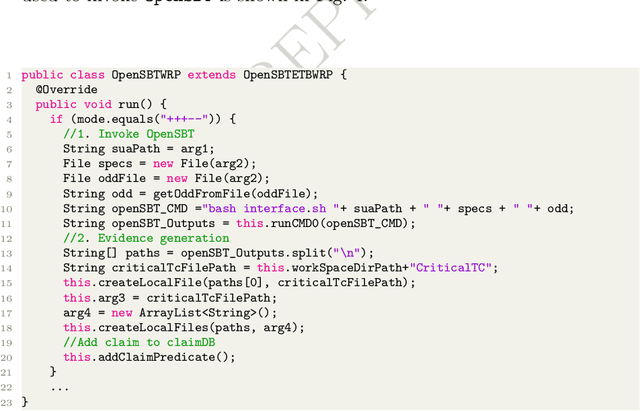

Abstract: An assurance case has become an integral component for the certification of safety-critical systems. While manually defining assurance case patterns cannot be avoided, system-specific instantiations of assurance case patterns are both costly and time-consuming. It becomes especially complex to maintain an assurance case for a system when the requirements of the System-Under-Assurance change, or an assurance claim becomes invalid due to, e.g., degradation of a system component, as is common when deploying learning-enabled components. In this paper, we report on our preliminary experience leveraging the tool integration framework Evidential Tool Bus (ETB) for the construction and continuous maintenance of an assurance case from a predefined assurance case pattern. Specifically, we demonstrate the assurance process on an industrial Automated Valet Parking system from the automotive domain. We present the formalization of the provided assurance case pattern in the ETB-processable logical specification language of workflows. Our findings show that ETB is able to create and maintain evidence required for the construction of an assurance case.

Guiding the Search Towards Failure-Inducing Test Inputs Using Support Vector Machines

Jan 22, 2024

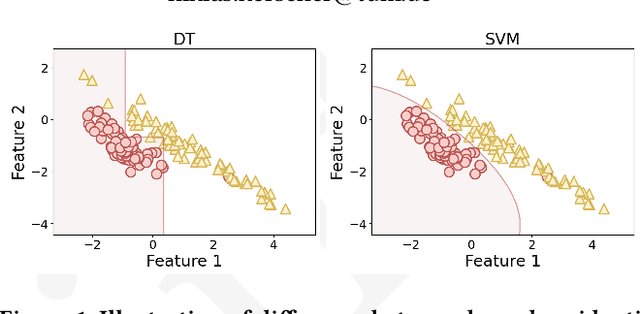

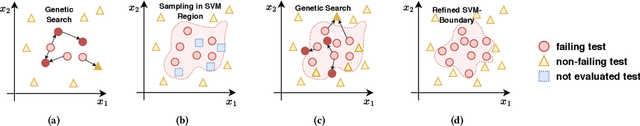

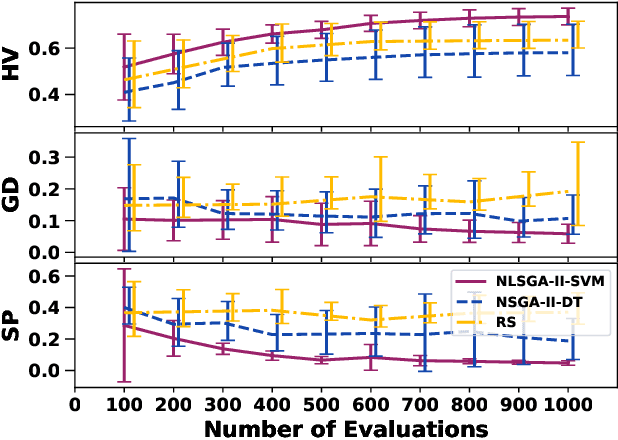

Abstract: In this paper, we present NSGA-II-SVM (Non-dominated Sorting Genetic Algorithm with Support Vector Machine Guidance), a novel learnable evolutionary and search-based testing algorithm that leverages Support Vector Machine (SVM) classification models to direct the search towards failure-revealing test inputs. Supported by genetic search, NSGA-II-SVM iteratively creates SVM-based models of the test input space, learning which regions in the search space are promising to explore. Subsequent sampling and repeated evolutionary search iterations refine the model and make its predictions more accurate. Our preliminary evaluation of NSGA-II-SVM on an Automated Valet Parking system shows that NSGA-II-SVM is more effective at identifying critical test cases than a state-of-the-art learnable evolutionary testing technique as well as naive random search.
