Leqi Geng

FAIR: Frequency-aware Image Restoration for Industrial Visual Anomaly Detection

Sep 13, 2023

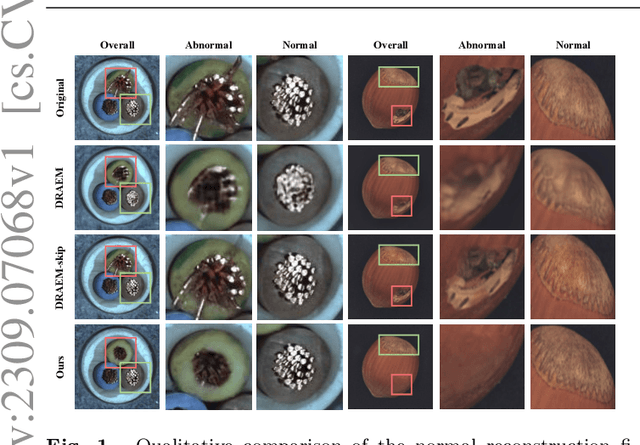

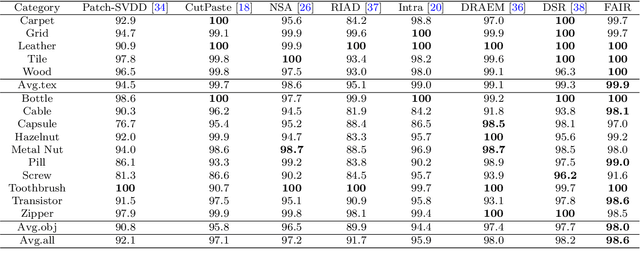

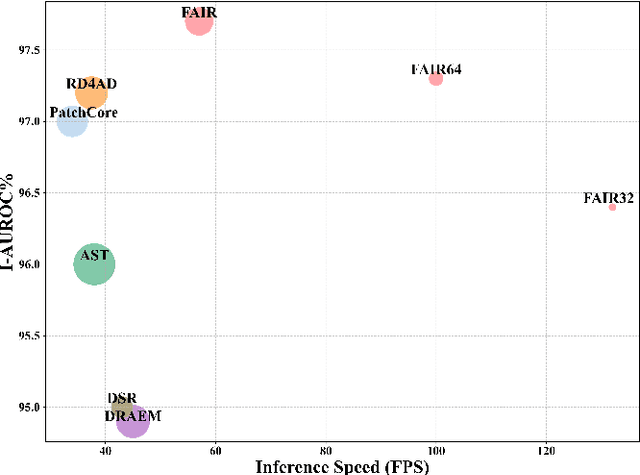

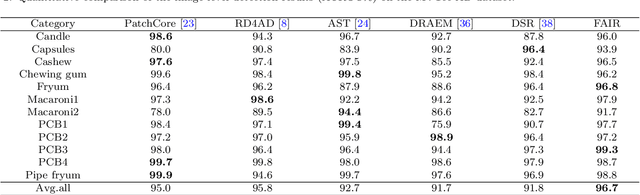

Abstract: Image reconstruction-based anomaly detection models are widely explored in industrial visual inspection. However, existing models usually suffer from a trade-off between normal reconstruction fidelity and abnormal reconstruction distinguishability, which degrades detection performance. In this paper, we find that this trade-off can be better mitigated by leveraging the distinct frequency biases between normal and abnormal reconstruction errors. To this end, we propose Frequency-aware Image Restoration (FAIR), a novel self-supervised image restoration task that restores images from their high-frequency components. It enables precise reconstruction of normal patterns while mitigating unfavorable generalization to anomalies. Using only a simple vanilla UNet, FAIR achieves state-of-the-art performance with higher efficiency on various defect detection datasets. Code: https://github.com/liutongkun/FAIR.
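The abstract does not say how the high-frequency components are extracted, so the following is only a minimal sketch of one common way to obtain them: subtract a low-pass (box-blurred) copy from the image and keep the residual. The function names `box_blur` and `high_frequency`, the `radius` parameter, and the choice of a box filter are assumptions for illustration, not the authors' implementation; the code is dependency-free, whereas a real pipeline would more likely use NumPy or PyTorch.

```python
def box_blur(img, radius=1):
    """Low-pass filter: mean over a (2*radius+1)^2 window, truncated at the borders.

    `img` is a grayscale image given as a list of rows of floats.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def high_frequency(img, radius=1):
    """High-frequency residual: the image minus its low-pass (blurred) version."""
    low = box_blur(img, radius)
    return [
        [img[y][x] - low[y][x] for x in range(len(img[0]))]
        for y in range(len(img))
    ]


if __name__ == "__main__":
    # A vertical step edge: the residual is zero in flat areas and non-zero at the edge.
    step = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
    for row in high_frequency(step):
        print([round(v, 3) for v in row])
```

In a restoration setup of the kind the abstract describes, a residual like this would be the network input and the original image the training target; flat regions carry no signal in the input, so the network has to learn the normal appearance to fill them in.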

Reconstruction from edge image combined with color and gradient difference for industrial surface anomaly detection

Oct 26, 2022

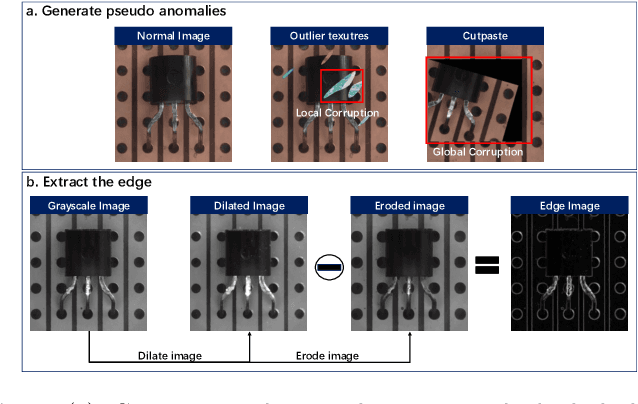

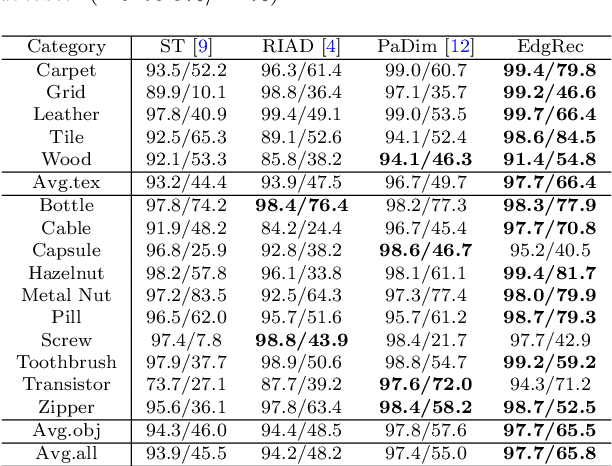

Abstract: Reconstruction-based methods are widely explored in industrial visual anomaly detection. Such methods commonly require the model to reconstruct the normal patterns well but fail on the anomalies, so that anomalies can be detected by evaluating the reconstruction errors. In practice, however, it is usually difficult to control the generalization boundary of the model. A model with an overly strong generalization capability can reconstruct even the abnormal regions well, making them less distinguishable, while a model with a poor generalization capability cannot reconstruct the changeable high-frequency components in the normal regions, which ultimately leads to false positives. To tackle this issue, we propose a new reconstruction network in which we reconstruct the original RGB image from its gray value edges (EdgRec). Specifically, this is achieved by a UNet-type denoising autoencoder with skip connections. The input edges and skip connections preserve the high-frequency information in the original image. Meanwhile, the proposed restoration task forces the network to memorize the normal low-frequency and color information. In addition, the denoising design prevents the model from directly copying the original high-frequency components. To evaluate the anomalies, we further propose a new interpretable hand-crafted evaluation function that considers both the color and gradient differences. Our method achieves competitive results on the challenging benchmark MVTec AD (97.8% for detection and 97.7% for localization, AUROC). In addition, we conduct experiments on the MVTec 3D-AD dataset and show convincing results using RGB images only. Our code will be available at https://github.com/liutongkun/EdgRec.
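The abstract mentions an evaluation function that considers both color and gradient differences but does not give its formula. The sketch below shows one plausible shape such a score could take: a weighted sum of a per-pixel RGB distance and the difference in gradient magnitude between the original image and its reconstruction. The names `to_gray`, `grad_mag`, `anomaly_map`, the weight `alpha`, the Euclidean RGB distance, and the forward-difference gradient are all assumptions for illustration and are not taken from the EdgRec code.

```python
import math


def to_gray(img):
    """Average the RGB channels; `img` is a list of rows of (r, g, b) tuples."""
    return [[(r + g + b) / 3.0 for (r, g, b) in row] for row in img]


def grad_mag(gray):
    """Gradient magnitude from forward differences (zero difference at the far border)."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = gray[y][min(x + 1, w - 1)] - gray[y][x]
            gy = gray[min(y + 1, h - 1)][x] - gray[y][x]
            out[y][x] = math.hypot(gx, gy)
    return out


def anomaly_map(original, reconstruction, alpha=0.5):
    """Per-pixel score: alpha * color difference + (1 - alpha) * gradient difference.

    `alpha` is a hypothetical mixing weight, not a value from the paper.
    """
    g_orig = grad_mag(to_gray(original))
    g_rec = grad_mag(to_gray(reconstruction))
    h, w = len(original), len(original[0])
    scores = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            color = math.dist(original[y][x], reconstruction[y][x])
            grad = abs(g_orig[y][x] - g_rec[y][x])
            scores[y][x] = alpha * color + (1.0 - alpha) * grad
    return scores


if __name__ == "__main__":
    normal = [[(0.5, 0.5, 0.5)] * 3 for _ in range(3)]
    defect = [list(row) for row in normal]
    defect[1][1] = (1.0, 0.5, 0.5)  # one pixel the reconstruction fails to match
    for row in anomaly_map(defect, normal):
        print([round(v, 3) for v in row])
```

An image-level detection score could then be taken as, for example, the maximum of the map, while the map itself serves for localization; that aggregation is likewise an assumption here.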
