Leila Yousefi

An analysis of retracted papers in Computer Science

Jun 14, 2022

Abstract: Context: The retraction of research papers, for whatever reason, is a growing phenomenon. However, although retracted paper information is publicly available via publishers, it is somewhat distributed and inconsistent. Objective: The aim is to assess: (i) the extent and nature of retracted research in Computer Science (CS), (ii) the post-retraction citation behaviour of retracted works, and (iii) the potential impact on systematic reviews and mapping studies. Method: We analyse the Retraction Watch database and take citation information from the Web of Science and Google Scholar. Results: We find that of the 33,955 entries in the Retraction Watch database (16 May 2022), 2,816 are classified as CS, i.e., approximately 8.3%. For CS, 56% of retracted papers provide little or no information as to the reasons. This contrasts with 26% for other disciplines. There is also a remarkable disparity between different publishers, a tendency for multiple versions of a retracted paper over and above the Version of Record (VoR), and for new citations long after a paper is officially retracted. Conclusions: Unfortunately, retraction seems to be a sufficiently common outcome for a scientific paper that we as a research community need to take it more seriously, e.g., by standardising procedures and taxonomies across publishers and providing appropriate research tools. Finally, we recommend particular caution when undertaking secondary analyses and meta-analyses, which are at risk of becoming contaminated by these problem primary studies.

The Prevalence of Errors in Machine Learning Experiments

Sep 10, 2019

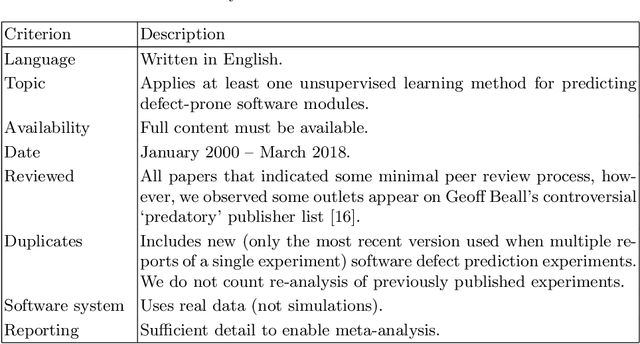

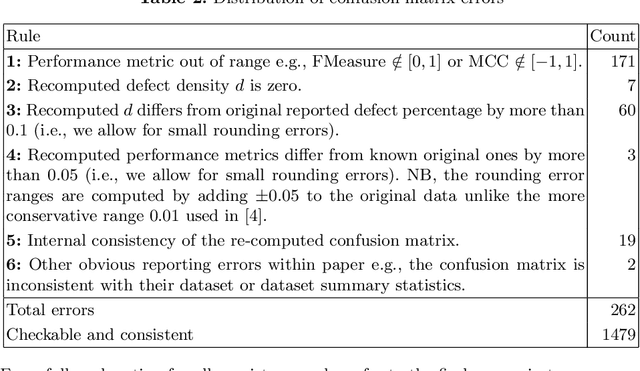

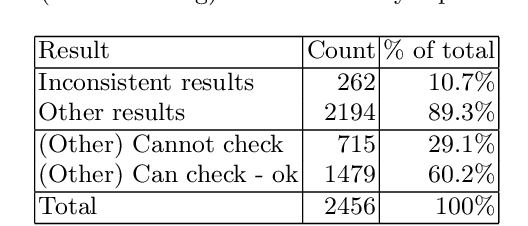

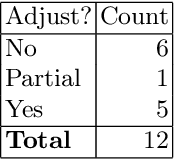

Abstract: Context: Conducting experiments is central to machine learning research in order to benchmark, evaluate and compare learning algorithms. Consequently, it is important that we conduct reliable, trustworthy experiments. Objective: We investigate the incidence of errors in a sample of machine learning experiments in the domain of software defect prediction. Our focus is simple arithmetical and statistical errors. Method: We analyse 49 papers describing 2456 individual experimental results from a previously undertaken systematic review comparing supervised and unsupervised defect prediction classifiers. We extract the confusion matrices and test for relevant constraints, e.g., the marginal probabilities must sum to one. We also check for multiple statistical significance testing errors. Results: We find that a total of 22 out of 49 papers contain demonstrable errors. Of these, 7 were statistical and 16 related to confusion matrix inconsistency (one paper contained both classes of error). Conclusions: Whilst some errors may be of a relatively trivial nature, e.g., transcription errors, their presence does not engender confidence. We strongly urge researchers to follow open science principles so that errors can be more easily detected and corrected, and so that, as a community, we reduce this worryingly high error rate in our computational experiments.
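
As a rough illustration of the kind of constraint check the Method describes, the following Python sketch tests a binary confusion matrix expressed as cell proportions. The function name `find_inconsistencies`, the tolerance `tol`, and the sample values are assumptions made for illustration; they are not the authors' actual procedure or data.

```python
from math import isclose


def find_inconsistencies(tp, fp, fn, tn, recall=None, precision=None, tol=0.01):
    """Return a list of messages describing constraint violations.

    tp, fp, fn, tn are the cell proportions of a binary confusion matrix.
    recall and precision are the values reported alongside it, if any.
    tol is a hypothetical allowance for rounding in the reported figures.
    """
    problems = []

    # Every cell is a probability, so it must lie in [0, 1].
    for name, value in (("tp", tp), ("fp", fp), ("fn", fn), ("tn", tn)):
        if not 0.0 <= value <= 1.0:
            problems.append(f"{name}={value} is outside [0, 1]")

    # The four cells partition all cases, so they must sum to one.
    total = tp + fp + fn + tn
    if not isclose(total, 1.0, abs_tol=tol):
        problems.append(f"cells sum to {total:.3f}, expected 1")

    # Reported metrics must agree with the values the cells imply.
    if recall is not None and tp + fn > 0:
        implied = tp / (tp + fn)
        if not isclose(recall, implied, abs_tol=tol):
            problems.append(f"recall {recall} != implied {implied:.3f}")
    if precision is not None and tp + fp > 0:
        implied = tp / (tp + fp)
        if not isclose(precision, implied, abs_tol=tol):
            problems.append(f"precision {precision} != implied {implied:.3f}")

    return problems


# Made-up example values: the first matrix is internally consistent,
# the second violates both the sum constraint and the reported recall.
consistent = find_inconsistencies(0.30, 0.10, 0.10, 0.50, recall=0.75, precision=0.75)
inconsistent = find_inconsistencies(0.30, 0.10, 0.10, 0.60, recall=0.90)
```

An empty list means no violation was found within the tolerance. It does not show that the reported result is correct, only that it passes these particular checks.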
