Leila Tavakoli

Online and Offline Evaluation in Search Clarification

Mar 14, 2024

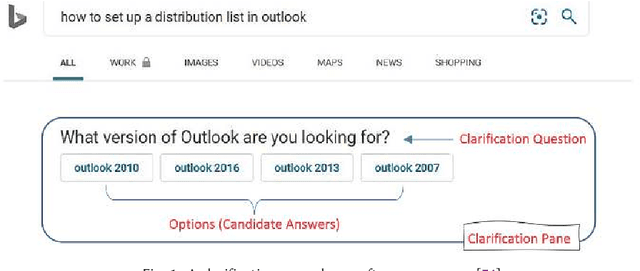

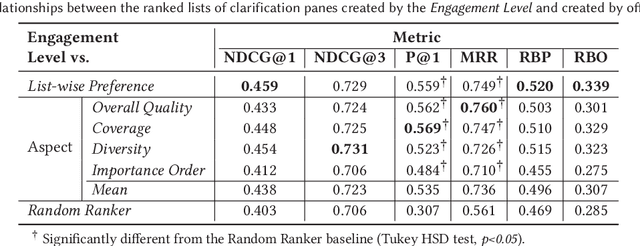

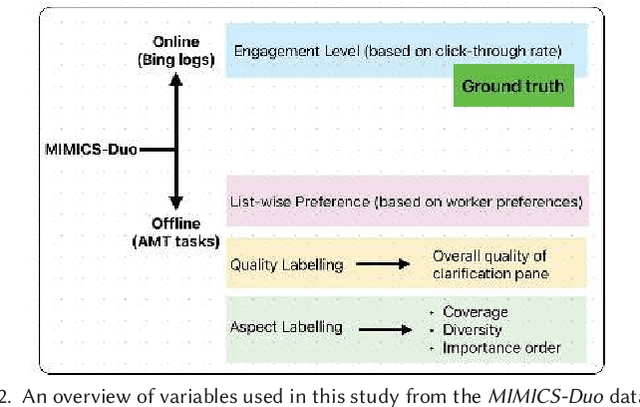

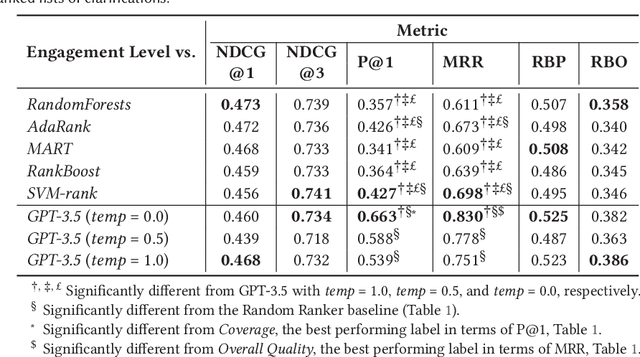

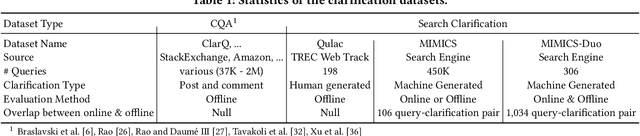

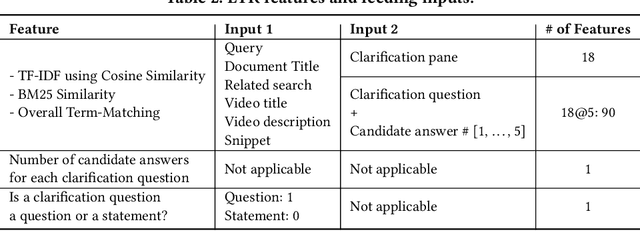

Abstract: The effectiveness of clarification question models in engaging users within search systems is currently constrained, casting doubt on their overall usefulness. To improve the performance of these models, it is crucial to employ assessment approaches that encompass both real-time feedback from users (online evaluation) and the characteristics of clarification questions evaluated through human assessment (offline evaluation). However, the relationship between online and offline evaluations has been debated in information retrieval. This study aims to investigate how this discordance holds in search clarification. We use user engagement as ground truth and employ several offline labels to investigate to what extent the offline ranked lists of clarification resemble the ideal ranked lists based on online user engagement.

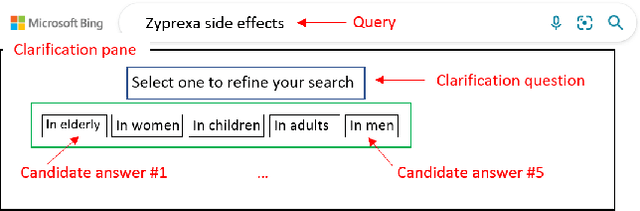

MIMICS-Duo: Offline & Online Evaluation of Search Clarification

Jun 09, 2022

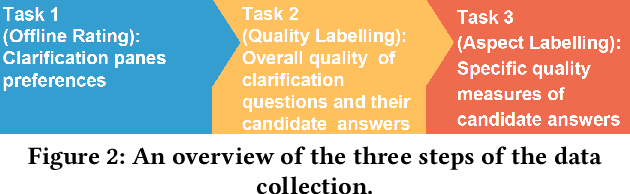

Abstract: Asking clarification questions is an active area of research; however, resources for training and evaluating search clarification methods are not sufficient. To address this issue, we describe MIMICS-Duo, a new freely available dataset of 306 search queries with multiple clarifications (a total of 1,034 query-clarification pairs). MIMICS-Duo contains fine-grained annotations on clarification questions and their candidate answers and enhances the existing MIMICS datasets by enabling multi-dimensional evaluation of search clarification methods, including online and offline evaluation. We conduct extensive analysis to demonstrate the relationship between offline and online search clarification datasets and outline several research directions enabled by MIMICS-Duo. We believe that this resource will help researchers better understand clarification in search.
