Laurence Illing Midgley

Flow Annealed Importance Sampling Bootstrap

Aug 03, 2022

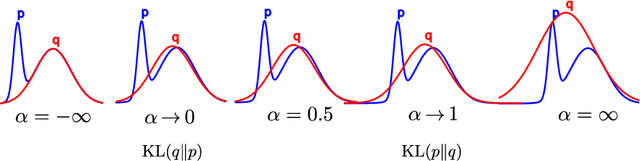

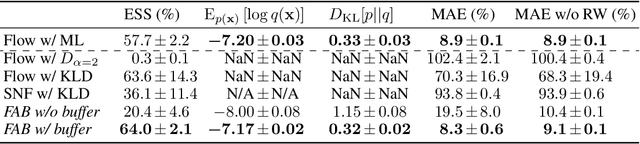

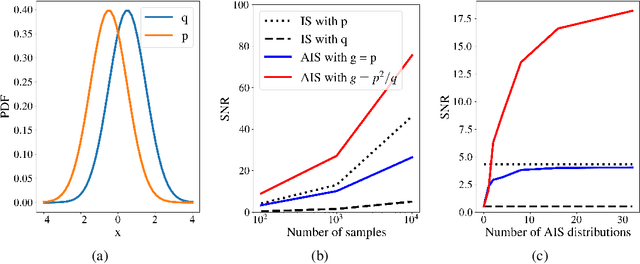

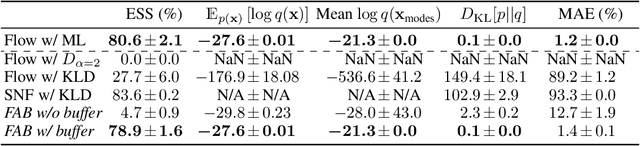

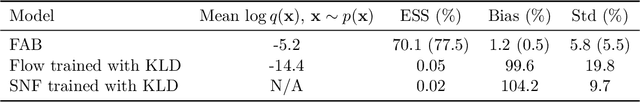

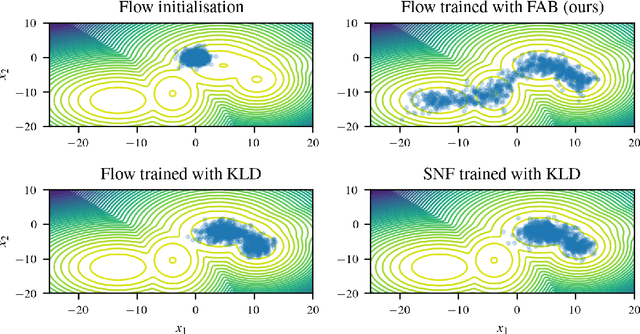

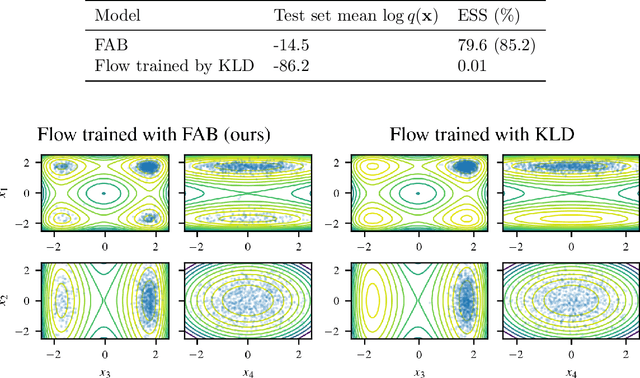

Abstract: Normalizing flows are tractable density models that can approximate complicated target distributions, e.g. Boltzmann distributions of physical systems. However, current methods for training flows either suffer from mode-seeking behavior, use samples from the target generated beforehand by expensive MCMC simulations, or use stochastic losses that have very high variance. To avoid these problems, we augment flows with annealed importance sampling (AIS) and minimize the mass-covering $\alpha$-divergence with $\alpha=2$, which minimizes importance weight variance. Our method, Flow AIS Bootstrap (FAB), uses AIS to generate samples in regions where the flow is a poor approximation of the target, facilitating the discovery of new modes. We target with AIS the minimum variance distribution for the estimation of the $\alpha$-divergence via importance sampling. We also use a prioritized buffer to store and reuse AIS samples. These two features significantly improve FAB's performance. We apply FAB to complex multimodal targets and show that we can approximate them very accurately where previous methods fail. To the best of our knowledge, we are the first to learn the Boltzmann distribution of the alanine dipeptide molecule using only the unnormalized target density and without access to samples generated via Molecular Dynamics (MD) simulations: FAB produces better results than training via maximum likelihood on MD samples while using 100 times fewer target evaluations. After reweighting samples with importance weights, we obtain unbiased histograms of dihedral angles that are almost identical to the ground truth ones.
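
A short worked identity may help show why the $\alpha=2$ objective is tied to importance weight variance. This is only a sketch under stated assumptions: $p$ is the normalized target density, $q$ is the flow density, and $w(x) = p(x)/q(x)$ is the importance weight. This notation is introduced here for illustration and does not come from the abstract.

$$
\mathrm{Var}_{q}\left[w(x)\right]
= \mathbb{E}_{q}\left[w(x)^2\right] - \left(\mathbb{E}_{q}\left[w(x)\right]\right)^2
= \int \frac{p(x)^2}{q(x)}\,dx - 1
$$

The second term equals 1 because $\mathbb{E}_{q}[w(x)] = \int p(x)\,dx = 1$. Under common definitions of the $\alpha$-divergence, the case $\alpha=2$ reduces to $\int p(x)^2/q(x)\,dx$ up to a positive scale factor and an additive constant, so minimizing it over $q$ also minimizes the variance of the importance weights.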

Bootstrap Your Flow

Dec 06, 2021

Abstract: Normalising flows are flexible, parameterized distributions that can be used to approximate expectations from intractable distributions via importance sampling. However, current flow-based approaches are limited on challenging targets where they either suffer from mode-seeking behaviour or high variance in the training loss, or rely on samples from the target distribution, which may not be available. To address these challenges, we combine flows with annealed importance sampling (AIS), while using the $\alpha$-divergence as our objective, in a novel training procedure, FAB (Flow AIS Bootstrap). Thereby, the flow and AIS improve each other in a bootstrapping manner. We demonstrate that FAB can be used to produce accurate approximations to complex target distributions, including Boltzmann distributions, in problems where previous flow-based methods fail.

Deep Reinforcement Learning for Process Synthesis

Sep 23, 2020

Abstract: This paper demonstrates the application of reinforcement learning (RL) to process synthesis by presenting Distillation Gym, a set of RL environments in which an RL agent is tasked with designing a distillation train, given a user-defined multi-component feed stream. Distillation Gym interfaces with a process simulator (COCO and ChemSep) to simulate the environment. Two example distillation problems are discussed in this paper (a benzene, toluene, p-xylene separation problem and a hydrocarbon separation problem), in which a deep RL agent successfully learns within Distillation Gym to produce reasonable designs. Finally, this paper proposes the creation of Chemical Engineering Gym, an all-purpose reinforcement learning software toolkit for chemical engineering process synthesis.
