Lars Johannsmeier

Point Bridge: 3D Representations for Cross Domain Policy Learning

Jan 24, 2026

Abstract: Robot foundation models are beginning to deliver on the promise of generalist robotic agents, yet progress remains constrained by the scarcity of large-scale real-world manipulation datasets. Simulation and synthetic data generation offer a scalable alternative, but their usefulness is limited by the visual domain gap between simulation and reality. In this work, we present Point Bridge, a framework that leverages unified, domain-agnostic point-based representations to unlock synthetic datasets for zero-shot sim-to-real policy transfer, without explicit visual or object-level alignment. Point Bridge combines automated point-based representation extraction via Vision-Language Models (VLMs), transformer-based policy learning, and efficient inference-time pipelines to train capable real-world manipulation agents using only synthetic data. With additional co-training on small sets of real demonstrations, Point Bridge further improves performance, substantially outperforming prior vision-based sim-and-real co-training methods. It achieves up to 44% gains in zero-shot sim-to-real transfer and up to 66% with limited real data across both single-task and multitask settings. Videos of the robot are best viewed at: https://pointbridge3d.github.io/

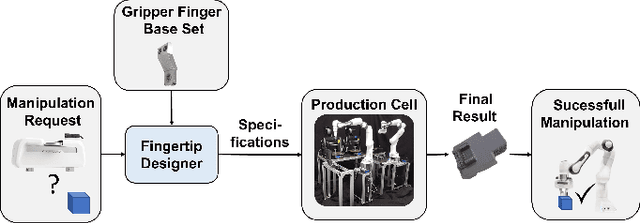

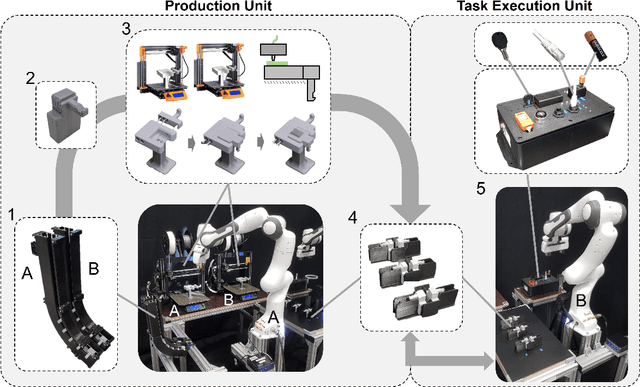

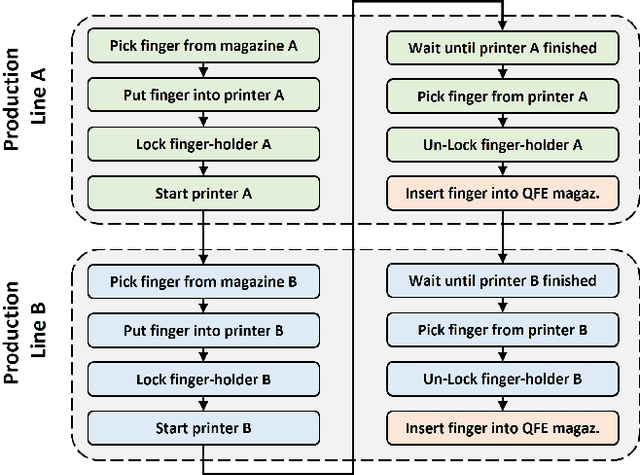

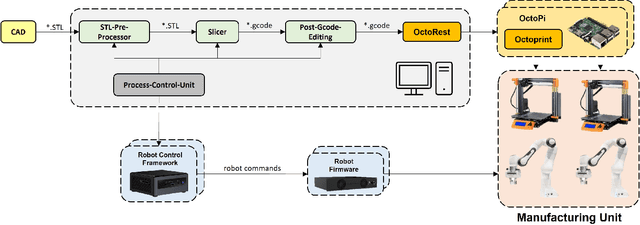

Towards Task-Specific Modular Gripper Fingers: Automatic Production of Fingertip Mechanics

Oct 18, 2022

Abstract: The number of sequential tasks a single gripper can perform is significantly limited by its design. In many cases, changing the gripper fingers is required to successfully conduct multiple consecutive tasks. For this reason, several robotic tool change systems have been introduced that allow automatic changing of the entire end-effector. However, many situations require only the modification or the change of the fingertip, making the exchange of the entire gripper uneconomical. In this paper, we introduce a paradigm for automatic task-specific fingertip production. The setup used in the proposed framework consists of a production and task execution unit, containing a robotic manipulator and two 3D printers that autonomously produce the gripper fingers. It also includes a second manipulator that uses a quick-exchange mechanism to pick up the printed fingertips and evaluate gripping performance. The setup is experimentally validated by automatically producing three different fingertips and using them to execute grasp-stability tests as well as multiple pick and insertion tasks, with and without position offsets. The proposed paradigm goes beyond fingertip production and serves as a foundation for a fully automatic fingertip design, production, and application pipeline, potentially improving manufacturing flexibility and representing a new production paradigm: tactile 3D manufacturing.

A Framework for Robot Manipulation: Skill Formalism, Meta Learning and Adaptive Control

May 22, 2018

Abstract: In this paper, we introduce a novel framework for expressing and learning force-sensitive robot manipulation skills. It is based on a formalism that extends our previous work on adaptive impedance control with meta parameter learning and compatible skill specifications. This way, the system is also able to make use of abstract expert knowledge by incorporating process descriptions and quality evaluation metrics. We evaluate various state-of-the-art schemes for the meta parameter learning and experimentally compare selected ones. Our results clearly indicate that the combination of our adaptive impedance controller with a carefully defined skill formalism significantly reduces the complexity of manipulation tasks, even for learning peg-in-hole with submillimeter industrial tolerances. Overall, the considered system is able to learn variations of this skill in under 20 minutes. In fact, in experiments the system was able to perform the learned tasks faster than humans, leading to the first learning-based solution of complex assembly at such real-world performance.
