Lakmal Meegahapola

M3BAT: Unsupervised Domain Adaptation for Multimodal Mobile Sensing with Multi-Branch Adversarial Training

Apr 26, 2024

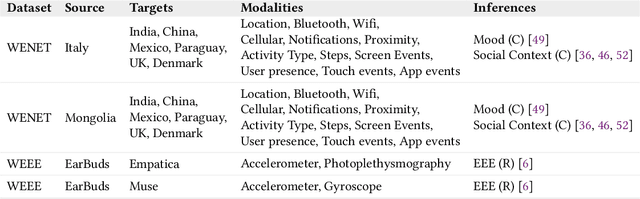

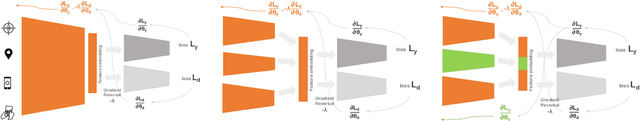

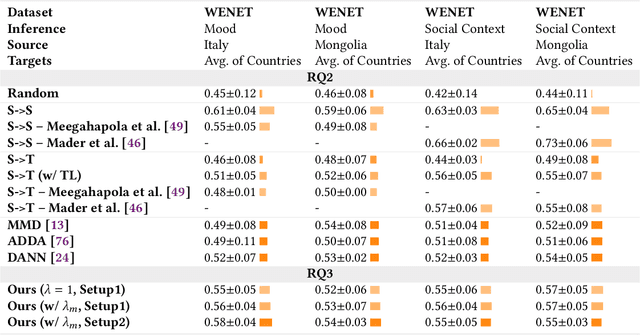

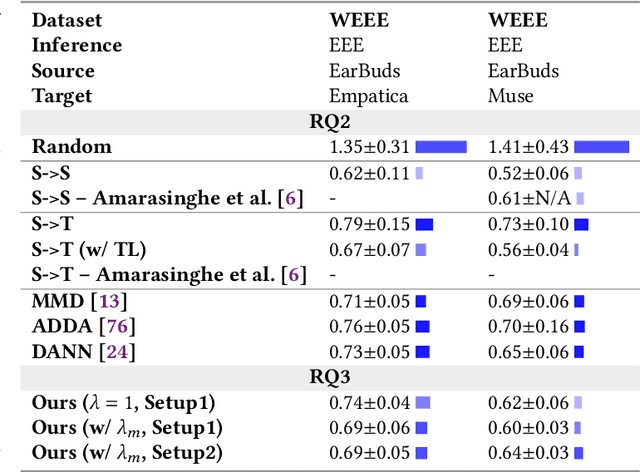

Abstract: Over the years, multimodal mobile sensing has been used extensively for inferences regarding health and well-being, behavior, and context. However, a significant challenge hindering the widespread deployment of such models in real-world scenarios is distribution shift: the phenomenon where the distribution of data in the training set differs from the distribution of data in the deployment environment (the real world). Distribution shift has been explored extensively in computer vision and natural language processing, and prior research in mobile sensing briefly addresses it. However, current work primarily focuses on models that handle a single data modality, such as audio or accelerometer readings, and consequently there is little research on unsupervised domain adaptation for multimodal sensor data. To address this gap, we conducted extensive experiments with domain adversarial neural networks (DANN), showing that they can effectively handle distribution shifts in multimodal sensor data. Moreover, we propose M3BAT (unsupervised domain adaptation for multimodal mobile sensing with multi-branch adversarial training), a novel improvement over DANN that uses multiple branches to account for the multimodality of sensor data during domain adaptation. Through extensive experiments on two multimodal mobile sensing datasets, three inference tasks, and 14 source-target domain pairs, covering both regression and classification, we demonstrate that our approach performs effectively on unseen domains. Compared to directly deploying a model trained in the source domain to the target domain, the model shows performance improvements of up to 12% AUC (area under the receiver operating characteristic curve) on classification tasks and up to 0.13 MAE (mean absolute error) on regression tasks.
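DANN's central mechanism is a gradient reversal layer placed between the feature extractor and a domain discriminator: it passes features through unchanged in the forward pass but flips and scales the discriminator's gradient in the backward pass, so the features are pushed toward domain invariance while the task head trains normally. The pure-Python sketch below illustrates that mechanism together with the λ schedule from the original DANN paper. The abstract does not describe how M3BAT wires its branches, so `branch_feature_grads` is a hypothetical illustration assuming one domain discriminator per modality branch; the modality names and gradient values in the usage lines are invented.

```python
import math


def grl_lambda(progress: float, gamma: float = 10.0) -> float:
    """Adaptation-factor schedule from the original DANN paper.

    `progress` runs from 0 (start of training) to 1 (end); the factor ramps
    from 0 toward 1 so the adversarial signal is weak early in training.
    """
    return 2.0 / (1.0 + math.exp(-gamma * progress)) - 1.0


class GradientReversal:
    """Identity in the forward pass; multiplies incoming gradients by -lam backward."""

    def __init__(self, lam: float) -> None:
        self.lam = lam

    def forward(self, features: list[float]) -> list[float]:
        return list(features)

    def backward(self, grad: list[float]) -> list[float]:
        return [-self.lam * g for g in grad]


def branch_feature_grads(
    task_grads: dict[str, list[float]],
    domain_grads: dict[str, list[float]],
    lam: float,
) -> dict[str, list[float]]:
    """HYPOTHETICAL multi-branch wiring (an assumption, not M3BAT's published design):
    each modality branch receives the task gradient plus the reversed gradient
    of its own domain discriminator."""
    grl = GradientReversal(lam)
    return {
        name: [t + r for t, r in zip(task_grads[name], grl.backward(domain_grads[name]))]
        for name in task_grads
    }


if __name__ == "__main__":
    lam = grl_lambda(progress=0.3)
    # Invented modality names and gradient values, for illustration only.
    grads = branch_feature_grads(
        task_grads={"accelerometer": [0.2, -0.1], "location": [0.05]},
        domain_grads={"accelerometer": [0.4, 0.4], "location": [-0.3]},
        lam=lam,
    )
    print(round(lam, 3), grads)
```

Because the reversal only changes the sign of the discriminator's gradient, the discriminator itself still learns to tell domains apart, while the features feeding it are trained to defeat it.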

Your Day in Your Pocket: Complex Activity Recognition from Smartphone Accelerometers

Jan 17, 2023

Abstract: Human Activity Recognition (HAR) enables context-aware user experiences where mobile apps can alter content and interactions depending on user activities. Smartphones have become valuable for HAR because they allow large-scale, diverse data collection. Although previous work in HAR managed to detect simple activities (e.g., sitting, walking, running) with good accuracy using inertial sensors (e.g., the accelerometer), recognizing complex daily activities remains an open problem, especially in remote work and study settings where people are more sedentary. Moreover, understanding a person's everyday activities can inform the creation of applications that aim to support their well-being. This paper investigates the recognition of complex activities using only smartphone accelerometer data. We used a large smartphone sensing dataset collected from over 600 users in five countries during the pandemic and showed that deep learning-based binary classification of eight complex activities (sleeping, eating, watching videos, online communication, attending a lecture, sports, shopping, studying) can achieve AUROC scores of up to 0.76 with partially personalized models. These results are encouraging for assessing complex activities using only phone accelerometer data in the post-pandemic world.
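The results above are reported as AUROC. Assuming each activity is treated as its own binary problem (activity vs. not), the sketch below shows what that number measures by computing AUROC directly from its probabilistic definition: the chance that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as half. It is a plain-Python illustration, not the paper's evaluation code, and the scores and labels in the usage line are made up.

```python
from typing import Sequence


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUROC as P(score of random positive > score of random negative),
    counting ties as 0.5. Quadratic in the number of samples; fine for a sketch."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Made-up detector scores for one activity (1 = activity present).
    print(auroc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

On this scale 0.5 corresponds to chance-level ranking and 1.0 to a perfect ranking, which is the context for reading a score such as 0.76.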

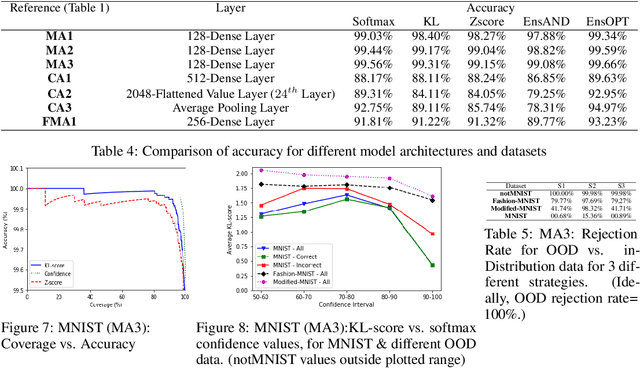

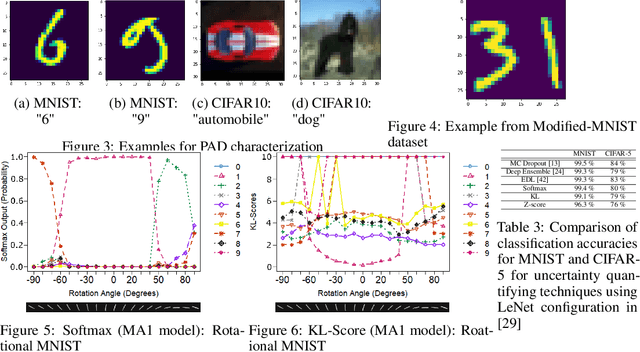

Prior Activation Distribution (PAD): A Versatile Representation to Utilize DNN Hidden Units

Jul 05, 2019

Abstract: In this paper, we introduce the concept of Prior Activation Distribution (PAD) as a versatile and general technique to capture the typical activation patterns of hidden-layer units of a Deep Neural Network used for classification tasks. We show that the combined neural activations of such a hidden layer have class-specific distributional properties, and we then define multiple statistical measures that quantify how far a test sample's activations deviate from these distributions. Using a variety of benchmark datasets (including MNIST, CIFAR10, Fashion-MNIST, and notMNIST), we show how such PAD-based measures can be used, independent of any training technique, to (a) derive fine-grained uncertainty estimates for inferences; (b) provide inference accuracy competitive with alternatives that require execution of the full pipeline; and (c) reliably isolate out-of-distribution test samples.
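The PAD idea can be illustrated with a deliberately simple stand-in: summarize each hidden unit's activations per class by a mean and standard deviation computed on training data, then score a test sample by how far its activations sit from the statistics of its predicted class. The mean absolute z-score used below is an assumption of this sketch; the paper defines its own set of statistical measures, which may differ. The function names, toy activations, and the out-of-distribution threshold in the usage lines are all hypothetical.

```python
import statistics
from collections import defaultdict


def fit_pad(activations, labels):
    """Per-class mean and standard deviation of each hidden unit's activation,
    estimated from training samples (a simple stand-in for a PAD)."""
    by_class = defaultdict(list)
    for act, y in zip(activations, labels):
        by_class[y].append(act)
    pad = {}
    for y, rows in by_class.items():
        units = list(zip(*rows))  # transpose: one tuple of values per hidden unit
        means = [statistics.fmean(u) for u in units]
        # Replace a zero spread with a tiny value so the z-score is always defined.
        stds = [statistics.pstdev(u) or 1e-8 for u in units]
        pad[y] = (means, stds)
    return pad


def pad_deviation(pad, activation, predicted_class):
    """Mean absolute z-score of a test activation vector against the statistics
    of the predicted class; larger values mean the sample looks less typical."""
    means, stds = pad[predicted_class]
    return statistics.fmean(abs(a - m) / s for a, m, s in zip(activation, means, stds))


if __name__ == "__main__":
    # Toy 2-unit "hidden layer" activations for two classes (invented data).
    train_acts = [[0.0, 0.0], [2.0, 2.0], [5.0, 4.0], [6.0, 6.0]]
    train_labels = [0, 0, 1, 1]
    pad = fit_pad(train_acts, train_labels)
    score = pad_deviation(pad, [3.0, 1.0], predicted_class=0)
    THRESHOLD = 2.0  # hypothetical cut-off for flagging out-of-distribution inputs
    print(score, score > THRESHOLD)
```

Since the statistics are collected from a network that is already trained, a measure of this kind can be computed without changing how the network was trained, which matches the abstract's point about independence from the training technique.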

Cognitive Analysis of 360 degree Surround Photos

Jan 17, 2019

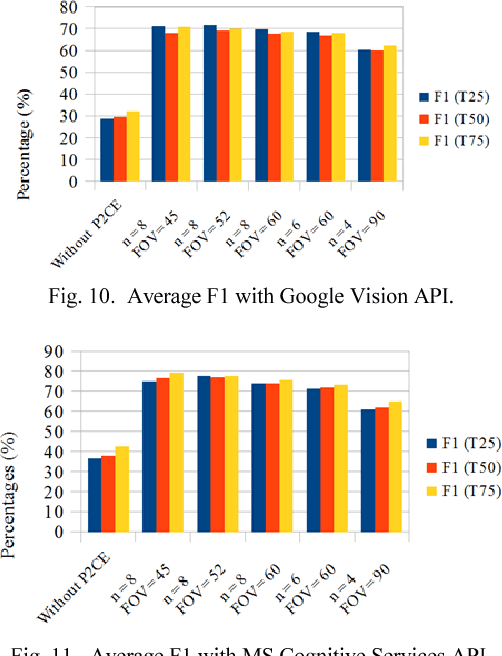

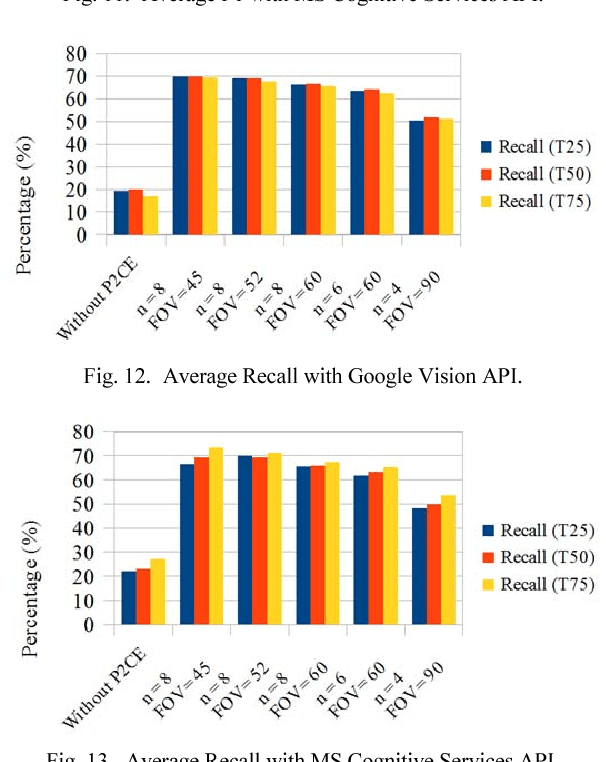

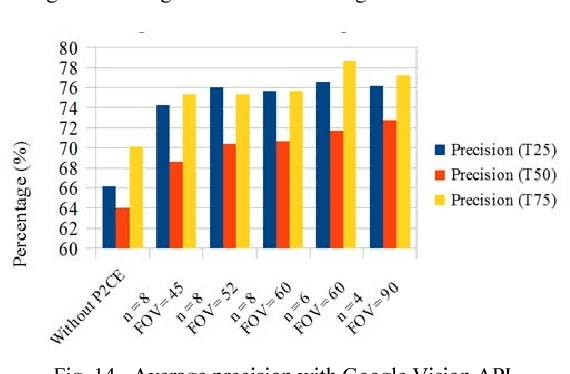

Abstract: 360-degree surround photography, or photospheres, has taken the world by storm as a new medium for content creation, offering viewers a richer, more immersive experience than conventional photography. With the emergence of virtual reality (VR) as a mainstream trend, 360-degree photography is increasingly important as a practical way for the general public to capture VR-ready content on their mobile phones without dedicated tools or expertise. Even though the amount of 360-degree surround content uploaded to the Internet continues to grow, there is no proper way to index it or to process it to extract further information. This is because objects embedded in photospheres appear distorted, which makes photospheres difficult to process with image-processing techniques; the challenge stems from the way 360-degree panoramic photospheres are stored. This paper presents a novel technique named Photosphere to Cognition Engine (P2CE), which enables cognitive analysis of 360-degree surround photos using existing image cognitive analysis algorithms and APIs designed for conventional photos. We optimized the system using a wide variety of indoor and outdoor samples and evaluated it with extensive evaluation approaches. On average, P2CE provides up to a 100% improvement in accuracy for image cognitive analysis of photospheres compared with direct use of conventional, non-photosphere-based image cognition systems.
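Photospheres are commonly stored as equirectangular images, in which longitude and latitude map linearly to pixel columns and rows; this storage is what stretches objects, especially near the top and bottom of the image. One common way to hand such an image to APIs built for conventional photos is to reproject it into ordinary perspective (rectilinear) views. The sketch below computes, for one pixel of such a virtual camera view, the corresponding position in the equirectangular image. The storage format, the camera conventions, and the function name are assumptions of this illustration, which is not necessarily how P2CE works.

```python
import math


def perspective_to_equirect(i, j, view_w, view_h, fov_deg, yaw_deg, pitch_deg, eq_w, eq_h):
    """Map pixel (i, j) of a virtual pinhole-camera view to (x, y) in an
    equirectangular photosphere of size eq_w x eq_h (hypothetical helper).

    Assumed conventions: camera x right, y up, z forward; positive yaw turns
    right, positive pitch tilts up; fov_deg is the horizontal field of view.
    """
    focal = (view_w / 2) / math.tan(math.radians(fov_deg) / 2)
    # Ray through the pixel centre in camera coordinates (image rows grow downward).
    dx = (i + 0.5) - view_w / 2
    dy = -((j + 0.5) - view_h / 2)
    dz = focal
    # Tilt up/down: rotate about the x-axis by the pitch angle.
    p = math.radians(pitch_deg)
    dy, dz = dy * math.cos(p) + dz * math.sin(p), -dy * math.sin(p) + dz * math.cos(p)
    # Turn left/right: rotate about the y-axis by the yaw angle.
    t = math.radians(yaw_deg)
    dx, dz = dx * math.cos(t) + dz * math.sin(t), -dx * math.sin(t) + dz * math.cos(t)
    # Ray direction -> longitude in (-pi, pi] and latitude in [-pi/2, pi/2].
    lon = math.atan2(dx, dz)
    lat = math.atan2(dy, math.hypot(dx, dz))
    # Equirectangular layout: longitude spans the width, latitude spans the height.
    x = (lon / (2 * math.pi) + 0.5) * eq_w
    y = (0.5 - lat / math.pi) * eq_h
    return x, y


if __name__ == "__main__":
    # Centre pixel of a 90-degree view looking straight ahead in a 2000x1000 photosphere.
    print(perspective_to_equirect(50, 50, 101, 101, 90, 0, 0, 2000, 1000))
```

To render a full view, one would loop over every (i, j), wrap x with `x % eq_w`, and sample the photosphere at (x, y) with nearest-neighbour or bilinear interpolation; rendering views at several yaw angles yields a set of far less distorted images that a conventional image-analysis API can process.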
