Kyung-Hwan Shim

Toward Compact Deep Neural Networks via Energy-Aware Pruning

Mar 19, 2021

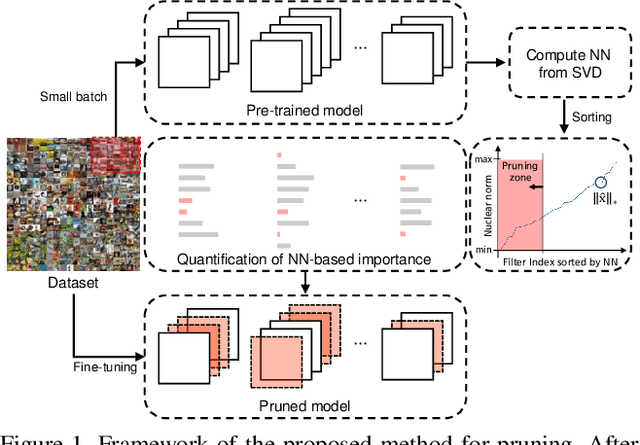

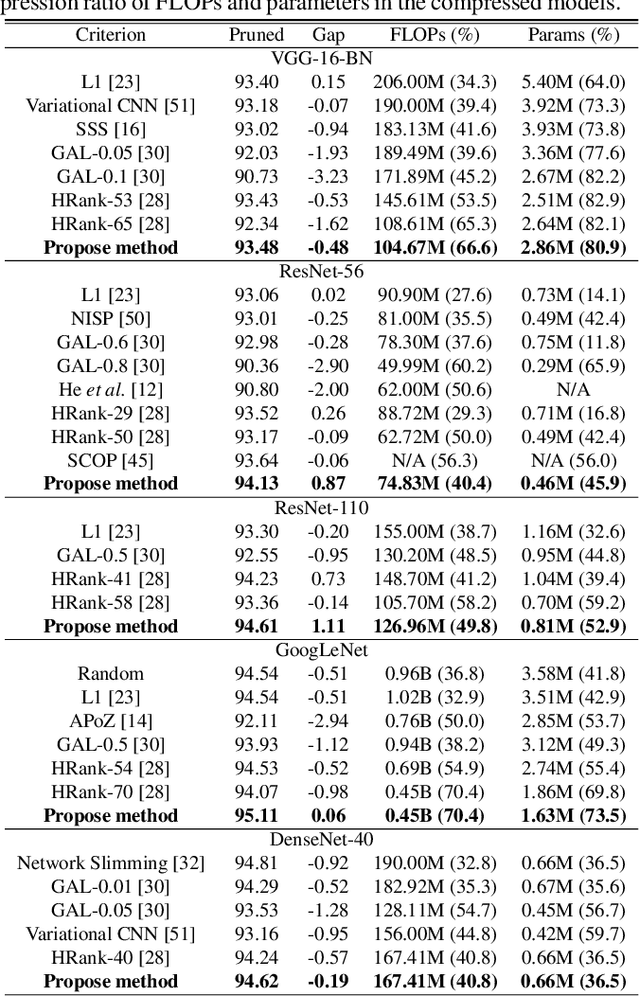

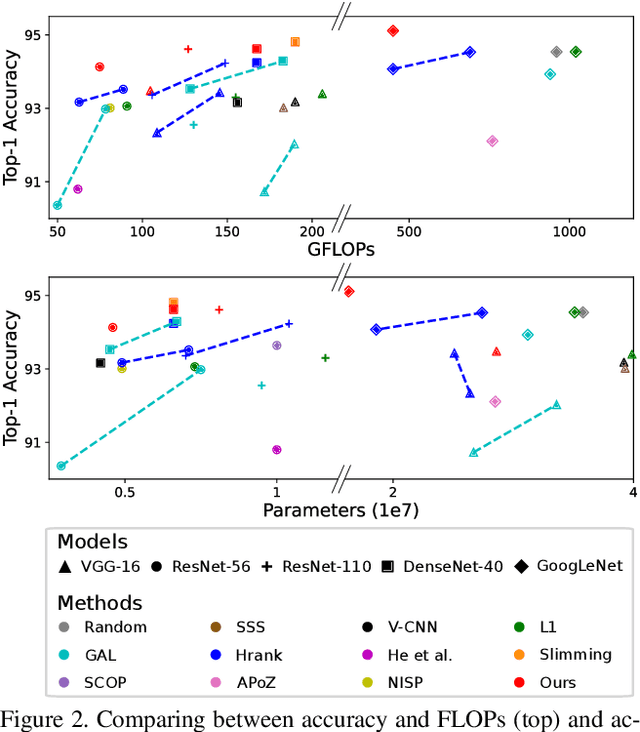

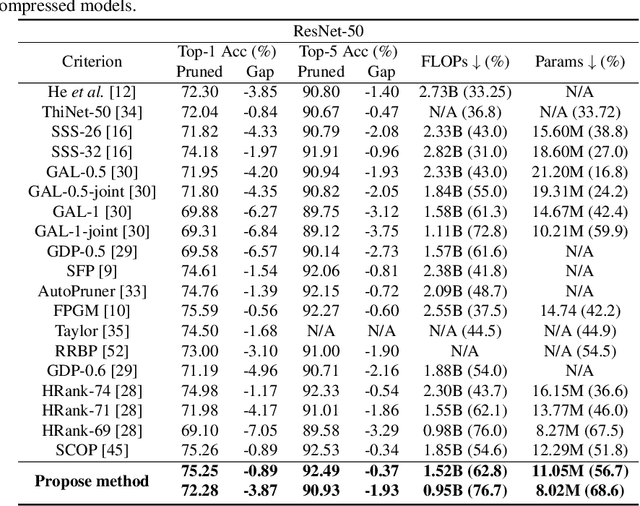

Abstract: Despite their remarkable performance, modern deep neural networks inevitably incur a significant computational cost for training and deployment, which may be incompatible with their use on edge devices. Recent efforts to reduce these overheads involve pruning and decomposing the parameters of various layers without performance deterioration. Inspired by several decomposition studies, we propose a novel energy-aware pruning method that quantifies the importance of each filter in the network using the nuclear norm (NN). The proposed energy-aware pruning leads to state-of-the-art performance in Top-1 accuracy, FLOPs, and parameter reduction across a wide range of scenarios with multiple network architectures on CIFAR-10 and ImageNet after fine-grained classification tasks. In a toy experiment, even without fine-tuning, we can visually observe that NN not only causes little change in decision boundaries across classes, but also clearly outperforms previous popular criteria. We achieve competitive results with 40.4/49.8% FLOPs reduction and 45.9/52.9% parameter reduction at 94.13/94.61% Top-1 accuracy with ResNet-56/110 on CIFAR-10, respectively. In addition, our observations are consistent across a variety of pruning settings in terms of data size as well as data quality, which underscores the stability of the acceleration and compression with negligible accuracy loss. Our code is available at https://github.com/nota-github/nota-pruning_rank.
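To make the nuclear-norm criterion concrete, here is a minimal NumPy sketch of scoring filters and choosing which to keep. The abstract does not say which matrix the norm is applied to, so this sketch assumes it is each filter's output feature map, averaged over a batch. The function names, the `prune_ratio` parameter, and the random data are hypothetical and not taken from the authors' repository.

```python
import numpy as np

def nuclear_norm_scores(feature_maps):
    """Score each channel by the mean nuclear norm of its feature maps.

    feature_maps: array of shape (batch, channels, height, width).
    Assumption: importance is measured on output feature maps.
    """
    # Batched SVD: singular values of every (sample, channel) H x W matrix.
    singular_values = np.linalg.svd(feature_maps, compute_uv=False)
    # Nuclear norm = sum of singular values; average it over the batch axis.
    return singular_values.sum(axis=-1).mean(axis=0)

def select_filters_to_keep(scores, prune_ratio):
    """Return sorted indices of filters kept after dropping the lowest scores."""
    n_prune = int(len(scores) * prune_ratio)
    order = np.argsort(scores)  # ascending: least important first
    return np.sort(order[n_prune:])

# Toy data: 8 samples, 6 filters, 5x5 feature maps (all values made up).
rng = np.random.default_rng(0)
maps = rng.normal(size=(8, 6, 5, 5))
maps[:, 2] *= 0.01  # filter 2 produces almost no signal
maps[:, 4] = 0.0    # filter 4 produces none at all

scores = nuclear_norm_scores(maps)
keep = select_filters_to_keep(scores, prune_ratio=0.5)
print("scores:", np.round(scores, 3))
print("kept filters:", keep)
```

In this toy setup the two low-energy filters receive the smallest scores and are pruned first. A real pipeline would collect feature maps from a trained network and fine-tune after pruning.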

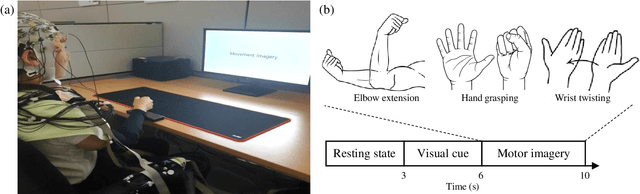

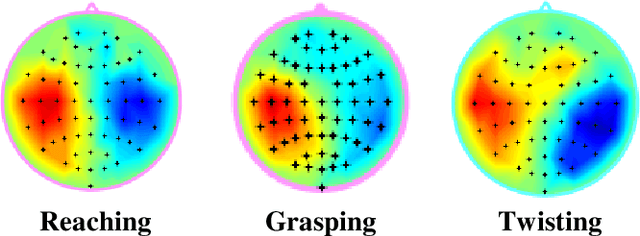

Gradual Relation Network: Decoding Intuitive Upper Extremity Movement Imaginations Based on Few-Shot EEG Learning

May 06, 2020

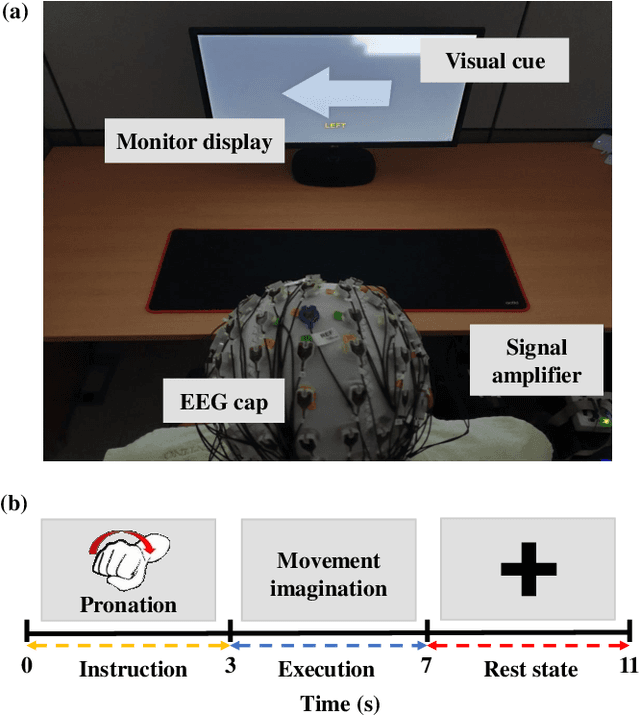

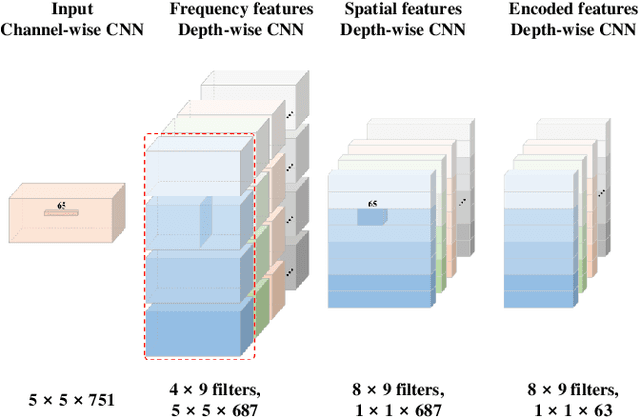

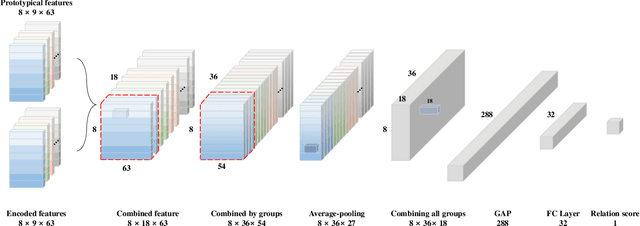

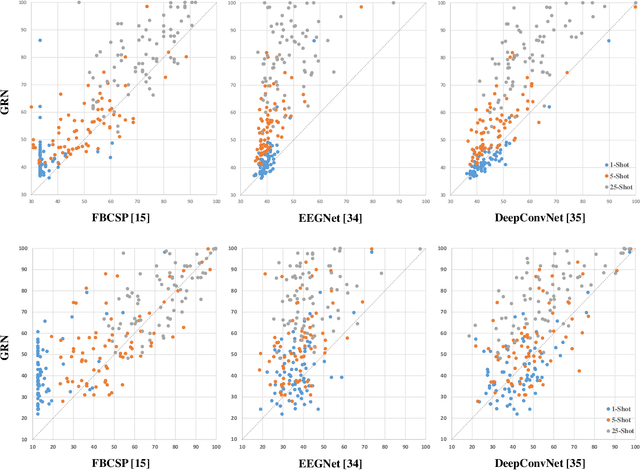

Abstract: A brain-computer interface (BCI) is a communication tool that connects users and external devices. In a real-time BCI environment, a calibration procedure is necessary for each user and each session. This procedure consumes a significant amount of time, which hinders the application of a BCI system in real-world scenarios. To avoid this problem, we adopt a metric-based few-shot learning approach for decoding intuitive upper-extremity movement imagination (MI) using a gradual relation network (GRN) that can gradually consider the combination of temporal and spectral groups. We acquired MI data of the upper arm, forearm, and hand associated with intuitive upper-extremity movement from 25 subjects. The grand-average multiclass classification results under offline analysis were 42.57%, 55.60%, and 80.85% in 1-, 5-, and 25-shot settings, respectively. In addition, we demonstrated the feasibility of intuitive MI decoding using the few-shot approach in real-time robotic arm control scenarios. Five participants achieved a success rate of 78% in the drinking task. Hence, we demonstrated not only the feasibility of online robotic arm control with shortened calibration time by focusing on human body parts, but also the accommodation of various untrained intuitive MI decoding based on the proposed GRN.
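The 1-, 5-, and 25-shot settings refer to how many labeled trials per class are available as a support set. The sketch below shows one plausible way to draw such an episode from a labeled trial pool. It is an illustration only: the abstract does not describe the authors' sampling procedure, and `sample_episode`, `k_shot`, `n_query`, and the trial counts are hypothetical.

```python
import numpy as np

def sample_episode(labels, k_shot, n_query, rng):
    """Split trial indices into a support set (k_shot per class) and a query set.

    labels: 1-D integer array with one class label per trial.
    """
    support, query = [], []
    for cls in np.unique(labels):
        # Shuffle the trials of this class, then take disjoint slices.
        idx = rng.permutation(np.flatnonzero(labels == cls))
        support.extend(idx[:k_shot])
        query.extend(idx[k_shot:k_shot + n_query])
    return np.array(support), np.array(query)

# Three classes (e.g. upper arm, forearm, hand), 30 made-up trials each.
labels = np.repeat([0, 1, 2], 30)
rng = np.random.default_rng(0)
support_idx, query_idx = sample_episode(labels, k_shot=5, n_query=10, rng=rng)
print(len(support_idx), "support trials,", len(query_idx), "query trials")
```

A metric-based model would then compare each query trial against the support trials of every class and predict the class with the highest learned similarity.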

Classification of High-Dimensional Motor Imagery Tasks based on An End-to-end role assigned convolutional neural network

Feb 04, 2020

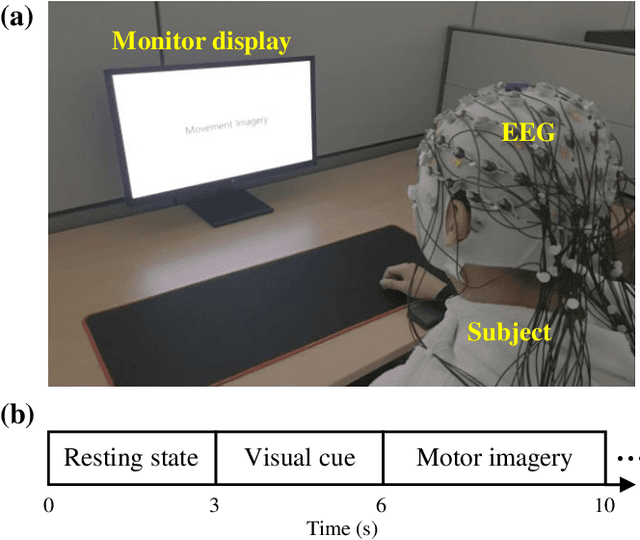

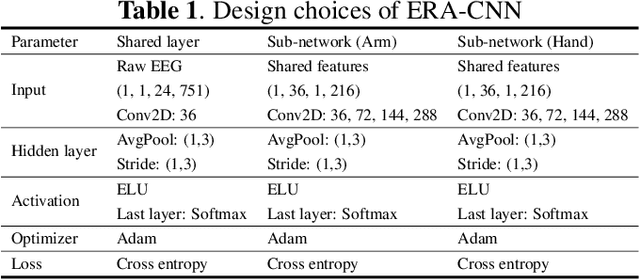

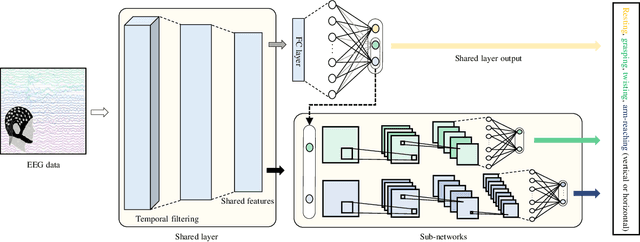

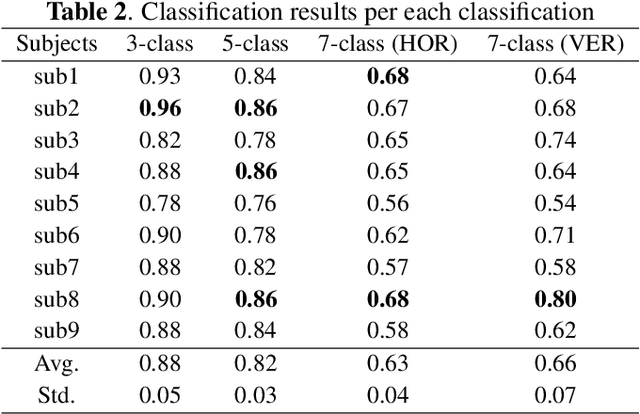

Abstract: A brain-computer interface (BCI) provides a direct communication pathway between a user and external devices. The electroencephalogram (EEG) motor imagery (MI) paradigm is widely used in non-invasive BCI to obtain encoded signals containing the user's intention of movement execution. However, EEG has intricate and non-stationary properties, resulting in insufficient decoding performance. By imagining numerous movements of a single arm, decoding performance can be improved without artificial command matching. In this study, we collected intuitive EEG data containing nine different types of single-arm movements from 9 subjects. We propose an end-to-end role assigned convolutional neural network (ERA-CNN), which considers discriminative features of each upper limb region by adopting the principle of a hierarchical CNN architecture. The proposed model outperforms previous methods on 3-class, 5-class, and two different types of 7-class classification tasks. Hence, we demonstrate the possibility of decoding user intention with robust performance using only EEG signals and an ERA-CNN.

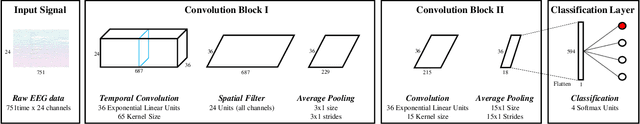

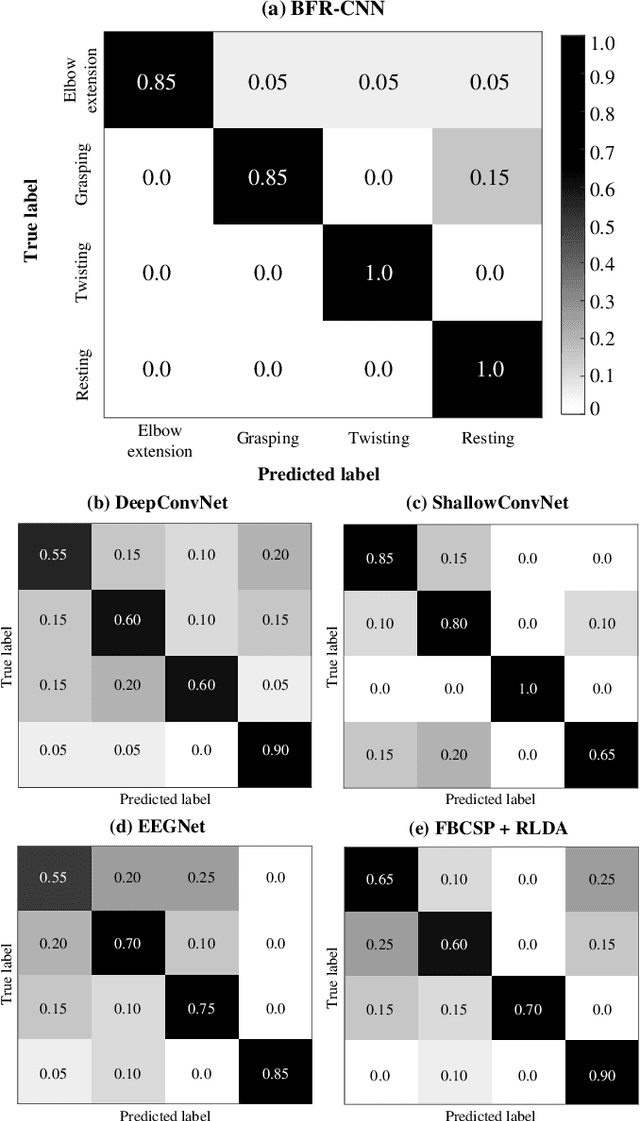

Motor Imagery Classification of Single-Arm Tasks Using Convolutional Neural Network based on Feature Refining

Feb 04, 2020

Abstract: A brain-computer interface (BCI) decodes brain signals to understand user intention and status. Because of its simple and safe data acquisition process, the electroencephalogram (EEG) is commonly used in non-invasive BCI. One of the EEG paradigms, motor imagery (MI), is commonly used for recovery or rehabilitation of motor functions due to its signal origin. However, EEG signals are oscillatory and non-stationary, which makes it difficult to collect and classify MI accurately. In this study, we propose a band-power feature refining convolutional neural network (BFR-CNN), which is composed of two convolution blocks, to achieve high classification accuracy. We collected EEG signals to create an MI dataset containing the movement imagination of a single arm. The proposed model outperforms conventional approaches in 4-class MI task classification. Hence, we demonstrate that decoding user intention is possible with robust performance using only EEG signals and the BFR-CNN.
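For readers unfamiliar with band-power features, the following sketch computes the average spectral power of a signal within a frequency band using a plain FFT periodogram. It only illustrates the general notion of a band-power feature. It is not the BFR-CNN pipeline, and the sampling rate, band edges, and synthetic signal are assumptions chosen for the example.

```python
import numpy as np

def band_power(signal, fs, band):
    """Mean periodogram power of `signal` between band[0] and band[1] Hz.

    signal: array whose last axis is time; fs: sampling rate in Hz.
    """
    n = signal.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    # Simple (unnormalized-window) periodogram estimate of power per bin.
    power = np.abs(np.fft.rfft(signal, axis=-1)) ** 2 / n
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return power[..., mask].mean(axis=-1)

# Synthetic 2-second, 10 Hz oscillation sampled at an assumed 100 Hz.
fs = 100
t = np.arange(2 * fs) / fs
eeg_like = np.sin(2 * np.pi * 10 * t)

mu_power = band_power(eeg_like, fs, (8, 13))     # assumed mu band
beta_power = band_power(eeg_like, fs, (13, 30))  # assumed beta band
print("mu:", mu_power, "beta:", beta_power)
```

Because the synthetic oscillation sits at 10 Hz, nearly all of its power falls in the 8 to 13 Hz band. Features like these, computed per channel and per band, are a common input representation for MI classifiers.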
