Dong-Joo Kim

RL-BioAug: Label-Efficient Reinforcement Learning for Self-Supervised EEG Representation Learning

Jan 21, 2026

Abstract:The quality of data augmentation serves as a critical determinant for the performance of contrastive learning in EEG tasks. Although this paradigm is promising for utilizing unlabeled data, static or random augmentation strategies often fail to preserve intrinsic information due to the non-stationarity of EEG signals where statistical properties change over time. To address this, we propose RL-BioAug, a framework that leverages a label-efficient reinforcement learning (RL) agent to autonomously determine optimal augmentation policies. While utilizing only a minimal fraction (10%) of labeled data to guide the agent's policy, our method enables the encoder to learn robust representations in a strictly self-supervised manner. Experimental results demonstrate that RL-BioAug significantly outperforms the random selection strategy, achieving substantial improvements of 9.69% and 8.80% in Macro-F1 score on the Sleep-EDFX and CHB-MIT datasets, respectively. Notably, this agent mainly chose optimal strategies for each task--for example, Time Masking with a 62% probability for sleep stage classification and Crop & Resize with a 77% probability for seizure detection. Our framework suggests its potential to replace conventional heuristic-based augmentations and establish a new autonomous paradigm for data augmentation. The source code is available at https://github.com/dlcjfgmlnasa/RL-BioAug.
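
The idea of a learned augmentation policy can be illustrated with a minimal sketch. The abstract does not state which RL algorithm RL-BioAug uses, so everything below is an assumption for illustration only: a softmax policy over two augmentations (Time Masking, Crop & Resize) updated with a REINFORCE-style rule. The function names, `mask_frac`, `crop_frac`, and the learning rate are hypothetical and are not taken from the paper or its repository.

```python
import numpy as np

def time_mask(x, mask_frac=0.2, rng=None):
    """Zero out one contiguous span of a 1-D signal (hypothetical helper)."""
    if rng is None:
        rng = np.random.default_rng()
    x = np.array(x, dtype=float)  # copy so the input is not modified
    span = max(1, int(mask_frac * x.size))
    start = rng.integers(0, x.size - span + 1)
    x[start:start + span] = 0.0
    return x

def crop_resize(x, crop_frac=0.5, rng=None):
    """Crop a random window and linearly interpolate it back to full length."""
    if rng is None:
        rng = np.random.default_rng()
    x = np.asarray(x, dtype=float)
    span = max(2, int(crop_frac * x.size))
    start = rng.integers(0, x.size - span + 1)
    crop = x[start:start + span]
    old_t = np.linspace(0.0, 1.0, span)
    new_t = np.linspace(0.0, 1.0, x.size)
    return np.interp(new_t, old_t, crop)

def softmax(logits):
    z = np.asarray(logits, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

def sample_augmentation(logits, rng):
    """Sample an augmentation index from the softmax policy."""
    probs = softmax(logits)
    return int(rng.choice(len(probs), p=probs)), probs

def reinforce_update(logits, action, reward, baseline=0.0, lr=0.1):
    """One REINFORCE step: grad of log pi(action) w.r.t. logits is onehot - probs."""
    probs = softmax(logits)
    grad = -probs
    grad[action] += 1.0
    return np.asarray(logits, dtype=float) + lr * (reward - baseline) * grad
```

In a setup like this, the reward would come from a small labeled subset (the abstract mentions 10% of labels guiding the agent), while the encoder itself is trained only with the contrastive objective on the augmented views.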

Diffusion-Based Electrocardiography Noise Quantification via Anomaly Detection

Jun 13, 2025

Abstract:Electrocardiography (ECG) signals are often degraded by noise, which complicates diagnosis in clinical and wearable settings. This study proposes a diffusion-based framework for ECG noise quantification via reconstruction-based anomaly detection, addressing annotation inconsistencies and the limited generalizability of conventional methods. We introduce a distributional evaluation using the Wasserstein-1 distance ($W_1$), comparing the reconstruction error distributions between clean and noisy ECGs to mitigate inconsistent annotations. Our final model achieved robust noise quantification using only three reverse diffusion steps. The model recorded a macro-average $W_1$ score of 1.308 across the benchmarks, outperforming the next-best method by over 48%. External validations demonstrated strong generalizability, supporting the exclusion of low-quality segments to enhance diagnostic accuracy and enable timely clinical responses to signal degradation. The proposed method enhances clinical decision-making, diagnostic accuracy, and real-time ECG monitoring capabilities, supporting future advancements in clinical and wearable ECG applications.
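
To make the distributional evaluation concrete, here is a minimal sketch of the Wasserstein-1 distance between two empirical one-dimensional distributions, such as per-segment reconstruction errors for clean and noisy ECGs. It uses the standard identity that $W_1$ in one dimension equals the integral of the absolute difference between the two empirical CDFs. The function name and the sample values are illustrative assumptions, and the paper's exact evaluation protocol (normalization, macro-averaging across benchmarks) is not reproduced here.

```python
import numpy as np

def wasserstein_1(a, b):
    """W1 distance between two 1-D empirical distributions.

    Integrates |CDF_a - CDF_b| over the pooled sample values.
    """
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    all_vals = np.sort(np.concatenate([a, b]))
    deltas = np.diff(all_vals)
    # Empirical CDFs evaluated at the left edge of each interval.
    cdf_a = np.searchsorted(a, all_vals[:-1], side="right") / a.size
    cdf_b = np.searchsorted(b, all_vals[:-1], side="right") / b.size
    return float(np.sum(np.abs(cdf_a - cdf_b) * deltas))

# Illustrative (made-up) reconstruction errors, not data from the paper.
clean_errors = [0.0, 1.0, 3.0]
noisy_errors = [5.0, 6.0, 8.0]
distance = wasserstein_1(clean_errors, noisy_errors)
```

A larger distance means the error distributions of clean and noisy segments are better separated, which is the property the abstract uses to compare methods without relying on per-segment annotations.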

Toward Foundational Model for Sleep Analysis Using a Multimodal Hybrid Self-Supervised Learning Framework

Feb 28, 2025

Abstract:Sleep is essential for maintaining human health and quality of life. Analyzing physiological signals during sleep is critical in assessing sleep quality and diagnosing sleep disorders. However, manual diagnoses by clinicians are time-intensive and subjective. Despite advances in deep learning that have enhanced automation, these approaches remain heavily dependent on large-scale labeled datasets. This study introduces SynthSleepNet, a multimodal hybrid self-supervised learning framework designed for analyzing polysomnography (PSG) data. SynthSleepNet effectively integrates masked prediction and contrastive learning to leverage complementary features across multiple modalities, including electroencephalogram (EEG), electrooculography (EOG), electromyography (EMG), and electrocardiogram (ECG). This approach enables the model to learn highly expressive representations of PSG data. Furthermore, a temporal context module based on Mamba was developed to efficiently capture contextual information across signals. SynthSleepNet achieved superior performance compared to state-of-the-art methods across three downstream tasks: sleep-stage classification, apnea detection, and hypopnea detection, with accuracies of 89.89%, 99.75%, and 89.60%, respectively. The model demonstrated robust performance in a semi-supervised learning environment with limited labels, achieving accuracies of 87.98%, 99.37%, and 77.52% in the same tasks. These results underscore the potential of the model as a foundational tool for the comprehensive analysis of PSG data. Because SynthSleepNet outperforms other methodologies across multiple downstream tasks, it is expected to set a new standard for sleep disorder monitoring and diagnostic systems.
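
As a rough illustration of what combining masked prediction with contrastive learning can look like, the sketch below sums an InfoNCE contrastive term and a masked reconstruction term. This is an assumption-laden toy, not SynthSleepNet's objective: the abstract does not give the loss formulation, and the function names, the temperature, and the mixing `weight` are all hypothetical.

```python
import numpy as np

def info_nce(z1, z2, temperature=0.1):
    """InfoNCE loss: row i of z1 should match row i of z2 among all rows of z2."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))

def masked_mse(x, x_hat, mask):
    """Mean squared error computed only on the masked positions."""
    mask = np.asarray(mask, dtype=bool)
    return float(np.mean((x[mask] - x_hat[mask]) ** 2))

def hybrid_loss(z1, z2, x, x_hat, mask, weight=0.5):
    """Weighted sum of the contrastive and masked-prediction terms (assumed form)."""
    return weight * info_nce(z1, z2) + (1.0 - weight) * masked_mse(x, x_hat, mask)
```

Here `z1` and `z2` would be embeddings of two views (or two modalities) of the same PSG epochs, and `x_hat` a reconstruction of the input `x` at the positions selected by `mask`.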

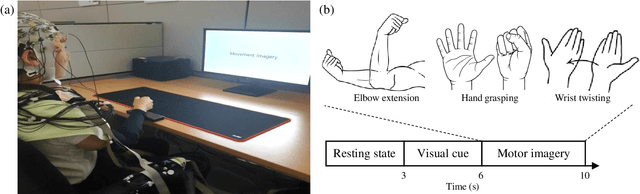

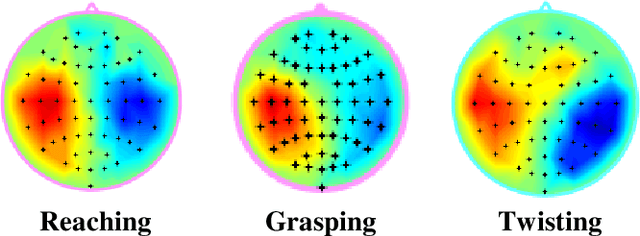

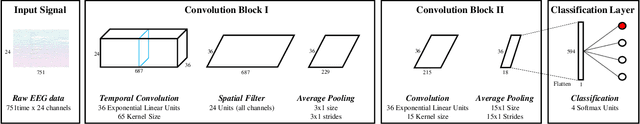

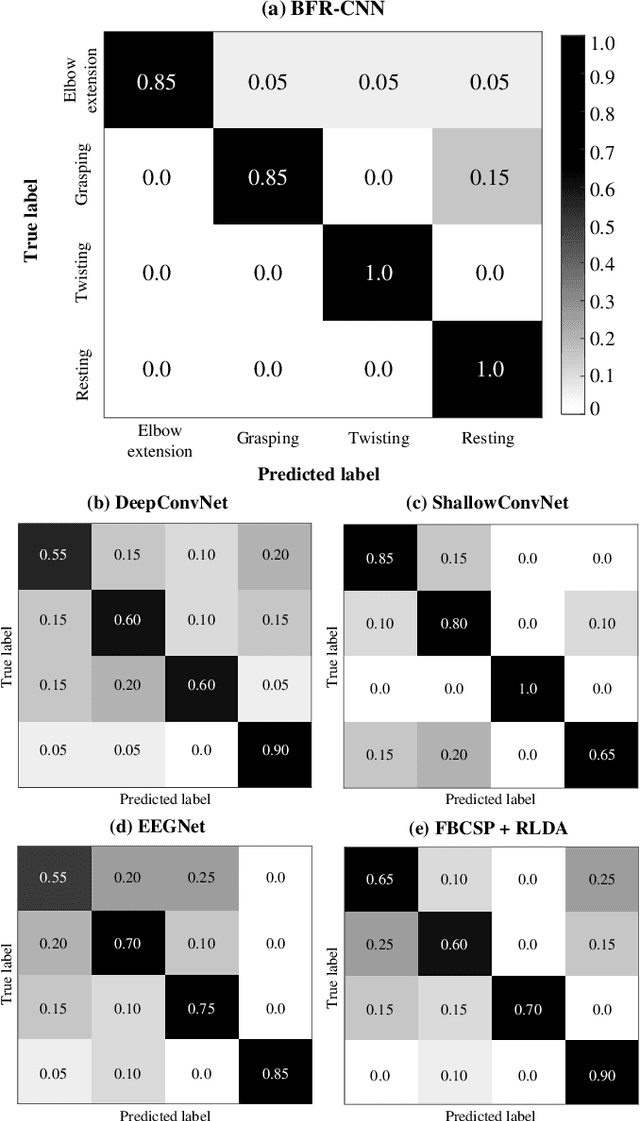

Motor Imagery Classification of Single-Arm Tasks Using Convolutional Neural Network based on Feature Refining

Feb 04, 2020

Abstract:A brain-computer interface (BCI) decodes brain signals to understand user intention and status. Because of its simple and safe data acquisition process, the electroencephalogram (EEG) is commonly used in non-invasive BCI. One EEG paradigm, motor imagery (MI), is commonly used for recovery or rehabilitation of motor functions due to its signal origin. However, EEG signals are oscillatory and non-stationary, which makes it difficult to collect and classify MI accurately. In this study, we proposed a band-power feature refining convolutional neural network (BFR-CNN), which is composed of two convolution blocks, to achieve high classification accuracy. We collected EEG signals to create an MI dataset containing the movement imagination of a single arm. The proposed model outperforms conventional approaches in 4-class MI task classification. Hence, we demonstrate that robust decoding of user intention is possible using only EEG signals with BFR-CNN.
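
For readers unfamiliar with band-power features, the following sketch computes the power of a signal within a frequency band from a simple periodogram. It is only meant to show what a band-power feature is; the abstract does not describe how BFR-CNN extracts or refines these features, and the sampling rate and band edges used in the example (8 to 13 Hz and 13 to 30 Hz) are assumptions chosen for illustration.

```python
import numpy as np

def band_power(x, fs, low, high):
    """Approximate power of x within [low, high) Hz using a periodogram."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    band = (freqs >= low) & (freqs < high)
    # Sum the spectral density over the band, scaled by the bin width.
    return float(spectrum[band].sum() * (freqs[1] - freqs[0]))

# Synthetic 10 Hz oscillation sampled at an assumed 250 Hz for 2 seconds.
fs = 250
t = np.arange(2 * fs) / fs
signal = np.sin(2 * np.pi * 10 * t)
mu_power = band_power(signal, fs, 8, 13)
beta_power = band_power(signal, fs, 13, 30)
```

For this synthetic signal, nearly all of the power falls in the 8 to 13 Hz band, so `mu_power` is much larger than `beta_power`.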
