Kwansik Park

Indoor Path Planning for Multiple Unmanned Aerial Vehicles via Curriculum Learning

Sep 05, 2022

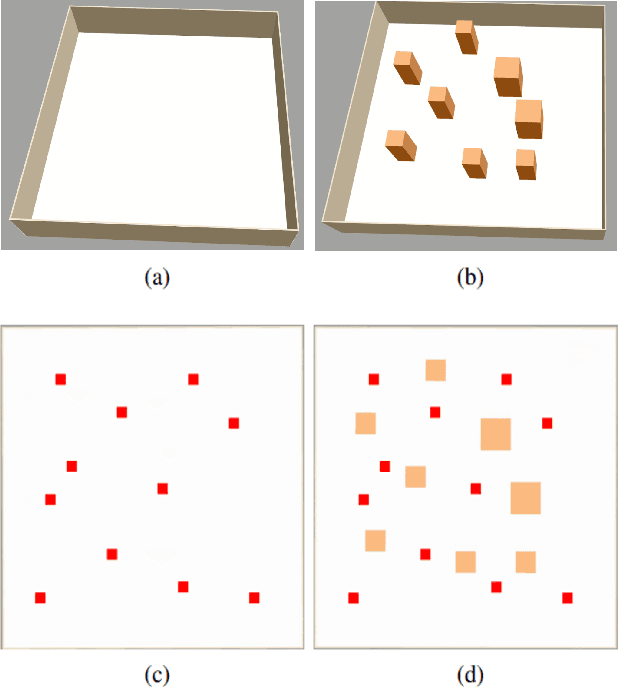

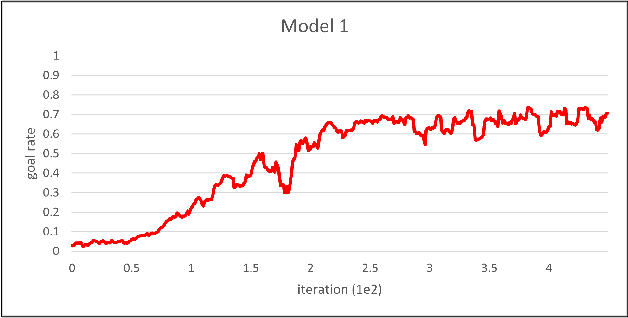

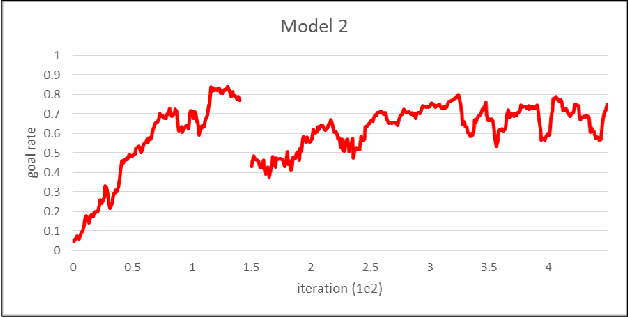

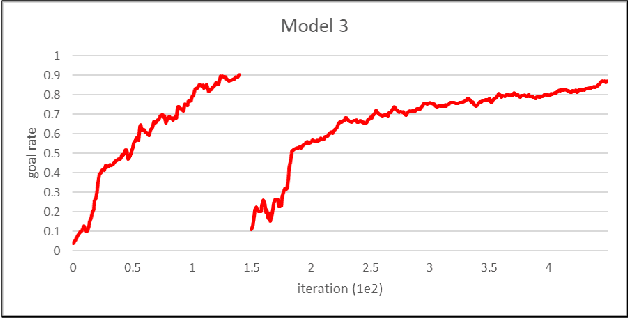

Abstract:Multi-agent reinforcement learning was performed in this study for indoor path planning of two unmanned aerial vehicles (UAVs). Each UAV performed the task of moving as fast as possible from a randomly paired initial position to a goal position in an environment with obstacles. To minimize training time and prevent the damage of UAVs, learning was performed by simulation. Considering the non-stationary characteristics of the multi-agent environment wherein the optimal behavior varies based on the actions of other agents, the action of the other UAV was also included in the state space of each UAV. Curriculum learning was performed in two stages to increase learning efficiency. A goal rate of 89.0% was obtained compared with other learning strategies that obtained goal rates of 73.6% and 79.9%.

GPS Multipath Detection Based on Carrier-to-Noise-Density Ratio Measurements from a Dual-Polarized Antenna

Aug 21, 2021

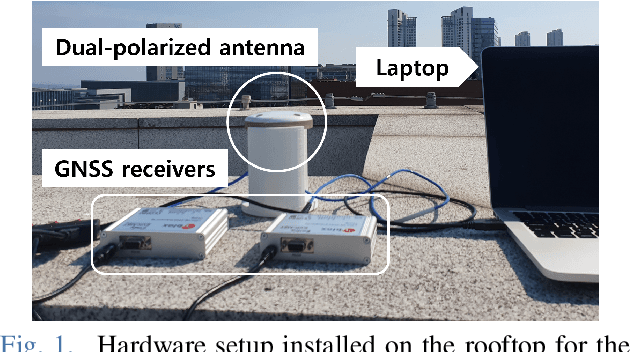

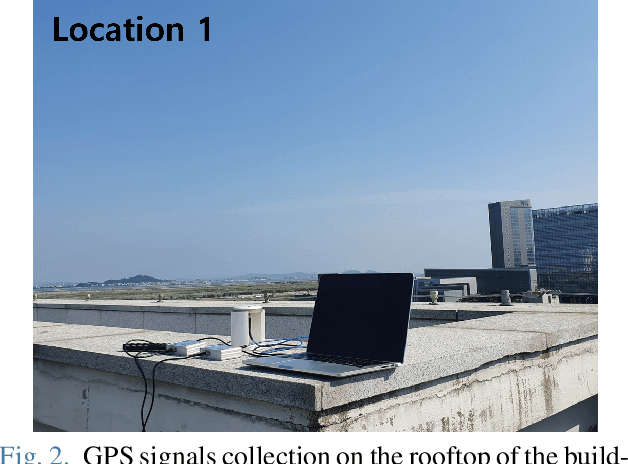

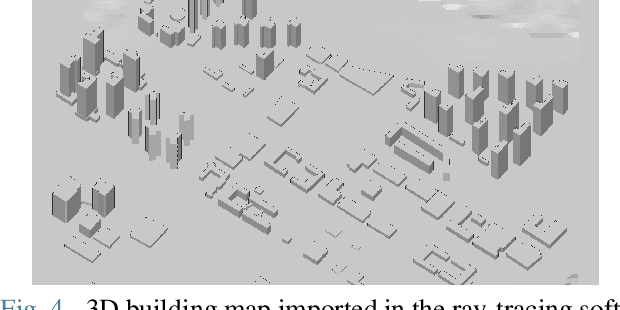

Abstract:In this study, the global positioning system (GPS) multipath detection was performed based on the carrier-to-noise-density ratio, C/N0, measured through a dual-polarized antenna. As the right hand circular polarization (RHCP) antenna is sensitive to the signals directly received from the GPS, and the left hand circular polarization (LHCP) antenna is sensitive to the singly reflected signals, the C/N0 difference between the RHCP and LHCP measurements is used for multipath detection. Once we collected the GPS signals in a low multipath location, we calculated the C/N0 difference to obtain a threshold value that can be used to detect the multipath GPS signal received from another location. The results were validated through a ray-tracing simulation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge