Kushal Bose

A New Framework for Convex Clustering in Kernel Spaces: Finite Sample Bounds, Consistency and Performance Insights

Nov 07, 2025

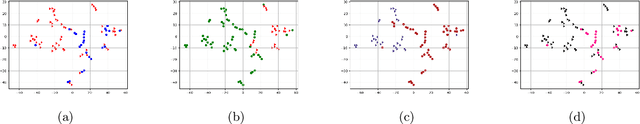

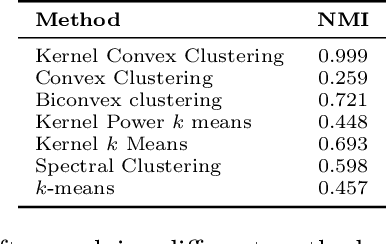

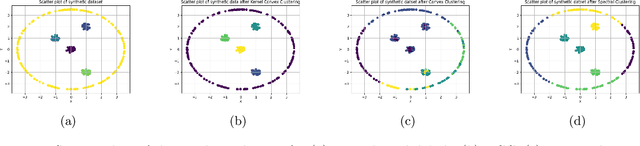

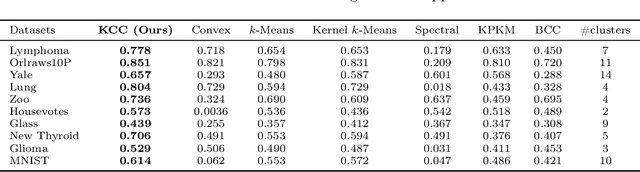

Abstract: Convex clustering is a well-regarded clustering method that resembles the centroid-based approach of Lloyd's $k$-means without requiring a predefined cluster count. It starts with each data point as its own centroid and iteratively merges them. Despite its advantages, this method can fail when dealing with data exhibiting linearly non-separable or non-convex structures. To mitigate these limitations, we propose a kernelized extension of the convex clustering method. This approach projects the data points into a Reproducing Kernel Hilbert Space (RKHS) using a feature map, enabling convex clustering in this transformed space. This kernelization not only allows for better handling of complex data distributions but also produces an embedding in a finite-dimensional vector space. We provide comprehensive theoretical underpinnings for our kernelized approach, proving algorithmic convergence and establishing finite sample bounds for our estimates. The effectiveness of our method is demonstrated through extensive experiments on both synthetic and real-world datasets, showing superior performance compared to state-of-the-art clustering techniques. This work marks a significant advancement in the field, offering an effective solution for clustering in non-linear and non-convex data scenarios.
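As a rough illustration of what working in an RKHS means in practice, the sketch below computes squared feature-space distances from kernel evaluations alone, using the identity $\|\phi(x)-\phi(y)\|^2 = k(x,x) + k(y,y) - 2k(x,y)$. The Gaussian kernel, the `gamma` parameter and all function names are assumptions chosen for the example; the abstract does not say which kernel or which optimization procedure the paper uses.

```python
import math


def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel k(x, y) = exp(-gamma * ||x - y||^2).

    The kernel choice and gamma are illustrative assumptions.
    """
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def rkhs_squared_distance(x, y, kernel=rbf_kernel):
    """Squared distance between phi(x) and phi(y), using kernel values only."""
    return kernel(x, x) + kernel(y, y) - 2.0 * kernel(x, y)


def pairwise_rkhs_distances(points, kernel=rbf_kernel):
    """Matrix of squared feature-space distances for a list of points."""
    n = len(points)
    return [
        [rkhs_squared_distance(points[i], points[j], kernel) for j in range(n)]
        for i in range(n)
    ]
```

A distance matrix of this kind is one plausible input to a clustering routine that never needs the feature map explicitly; how the paper's method actually uses the RKHS embedding is not specified in the abstract.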

Learning from Heterophilic Graphs: A Spectral Theory Perspective on the Impact of Self-Loops and Parallel Edges

Sep 16, 2025

Abstract: Graph heterophily poses a formidable challenge to the performance of Message-passing Graph Neural Networks (MP-GNNs). Familiar low-pass filters such as Graph Convolutional Networks (GCNs) face performance degradation, which can be attributed to the blending of messages from dissimilar neighboring nodes. The performance of low-pass filters on heterophilic graphs still requires an in-depth analysis. In this context, we update the heterophilic graphs by adding a number of self-loops and parallel edges. We observe that the eigenvalues of the graph Laplacian decrease as the number of self-loops increases and increase as the number of parallel edges increases. We conduct several studies regarding the performance of GCN on various benchmark heterophilic networks by adding either self-loops or parallel edges. The studies reveal that the GCN exhibits either increasing or decreasing performance trends on adding self-loops and parallel edges. In light of the studies, we establish connections between the graph spectra and the performance trends of the low-pass filters on the heterophilic graphs. The graph spectra characterize the essential intrinsic properties of the input graph, such as the presence of connected components, sparsity, average degree, and cluster structures. Our work is adept at seamlessly evaluating the graph spectrum and properties by observing the performance trends of the low-pass filters without pursuing the costly eigenvalue decomposition. The theoretical foundations are also discussed to validate the impact of adding self-loops and parallel edges on the graph spectrum.
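The spectral effect described above can be checked numerically on a toy graph. The sketch below is a minimal illustration under stated assumptions: it uses the symmetric normalized Laplacian, counts each self-loop once in the degree (as in the GCN-style $A + kI$), models parallel edges by multiplying edge weights, and estimates the largest eigenvalue by power iteration on a 6-node cycle. None of these choices, nor the function names, come from the abstract, which does not state which Laplacian the paper analyzes.

```python
import math


def normalized_laplacian(adj, self_loops=0, edge_copies=1):
    """I - D^{-1/2} (edge_copies * A + self_loops * I) D^{-1/2}.

    Assumes every node has positive degree after the modification.
    """
    n = len(adj)
    a = [
        [edge_copies * adj[i][j] + (self_loops if i == j else 0) for j in range(n)]
        for i in range(n)
    ]
    deg = [sum(row) for row in a]
    return [
        [(1.0 if i == j else 0.0) - a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
        for i in range(n)
    ]


def largest_eigenvalue(mat, iters=500):
    """Power iteration; valid here because the matrix is symmetric PSD."""
    n = len(mat)
    v = [1.0] + [0.0] * (n - 1)
    for _ in range(iters):
        w = [sum(mat[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    w = [sum(mat[i][j] * v[j] for j in range(n)) for i in range(n)]
    return sum(v[i] * w[i] for i in range(n))  # Rayleigh quotient


def cycle_graph(n):
    """Adjacency matrix of an n-node cycle."""
    return [[1 if (i - j) % n in (1, n - 1) else 0 for j in range(n)] for i in range(n)]


cycle = cycle_graph(6)
base = largest_eigenvalue(normalized_laplacian(cycle))
one_loop = largest_eigenvalue(normalized_laplacian(cycle, self_loops=1))
two_loops = largest_eigenvalue(normalized_laplacian(cycle, self_loops=2))
doubled_edges = largest_eigenvalue(normalized_laplacian(cycle, self_loops=1, edge_copies=2))
```

On this regular graph the eigenvalues have the closed form $(d-\mu)/(d + k/m)$ for degree $d$, adjacency eigenvalue $\mu$, $k$ self-loops and $m$ edge copies, so the largest eigenvalue shrinks as $k$ grows and grows back as $m$ increases, in line with the trend the abstract reports.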

Transformers Are Universally Consistent

May 30, 2025

Abstract: Despite their central role in the success of foundational models and large-scale language modeling, the theoretical foundations governing the operation of Transformers remain only partially understood. Contemporary research has largely focused on their representational capacity for language comprehension and their prowess in in-context learning, frequently under idealized assumptions such as linearized attention mechanisms. Although Transformers were initially conceived to model sequence-to-sequence transformations, a fundamental and unresolved question is whether they can robustly perform functional regression over sequences of input tokens. This question assumes heightened importance given the inherently non-Euclidean geometry underlying real-world data distributions. In this work, we establish that Transformers equipped with softmax-based nonlinear attention are uniformly consistent when tasked with executing Ordinary Least Squares (OLS) regression, provided both the inputs and outputs are embedded in hyperbolic space. We derive deterministic upper bounds on the empirical error which, in the asymptotic regime, decay at a provable rate of $\mathcal{O}(t^{-1/2d})$, where $t$ denotes the number of input tokens and $d$ the embedding dimensionality. Notably, our analysis subsumes the Euclidean setting as a special case, recovering analogous convergence guarantees parameterized by the intrinsic dimensionality of the data manifold. These theoretical insights are corroborated through empirical evaluations on real-world datasets involving both continuous and categorical response variables.

Topology-Driven Clustering: Enhancing Performance with Betti Number Filtration

May 07, 2025

Abstract: Clustering aims to form groups of similar data points in an unsupervised regime. Yet, clustering complex datasets containing critically intertwined shapes poses significant challenges. The prevailing clustering algorithms widely depend on evaluating similarity measures based on Euclidean metrics. Exploring topological characteristics to cluster complex datasets presents a better scope. Topological clustering algorithms predominantly perceive the point set through the lens of simplicial complexes and persistent homology. Despite these approaches, the existing topological clustering algorithms cannot fully exploit topological structures and show inconsistent performance on some highly complicated datasets. This work aims to mitigate these limitations by identifying topologically similar neighbors through the Vietoris-Rips complex and Betti number filtration. In addition, we introduce the concept of Betti sequences to flexibly capture essential features from the topological structures. Our proposed algorithm is adept at clustering complex, intertwined shapes contained in the datasets. We carried out experiments on several synthetic and real-world datasets. Our algorithm demonstrated commendable performance across the datasets compared to some of the well-known topology-based clustering algorithms.
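To make the Betti-number idea concrete, the sketch below tracks only the zeroth Betti number, that is, the number of connected components of the Vietoris-Rips complex as the scale grows. It is a simplified illustration, not the paper's algorithm: the convention that two points are joined when their distance is at most `epsilon`, the helper names, and the list-of-counts output are all assumptions, and the Betti sequences defined in the paper may involve higher-order Betti numbers.

```python
import math


def betti_zero(points, epsilon):
    """Number of connected components of the Rips 1-skeleton at scale epsilon.

    Two points are joined when their Euclidean distance is <= epsilon
    (an assumed convention; some texts use 2 * epsilon).
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(points[i], points[j]) <= epsilon:
                root_i, root_j = find(i), find(j)
                if root_i != root_j:
                    parent[root_i] = root_j
    return len({find(i) for i in range(n)})


def betti_zero_sequence(points, epsilons):
    """Component counts over an increasing list of scales."""
    return [betti_zero(points, eps) for eps in epsilons]


# Two well-separated pairs of points.
sample = [(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0)]
counts = betti_zero_sequence(sample, [0.5, 1.0, 5.0])
```

For the four sample points the count drops from four isolated points, to two pairs, to a single component as the scale increases, which is the kind of scale-dependent signature a filtration exposes.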

On the Universal Statistical Consistency of Expansive Hyperbolic Deep Convolutional Neural Networks

Nov 15, 2024

Abstract: Deep Convolutional Neural Networks (DCNNs) have emerged as a pervasive tool for a wide range of applications in computer vision. Despite their capability to capture intricate patterns inside the data, the underlying embedding space remains Euclidean and primarily pursues contractive convolution. Several instances can serve as a precedent for the exacerbating performance of DCNNs. The recent advancement of neural networks in hyperbolic spaces has gained traction, incentivizing the development of convolutional deep neural networks in the hyperbolic space. In this work, we propose a Hyperbolic DCNN based on the Poincaré Disc. The work predominantly revolves around analyzing the nature of expansive convolution in the context of the non-Euclidean domain. We further offer extensive theoretical insights pertaining to the universal consistency of the expansive convolution in the hyperbolic space. Several simulations were performed not only on synthetic datasets but also on some real-world datasets. The experimental results reveal that the hyperbolic convolutional architecture outperforms the Euclidean ones by a commendable margin.
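For readers unfamiliar with the Poincaré disc, the following sketch computes the standard geodesic distance between two points of the unit disc with curvature $-1$, $d(u,v) = \operatorname{arcosh}\!\left(1 + \frac{2\|u-v\|^2}{(1-\|u\|^2)(1-\|v\|^2)}\right)$. It only illustrates the geometry the architecture is built on; the function name and the fixed curvature are assumptions, and the abstract does not describe how the paper's convolution uses this metric.

```python
import math


def poincare_distance(u, v):
    """Geodesic distance in the Poincare disc model (curvature -1 assumed)."""
    sq_norm_u = sum(a * a for a in u)
    sq_norm_v = sum(b * b for b in v)
    if sq_norm_u >= 1.0 or sq_norm_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit disc")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_u) * (1.0 - sq_norm_v)))


# Distances blow up near the boundary: the same Euclidean gap of 0.1
# is much longer close to the rim than close to the origin.
near_origin = poincare_distance((0.0, 0.0), (0.1, 0.0))
near_boundary = poincare_distance((0.85, 0.0), (0.95, 0.0))
```

This stretching of distances toward the boundary is what gives hyperbolic embeddings their extra room compared with a Euclidean disc of the same radius.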

Fortifying Fully Convolutional Generative Adversarial Networks for Image Super-Resolution Using Divergence Measures

Apr 09, 2024

Abstract: Super-Resolution (SR) is a time-hallowed image processing problem that aims to improve the quality of a Low-Resolution (LR) sample up to the standard of its High-Resolution (HR) counterpart. We aim to address this by introducing Super-Resolution Generator (SuRGe), a fully-convolutional Generative Adversarial Network (GAN)-based architecture for SR. We show that distinct convolutional features obtained at increasing depths of a GAN generator can be optimally combined by a set of learnable convex weights to improve the quality of generated SR samples. In the process, we employ the Jensen-Shannon and the Gromov-Wasserstein losses respectively between the SR-HR and LR-SR pairs of distributions to further aid the generator of SuRGe to better exploit the available information in an attempt to improve SR. Moreover, we train the discriminator of SuRGe with the Wasserstein loss with gradient penalty, to primarily prevent mode collapse. The proposed SuRGe, as an end-to-end GAN workflow tailor-made for super-resolution, offers improved performance while maintaining low inference time. The efficacy of SuRGe is substantiated by its superior performance compared to 18 state-of-the-art contenders on 10 benchmark datasets.
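One common way to obtain learnable convex weights is to pass unconstrained parameters through a softmax, so the resulting weights are non-negative and sum to one. The sketch below shows that idea on plain Python lists. The softmax parameterization and the names `convex_weights` and `combine_features` are assumptions made for illustration; the abstract states only that the weights are convex and learnable, not how SuRGe parameterizes them.

```python
import math


def convex_weights(logits):
    """Map unconstrained parameters to non-negative weights summing to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def combine_features(features, logits):
    """Convex combination of equally sized feature vectors."""
    weights = convex_weights(logits)
    length = len(features[0])
    return [
        sum(w * feat[k] for w, feat in zip(weights, features))
        for k in range(length)
    ]


# Features from two hypothetical generator depths, equal logits.
shallow = [1.0, 2.0]
deep = [3.0, 4.0]
blended = combine_features([shallow, deep], logits=[0.0, 0.0])
```

With equal logits the combination reduces to a plain average; during training the logits would be updated by gradient descent so that more useful depths receive larger weights.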

HyPE-GT: where Graph Transformers meet Hyperbolic Positional Encodings

Dec 11, 2023

Abstract: Graph Transformers (GTs) facilitate the comprehension of graph-structured data by calculating the self-attention of node pairs without considering node position information. To address this limitation, we propose an innovative and efficient framework that introduces Positional Encodings (PEs) into the Transformer, generating a set of learnable positional encodings in the hyperbolic space, a non-Euclidean domain. This approach empowers us to explore diverse options for optimal selection of PEs for specific downstream tasks, leveraging hyperbolic neural networks or hyperbolic graph convolutional networks. Additionally, we repurpose these positional encodings to mitigate the impact of over-smoothing in deep Graph Neural Networks (GNNs). Comprehensive experiments on molecular benchmark datasets, co-author, and co-purchase networks substantiate the effectiveness of hyperbolic positional encodings in enhancing the performance of deep GNNs.
