Kuo Chen

multiPI-TransBTS: A Multi-Path Learning Framework for Brain Tumor Image Segmentation Based on Multi-Physical Information

Sep 18, 2024

Abstract: Brain Tumor Segmentation (BraTS) plays a critical role in clinical diagnosis, treatment planning, and monitoring the progression of brain tumors. However, due to the variability in tumor appearance, size, and intensity across different MRI modalities, automated segmentation remains a challenging task. In this study, we propose a novel Transformer-based framework, multiPI-TransBTS, which integrates multi-physical information to enhance segmentation accuracy. The model leverages spatial information, semantic information, and multi-modal imaging data, addressing the inherent heterogeneity in brain tumor characteristics. The multiPI-TransBTS framework consists of an encoder, an Adaptive Feature Fusion (AFF) module, and a multi-source, multi-scale feature decoder. The encoder incorporates a multi-branch architecture to separately extract modality-specific features from different MRI sequences. The AFF module fuses information from multiple sources using channel-wise and element-wise attention, ensuring effective feature recalibration. The decoder combines both common and task-specific features through a Task-Specific Feature Introduction (TSFI) strategy, producing accurate segmentation outputs for Whole Tumor (WT), Tumor Core (TC), and Enhancing Tumor (ET) regions. Comprehensive evaluations on the BraTS2019 and BraTS2020 datasets demonstrate the superiority of multiPI-TransBTS over the state-of-the-art methods. The model consistently achieves better Dice coefficients, Hausdorff distances, and Sensitivity scores, highlighting its effectiveness in addressing the BraTS challenges. Our results also indicate the need for further exploration of the balance between precision and recall in the ET segmentation task. The proposed framework represents a significant advancement in BraTS, with potential implications for improving clinical outcomes for brain tumor patients.
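
The abstract above says the AFF module fuses sources with channel-wise and element-wise attention but gives no implementation details. The following is only a rough sketch of what those two kinds of attention can look like, not the authors' module. The function names (`channel_attention`, `elementwise_fuse`, `adaptive_fuse`), the parameter-free sigmoid gates, and the nested-list feature layout `[channel][element]` are all assumptions made for illustration; a real module would use learned weights.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def channel_attention(feat):
    """Channel-wise recalibration (hypothetical, parameter-free):
    scale each channel by a sigmoid gate of its global average."""
    out = []
    for channel in feat:
        gate = sigmoid(sum(channel) / len(channel))
        out.append([gate * v for v in channel])
    return out

def elementwise_fuse(a, b):
    """Element-wise fusion (hypothetical): a per-element gate g
    blends the two sources as g * a + (1 - g) * b."""
    fused = []
    for ch_a, ch_b in zip(a, b):
        row = []
        for va, vb in zip(ch_a, ch_b):
            g = sigmoid(va + vb)
            row.append(g * va + (1.0 - g) * vb)
        fused.append(row)
    return fused

def adaptive_fuse(a, b):
    """Recalibrate each source per channel, then blend per element."""
    return elementwise_fuse(channel_attention(a), channel_attention(b))

# Two toy feature maps with 2 channels and 2 elements per channel.
source_a = [[1.0, 2.0], [0.0, 0.0]]
source_b = [[3.0, 4.0], [1.0, -1.0]]
fused = adaptive_fuse(source_a, source_b)
```

Because the element-wise gate lies strictly between 0 and 1, each fused value is a convex combination of the two recalibrated inputs, which is one simple way to let the data decide how much each source contributes at each position.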

CasCIFF: A Cross-Domain Information Fusion Framework Tailored for Cascade Prediction in Social Networks

Aug 09, 2023

Abstract: Existing approaches for information cascade prediction fall into three main categories: feature-driven methods, point process-based methods, and deep learning-based methods. Among them, deep learning-based methods, characterized by their superior learning and representation capabilities, mitigate the shortcomings inherent in the other methods. However, current deep learning methods still face several persistent challenges. In particular, accurate representation of user attributes remains problematic due to factors such as fake followers and complex network configurations. Previous algorithms that focus on the sequential order of user activations often neglect the rich insights offered by activation timing. Furthermore, these techniques often fail to holistically integrate temporal and structural aspects, thus missing the nuanced propagation trends inherent in information cascades. To address these issues, we propose the Cross-Domain Information Fusion Framework (CasCIFF), which is tailored for information cascade prediction. This framework exploits multi-hop neighborhood information to make user embeddings robust. When embedding cascades, the framework intentionally incorporates timestamps, endowing it with the ability to capture evolving patterns of information diffusion. In particular, CasCIFF seamlessly integrates the tasks of user classification and cascade prediction into a consolidated framework, thereby allowing the extraction of common features that prove useful for all tasks, a strategy anchored in the principles of multi-task learning.
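
The multi-task idea in the abstract above (one framework trained on both user classification and cascade prediction) is commonly realized as a weighted sum of per-task losses. The sketch below illustrates that general pattern only. The specific losses (mean squared log error for cascade size, binary cross-entropy for user labels), the `weight` parameter, and all function names are assumptions; the abstract does not state which losses or weighting CasCIFF uses.

```python
import math

def msle(pred_sizes, true_sizes):
    """Mean squared log error between predicted and true cascade sizes."""
    errors = [(math.log1p(p) - math.log1p(t)) ** 2
              for p, t in zip(pred_sizes, true_sizes)]
    return sum(errors) / len(errors)

def binary_cross_entropy(probs, labels, eps=1e-12):
    """Average binary cross-entropy; probabilities are clipped away from 0 and 1."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)

def joint_loss(pred_sizes, true_sizes, probs, labels, weight=0.5):
    """Hypothetical joint objective: cascade loss plus weighted user loss."""
    return msle(pred_sizes, true_sizes) + weight * binary_cross_entropy(probs, labels)

# Toy batch: two cascades and three users (all values made up).
loss = joint_loss(pred_sizes=[10.0, 40.0], true_sizes=[12.0, 35.0],
                  probs=[0.9, 0.2, 0.7], labels=[1, 0, 1])
```

Minimizing a single combined objective like this is what pushes a shared representation to serve both tasks; setting `weight` to 0 recovers plain single-task cascade prediction.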

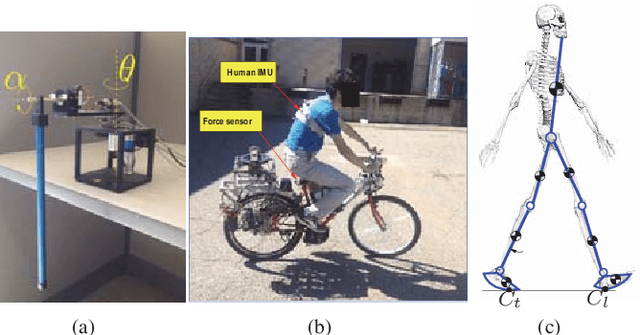

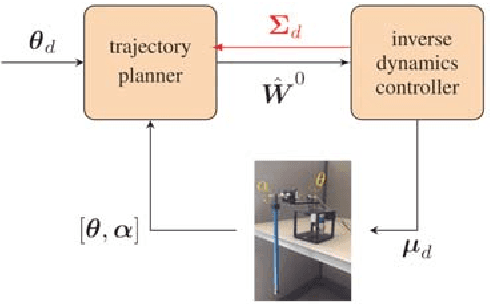

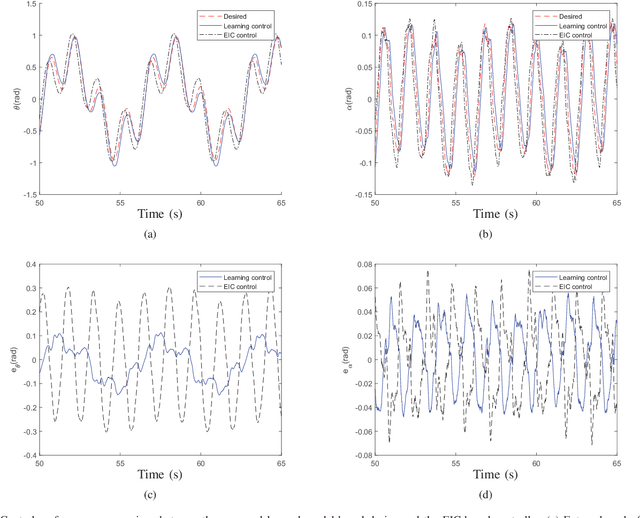

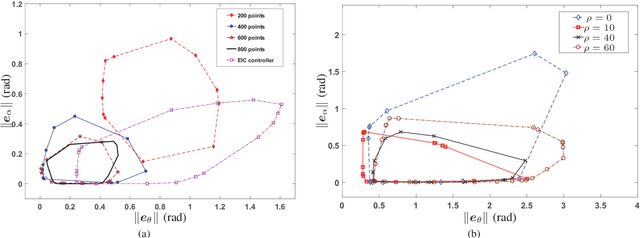

Gaussian Processes Model-based Control of Underactuated Balance Robots

Oct 29, 2020

Abstract: For underactuated balance robots, ranging from cart-pole systems and autonomous bicycles to bipedal robots, control aims to achieve both external (actuated) subsystem trajectory tracking and internal (unactuated) subsystem balancing with limited actuation authority. This paper proposes a learning model-based control framework for underactuated balance robots. The key idea for achieving the tracking and balancing tasks simultaneously is to design control strategies in slow and fast time scales, respectively. In the slow time scale, model predictive control (MPC) is used to generate the desired internal subsystem trajectory that encodes the external subsystem tracking performance and control input. In the fast time scale, the actual internal trajectory is stabilized to the desired internal trajectory by an inverse dynamics controller. The coupling effects between the external and internal subsystems are captured through the planned internal trajectory profile and the dual structural properties of the robotic systems. The control design is based on Gaussian process (GP) regression models that are learned from experiments without the need for prior knowledge of the robot dynamics or successful balance demonstrations. The GPs provide estimates of the modeling uncertainties of the robotic systems, and these uncertainty estimates are incorporated in the MPC design to enhance the control robustness to modeling errors. The learning-based control design is analyzed with guaranteed stability and performance. The proposed design is demonstrated by experiments on a Furuta pendulum and an autonomous bikebot.
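
The abstract above relies on GP regression supplying both a prediction and an uncertainty estimate. As a minimal sketch of that standard mechanism (not the paper's robot model or MPC design), the code below computes the GP posterior mean and variance at a query point for one-dimensional inputs. The squared-exponential kernel, its hyperparameters, the `noise` value, and the function names are all assumptions chosen for illustration.

```python
import math

def rbf(a, b, length=1.0, var=1.0):
    """Squared-exponential kernel between two scalar inputs."""
    return var * math.exp(-0.5 * ((a - b) / length) ** 2)

def cholesky(A):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y

def solve_upper_t(L, y):
    """Back substitution: solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(L[k][i] * x[k] for k in range(i + 1, n))
        x[i] = (y[i] - s) / L[i][i]
    return x

def gp_predict(xs, ys, x_star, noise=1e-4):
    """Posterior mean and variance of a zero-mean GP at x_star."""
    K = [[rbf(a, b) + (noise if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, ys))   # (K + noise*I)^{-1} y
    k_star = [rbf(a, x_star) for a in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)
    variance = rbf(x_star, x_star) - sum(t * t for t in v)
    return mean, max(variance, 0.0)

# Toy data: samples of sin(x) stand in for measured dynamics.
train_x = [-1.0, 0.0, 1.0]
train_y = [math.sin(x) for x in train_x]
mean_near, var_near = gp_predict(train_x, train_y, 1.0)
mean_far, var_far = gp_predict(train_x, train_y, 10.0)
```

Near the training data the variance is small, and far from it the variance returns to the prior value. A controller can use that signal to act more cautiously where the learned model is poorly supported by data.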
