Kuan-Lun Tseng

Local-Adaptive Face Recognition via Graph-based Meta-Clustering and Regularized Adaptation

Mar 27, 2022

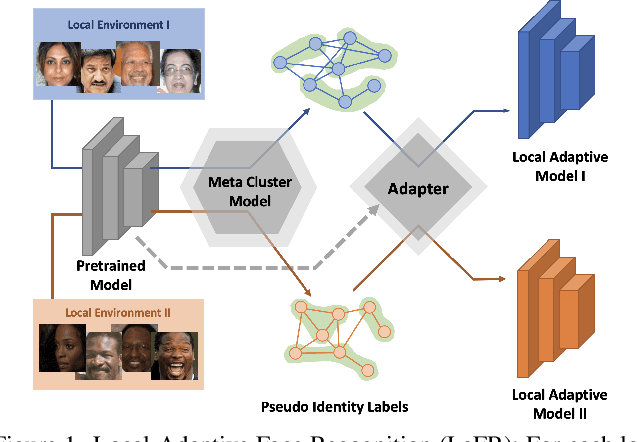

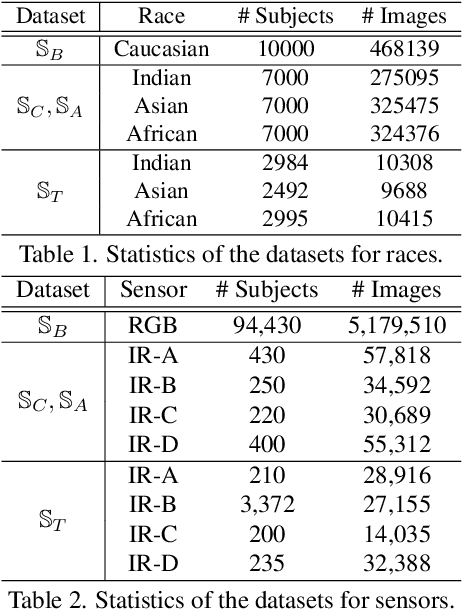

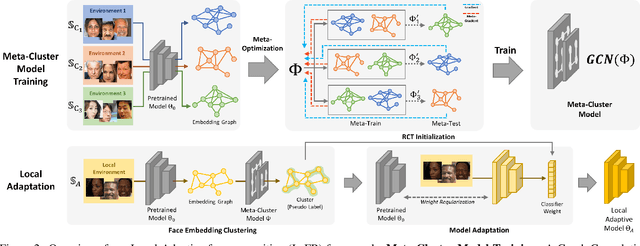

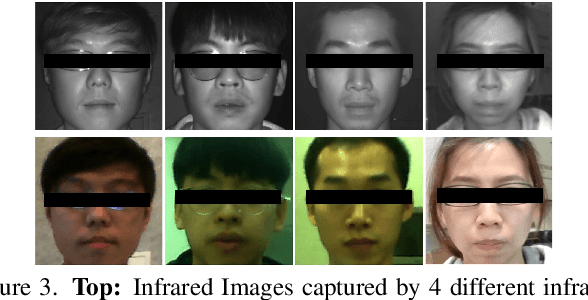

Abstract: Due to rising concerns about data privacy, it is reasonable to assume that local client data cannot be transferred to a centralized server and that the associated identity labels are not provided. To support continuous learning and close the last-mile quality gap, we introduce a new problem setup called Local-Adaptive Face Recognition (LaFR). Leveraging environment-specific local data after the initial global model is deployed, LaFR aims for optimal performance by training locally adapted models automatically and without supervision, as opposed to keeping the initial global model fixed. We achieve this with a newly proposed embedding clustering model based on a Graph Convolution Network (GCN), which is trained via a meta-optimization procedure. Compared with previous work, our meta-clustering model generalizes well to unseen local environments. Using the pseudo identity labels from the clustering results, we further introduce novel regularization techniques to improve model adaptation performance. Extensive experiments on racial and internal sensor adaptation demonstrate that our proposed solution is more effective for adapting face recognition models to each specific environment. Meanwhile, we show that LaFR can further improve the global model through a simple federated aggregation over the updated local models.
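The abstract does not specify how the federated aggregation is computed. As a minimal sketch only, the block below assumes a FedAvg-style average of the locally adapted parameters, weighted by each client's sample count. The function name `federated_average`, the dictionary-of-lists parameter layout, and the sample-count weighting are all illustrative assumptions, not the authors' implementation.

```python
from typing import Dict, List, Sequence


def federated_average(
    local_models: Sequence[Dict[str, List[float]]],
    num_samples: Sequence[int],
) -> Dict[str, List[float]]:
    """Average client parameters, weighting each client by its sample count.

    Hypothetical layout: each model maps a parameter name to a flat list
    of floats, and every client shares the same names and lengths.
    """
    if not local_models or len(local_models) != len(num_samples):
        raise ValueError("need one sample count per local model")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Dict[str, List[float]] = {}
    for name, reference in local_models[0].items():
        averaged[name] = [
            # Weighted sum over clients for the i-th entry of this parameter.
            sum(model[name][i] * n for model, n in zip(local_models, num_samples))
            / total
            for i in range(len(reference))
        ]
    return averaged


# Two clients; only the raw data stays local, the parameters are shared.
client_a = {"w": [1.0, 2.0]}
client_b = {"w": [3.0, 4.0]}
global_model = federated_average([client_a, client_b], num_samples=[1, 3])
```

Under this assumption, clients with more local data pull the global model further toward their adapted parameters; an unweighted mean would be an equally plausible reading of "simple federated aggregation".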

Organ At Risk Segmentation with Multiple Modality

Oct 17, 2019

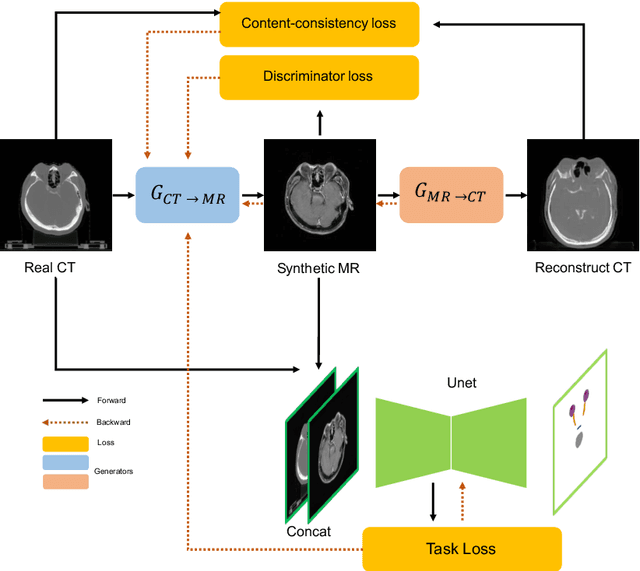

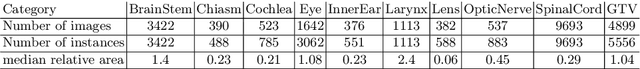

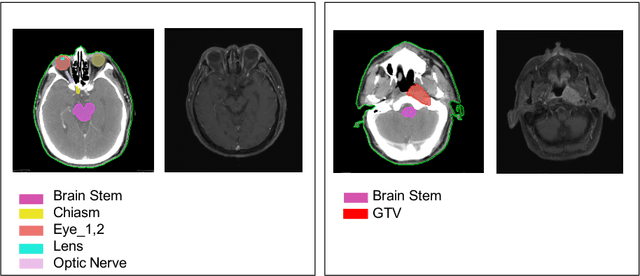

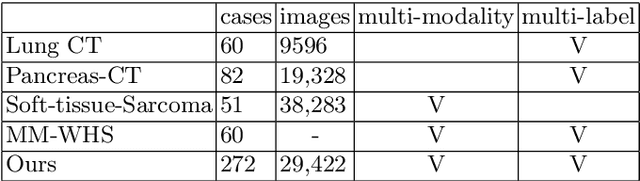

Abstract: With the development of image segmentation in computer vision, biomedical image segmentation has achieved remarkable progress on brain tumor segmentation and Organ At Risk (OAR) segmentation. However, most research uses only a single modality, such as Computed Tomography (CT) scans, while in real-world scenarios doctors often use multiple modalities to get more accurate results. To better leverage different modalities, we have collected a large dataset consisting of 136 cases with CT and MR images from patients diagnosed with nasopharyngeal cancer. In this paper, we propose to use a Generative Adversarial Network to perform CT-to-MR transformation, synthesizing MR images instead of aligning the two modalities. The synthesized MR images can be jointly trained with CT to achieve better performance. In addition, we use an instance segmentation model to extend the OAR segmentation task to segment both organs and tumor regions. The collected dataset will be made public soon.

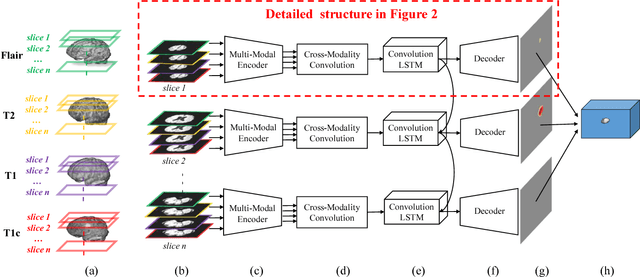

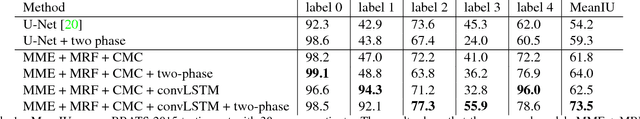

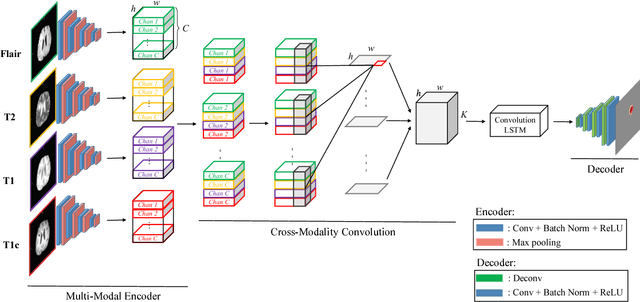

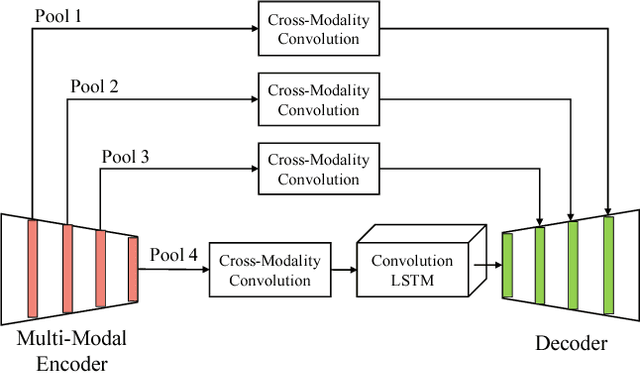

Joint Sequence Learning and Cross-Modality Convolution for 3D Biomedical Segmentation

Apr 25, 2017

Abstract: Deep learning models such as convolutional neural networks have been widely used in 3D biomedical segmentation and achieve state-of-the-art performance. However, most of them adopt a single modality or stack multiple modalities as different input channels. To better leverage multiple modalities, we propose a deep encoder-decoder structure with cross-modality convolution layers to incorporate different modalities of MRI data. In addition, we exploit convolutional LSTM to model a sequence of 2D slices, and jointly learn the multi-modalities and convolutional LSTM in an end-to-end manner. To avoid converging to certain labels, we adopt a re-weighting scheme and two-phase training to handle the label imbalance. Experimental results on BRATS-2015 show that our method outperforms state-of-the-art biomedical segmentation approaches.
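The abstract does not say which re-weighting scheme is used. As an illustration only, the sketch below assumes inverse-frequency class weights applied to a cross-entropy loss, which is one common way to keep a model from collapsing onto the dominant label. The function names and the weighting formula are assumptions, not the paper's method.

```python
import math
from collections import Counter
from typing import List, Sequence


def inverse_frequency_weights(labels: Sequence[int], num_classes: int) -> List[float]:
    """Assumed scheme: weight_c = N / (num_classes * count_c).

    Rare classes get larger weights; classes absent from `labels` get 0.
    """
    counts = Counter(labels)
    total = len(labels)
    return [
        total / (num_classes * counts[c]) if counts[c] else 0.0
        for c in range(num_classes)
    ]


def weighted_cross_entropy(
    probs: Sequence[Sequence[float]],
    labels: Sequence[int],
    weights: Sequence[float],
) -> float:
    """Weighted mean of -log p(true class), normalized by the total weight."""
    weighted_sum = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    normalizer = sum(weights[y] for y in labels)
    return weighted_sum / normalizer


# Toy voxel labels: class 0 (background) dominates class 1 (tumor).
labels = [0, 0, 0, 1]
weights = inverse_frequency_weights(labels, num_classes=2)
uniform_probs = [[0.5, 0.5]] * len(labels)
loss = weighted_cross_entropy(uniform_probs, labels, weights)
```

With these toy labels the single tumor voxel carries three times the weight of each background voxel, so both classes contribute equally to the loss.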
