Krzysztof Ciebiera

RoboMorph: Evolving Robot Morphology using Large Language Models

Jul 11, 2024

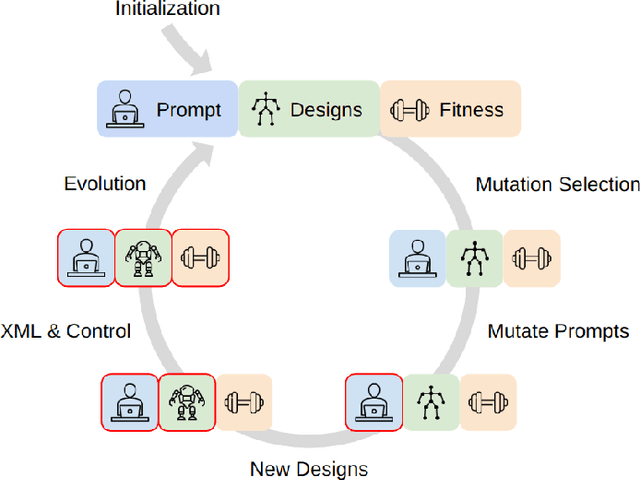

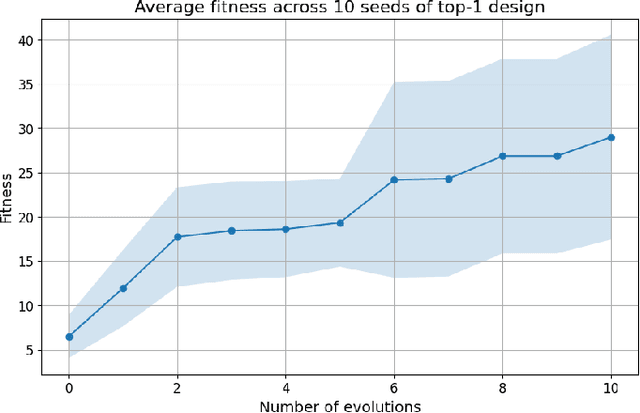

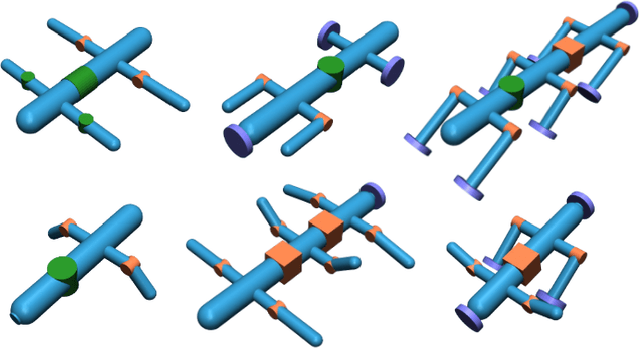

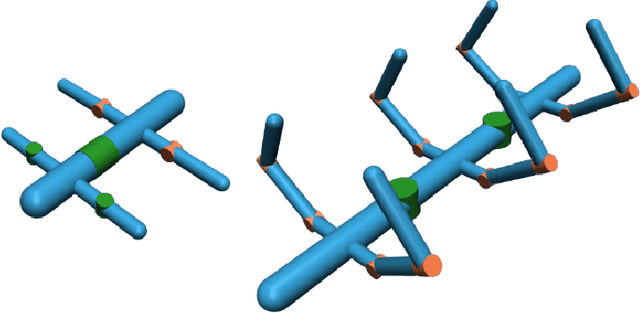

Abstract: We introduce RoboMorph, an automated approach for generating and optimizing modular robot designs using large language models (LLMs) and evolutionary algorithms. In this framework, we represent each robot design as a grammar and leverage the capabilities of LLMs to navigate the extensive robot design space, which is traditionally time-consuming and computationally demanding. By integrating automatic prompt design and a reinforcement learning based control algorithm, RoboMorph iteratively improves robot designs through feedback loops. Our experimental results demonstrate that RoboMorph can successfully generate nontrivial robots that are optimized for a single terrain while showcasing improvements in morphology over successive evolutions. Our approach demonstrates the potential of using LLMs for data-driven and modular robot design, providing a promising methodology that can be extended to other domains with similar design frameworks.

Grasping Student: semi-supervised learning for robotic manipulation

Mar 08, 2023

Abstract: Gathering real-world data from the robot quickly becomes a bottleneck when constructing a robot learning system for grasping. In this work, we design a semi-supervised grasping system that, on top of a small sample of robot experience, takes advantage of images of products to be picked, which are collected without any interaction with the robot. We validate our findings both in simulation and in the real world. In the regime of a small number of robot training samples, taking advantage of the unlabeled data allows us to match the performance that the baseline achieves with a 10-fold larger dataset. The code and datasets used in the paper will be released at https://github.com/nomagiclab/grasping-student.
