Kevin Qiu

Debate2Create: Robot Co-design via Large Language Model Debates

Oct 29, 2025

Abstract:Automating the co-design of a robot's morphology and control is a long-standing challenge due to the vast design space and the tight coupling between body and behavior. We introduce Debate2Create (D2C), a framework in which large language model (LLM) agents engage in a structured dialectical debate to jointly optimize a robot's design and its reward function. In each round, a design agent proposes targeted morphological modifications, and a control agent devises a reward function tailored to exploit the new design. A panel of pluralistic judges then evaluates the design-control pair in simulation and provides feedback that guides the next round of debate. Through iterative debates, the agents progressively refine their proposals, producing increasingly effective robot designs. Notably, D2C yields diverse and specialized morphologies despite no explicit diversity objective. On a quadruped locomotion benchmark, D2C discovers designs that travel 73% farther than the default, demonstrating that structured LLM-based debate can serve as a powerful mechanism for emergent robot co-design. Our results suggest that multi-agent debate, when coupled with physics-grounded feedback, is a promising new paradigm for automated robot design.
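
The round structure described in the abstract (design proposal, reward proposal, judging, feedback) can be sketched roughly as the loop below. This is only an illustrative skeleton, not the authors' implementation: `debate_loop`, the agent and judge callables, and the score-averaging rule are all hypothetical names and assumptions, and the LLM calls and the physics simulation are stood in for by plain Python functions.

```python
def debate_loop(design_agent, control_agent, judges, initial_design, rounds=3):
    """Hypothetical sketch of an iterative design/control debate.

    design_agent(design, feedback)  -> new design        (stand-in for an LLM agent)
    control_agent(design, feedback) -> reward function   (stand-in for an LLM agent)
    judge(design, reward_fn)        -> (score, comment)  (stand-in for simulation-based judging)
    """
    design, feedback = initial_design, None
    best = None  # (score, design, reward_fn) of the best pair seen so far
    for _ in range(rounds):
        # The design agent proposes a modified morphology.
        design = design_agent(design, feedback)
        # The control agent proposes a reward function for that morphology.
        reward_fn = control_agent(design, feedback)
        # Each judge scores the pair and returns a comment.
        verdicts = [judge(design, reward_fn) for judge in judges]
        # Assumption: judge scores are combined by a simple mean.
        score = sum(s for s, _ in verdicts) / len(verdicts)
        # The judges' comments become the feedback for the next round.
        feedback = [c for _, c in verdicts]
        if best is None or score > best[0]:
            best = (score, design, reward_fn)
    return best


if __name__ == "__main__":
    # Toy stand-ins: the "design" is a single leg length, and the judge
    # simply prefers longer legs.
    grow = lambda design, feedback: design + 0.1
    make_reward = lambda design, feedback: (lambda distance: distance)
    judge = lambda design, reward_fn: (design, "try longer legs")
    print(debate_loop(grow, make_reward, [judge], initial_design=1.0))
```

In a real system the judge would run the design-control pair in a simulator; here it is a toy function so that the control flow can be read in isolation.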

TAG-K: Tail-Averaged Greedy Kaczmarz for Computationally Efficient and Performant Online Inertial Parameter Estimation

Oct 06, 2025

Abstract:Accurate online inertial parameter estimation is essential for adaptive robotic control, enabling real-time adjustment to payload changes, environmental interactions, and system wear. Traditional methods such as Recursive Least Squares (RLS) and the Kalman Filter (KF) often struggle to track abrupt parameter shifts or incur high computational costs, limiting their effectiveness in dynamic environments and for computationally constrained robotic systems. As such, we introduce TAG-K, a lightweight extension of the Kaczmarz method that combines greedy randomized row selection for rapid convergence with tail averaging for robustness under noise and inconsistency. This design enables fast, stable parameter adaptation while retaining the low per-iteration complexity inherent to the Kaczmarz framework. We evaluate TAG-K in synthetic benchmarks and quadrotor tracking tasks against RLS, KF, and other Kaczmarz variants. TAG-K achieves 1.5x-1.9x faster solve times on laptop-class CPUs and 4.8x-20.7x faster solve times on embedded microcontrollers. More importantly, these speedups are paired with improved resilience to measurement noise and a 25% reduction in estimation error, leading to nearly 2x better end-to-end tracking performance.
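
To make the two ingredients named in the abstract concrete, here is a minimal sketch of a Kaczmarz solver for `A x = b` with a greedy row choice and tail averaging. It is not the TAG-K algorithm as published: the abstract does not give the selection rule, so this sketch assumes a deterministic rule (pick the row with the largest normalized residual) in place of the paper's greedy randomized selection, and the function name and the `tail_frac` parameter are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def greedy_kaczmarz_tail_avg(A, b, iters=200, tail_frac=0.5):
    """Kaczmarz iterations with max-residual row selection and tail averaging.

    Assumption: rows are chosen deterministically by largest normalized
    residual; the paper's exact (randomized) rule is not reproduced here.
    """
    n = len(A[0])
    x = [0.0] * n
    row_norm_sq = [dot(row, row) for row in A]
    tail_start = int(iters * (1.0 - tail_frac))  # first iterate included in the average
    tail_sum = [0.0] * n
    tail_count = 0
    for k in range(iters):
        residuals = [bi - dot(row, x) for row, bi in zip(A, b)]
        # Greedy choice: the row whose hyperplane is farthest from x.
        i = max(
            range(len(A)),
            key=lambda j: residuals[j] ** 2 / row_norm_sq[j] if row_norm_sq[j] > 0 else 0.0,
        )
        if row_norm_sq[i] > 0:
            # Standard Kaczmarz step: project x onto the hyperplane of row i.
            step = residuals[i] / row_norm_sq[i]
            x = [xj + step * aj for xj, aj in zip(x, A[i])]
        if k >= tail_start:
            # Tail averaging: accumulate only the later iterates.
            tail_sum = [s + xj for s, xj in zip(tail_sum, x)]
            tail_count += 1
    if tail_count == 0:
        return x  # nothing was averaged (e.g. tail_frac = 0)
    return [s / tail_count for s in tail_sum]


if __name__ == "__main__":
    # Small consistent system whose solution is approximately [1, -2].
    A = [[2.0, 0.0], [0.0, 3.0], [1.0, 1.0]]
    b = [2.0, -6.0, -1.0]
    print(greedy_kaczmarz_tail_avg(A, b))
```

Each iteration touches one row for the update, which is where the low per-iteration cost comes from; this sketch recomputes every residual to make the greedy choice, which a practical implementation would likely avoid.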

Ground Awareness in Deep Learning for Large Outdoor Point Cloud Segmentation

Jan 30, 2025

Abstract:This paper presents an analysis of utilizing elevation data to aid outdoor point cloud semantic segmentation through existing machine-learning networks in remote sensing, specifically in urban, built-up areas. In dense outdoor point clouds, the receptive field of a machine learning model may be too small to accurately determine the surroundings and context of a point. By computing Digital Terrain Models (DTMs) from the point clouds, we extract the relative elevation feature, which is the vertical distance from the terrain to a point. RandLA-Net is employed for efficient semantic segmentation of large-scale point clouds. We assess its performance across three diverse outdoor datasets captured with varying sensor technologies and sensor locations. Integration of relative elevation data leads to consistent performance improvements across all three datasets, most notably in the Hessigheim dataset, with an increase of 3.7 percentage points in average F1 score from 72.35% to 76.01%, by establishing long-range dependencies between ground and objects. We also explore additional local features such as planarity, normal vectors, and 2D features, but their efficacy varied based on the characteristics of the point cloud. Ultimately, this study underscores the important role of the non-local relative elevation feature for semantic segmentation of point clouds in remote sensing applications.
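
As a rough illustration of the relative elevation feature (the vertical distance from the terrain to a point), the sketch below approximates the terrain by the lowest point in each horizontal grid cell. This is a crude stand-in for a proper Digital Terrain Model and is not the procedure used in the paper; the function name and the `cell_size` parameter are hypothetical.

```python
import math


def relative_elevation(points, cell_size=1.0):
    """Return z minus an approximate ground height for each (x, y, z) point.

    Assumption: the ground height of a cell is the minimum z of the points
    falling in it, which is only a simple proxy for a real DTM.
    """
    def cell(x, y):
        return (math.floor(x / cell_size), math.floor(y / cell_size))

    # Pass 1: lowest z per grid cell serves as the terrain estimate.
    ground = {}
    for x, y, z in points:
        key = cell(x, y)
        if key not in ground or z < ground[key]:
            ground[key] = z

    # Pass 2: vertical distance from the estimated terrain to each point.
    return [z - ground[cell(x, y)] for x, y, z in points]


if __name__ == "__main__":
    cloud = [(0.2, 0.3, 10.0), (0.8, 0.1, 13.5), (5.5, 5.5, 2.0)]
    print(relative_elevation(cloud))  # [0.0, 3.5, 0.0]
```

The resulting value would be appended to each point as an extra input feature alongside its coordinates, so that a point's height above ground is available to the network even when the ground lies outside its receptive field.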

RoboMorph: Evolving Robot Morphology using Large Language Models

Jul 11, 2024

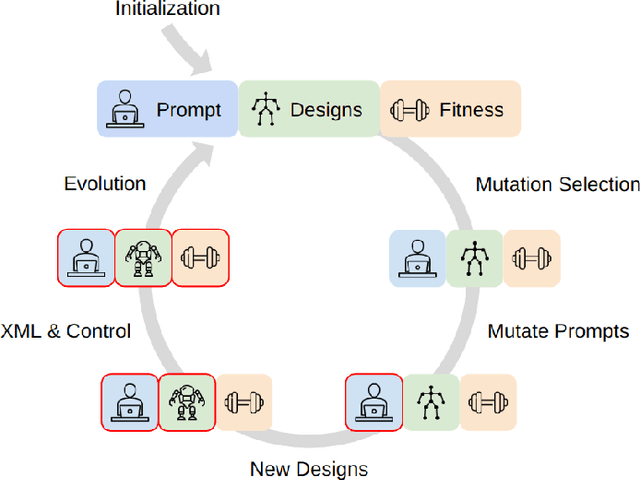

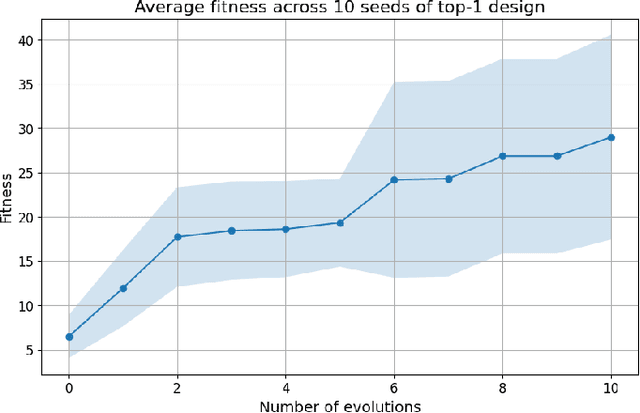

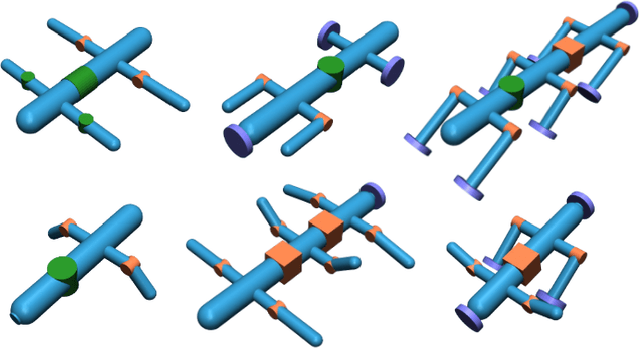

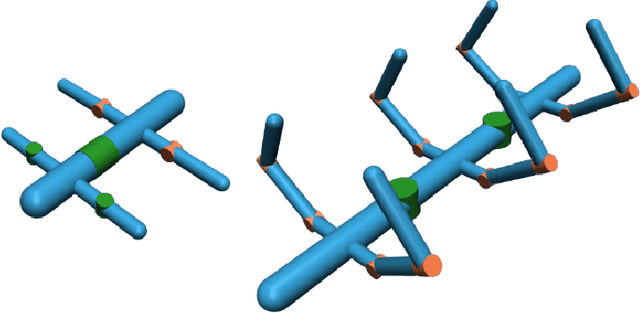

Abstract:We introduce RoboMorph, an automated approach for generating and optimizing modular robot designs using large language models (LLMs) and evolutionary algorithms. In this framework, we represent each robot design as a grammar and leverage the capabilities of LLMs to navigate the extensive robot design space, which is traditionally time-consuming and computationally demanding. By integrating automatic prompt design and a reinforcement learning based control algorithm, RoboMorph iteratively improves robot designs through feedback loops. Our experimental results demonstrate that RoboMorph can successfully generate nontrivial robots that are optimized for a single terrain while showcasing improvements in morphology over successive evolutions. Our approach demonstrates the potential of using LLMs for data-driven and modular robot design, providing a promising methodology that can be extended to other domains with similar design frameworks.
