Kristian Muri Knausgård

Gaussian Splatting: 3D Reconstruction and Novel View Synthesis, a Review

May 06, 2024

Abstract: Image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have gained attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art techniques for 3D reconstruction, including the generation of novel, unseen views. An overview of recent developments in the Gaussian Splatting method is provided, covering input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this domain and the numerous opportunities for enhancing 3D reconstruction methods, a comprehensive examination of algorithms appears essential. Consequently, this study offers a thorough overview of the latest advancements in Gaussian Splatting.

Loss and Reward Weighing for increased learning in Distributed Reinforcement Learning

Apr 25, 2023

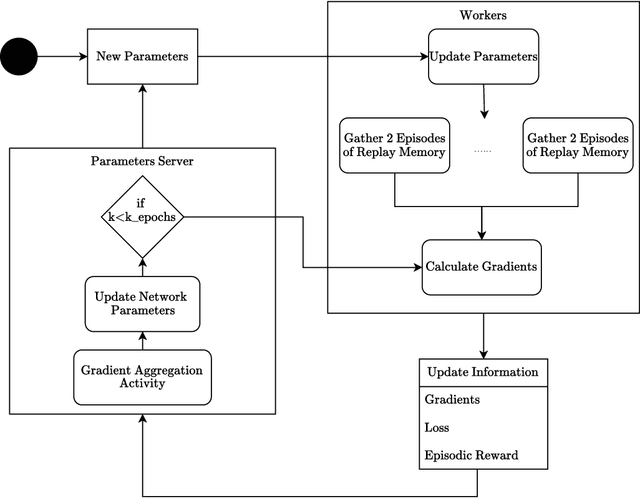

Abstract: This paper introduces two learning schemes for distributed agents in Reinforcement Learning (RL) environments, namely Reward-Weighted (R-Weighted) and Loss-Weighted (L-Weighted) gradient merger. The R/L-Weighted methods replace standard practices for training multiple agents, such as summing or averaging the gradients. The core of our methods is to scale the gradient of each actor based on how high the reward (for R-Weighted) or the loss (for L-Weighted) is compared to the other actors. During training, each agent operates in a differently initialized version of the same environment, which yields different gradients from different actors. In essence, the R-Weights and L-Weights of each agent inform the other agents of its potential, which in turn indicates which environment should be prioritized for learning. This approach to distributed learning is possible because environments that yield higher rewards, or lower losses, carry more critical information than environments that yield lower rewards or higher losses. We empirically demonstrate that the R-Weighted methods outperform the state of the art in multiple RL environments.
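The reward-weighted merging idea can be illustrated with a minimal sketch. The abstract does not give the exact weighting formula, so the softmax normalisation below is an assumption, and the names `reward_weights` and `merge_gradients` are hypothetical rather than taken from the paper.

```python
import math


def reward_weights(rewards):
    """Turn per-actor rewards into weights that sum to 1.

    Assumption: a softmax is used so that negative rewards are handled;
    the paper may normalise differently.
    """
    peak = max(rewards)  # subtract the maximum for numerical stability
    exps = [math.exp(r - peak) for r in rewards]
    total = sum(exps)
    return [e / total for e in exps]


def merge_gradients(gradients, rewards):
    """Merge per-actor gradient vectors, weighting each by its actor's reward."""
    weights = reward_weights(rewards)
    dim = len(gradients[0])
    return [
        sum(w * grad[i] for w, grad in zip(weights, gradients))
        for i in range(dim)
    ]


# Three actors, each with a two-parameter gradient (illustrative values only).
grads = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# With equal rewards the merge reduces to plain gradient averaging.
merged_equal = merge_gradients(grads, [1.0, 1.0, 1.0])

# With unequal rewards the highest-reward actor dominates the update.
merged_skewed = merge_gradients(grads, [0.0, 0.0, 5.0])
```

A loss-weighted variant would follow the same pattern with per-actor losses in place of rewards.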

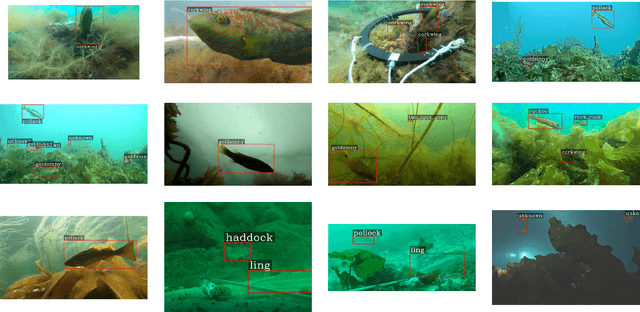

A contrastive learning approach for individual re-identification in a wild fish population

Jan 02, 2023

Abstract: In both terrestrial and marine ecology, physical tagging is a frequently used method to study population dynamics and behavior. However, such tagging techniques are increasingly being replaced by individual re-identification using image analysis. This paper introduces a contrastive learning-based model for identifying individuals. The model uses the first parts of the Inception v3 network, supported by a projection head, and we use contrastive learning to find similar or dissimilar image pairs from a collection of uniform photographs. We apply this technique to corkwing wrasse, Symphodus melops, an ecologically and commercially important fish species. Photos are taken during repeated catches of the same individuals from a wild population, where the intervals between individual sightings might range from a few days to several years. Our model achieves a one-shot accuracy of 0.35, a 5-shot accuracy of 0.56, and a 100-shot accuracy of 0.88 on our dataset.
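As a rough illustration of learning from similar and dissimilar image pairs, the sketch below computes a classic margin-based pairwise contrastive loss on two embedding vectors. The abstract does not state which contrastive loss the paper uses, so this particular formulation, the `margin` parameter, and the function names are assumptions; the embeddings stand in for the output of the projection head.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_a, emb_b, same_individual, margin=1.0):
    """Margin-based pairwise contrastive loss (an assumed formulation).

    Pairs showing the same individual are pulled together (loss = d^2).
    Pairs showing different individuals are pushed apart until they are
    at least `margin` apart (loss = max(0, margin - d)^2).
    """
    d = euclidean(emb_a, emb_b)
    if same_individual:
        return d ** 2
    return max(0.0, margin - d) ** 2


# Same fish, distant embeddings: large penalty.
loss_same = contrastive_loss([0.0, 0.0], [3.0, 4.0], same_individual=True)

# Different fish, already further apart than the margin: no penalty.
loss_diff = contrastive_loss([0.0, 0.0], [3.0, 4.0], same_individual=False)
```

At inference time, re-identification under such a scheme would typically compare a query embedding against a gallery of known individuals by distance.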

Unlocking the potential of deep learning for marine ecology: overview, applications, and outlook

Sep 29, 2021

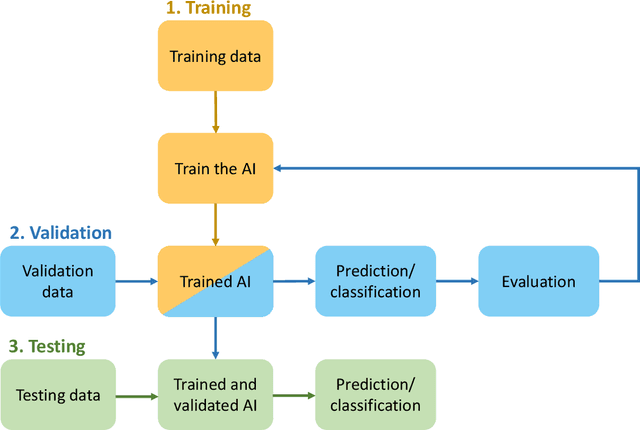

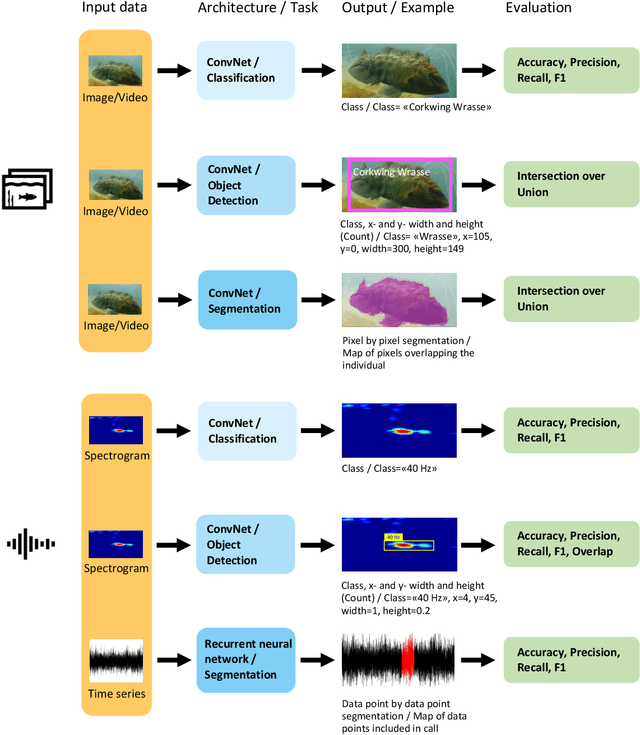

Abstract: The deep learning revolution is touching all scientific disciplines and corners of our lives as a means of harnessing the power of big data. Marine ecology is no exception. These new methods provide analysis of data from sensors, cameras, and acoustic recorders, even in real time, in ways that are reproducible and rapid. Off-the-shelf algorithms can find, count, and classify species from digital images or video and detect cryptic patterns in noisy data. Using these opportunities requires collaboration across ecological and data science disciplines, which can be challenging to initiate. To facilitate these collaborations and promote the use of deep learning towards ecosystem-based management of the sea, this paper aims to bridge the gap between marine ecologists and computer scientists. We provide insight into popular deep learning approaches for ecological data analysis in plain language, focusing on the techniques of supervised learning with deep neural networks, and illustrate challenges and opportunities through established and emerging applications of deep learning to marine ecology. We use established and future-looking case studies on plankton, fishes, marine mammals, pollution, and nutrient cycling that involve object detection, classification, tracking, and segmentation of visualized data. We conclude with a broad outlook of the field's opportunities and challenges, including potential technological advances and issues with managing complex data sets.

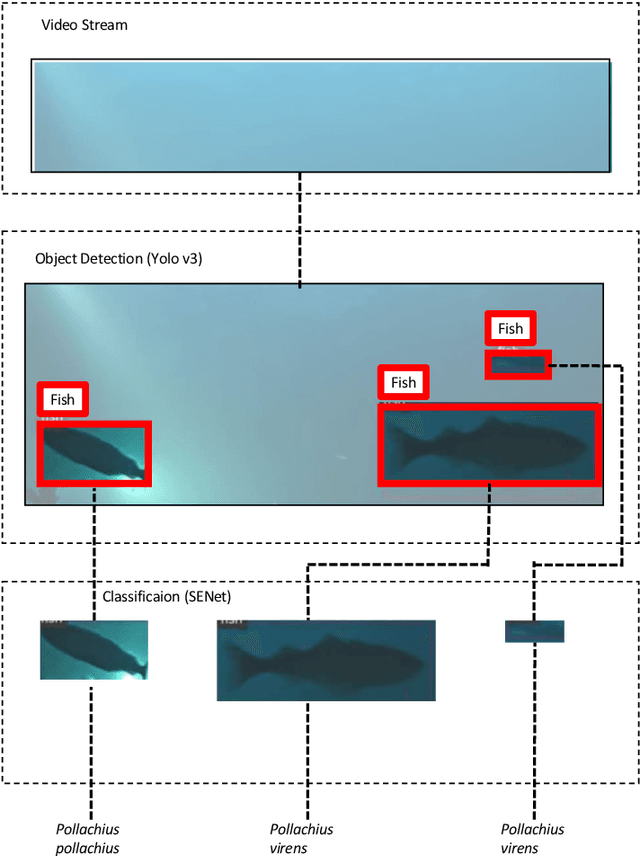

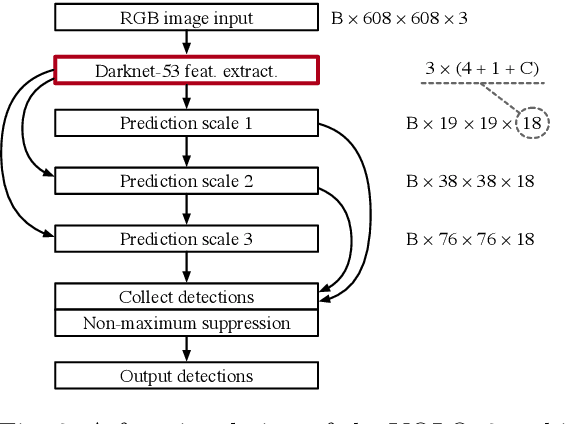

Temperate Fish Detection and Classification: a Deep Learning based Approach

May 14, 2020

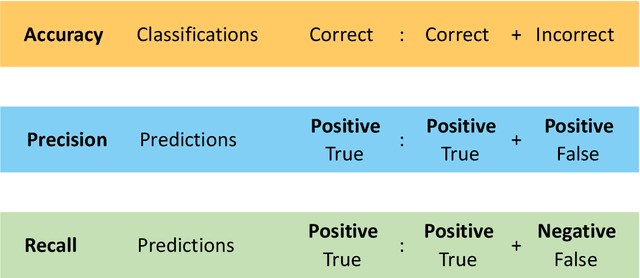

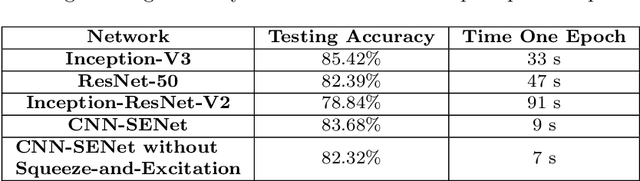

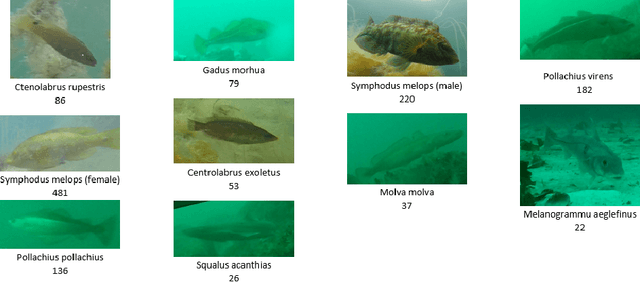

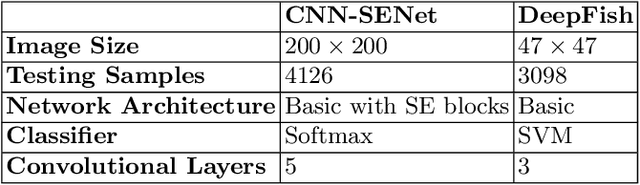

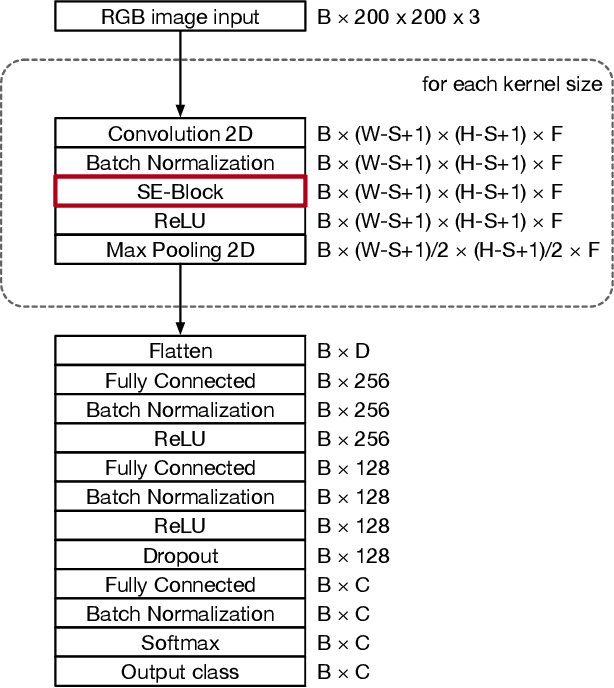

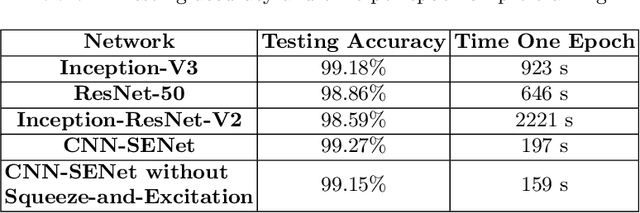

Abstract: A wide range of applications in marine ecology make extensive use of underwater cameras. Still, to efficiently process the vast amount of data generated, we need to develop tools that can automatically detect and recognize species captured on film. Classifying fish species from videos and images in natural environments can be challenging because of noise and variation in illumination and the surrounding habitat. In this paper, we propose a two-step deep learning approach for the detection and classification of temperate fishes without pre-filtering. The first step is to detect each individual fish in an image, independent of species and sex. For this purpose, we employ the You Only Look Once (YOLO) object detection technique. In the second step, we adopt a Convolutional Neural Network (CNN) with the Squeeze-and-Excitation (SE) architecture for classifying each fish in the image without pre-filtering. We apply transfer learning to overcome the limited training samples of temperate fishes and to improve the accuracy of the classification. This is done by training the object detection model on ImageNet and the fish classifier on a public dataset (Fish4Knowledge), whereupon both the object detector and the classifier are updated with the temperate fishes of interest. The weights obtained from pre-training are used as the starting point for post-training. Our solution achieves a state-of-the-art accuracy of 99.27% on the pre-training. The accuracies on the post-training are good: 83.68% and 87.74% with and without image augmentation, respectively, indicating that the solution is viable with a more extensive dataset.

Biometric Fish Classification of Temperate Species Using Convolutional Neural Network with Squeeze-and-Excitation

Apr 04, 2019

Abstract: Our understanding of coastal ecosystems, and our ability to effectively monitor and manage them, are severely limited by observation methods. Automatic recognition of species in natural environments is a promising tool that would revolutionize video and image analysis for a wide range of applications in marine ecology. However, classifying fish from images captured by underwater cameras is in general very challenging due to noise and illumination variations in water. Previous classification methods in the literature rely on filtering the images to separate the fish from the background or sharpening the images by removing background noise. This pre-filtering process may negatively impact the classification accuracy. In this work, we propose a Convolutional Neural Network (CNN) using the Squeeze-and-Excitation (SE) architecture for classifying images of fish without pre-filtering. Different from conventional schemes, this scheme is divided into two steps. The first step, named pre-training, is to train the fish classifier on a public data set, i.e., Fish4Knowledge, without using image augmentation. The second step, named post-training, is to train the classifier on a new data set consisting of the species that we are interested in classifying. The weights obtained from pre-training are used as the starting point for post-training. This is also known as transfer learning. Our solution achieves a state-of-the-art accuracy of 99.27% on the pre-training. The accuracy on the post-training is 83.68%. Experiments on the post-training with image augmentation yield an accuracy of 87.74%, indicating that the solution is viable with a larger data set.
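For readers unfamiliar with the Squeeze-and-Excitation architecture mentioned above, the following is a minimal pure-Python sketch of a single SE block. It is not the authors' network: biases are omitted, and the weight matrices `w1` and `w2` and the example values are illustrative assumptions. In a real model these weights are learned and the block sits inside a CNN.

```python
import math


def se_block(feature_maps, w1, w2):
    """Apply one Squeeze-and-Excitation block (biases omitted for brevity).

    feature_maps: list of C channels, each an H x W nested list of floats.
    w1: (C/r) x C weights of the bottleneck layer (r is the reduction ratio).
    w2: C x (C/r) weights of the expansion layer.
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_maps
    ]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: reweight every value in a channel by that channel's gate.
    return [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_maps, gates)
    ]


# Two 2x2 channels and a reduction to one hidden unit (illustrative values).
maps = [[[1.0, 2.0], [3.0, 4.0]], [[2.0, 2.0], [2.0, 2.0]]]
w1 = [[0.5, 0.5]]
w2 = [[1.0], [-1.0]]
recalibrated = se_block(maps, w1, w2)
```

With these example weights the first channel is emphasised and the second suppressed, which is the channel-wise recalibration that the SE architecture is designed to learn.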
