Temperate Fish Detection and Classification: a Deep Learning based Approach

Paper and Code

May 14, 2020

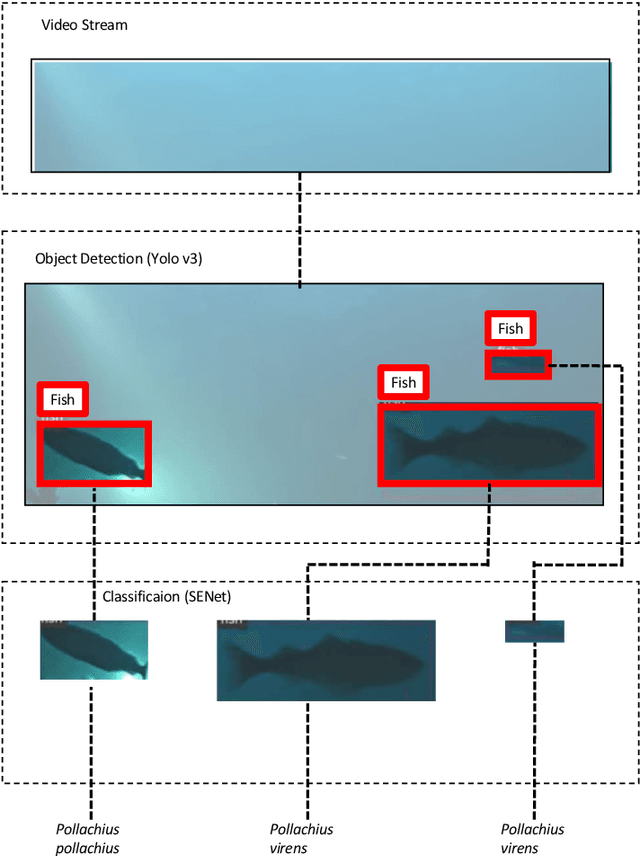

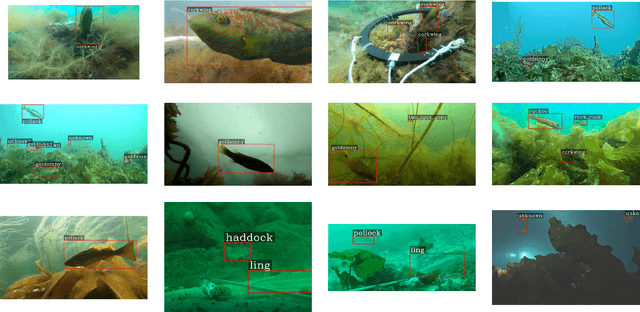

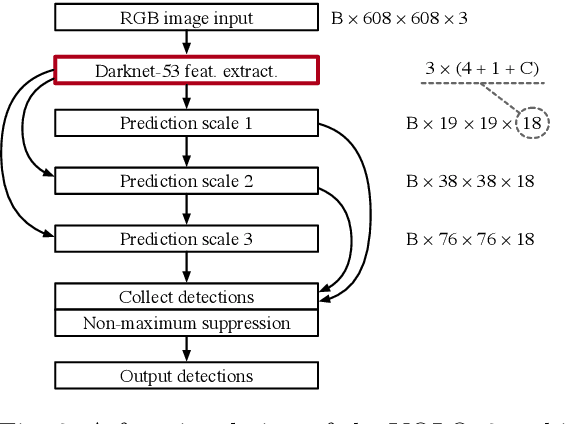

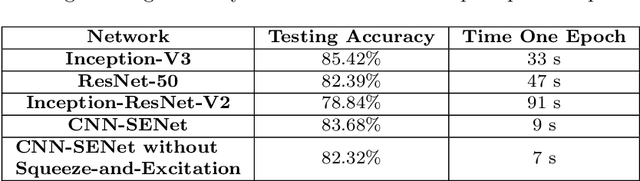

A wide range of applications in marine ecology extensively uses underwater cameras. Still, to efficiently process the vast amount of data generated, we need to develop tools that can automatically detect and recognize species captured on film. Classifying fish species from videos and images in natural environments can be challenging because of noise and variation in illumination and the surrounding habitat. In this paper, we propose a two-step deep learning approach for the detection and classification of temperate fishes without pre-filtering. The first step is to detect each single fish in an image, independent of species and sex. For this purpose, we employ the You Only Look Once (YOLO) object detection technique. In the second step, we adopt a Convolutional Neural Network (CNN) with the Squeeze-and-Excitation (SE) architecture for classifying each fish in the image without pre-filtering. We apply transfer learning to overcome the limited training samples of temperate fishes and to improve the accuracy of the classification. This is done by training the object detection model with ImageNet and the fish classifier via a public dataset (Fish4Knowledge), whereupon both the object detection and classifier are updated with temperate fishes of interest. The weights obtained from pre-training are applied to post-training as a priori. Our solution achieves the state-of-the-art accuracy of 99.27\% on the pre-training. The percentage values for accuracy on the post-training are good; 83.68\% and 87.74\% with and without image augmentation, respectively, indicating that the solution is viable with a more extensive dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge