Krishanu Sarker

Reducing Risk and Uncertainty of Deep Neural Networks on Diagnosing COVID-19 Infection

Apr 28, 2021

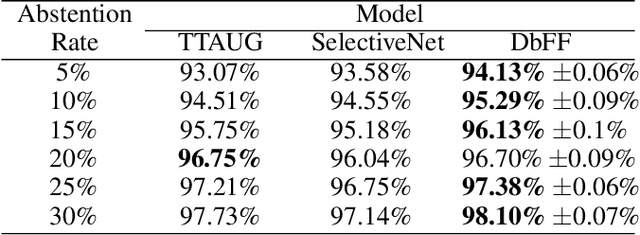

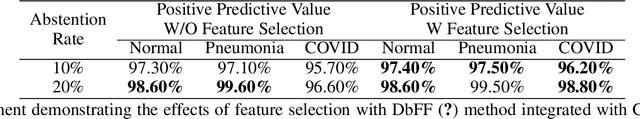

Abstract: Effective and reliable screening of patients via Computer-Aided Diagnosis can play a crucial part in the battle against COVID-19. Most existing works focus on developing sophisticated methods that yield high detection performance but do not address predictive uncertainty. In this work, we introduce uncertainty estimation to detect confusing cases for expert referral, addressing the unreliability of state-of-the-art (SOTA) DNNs on COVID-19 detection. To the best of our knowledge, we are the first to address this issue for the COVID-19 detection problem. We investigate a number of SOTA uncertainty estimation methods on a publicly available COVID dataset and present our experimental findings. In collaboration with medical professionals, we further validate the results to ensure the viability of the best-performing method in clinical practice.
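As a rough illustration of uncertainty-based referral, the sketch below scores each prediction by the entropy of its softmax output and flags high-entropy cases for expert review. This is not taken from the paper: predictive entropy is only one common uncertainty measure, and the function names, the threshold value, and the example probabilities are all hypothetical.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one softmax probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def refer_uncertain(prob_rows, threshold):
    """Return indices of cases whose entropy exceeds `threshold`.

    `threshold` is a hypothetical tuning knob; in practice it would be
    chosen on held-out data to balance referral rate against error rate.
    """
    return [
        i for i, probs in enumerate(prob_rows)
        if predictive_entropy(probs) > threshold
    ]


# Hypothetical softmax outputs for three scans over (COVID, non-COVID).
predictions = [[0.98, 0.02], [0.55, 0.45], [0.10, 0.90]]
referred = refer_uncertain(predictions, threshold=0.5)
print(referred)
```

Only the second scan, whose two class probabilities are nearly equal, is flagged for referral under these assumed values.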

A Unified Plug-and-Play Framework for Effective Data Denoising and Robust Abstention

Sep 25, 2020

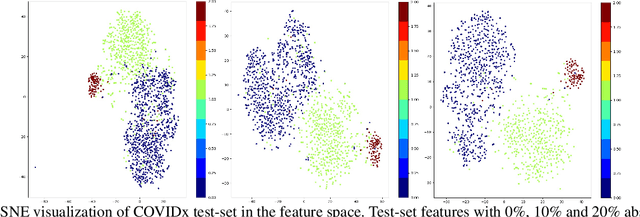

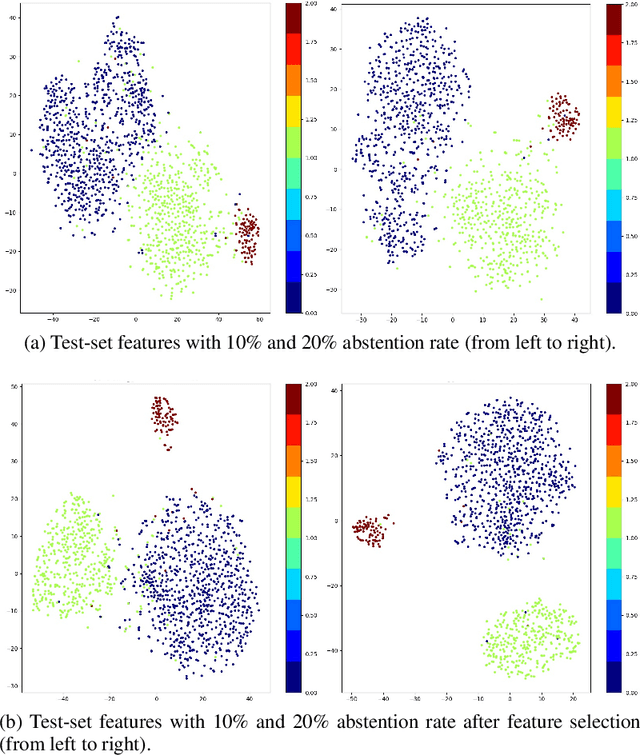

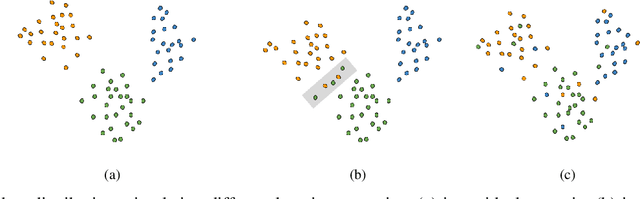

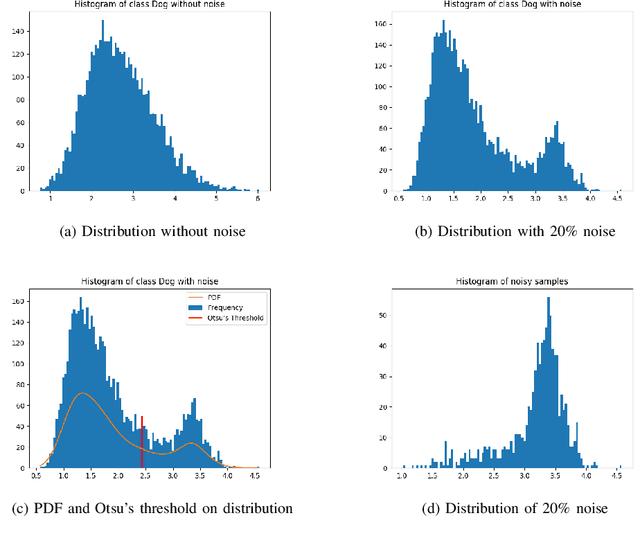

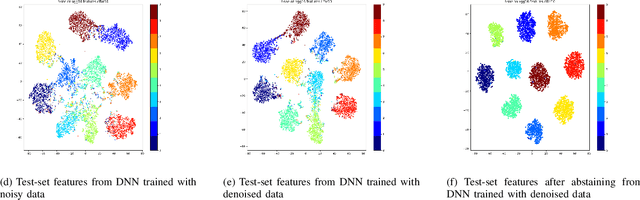

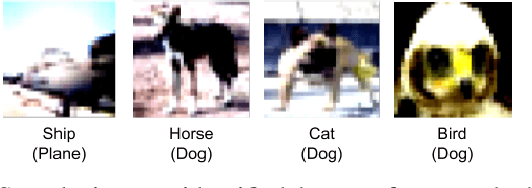

Abstract: The success of Deep Neural Networks (DNNs) depends heavily on data quality. Moreover, predictive uncertainty makes high-performing DNNs risky for real-world deployment. In this paper, we address these two issues by proposing a unified filtering framework that leverages the underlying data density to denoise training data effectively and to abstain from predicting on uncertain test data points. Our framework uses the underlying data distribution to differentiate between noisy and clean samples without requiring any modification to existing DNN architectures or loss functions. Extensive experiments on multiple image classification datasets and multiple CNN architectures demonstrate that our simple yet effective framework can outperform state-of-the-art techniques in denoising training data and in abstaining on uncertain test data.
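To make the idea of density-based filtering concrete, here is a minimal sketch that rejects samples lying in sparse regions of their own class. It is an assumption-laden stand-in, not the paper's framework: it uses the mean distance to the k nearest same-class neighbours as a crude density proxy, and the names `knn_density_score`, `filter_low_density`, `k`, and `max_score` are hypothetical.

```python
import math


def knn_density_score(point, others, k):
    """Mean distance from `point` to its k nearest neighbours in `others`.

    A larger score means the point sits in a sparser (lower-density) region.
    """
    dists = sorted(math.dist(point, other) for other in others)
    return sum(dists[:k]) / k


def filter_low_density(features, labels, k, max_score):
    """Split sample indices into (kept, rejected) by same-class density."""
    kept, rejected = [], []
    for i, (x, y) in enumerate(zip(features, labels)):
        # Compare each sample only against other samples with the same label.
        same_class = [
            f for j, (f, lab) in enumerate(zip(features, labels))
            if lab == y and j != i
        ]
        if len(same_class) >= k and knn_density_score(x, same_class, k) <= max_score:
            kept.append(i)
        else:
            rejected.append(i)
    return kept, rejected


# Hypothetical 2-D feature vectors; index 3 carries label 0 but lies far
# from the other label-0 points, as a mislabeled sample might.
features = [(0, 0), (0, 1), (1, 0), (5, 5), (9, 9), (9, 10), (10, 9)]
labels = [0, 0, 0, 0, 1, 1, 1]
kept, rejected = filter_low_density(features, labels, k=2, max_score=2.0)
print(kept, rejected)
```

The same scoring could in principle be applied at test time to abstain on points that fall in low-density regions, which is why a single filter can serve both purposes in this sketch.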

Towards Robust Human Activity Recognition from RGB Video Stream with Limited Labeled Data

Dec 16, 2018

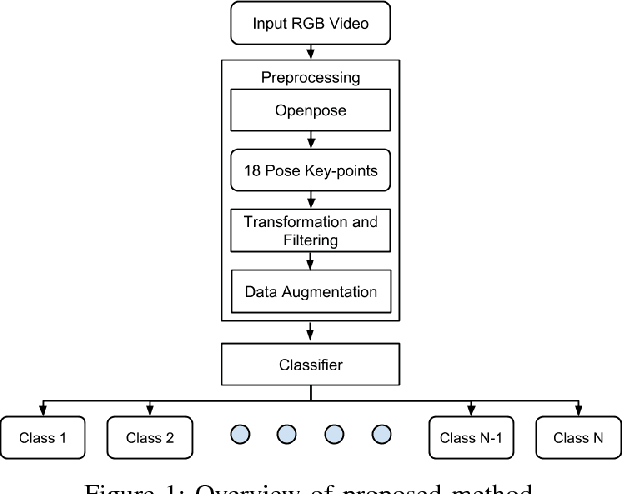

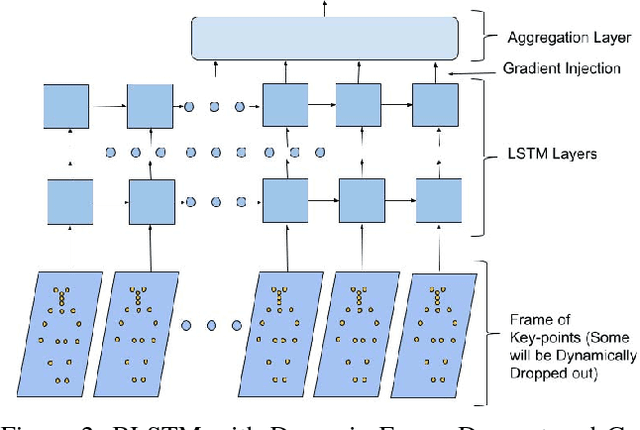

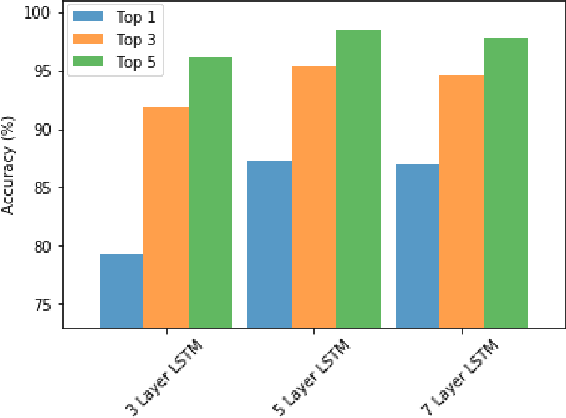

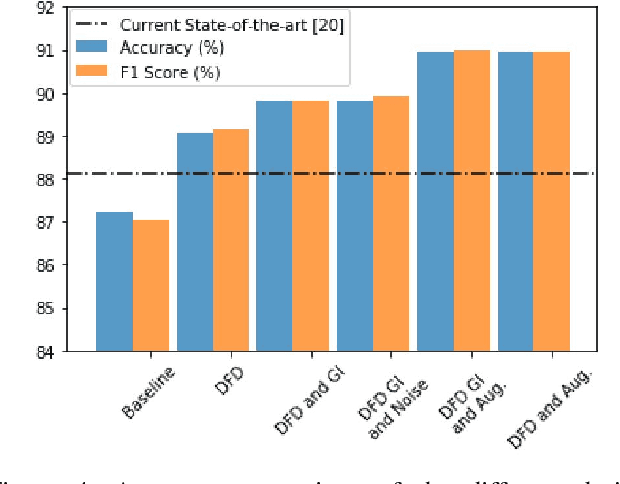

Abstract: Human activity recognition based on video streams has received considerable attention in recent years. Due to the lack of depth information, RGB video based activity recognition performs poorly compared to RGB-D video based solutions. On the other hand, acquiring depth information, inertial data, etc. is costly and requires special equipment, whereas RGB video streams are available from ordinary cameras. Hence, our goal is to investigate whether similar or even higher accuracy can be achieved with the RGB-only modality. To this end, we propose a novel framework that couples skeleton data extracted from RGB video with a deep Bidirectional Long Short-Term Memory (BLSTM) model for activity recognition. A major challenge in training such a deep network is the limited training data, and relying on the RGB-only stream significantly exacerbates the difficulty. We therefore propose a set of algorithmic techniques to train this model effectively, e.g., data augmentation, dynamic frame dropout, and gradient injection. The experiments demonstrate that our RGB-only solution surpasses, by a notable margin, state-of-the-art approaches that all exploit RGB-D video streams. This makes our solution widely deployable with ordinary cameras.
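The abstract does not define dynamic frame dropout, so the sketch below shows one plausible reading only: each time a skeleton sequence is sampled for training, frames are dropped independently at random while temporal order is preserved. The function name `drop_frames`, its parameters, and the toy sequence are hypothetical and should not be read as the paper's exact procedure.

```python
import random


def drop_frames(sequence, drop_prob, rng, min_frames=1):
    """Return a copy of `sequence` with frames dropped at random.

    Each frame is removed independently with probability `drop_prob`;
    surviving frames keep their original order. If too few frames
    survive, fall back to the first `min_frames` frames.
    """
    kept = [frame for frame in sequence if rng.random() >= drop_prob]
    if len(kept) < min_frames:
        kept = list(sequence[:min_frames])
    return kept


# Hypothetical skeleton sequence: 10 frames, each with one (x, y) joint.
frames = [[(float(t), 0.0)] for t in range(10)]

# A fresh call per epoch yields a different subsequence of the same clip.
rng = random.Random(0)
augmented = drop_frames(frames, drop_prob=0.3, rng=rng)
print(len(frames), len(augmented))
```

Because a new subset of frames is drawn on every call, a limited pool of labeled clips yields many distinct training sequences, which is the kind of augmentation effect the abstract alludes to.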
