A Unified Plug-and-Play Framework for Effective Data Denoising and Robust Abstention

Sep 25, 2020

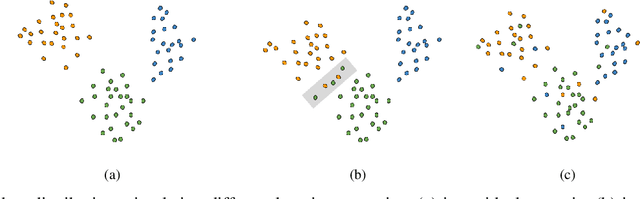

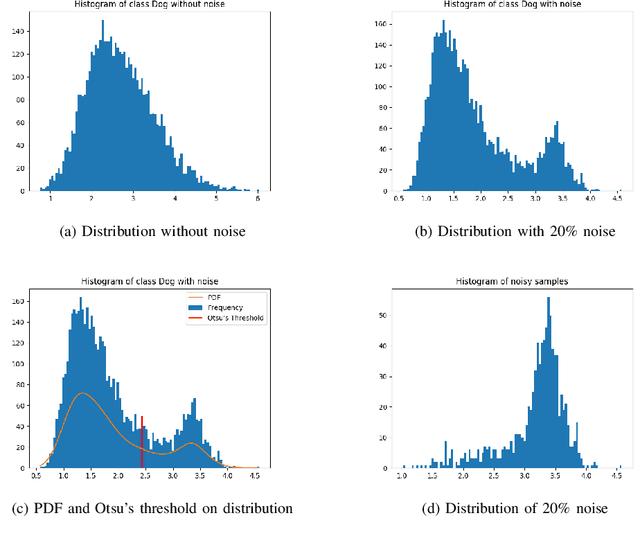

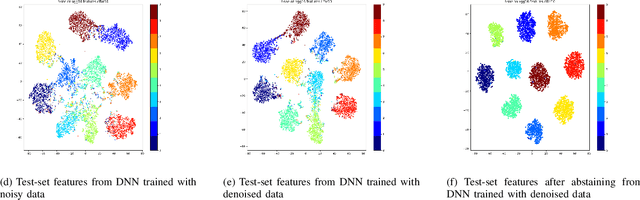

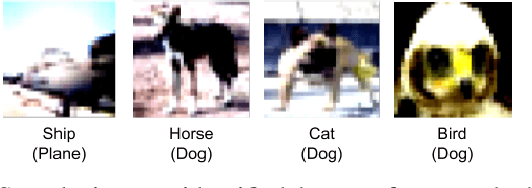

The success of Deep Neural Networks (DNNs) depends heavily on data quality. Moreover, predictive uncertainty makes even high-performing DNNs risky for real-world deployment. In this paper, we address both issues with a unified filtering framework that leverages the underlying data density to denoise training data and to abstain from predicting on uncertain test data points. The framework uses the underlying data distribution to differentiate between noisy and clean samples without requiring any modification to existing DNN architectures or loss functions. Extensive experiments on multiple image classification datasets and multiple CNN architectures demonstrate that our simple yet effective framework can outperform state-of-the-art techniques both in denoising training data and in abstaining on uncertain test data.
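
The abstract does not spell out how data density is estimated or thresholded, so the following is only a minimal sketch of the general idea, not the authors' method. It assumes feature vectors have already been extracted from a trained network, uses the inverse mean distance to the k nearest same-class neighbours as a stand-in density score, and flags points whose score falls below a fraction of the class median. The function names (`knn_density`, `flag_noisy`, `abstain`) and the parameters `k` and `ratio` are hypothetical.

```python
import numpy as np

def knn_density(queries, references, k=5, exclude_self=False):
    """Inverse mean distance to the k nearest reference points (higher = denser).

    Assumption: this k-NN score is an illustrative density proxy only.
    """
    # Pairwise Euclidean distances, shape (n_queries, n_references).
    d = np.linalg.norm(queries[:, None, :] - references[None, :, :], axis=2)
    d.sort(axis=1)
    # When queries are the references themselves, skip the zero self-distance.
    start = 1 if exclude_self else 0
    return 1.0 / (d[:, start:start + k].mean(axis=1) + 1e-12)

def flag_noisy(features, labels, k=5, ratio=0.5):
    """Flag training points lying in a low-density region of their labelled class."""
    noisy = np.zeros(len(labels), dtype=bool)
    for c in np.unique(labels):
        idx = np.where(labels == c)[0]
        dens = knn_density(features[idx], features[idx], k=k, exclude_self=True)
        # Hypothetical rule: below `ratio` times the class median counts as noise.
        noisy[idx] = dens < ratio * np.median(dens)
    return noisy

def abstain(test_features, predicted, train_features, train_labels, k=5, ratio=0.5):
    """Abstain on test points that are far from the training data of their predicted class."""
    out = np.zeros(len(predicted), dtype=bool)
    for c in np.unique(predicted):
        ref = train_features[train_labels == c]
        ref_median = np.median(knn_density(ref, ref, k=k, exclude_self=True))
        mask = predicted == c
        dens = knn_density(test_features[mask], ref, k=k)
        out[mask] = dens < ratio * ref_median
    return out
```

Because both functions operate only on extracted features and labels, a filter of this kind can sit beside an existing model without touching its architecture or loss, which is the plug-and-play property the abstract emphasises. Each class needs more than `k` training points for the scores to be meaningful.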