Koundinya Vajjha

Formalization of a Stochastic Approximation Theorem

Feb 12, 2022Abstract:Stochastic approximation algorithms are iterative procedures which are used to approximate a target value in an environment where the target is unknown and direct observations are corrupted by noise. These algorithms are useful, for instance, for root-finding and function minimization when the target function or model is not directly known. Originally introduced in a 1951 paper by Robbins and Monro, the field of Stochastic approximation has grown enormously and has come to influence application domains from adaptive signal processing to artificial intelligence. As an example, the Stochastic Gradient Descent algorithm which is ubiquitous in various subdomains of Machine Learning is based on stochastic approximation theory. In this paper, we give a formal proof (in the Coq proof assistant) of a general convergence theorem due to Aryeh Dvoretzky, which implies the convergence of important classical methods such as the Robbins-Monro and the Kiefer-Wolfowitz algorithms. In the process, we build a comprehensive Coq library of measure-theoretic probability theory and stochastic processes.

CertRL: Formalizing Convergence Proofs for Value and Policy Iteration in Coq

Sep 23, 2020

Abstract:Reinforcement learning algorithms solve sequential decision-making problems in probabilistic environments by optimizing for long-term reward. The desire to use reinforcement learning in safety-critical settings inspires a recent line of work on formally constrained reinforcement learning; however, these methods place the implementation of the learning algorithm in their Trusted Computing Base. The crucial correctness property of these implementations is a guarantee that the learning algorithm converges to an optimal policy. This paper begins the work of closing this gap by developing a Coq formalization of two canonical reinforcement learning algorithms: value and policy iteration for finite state Markov decision processes. The central results are a formalization of Bellman's optimality principle and its proof, which uses a contraction property of Bellman optimality operator to establish that a sequence converges in the infinite horizon limit. The CertRL development exemplifies how the Giry monad and mechanized metric coinduction streamline optimality proofs for reinforcement learning algorithms. The CertRL library provides a general framework for proving properties about Markov decision processes and reinforcement learning algorithms, paving the way for further work on formalization of reinforcement learning algorithms.

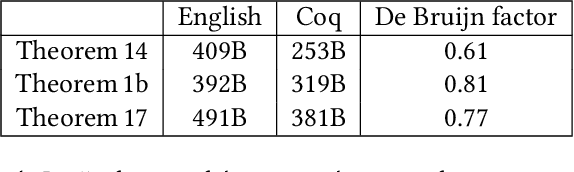

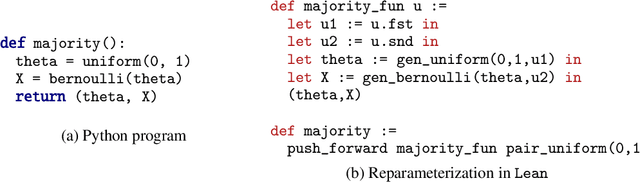

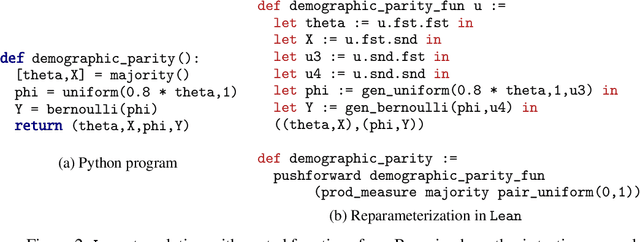

Verification of ML Systems via Reparameterization

Jul 14, 2020

Abstract:As machine learning is increasingly used in essential systems, it is important to reduce or eliminate the incidence of serious bugs. A growing body of research has developed machine learning algorithms with formal guarantees about performance, robustness, or fairness. Yet, the analysis of these algorithms is often complex, and implementing such systems in practice introduces room for error. Proof assistants can be used to formally verify machine learning systems by constructing machine checked proofs of correctness that rule out such bugs. However, reasoning about probabilistic claims inside of a proof assistant remains challenging. We show how a probabilistic program can be automatically represented in a theorem prover using the concept of \emph{reparameterization}, and how some of the tedious proofs of measurability can be generated automatically from the probabilistic program. To demonstrate that this approach is broad enough to handle rather different types of machine learning systems, we verify both a classic result from statistical learning theory (PAC-learnability of decision stumps) and prove that the null model used in a Bayesian hypothesis test satisfies a fairness criterion called demographic parity.

A Formal Proof of PAC Learnability for Decision Stumps

Nov 29, 2019Abstract:We present a machine-checked, formal proof of PAC learnability of the concept class of decision stumps. A formal proof has every step checked and justified using fundamental axioms of mathematics. We construct and check our proof using the Lean theorem prover. Though such a proof appears simple, a few analytic and measure-theoretic subtleties arise when carrying it out fully formally. We explain how we can cleanly separate out the parts that deal with these subtleties by using Lean features and a category theoretic construction called the Giry monad.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge