Konstantinos E. Themelis

Online Low-Rank Subspace Learning from Incomplete Data: A Bayesian View

Feb 12, 2016

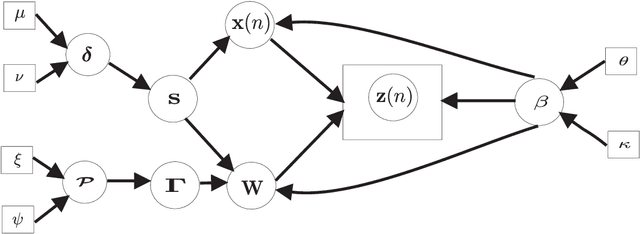

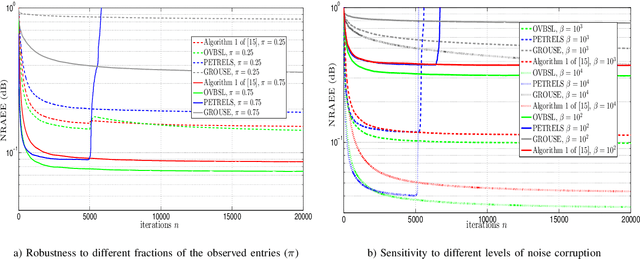

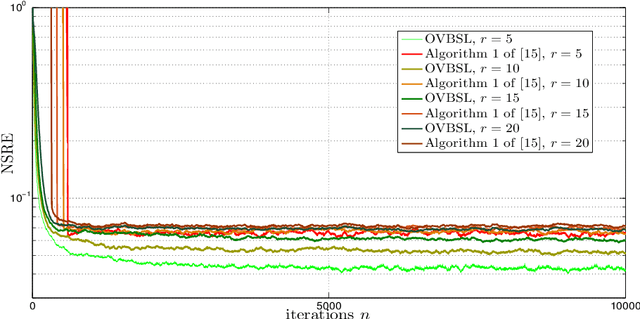

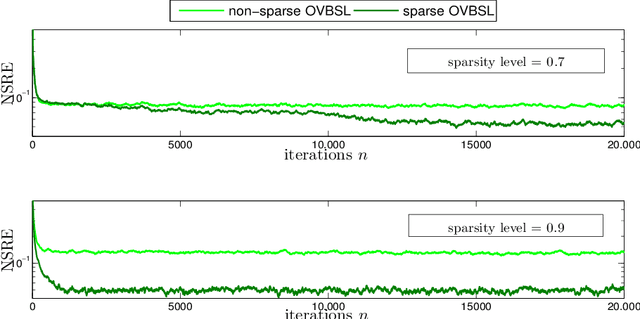

Abstract: Extracting the underlying low-dimensional space where high-dimensional signals often reside has been at the center of numerous algorithms in the signal processing and machine learning literature over the past few decades. At the same time, working with incomplete (partly observed) large-scale datasets has recently become commonplace for diverse reasons. The so-called *big data era* we are currently living in calls for devising online subspace learning algorithms that can suitably handle incomplete data. Their envisaged objective is to *recursively* estimate the unknown subspace by processing streaming data sequentially, thus reducing computational complexity while obviating the need to store the whole dataset in memory. In this paper, an online variational Bayes subspace learning algorithm from partial observations is presented. To account for the fact that the true rank of the subspace is commonly unknown in practice, low-rankness is explicitly imposed on the sought subspace data matrix by exploiting sparse Bayesian learning principles. Moreover, the adopted hierarchical Bayesian scheme favors sparsity on the subspace matrix *simultaneously* with low-rankness. In doing so, the proposed algorithm becomes adept at dealing with applications in which the underlying subspace may also be sparse, e.g., sparse dictionary learning problems. As shown, the new subspace tracking scheme outperforms its state-of-the-art counterparts in terms of estimation accuracy in a variety of experiments conducted on simulated and real data.

A variational Bayes framework for sparse adaptive estimation

Jan 13, 2014

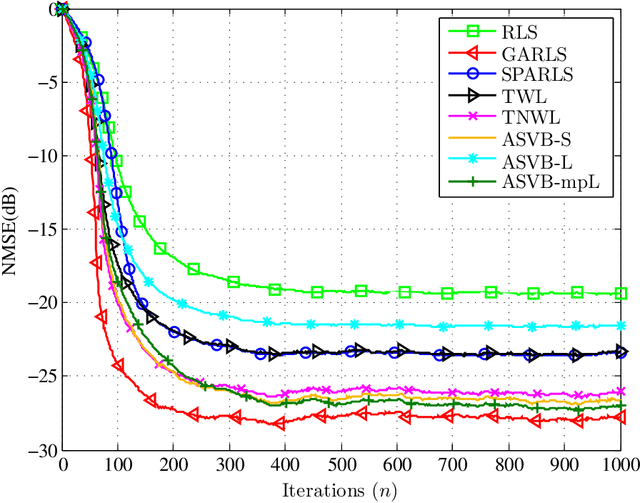

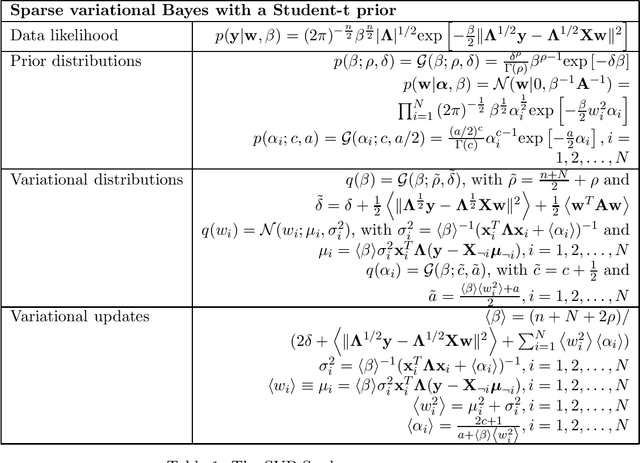

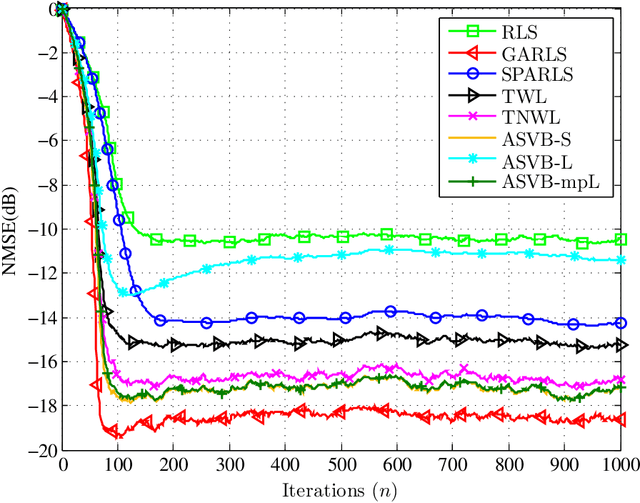

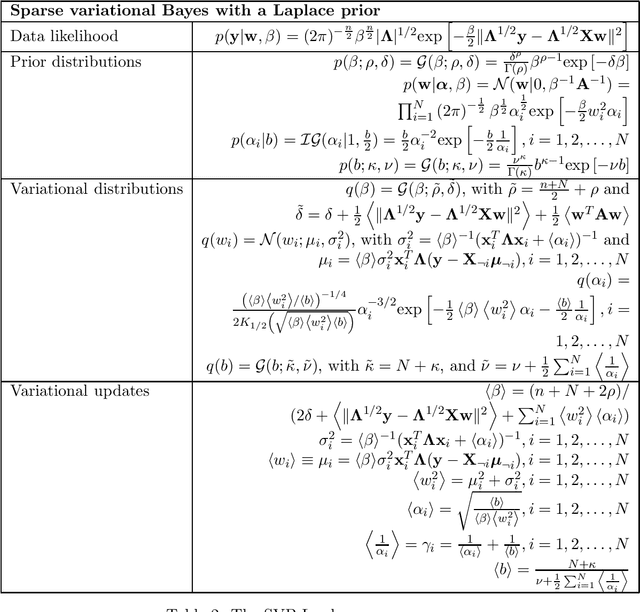

Abstract: Recently, a number of deterministic algorithms, mostly of the $\ell_1$-norm regularized least squares type, have been proposed to address the problem of *sparse* adaptive signal estimation and system identification. From a Bayesian perspective, this task is equivalent to maximum a posteriori probability estimation under a sparsity-promoting heavy-tailed prior for the parameters of interest. Following a different approach, this paper develops a unifying framework of sparse *variational Bayes* algorithms that employ heavy-tailed priors in conjugate hierarchical form to facilitate posterior inference. The resulting fully automated variational schemes are first presented in a batch iterative form. It is then shown that, by properly exploiting the structure of the batch estimation task, new sparse adaptive variational Bayes algorithms can be derived that are able to impose and track sparsity during real-time processing in a time-varying environment. The most important feature of the proposed algorithms is that they completely eliminate the need for computationally costly parameter fine-tuning, a necessary ingredient of sparse adaptive deterministic algorithms. Extensive simulation results are provided to demonstrate the effectiveness of the new sparse variational Bayes algorithms against state-of-the-art deterministic techniques for adaptive channel estimation. The results show that the proposed algorithms are numerically robust and generally exhibit superior estimation performance compared to their deterministic counterparts.
