Klaus Kirchberg

View Invariant Human Body Detection and Pose Estimation from Multiple Depth Sensors

May 08, 2020

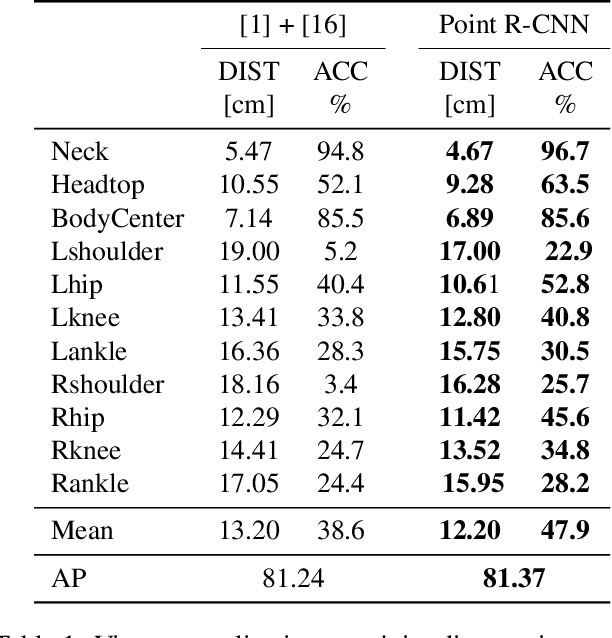

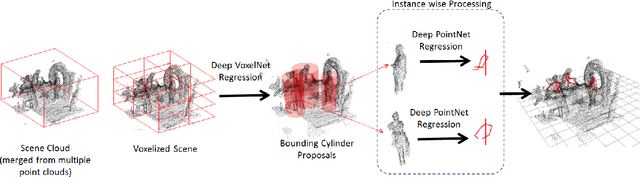

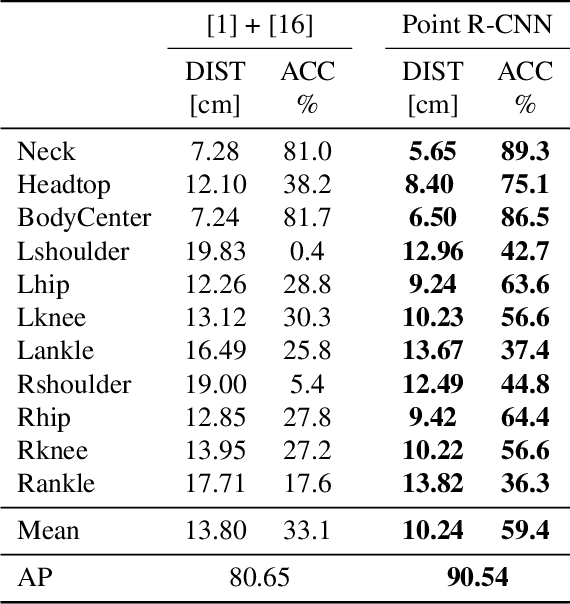

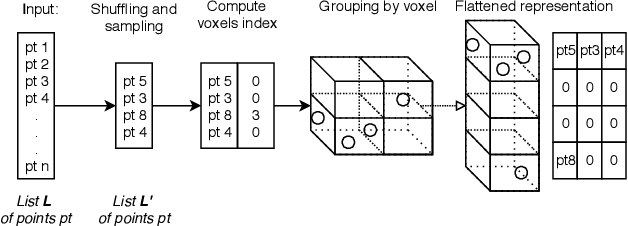

Abstract: Point cloud based methods have produced promising results in areas such as 3D object detection for autonomous driving. However, most recent point cloud work focuses on data from a single depth sensor, and less work has been done on indoor monitoring applications such as operating room monitoring in hospitals or indoor surveillance. In these scenarios, multiple cameras are often used to tackle occlusion problems. We propose Point R-CNN, an end-to-end multi-person 3D pose estimation network that uses multiple point cloud sources. We conduct extensive experiments on the CMU Panoptic dataset and the MVOR operating room dataset to simulate challenging real-world cases, such as individual camera failures, varied target appearances, and complex cluttered scenes. Most previous methods attempt to use information from multiple sensors by building complex fusion models, which often generalize poorly. In contrast, we fuse the information at the input level by concatenating the point clouds, which is efficient. We also show that our end-to-end network greatly outperforms cascaded state-of-the-art models.
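
The input-level fusion mentioned in the abstract can be illustrated with a minimal sketch. It assumes that each sensor provides an `(N, 3)` array of points and a known 4x4 sensor-to-world extrinsic matrix; the function name `fuse_point_clouds` and the handling of failed cameras as empty arrays are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np

def fuse_point_clouds(clouds, extrinsics):
    """Map each sensor's (N_i, 3) cloud into a shared world frame and concatenate.

    clouds: list of (N_i, 3) arrays, one per depth sensor (assumed layout).
    extrinsics: list of 4x4 sensor-to-world transforms (assumed to be calibrated).
    """
    fused = []
    for points, transform in zip(clouds, extrinsics):
        if points.size == 0:
            continue  # a failed camera simply contributes no points
        # Append a column of ones so the 4x4 transform can be applied.
        homogeneous = np.hstack([points, np.ones((points.shape[0], 1))])
        fused.append((homogeneous @ transform.T)[:, :3])
    if not fused:
        return np.empty((0, 3))
    return np.vstack(fused)

# Two working sensors and one failed sensor.
cloud_a = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
cloud_b = np.array([[0.0, 0.0, 0.0]])
cloud_c = np.empty((0, 3))

identity = np.eye(4)
shifted = np.eye(4)
shifted[:3, 3] = [0.0, 0.0, 2.0]  # sensor b sits 2 units along z

merged = fuse_point_clouds([cloud_a, cloud_b, cloud_c], [identity, shifted, identity])
```

Because the fusion is a plain concatenation, a missing sensor changes only the number of input points and not the structure of the model input, which is one plausible reason this kind of fusion can tolerate individual camera failures.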

Repetitive Motion Estimation Network: Recover cardiac and respiratory signal from thoracic imaging

Nov 08, 2018

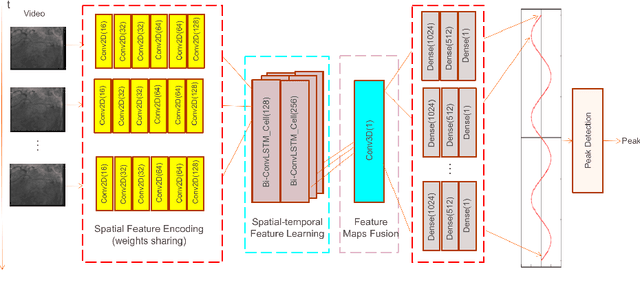

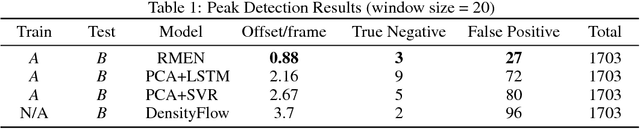

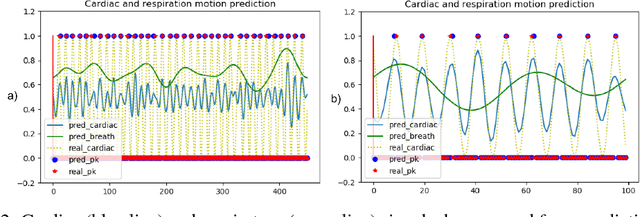

Abstract: Tracking organ motion is important in image-guided interventions, but motion annotations are not always readily available. We therefore propose the Repetitive Motion Estimation Network (RMEN) to recover cardiac and respiratory signals. It learns spatio-temporal repetition patterns, embedding high-dimensional motion manifolds into 1D vectors using partial annotations of motion phase boundaries. Compared with the best alternative models, RMEN decreased QRS peak detection offsets by 59.3%. Results showed that RMEN could handle cases of irregular cardiac and respiratory motion. The repetitive motion patterns learned by RMEN were visualized in the feature maps.
