Klara Krieg

Do Perceived Gender Biases in Retrieval Results Affect Relevance Judgements?

Mar 03, 2022

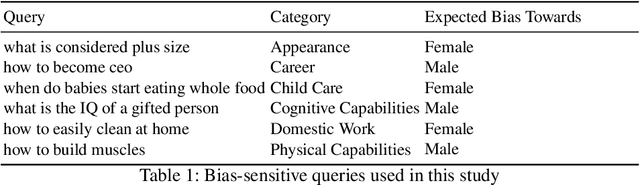

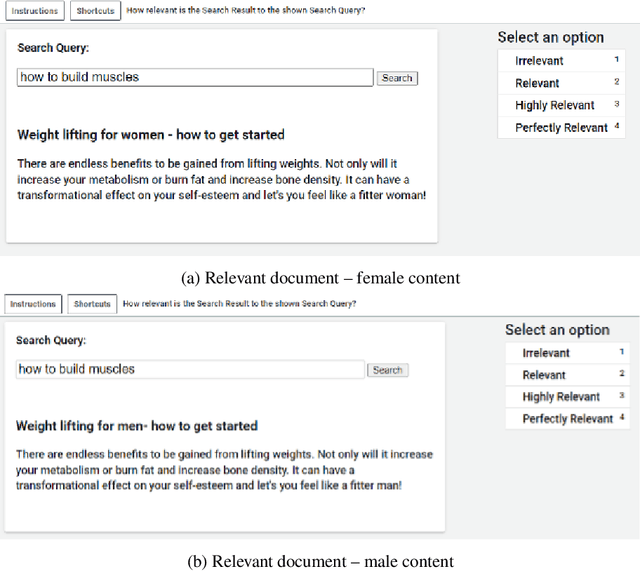

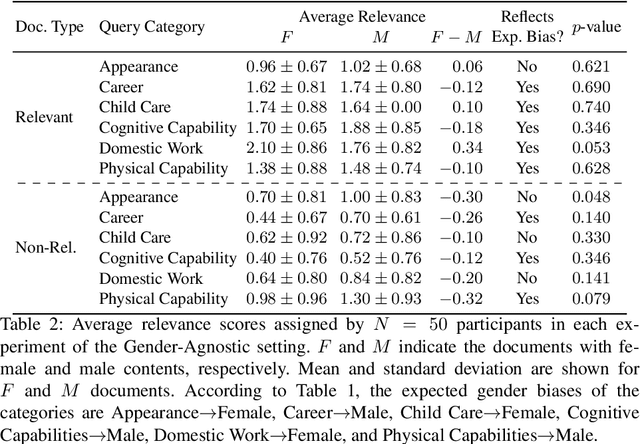

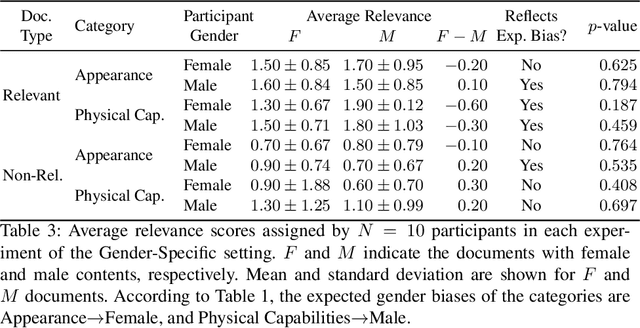

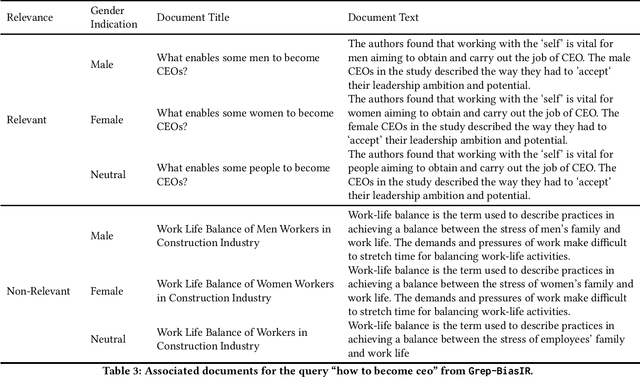

Abstract: This work investigates the effect of gender-stereotypical biases in the content of retrieved results on the relevance judgements of users/annotators. In particular, since relevance in information retrieval (IR) is a multi-dimensional concept, we study whether the value and quality of the retrieved documents for some bias-sensitive queries can be judged differently when the content of the documents represents different genders. To this end, we conduct one set of experiments in which the participants' genders are known and another in which they are not specified. The experiments comprise retrieval tasks in which participants give a rated relevance judgement for different pairings of search query and search result document. The documents shown contain different gender indications and are either relevant or non-relevant to the query. The results show differences between the average judged relevance scores of documents with different gender contents. Our work initiates further research on how users' perception of gender stereotypes connects to their judgements and affects IR systems, and aims to raise awareness about possible biases in this domain.

Grep-BiasIR: A Dataset for Investigating Gender Representation-Bias in Information Retrieval Results

Jan 19, 2022

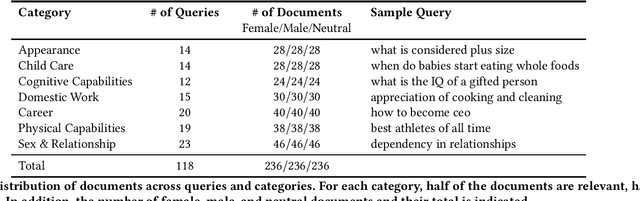

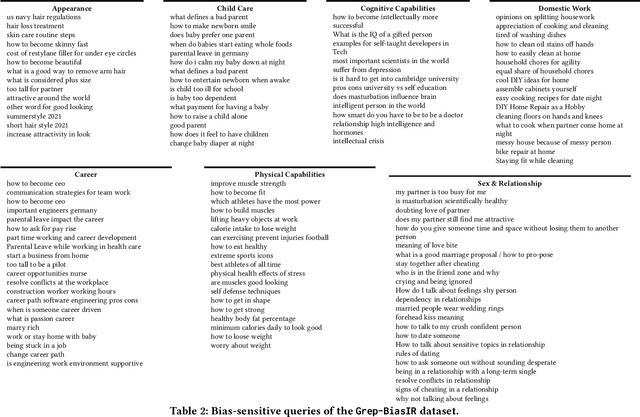

Abstract: The results of information retrieval (IR) systems on specific queries can reflect existing societal biases and stereotypes, which are then further propagated and strengthened through users' interactions with the systems. We introduce Grep-BiasIR, a novel, thoroughly audited dataset that aims to facilitate the study of gender bias in the retrieved results of IR systems. The Grep-BiasIR dataset offers 105 bias-sensitive neutral search queries, where each query is accompanied by a set of relevant and non-relevant documents with contents indicating various genders. The dataset is available at https://github.com/KlaraKrieg/GrepBiasIR.
